503_lecture1b_F14 - Computer Science


UMass Lowell Computer Science 91.503

Analysis of Algorithms

Prof. Karen Daniels

Design Patterns for Optimization Problems

Dynamic Programming

Matrix Parenthesizing

Longest Common Subsequence

Activity Selection

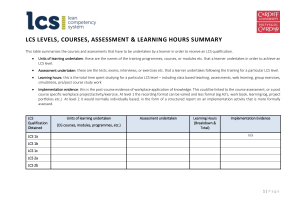

Algorithmic Paradigm Context

Criteria compared across the three paradigms:

• View problem as collection of subproblems

• “Recursive” nature

• Independent subproblems

• Number of subproblems

• Preprocessing

• Characteristic running time

• Primarily for optimization problems (find an optimal solution)

• Optimal substructure: optimal solution to problem contains within it optimal solutions to subproblems

• Greedy choice property: locally optimal produces globally optimal

• Heuristic version useful for bounding optimal value

• Subproblem solution order

Divide & Conquer

• Number of subproblems: depends on partitioning factors

• Characteristic running time: typically log function of n

Dynamic Programming

• Independent subproblems: overlapping

• Number of subproblems: typically small

• Characteristic running time: depends on number and difficulty of subproblems

• Subproblem solution order: solve subproblem(s), then make choice

Greedy Algorithm

• Independent subproblems: typically sequential dependence

• Preprocessing: typically sort

• Characteristic running time: often dominated by n log n sort

• Subproblem solution order: make choice, then solve subproblem(s)

Dynamic Programming Approach to Optimization Problems

1. Characterize structure of an optimal solution.

2. Recursively define value of an optimal solution.

3. Compute value of an optimal solution, typically in bottom-up fashion.

4. Construct an optimal solution from computed information.

(separate slides for rod cutting)

source: 91.503 textbook Cormen, et al.

Dynamic Programming

Matrix Parenthesization

Example: Matrix Parenthesization

Definitions

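Given a “chain” of n matrices: $\langle A_1, A_2, \ldots, A_n \rangle$

Compute the product $A_1 A_2 \cdots A_n$ efficiently

Multiplication order matters!

Matrix multiplication is associative, so every parenthesization gives the same product; only the cost differs

Minimize “cost” = number of scalar multiplications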
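To illustrate why multiplication order matters, here is a minimal sketch that counts scalar multiplications for the two ways of parenthesizing a chain of three matrices. The dimensions (10x100, 100x5, 5x50) and the helper name `multiply_cost` are assumptions chosen for illustration, not values taken from the slides.

```python
def multiply_cost(rows, inner, cols):
    """Scalar multiplications for a (rows x inner) times (inner x cols) product."""
    return rows * inner * cols

# Assumed example dimensions: A1 is 10x100, A2 is 100x5, A3 is 5x50.
p = [10, 100, 5, 50]

# ((A1 A2) A3): first a 10x100 by 100x5 product, then 10x5 by 5x50.
left_first = multiply_cost(p[0], p[1], p[2]) + multiply_cost(p[0], p[2], p[3])

# (A1 (A2 A3)): first a 100x5 by 5x50 product, then 10x100 by 100x50.
right_first = multiply_cost(p[1], p[2], p[3]) + multiply_cost(p[0], p[1], p[3])

print(left_first, right_first)  # 7500 75000
```

Both orders produce the same matrix, but with these dimensions one costs ten times as much as the other.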


Example: Matrix Parenthesization

Step 1: Characterizing an Optimal Solution

Observation:

Any parenthesization of $A_i A_{i+1} \cdots A_j$ (with i < j) must split it between $A_k$ and $A_{k+1}$ for some k with i ≤ k < j.

Theorem (Optimal Matrix Parenthesization):

If an optimal parenthesization of $A_i A_{i+1} \cdots A_j$ splits at k, then its parenthesization of the prefix $A_i A_{i+1} \cdots A_k$ must itself be an optimal parenthesization of that prefix. The same argument applies to the suffix $A_{k+1} \cdots A_j$.

Why?

If there existed a less costly way to parenthesize the prefix, then substituting that parenthesization would yield a less costly way to parenthesize $A_i A_{i+1} \cdots A_j$, contradicting the optimality of the original parenthesization.

Common DP proof technique: “cut-and-paste” proof by contradiction


Example: Matrix Parenthesization

Step 2: A Recursive Solution

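Each matrix $A_i$ has dimensions $p_{i-1} \times p_i$.

Recursive definition of minimum parenthesization cost:

$$
m[i,j] =
\begin{cases}
0 & \text{if } i = j \\
\min\limits_{i \le k < j} \{\, m[i,k] + m[k+1,j] + p_{i-1}\, p_k\, p_j \,\} & \text{if } i < j
\end{cases}
$$

How many distinct subproblems?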
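One way to explore the recurrence is to evaluate it by plain recursion while counting how many calls are made and how many distinct (i, j) pairs are ever requested. The sketch below does that; the function name `count_subproblems` and the dimension list are assumptions for illustration.

```python
def count_subproblems(p):
    """Evaluate the m[i, j] recurrence by plain recursion, counting work.

    p holds the dimensions: matrix A_i is p[i-1] x p[i], for i = 1..n.
    Returns (optimal cost, number of recursive calls, number of distinct (i, j)).
    """
    calls = 0
    seen = set()

    def m(i, j):
        nonlocal calls
        calls += 1
        seen.add((i, j))
        if i == j:
            return 0
        # Try every split point i <= k < j, exactly as in the recurrence.
        return min(m(i, k) + m(k + 1, j) + p[i - 1] * p[k] * p[j]
                   for k in range(i, j))

    n = len(p) - 1
    return m(1, n), calls, len(seen)

# Assumed dimensions for four matrices: 10x100, 100x5, 5x50, 50x20.
cost, calls, distinct = count_subproblems([10, 100, 5, 50, 20])
print(cost, calls, distinct)  # 11000 27 10
```

For n = 4 the recursion makes 27 calls but touches only 10 distinct pairs with i ≤ j, which is n(n+1)/2. The repeated calls are the overlapping subproblems that a table avoids recomputing.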


Example: Matrix Parenthesization

Step 3: Computing Optimal Costs

[Figure: m and s tables for an example matrix chain; values legible here are 2,500, 2,625, 1,000 and 0]

s[i, j]: value of k that achieves the optimal cost in computing m[i, j]
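The bottom-up computation of both tables can be sketched as follows. This is a minimal Python rendering of the usual table-filling order (shorter chains first), not necessarily the exact procedure shown on the slide; the name `matrix_chain_order` and the example dimensions are assumptions.

```python
import math

def matrix_chain_order(p):
    """Bottom-up computation of the m (cost) and s (split) tables.

    p holds the dimensions: matrix A_i is p[i-1] x p[i], for i = 1..n.
    Tables are 1-indexed to match the slides; row and column 0 are unused.
    """
    n = len(p) - 1
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):          # chain length, shortest first
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):           # try every split i <= k < j
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j] = q
                    s[i][j] = k             # remember the best split
    return m, s

m, s = matrix_chain_order([10, 100, 5, 50])  # assumed dimensions
print(m[1][3], s[1][3])  # 7500 2
```

The three nested loops give Θ(n³) time, and the two tables use Θ(n²) space.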


Example: Matrix Parenthesization

Step 4: Constructing an Optimal Solution

PRINT-OPTIMAL-PARENS(s, i, j)
    if i == j
        print “A”i
    else print “(”
        PRINT-OPTIMAL-PARENS(s, i, s[i, j])
        PRINT-OPTIMAL-PARENS(s, s[i, j] + 1, j)
        print “)”
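The same procedure can be sketched in Python, returning a string instead of printing piece by piece. The split table below is filled in by hand for an assumed chain of three matrices (10x100, 100x5, 5x50); the name `optimal_parens` is also an assumption.

```python
def optimal_parens(s, i, j):
    """Return the optimal parenthesization of A_i..A_j as a string."""
    if i == j:
        return f"A{i}"
    k = s[i][j]  # optimal split point recorded for the chain A_i..A_j
    return "(" + optimal_parens(s, i, k) + optimal_parens(s, k + 1, j) + ")"

# Hand-filled split table for the assumed three-matrix chain
# (1-indexed; row and column 0 are unused).
s = [
    [0, 0, 0, 0],
    [0, 0, 1, 2],
    [0, 0, 0, 2],
    [0, 0, 0, 0],
]
print(optimal_parens(s, 1, 3))  # ((A1A2)A3)
```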


Example: Matrix Parenthesization

Memoization

• Provides Dynamic Programming efficiency

• But with top-down strategy

  • Use recursion

  • Fill in m table “on demand”

  • (can modify to fill in s table)

MEMOIZED-MATRIX-CHAIN(p)
    n = p.length - 1
    let m[1…n, 1…n] be a new table
    for i = 1 to n
        for j = i to n
            m[i, j] = ∞
    return LOOKUP-CHAIN(m, p, 1, n)

LOOKUP-CHAIN(m, p, i, j)
    if m[i, j] < ∞
        return m[i, j]
    if i == j
        m[i, j] = 0
    else for k = i to j - 1
        q = LOOKUP-CHAIN(m, p, i, k) + LOOKUP-CHAIN(m, p, k+1, j) + p_{i-1} p_k p_j
        if q < m[i, j]
            m[i, j] = q
    return m[i, j]
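A minimal Python sketch of the memoized, top-down approach follows. It uses `math.inf` as the "not yet computed" marker and a nested function in place of a separate LOOKUP-CHAIN; those choices and the example dimensions are assumptions.

```python
import math

def memoized_matrix_chain(p):
    """Top-down matrix-chain cost with a memo table m, filled on demand.

    p holds the dimensions: matrix A_i is p[i-1] x p[i], for i = 1..n.
    """
    n = len(p) - 1
    # math.inf marks "not yet computed"; row and column 0 are unused.
    m = [[math.inf] * (n + 1) for _ in range(n + 1)]

    def lookup_chain(i, j):
        if m[i][j] < math.inf:          # already computed: reuse it
            return m[i][j]
        if i == j:
            m[i][j] = 0
        else:
            for k in range(i, j):
                q = (lookup_chain(i, k) + lookup_chain(k + 1, j)
                     + p[i - 1] * p[k] * p[j])
                if q < m[i][j]:
                    m[i][j] = q
        return m[i][j]

    return lookup_chain(1, n)

print(memoized_matrix_chain([10, 100, 5, 50]))  # 7500
```

Each entry m[i, j] is filled at most once, so the work matches the bottom-up version while keeping the shape of the recursive definition.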

Dynamic Programming

Longest Common Subsequence

Example: Longest Common

Subsequence (LCS): Motivation

• Strand of DNA: string over finite set {A, C, G, T}

  • each element of set is a base: adenine, guanine, cytosine or thymine

• Compare DNA similarities

  S1 = ACCGGTCGAGTGCGCGGAAGCCGGCCGAA

  S2 = GTCGTTCGGAATGCCGTTGCTCTGTAAA

• One measure of similarity:

  • find the longest string S3 whose bases also appear, in the same order but not necessarily consecutively, in both S1 and S2

  • S3 = GTCGTCGGAAGCCGGCCGAA


Example: LCS

Definitions

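• Sequence $Z = \langle z_1, z_2, \ldots, z_k \rangle$ is a subsequence of $X = \langle x_1, x_2, \ldots, x_m \rangle$ if there exists a strictly increasing sequence $\langle i_1, i_2, \ldots, i_k \rangle$ of indices of X such that $x_{i_j} = z_j$ for all j = 1, 2, …, k.

  • example (as in the textbook): $\langle B, C, D, B \rangle$ is a subsequence of $\langle A, B, C, B, D, A, B \rangle$ with index sequence $\langle 2, 3, 5, 7 \rangle$

• Z is a common subsequence of X and Y if Z is a subsequence of both X and Y

  • example (as in the textbook): for $X = \langle A, B, C, B, D, A, B \rangle$ and $Y = \langle B, D, C, A, B, A \rangle$, $\langle B, C, A \rangle$ is a common subsequence but not a longest common subsequence. Longest?

Longest Common Subsequence Problem: Given 2 sequences X, Y, find a maximum-length common subsequence Z.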
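The definitions above can be checked mechanically. The sketch below tests the subsequence and common-subsequence properties for strings; the function names and the example strings are assumptions for illustration.

```python
def is_subsequence(z, x):
    """True if z can be obtained from x by deleting zero or more elements."""
    remaining = iter(x)
    # 'symbol in remaining' advances the iterator past the first match,
    # so the matched positions in x are strictly increasing.
    return all(symbol in remaining for symbol in z)

def is_common_subsequence(z, x, y):
    """True if z is a subsequence of both x and y."""
    return is_subsequence(z, x) and is_subsequence(z, y)

x, y = "ABCBDAB", "BDCABA"   # assumed example sequences
print(is_subsequence("BCDB", x))              # True
print(is_common_subsequence("BCA", x, y))     # True
print(is_common_subsequence("BCBA", x, y))    # True
print(is_subsequence("AD", y))                # False
```

Checking a candidate is easy; the optimization problem is finding a longest one without enumerating all subsequences of X.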

Example: LCS

Step 1: Characterize an LCS

THM 15.1: Optimal LCS Substructure

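Given sequences: $X = \langle x_1, x_2, \ldots, x_m \rangle$, $Y = \langle y_1, y_2, \ldots, y_n \rangle$

For any LCS $Z = \langle z_1, z_2, \ldots, z_k \rangle$ of X and Y (using prefix notation, e.g. $X_i = \langle x_1, \ldots, x_i \rangle$):

1. if $x_m = y_n$, then $z_k = x_m = y_n$ and $Z_{k-1}$ is an LCS of $X_{m-1}$ and $Y_{n-1}$

2. if $x_m \ne y_n$, then $z_k \ne x_m$ implies Z is an LCS of $X_{m-1}$ and Y

3. if $x_m \ne y_n$, then $z_k \ne y_n$ implies Z is an LCS of X and $Y_{n-1}$

PROOF: based on producing contradictions

1. a) Suppose $z_k \ne x_m$. Appending $x_m = y_n$ to Z gives a common subsequence of length k+1, which contradicts longest nature of Z.

   b) To establish longest nature of $Z_{k-1}$, suppose a common subsequence W of $X_{m-1}$ and $Y_{n-1}$ has length > k-1. Appending $x_m = y_n$ to W yields a common subsequence of X and Y of length > k, a contradiction.

2. If $z_k \ne x_m$, then Z is a common subsequence of $X_{m-1}$ and Y. To establish optimality (longest nature): a common subsequence W of $X_{m-1}$ and Y of length > k would also be a common subsequence of $X_m$ and Y, contradicting longest nature of Z.

3. Similar to proof of (2).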


Example: LCS

Step 2: A Recursive Solution

Implications of Theorem 15.1:

Is $x_m = y_n$?

• yes: find LCS($X_{m-1}$, $Y_{n-1}$); then LCS1(X, Y) = LCS($X_{m-1}$, $Y_{n-1}$) + $x_m$

• no: find LCS($X_{m-1}$, Y) and LCS(X, $Y_{n-1}$); then LCS2(X, Y) = max(LCS($X_{m-1}$, Y), LCS(X, $Y_{n-1}$)), i.e. the longer of the two

An LCS of 2 sequences contains, as a prefix, an LCS of prefixes of the sequences.

Example: LCS

Step 2: A Recursive Solution (continued)


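• Overlapping subproblem structure:

  LCS(X, Y) may require LCS($X_{m-1}$, Y) and LCS(X, $Y_{n-1}$), and each of those may in turn require LCS($X_{m-1}$, $Y_{n-1}$).

  Θ(mn) distinct subproblems

• Recurrence for length of optimal solution:

$$
c[i,j] =
\begin{cases}
0 & \text{if } i = 0 \text{ or } j = 0 \\
c[i-1,j-1] + 1 & \text{if } i,j > 0 \text{ and } x_i = y_j \\
\max(c[i,j-1],\, c[i-1,j]) & \text{if } i,j > 0 \text{ and } x_i \ne y_j
\end{cases}
$$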

Conditions of problem can exclude some subproblems!

Example: LCS

Step 3: Compute Length of an LCS

What is the asymptotic worst-case time complexity?

[Figure: c table with entries 0 through 4 legible; cell annotations represent the b table]
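A minimal sketch of filling the c table bottom-up, row by row, is given below. It keeps only the c table (no b table); the function name `lcs_length` and the example strings are assumptions.

```python
def lcs_length(x, y):
    """Fill the c table: c[i][j] is the LCS length of x[:i] and y[:j]."""
    m, n = len(x), len(y)
    c = [[0] * (n + 1) for _ in range(m + 1)]   # row 0 and column 0 stay 0
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if x[i - 1] == y[j - 1]:            # x_i == y_j (strings are 0-indexed)
                c[i][j] = c[i - 1][j - 1] + 1
            else:
                c[i][j] = max(c[i][j - 1], c[i - 1][j])
    return c

c = lcs_length("ABCBDAB", "BDCABA")   # assumed example sequences
print(c[-1][-1])  # 4
```

Each of the (m+1)(n+1) entries is computed in constant time, so this sketch runs in Θ(mn) time and space.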


Example: LCS

Step 4: Construct an LCS
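One way to construct an LCS is to walk back through the c table from c[m, n]. The sketch below re-derives each step from neighbouring c entries instead of storing a separate b table, which is a substitution made here for brevity; the function name `lcs` and the example strings are assumptions.

```python
def lcs(x, y):
    """Return one LCS of x and y by walking back through the c table."""
    m, n = len(x), len(y)
    c = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if x[i - 1] == y[j - 1]:
                c[i][j] = c[i - 1][j - 1] + 1
            else:
                c[i][j] = max(c[i - 1][j], c[i][j - 1])

    # Trace back from c[m][n]. A matching pair contributes one symbol and
    # moves diagonally; otherwise move toward the larger neighbour
    # (ties go up, i.e. to row i - 1).
    out = []
    i, j = m, n
    while i > 0 and j > 0:
        if x[i - 1] == y[j - 1]:
            out.append(x[i - 1])
            i, j = i - 1, j - 1
        elif c[i - 1][j] >= c[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(out))

print(lcs("ABCBDAB", "BDCABA"))  # BCBA
```

The traceback takes O(m + n) steps, since every step decreases i, j, or both. Other tie-breaking rules can return a different LCS of the same length.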


Dynamic Programming

…leading to a Greedy Algorithm…

Activity Selection

Activity Selection Optimization Problem

• Problem Instance:

  • Set S = {a1, a2, ..., an} of n activities

  • Each activity i has:

    • start time: $s_i$

    • finish time: $f_i$

    • $s_i \le f_i$

  • Activities require exclusive use of a common resource.

  • Activities i, j are compatible iff non-overlapping: the half-open intervals $[s_i, f_i)$ and $[s_j, f_j)$ do not intersect

• Objective:

  • select a maximum-sized set of mutually compatible activities
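The compatibility condition can be sketched as a small predicate on half-open intervals. The representation of an activity as a `(start, finish)` tuple, the function names, and the assumption that each start is strictly before its finish are choices made here for illustration.

```python
def compatible(a, b):
    """Activities are (start, finish) pairs occupying [start, finish).

    Assuming start < finish for both, they are compatible exactly when
    one finishes no later than the other starts.
    """
    (s_i, f_i), (s_j, f_j) = a, b
    return f_i <= s_j or f_j <= s_i

def mutually_compatible(activities):
    """True if every pair in the collection is compatible."""
    acts = list(activities)
    return all(compatible(acts[p], acts[q])
               for p in range(len(acts)) for q in range(p + 1, len(acts)))

print(compatible((1, 4), (4, 7)))   # True: [1,4) and [4,7) only touch
print(compatible((1, 4), (3, 5)))   # False: they overlap on [3,4)
print(mutually_compatible([(1, 4), (5, 7), (8, 11)]))  # True
```

Because the intervals are half-open, an activity may start at the exact time another finishes.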

source: 91.404 textbook Cormen, et al.

Activity Selection

[Figure: “Activity Time Duration” chart with an “Activity Number” axis; tick labels 1 to 16 and 1 to 8]

What is an answer in this case?

Activity Selection

$S_{ij} = \{ a_k \in S : f_i \le s_k < f_k \le s_j \}$

Solution to $S_{ij}$ including $a_k$ produces 2 subproblems:

1) $S_{ik}$ (start after $a_i$ finishes; finish before $a_k$ starts)

2) $S_{kj}$ (start after $a_k$ finishes; finish before $a_j$ starts)

c[i,j] = size of maximum-size subset of mutually compatible activities in $S_{ij}$.

$$
c[i,j] =
\begin{cases}
0 & \text{if } S_{ij} = \emptyset \\
\max\limits_{i < k < j;\; a_k \in S_{ij}} \{\, c[i,k] + c[k,j] + 1 \,\} & \text{if } S_{ij} \ne \emptyset
\end{cases}
$$
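The recurrence for c[i, j] can be evaluated with a table, as in the sketch below. It assumes the activities are sorted by finish time and adds two sentinel activities, a_0 finishing at time 0 and a_{n+1} starting at infinity, so that S_{0,n+1} is the whole input; the sentinels, the function name, and the sample data are assumptions rather than content from the slides.

```python
import math

def max_compatible_activities(activities):
    """Size of a largest mutually compatible subset, via the c[i, j] recurrence.

    activities: list of (start, finish) pairs with 0 <= start < finish.
    """
    acts = sorted(activities, key=lambda a: a[1])          # sort by finish time
    # Sentinels (an assumption): a_0 finishes at 0, a_{n+1} starts at infinity.
    acts = [(0, 0)] + acts + [(math.inf, math.inf)]
    n = len(acts) - 2
    c = [[0] * (n + 2) for _ in range(n + 2)]
    for gap in range(2, n + 2):                            # gap = j - i
        for i in range(0, n + 2 - gap):
            j = i + gap
            f_i, s_j = acts[i][1], acts[j][0]
            for k in range(i + 1, j):
                s_k, f_k = acts[k]
                if f_i <= s_k and f_k <= s_j:              # a_k belongs to S_ij
                    c[i][j] = max(c[i][j], c[i][k] + c[k][j] + 1)
    return c[0][n + 1]

# Assumed sample instance of 11 activities as (start, finish) pairs.
sample = [(1, 4), (3, 5), (0, 6), (5, 7), (3, 9), (5, 9),
          (6, 10), (8, 11), (8, 12), (2, 14), (12, 16)]
print(max_compatible_activities(sample))  # 4
```

This table-based version takes Θ(n³) time. The greedy choice property is what later allows the same answer to be found without examining every k.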
