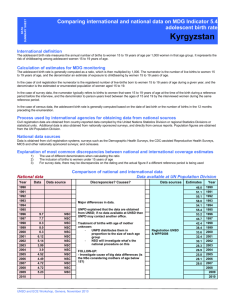

slides

advertisement

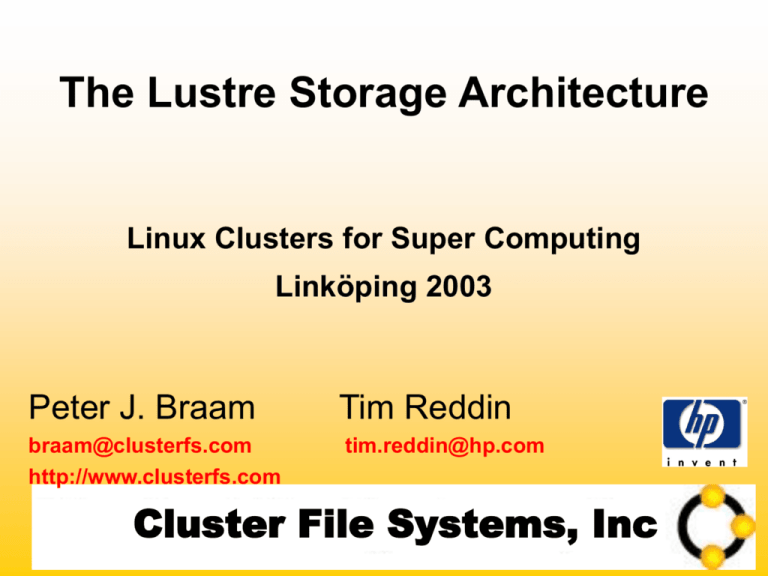

The Lustre Storage Architecture

Linux Clusters for Super Computing

Linköping 2003

Peter J. Braam

Tim Reddin

braam@clusterfs.com

http://www.clusterfs.com

tim.reddin@hp.com

Cluster File Systems, Inc

6/10/2001

1

Topics

History of project

High level picture

Networking

Devices and fundamental API’s

File I/O

Metadata & recovery

Project status

Cluster File Systems, Inc

2 - NSC 2003

Lustre’s History

3 - NSC 2003

Project history

1999 CMU & Seagate

4 - NSC 2003

Worked with Seagate for one year

Storage management, clustering

Built prototypes, much design

Much survives today

2000-2002 File system challenge

First put forward Sep 1999 Santa Fe

New architecture for National Labs

Characteristics:

100’s GB’s/sec of I/O throughput

trillions of files

10,000’s of nodes

Petabytes

From start Garth & Peter in the running

5 - NSC 2003

2002 – 2003 fast lane

3 year ASCI Path Forward contract

with HP and Intel

MCR & ALC, 2x 1000 node Linux Clusters

PNNL HP IA64, 1000 node Linux cluster

Red Storm, Sandia (8000 nodes, Cray)

Lustre Lite 1.0

Many partnerships (HP, Dell, DDN, …)

6 - NSC 2003

2003 – Production, perfomance

Spring and summer

Performance

LLNL MCR from no, to partial, to full time use

PNNL similar

Stability much improved

Summer 2003: I/O problems tackled

Metadata much faster

Dec/Jan

7 - NSC 2003

Lustre 1.0

High level picture

8 - NSC 2003

Lustre Systems – Major Components

Clients

OST

Object storage targets

Handle (stripes of, references to) file data

MDS

Have access to file system

Typical role: compute server

Metadata request transaction engine.

Also: LDAP, Kerberos, routers etc.

9 - NSC 2003

OST 1

MDS 1

(active)

MDS 2

(failover)

Linux

OST

Servers

with disk

arrays

QSW Net

OST 2

SAN

OST 3

Lustre Clients

(1,000 Lustre Lite)

Up to 10,000’s

OST 4

GigE

OST 5

3rd party OST

Appliances

OST 6

Lustre Object Storage

Targets (OST)

10 - NSC 2003

OST 7

LDAP Server

configuration information,

network connection details,

& security management

Clients

directory operations,

meta-data, &

concurrency

Meta-data Server

(MDS)

11 - NSC 2003

file I/O & file

locking

recovery, file status, &

file creation

Object Storage Targets

(OST)

Networking

12 - NSC 2003

Lustre Networking

Currently runs over:

Beta

Myrinet, SCI

Under development

TCP

Quadrics Elan 3 & 4

Lustre can route & can use heterogeneous nets

SAN (FC/iSCSI), I/B

Planned:

13 - NSC 2003

SCTP, some special NUMA and other nets

Lustre Network Stack - Portals

0-copy marshalling libraries,

Service framework,

Client request dispatch,

Connection & address naming,

Generic recovery infrastructure

Move small & large buffers,

Remote DMA handling,

Generate events

Lustre Request Processing

NIO API

Sandia’s API,

CFS improved impl.

Portal Library

Portal NAL’s

Device Library (Elan,Myrinet,TCP,...)

14 - NSC 2003

Network Abstraction Layer for

TCP, QSW, etc. Small & hard

Includes routing api.

Devices and API’s

15 - NSC 2003

Lustre Devices & API’s

Lustre has numerous driver modules

One API - very different implementations

Driver binds to named device

Stacking devices is key

Generalized “object devices”

Drivers currently export several API’s

16 - NSC 2003

Infrastructure - a mandatory API

Object Storage

Metadata Handling

Locking

Recovery

Lustre Clients & API’s

Lustre File System (Linux)

or Lustre Library (Win, Unix, Micro Kernels)

Data Object & Lock

Metadata & Lock

Logical Object Volume

(LOV driver)

OSC1

17 - NSC 2003

…

OSCn

Clustered MD

driver

MDC

…

MDC

Object Storage Api

Objects are (usually) unnamed files

Improves on the block device api

create, destroy, setattr, getattr, read, write

OBD driver does block/extent allocation

Implementation:

18 - NSC 2003

Linux drivers, using a file system backend

Bringing it all together

Lustre Client File

System

System &

Parallel File I/O,

File Locking

Portal NAL’s

Device (Elan,TCP,…)

OSC’s

MDS

Server

Lock

Server

Lock

Client

Ext3, Reiser, XFS, … FS

Recovery

Recovery,

File Status,

File Creation

Load Balancing

Fibre Channel

19 - NSC 2003

MDS

Networking

Recovery

Fibre Channel

Lock

Client

Directory

Metadata &

Concurrency

Networking

Ext3, Reiser, XFS,… FS

MDC

Networking

OST

Object-Based Disk Lock

Server (OBD server Server

Metadata

WB cache

Recovery

Request Processing

NIO API

Portal Library

File I/O

20 - NSC 2003

File I/O – Write Operation

Open file on meta-data server

Get information on all objects that are part of file:

Objects id’s

What storage controllers (OST)

What part of the file (offset)

Striping pattern

Create LOV, OSC drivers

Use connection to OST

21 - NSC 2003

Object writes to OST

No MDS involvement at all

Lustre Client

File system

LOV

OSC

1

OSC

2

Write (obj 1)

File open request

MDS

File meta-data

Inode A {(O1,obj1),(O3, obj2)}

Write (obj 2)

OST 1

22 - NSC 2003

MDC

Meta-data Server

OST 2

OST 3

I/O bandwidth

100’s GB/sec => saturate many100’s OSTs

OST’s:

Do ext3 extent allocation, non-caching direct I/O

Lock management spread over cluster

Achieve 90-95% of network throughput

23 - NSC 2003

Single client, single thread Elan3: W 269MB/sec

OST’s handle up to 260MB/sec

W/O extent code, on 2 way 2.4GHz Xeon

Metadata

24 - NSC 2003

Intent locks & Write Back caching

Clients – MDS: protocol adaptation

Low concurrency - write back caching

Client in memory updates

delayed replay to MDS

High concurrency (mostly merged in 2.6)

25 - NSC 2003

Single network request per transaction

No lock revocations to clients

Intent based lock includes complete request

Client

Client

lookup

Network

Network

File

FileServer

Server

lookup

lookup

lookup

mkdir

mkdir

mkdir

create

dir

mkdir

a)a)Conventional

Conventionalmkdir

mkdir

Lustre

Client

Network

Meta-data

Server

lookup

lookup intent mkdir

lock module

lookup

mkdir

exercise

the intent

Lustre_mkdir

26 - NSC 2003

Mds_mkdir

b) Lustre mkdir

Lustre 1.0

Only has high concurrency model

Aggregate throughput (1,000 clients):

Single client:

Achieve ~5000 file creations (open/close) /sec

Achieve ~7800 stat’s in 10 x1M file directories

Around 1500 creations or stat’s /sec

Handling 10M file directories is effortless

Many changes to ext3 (all merged in 2.6)

27 - NSC 2003

Metadata Future

Lustre 2.0 – 2004

Metadata clustering

Common operations will parallelize

100% WB caching in memory or on disk

28 - NSC 2003

Like AFS

Recovery

30 - NSC 2003

Recovery approach

Keep it simple!

Based on failover circles:

At HP we use failover pairs

Use existing failover software

Left working neighbor is failover node for you

Simplify storage connectivity

I/O failure triggers

31 - NSC 2003

Peer node serves failed OST

Retry from client routed to new OST node

OST Server – redundant pair

OST1

OST 2

FC Switch

FC Switch

C1

32 - NSC 2003

C2

C1

C2

Configuration

33 - NSC 2003

Lustre 1.0

Good tools to build configuration

Configuration is recorded on MDS

Or on dedicated management server

Configuration can be changed,

Clients auto configure

1.0 requires downtime

mount –t lustre –o … mds://fileset/sub/dir /mnt/pt

SNMP support

34 - NSC 2003

Futures

35 - NSC 2003

Advanced Management

Snapshots

Global namespace

Combine best of AFS & autofs4

HSM, hot migration

All features you might expect

Driven by customer demand (we plan XDSM)

Online 0-downtime re-configuration

36 - NSC 2003

Part of Lustre 2.0

Security

Authentication

POSIX style authorization

NASD style OST authorization

Refinement: use OST ACL’s and cookies

File crypting with group key service

38 - NSC 2003

STK secure file system

Project status

43 - NSC 2003

Lustre Feature Roadmap

Lustre (Lite) 1.0

Lustre 2.0 (2.6)

Lustre 3.0

2003

2004

2005

Failover MDS

Metadata cluster

Metadata cluster

(Linux 2.4 & 2.6)

Basic Unix security Basic Unix security Advanced Security

File I/O very fast

(~100’s OST’s)

Collaborative read

cache

Storage

management

Intent based

scalable metadata

POSIX compliant

Write back

metadata

Parallel I/O

Load balanced MD

44 - NSC 2003

Global namespace

Cluster File Systems, Inc.

45 - NSC 2003

Cluster File Systems

Small service company: 20-30 people

Extremely specialized and extreme expertise

Software development & service (95% Lustre)

contract work for Government labs

OSS but defense contracts

we only do file systems and storage

Investments - not needed. Profitable.

Partners: HP, Dell, DDN, Cray

46 - NSC 2003

Lustre – conclusions

Great vehicle for advanced storage software

Things are done differently

Protocols & design from Coda & InterMezzo

Stacking & DB recovery theory applied

Leverage existing components

Initial signs promising

47 - NSC 2003

HP & Lustre

Two projects

ASCI PathForward – Hendrix

Lustre Storage product

48 - NSC 2003

Field trial in Q1 of 04

Questions?

49 - NSC 2003