Question Answering

Tutorial

Based on:

John M. Prager

IBM T.J. Watson Research Center

jprager@us.ibm.com

Taken from (with deletions and adaptations):

RANLP 2003 tutorial

http://lml.bas.bg/ranlp2003/

Tutorials link, Prager tutorial

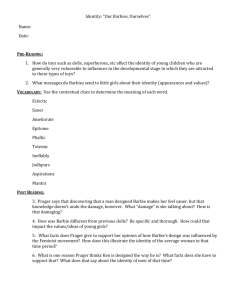

Part I - Anatomy of QA

A Brief History of QA

Terminology

The Essence of Text-based QA

Basic Structure of a QA System

NE Recognition and Answer Types

Answer Extraction

John M. Prager

RANLP 2003 Tutorial on Question Answering

A Brief History of QA

NLP front-ends to Expert Systems

SHRDLU (Winograd, 1972)

User manipulated, and asked questions about, blocks world

First real demo combining syntax, semantics, and reasoning

NLP front-ends to Databases

LUNAR (Woods, 1973)

User asked questions about moon rocks

Used ATNs and procedural semantics

LIFER/LADDER (Hendrix et al. 1977)

User asked questions about U.S. Navy ships

Used semantic grammar; domain information built into grammar

NLP + logic

CHAT-80 (Warren & Pereira, 1982)

NLP query system in Prolog, about world geography

Definite Clause Grammars

“Modern Era of QA” – answers from free text

MURAX (Kupiec, 1993)

NLP front-end to an encyclopaedia

IR + NLP

TREC-8 (1999) (Voorhees & Tice, 2000)

Today – all of the above


Some “factoid” questions from TREC-8 and TREC-9

9: How far is Yaroslav from Moscow?

15: When was London's Docklands Light Railway constructed?

22: When did the Jurassic Period end?

29: What is the brightest star visible from Earth?

* 30: What are the Valdez Principles?

73: Where is the Taj Mahal?

197: What did Richard Feynman say upon hearing he would receive the Nobel Prize in Physics?

198: How did Socrates die?

199: How tall is the Matterhorn?

200: How tall is the replica of the Matterhorn at Disneyland?

* 227: Where does dew come from?

269: Who was Picasso?

298: What is California's state tree?


Terminology

Question Type

Answer Type

Question Topic

Candidate Passage

Candidate Answer

Authority File/List


Terminology – Question Type

Question Type: an idiomatic categorization of questions for purposes of distinguishing between different processing strategies and/or answer formats

E.g. TREC 2003:

FACTOID: “How far is it from Earth to Mars?”

LIST: “List the names of chewing gums”

DEFINITION: “Who is Vlad the Impaler?”

Other possibilities:

RELATIONSHIP: “What is the connection between Valentina Tereshkova and Sally Ride?”

SUPERLATIVE: “What is the largest city on Earth?”

YES-NO: “Is Saddam Hussein alive?”

OPINION: “What do most Americans think of gun control?”

CAUSE&EFFECT: “Why did Iraq invade Kuwait?”

…


Terminology – Answer Type

Answer Type: the class of object (or rhetorical type of sentence) sought by the question. E.g.

PERSON (from “Who …”)

PLACE (from “Where …”)

DATE (from “When …”)

NUMBER (from “How many …”)

…

but also

EXPLANATION (from “Why …”)

METHOD (from “How …”)

…

Answer types are usually tied intimately to the classes recognized by the system’s Named Entity Recognizer.
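As a rough illustration of how a question analyzer might derive the answer types listed above from the question's opening words, here is a minimal rule-based sketch. The rule table `ANSWER_TYPE_RULES` and the function `answer_type` are hypothetical; real systems use far richer rules or trained classifiers.

```python
# Hypothetical rule table mapping question openings to answer types.
# Order matters: "how many" must be tried before the bare "how".
ANSWER_TYPE_RULES = [
    ("how many", "NUMBER"),
    ("who", "PERSON"),
    ("where", "PLACE"),
    ("when", "DATE"),
    ("why", "EXPLANATION"),
    ("how", "METHOD"),
]


def answer_type(question):
    """Return the answer type for a question, or None if no rule applies."""
    q = question.lower().strip()
    for prefix, atype in ANSWER_TYPE_RULES:
        if q.startswith(prefix + " "):
            return atype
    return None  # e.g. "What ..." questions need further analysis


print(answer_type("Who was Picasso?"))                  # PERSON
print(answer_type("How many moons does Mars have?"))    # NUMBER
print(answer_type("What is California's state tree?"))  # None
```

Note that the bare "how" rule would wrongly send "How tall is the Matterhorn?" to METHOD; a realistic rule set needs many more patterns (e.g. "How tall" asks for a measurement).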


Broader Answer Types

E.g.

“In what state is the Grand Canyon?”

“What is the population of Bulgaria?”

“What colour is a pomegranate?”


Terminology – Question Topic

Question Topic: the object (person, place, …) or event that the question is about.

The question might well be about a property of the topic, which will be the question focus.

E.g. “What is the height of Mt. Everest?”

Mt. Everest is the topic (and height is the focus)

The topic has to be mentioned in the answer passage


Terminology – Candidate Passage

Candidate Passage: a text passage (anything from a single sentence to a whole document) retrieved by a search engine in response to a question.

A candidate passage is expected to contain candidate answers.

Candidate passages will usually have associated scores from the search engine.


Terminology – Candidate Answer

Candidate Answer: in the context of a question, a small quantity of text (anything from a single word to a sentence or bigger, but usually a noun phrase) that is of the same type as the Answer Type.

In some systems, the type match may be approximate.

Candidate answers are found in candidate passages.

E.g.

50

Queen Elizabeth II

September 8, 2003

by baking a mixture of flour and water


Terminology – Authority List

Authority List (or File): a collection of instances of a class of interest (typically an answer type), used to test a term for class membership.

Instances should be derived from an authoritative source and be as close to complete as possible.

Ideally, the class is small and easily enumerated, and its members have a limited number of lexical forms.

Good:

Days of week

Planets

Elements

Good statistically, but difficult to get 100% recall:

Animals

Plants

Colours

Problematic:

People

Organizations

Impossible:

All numeric quantities

Explanations and other clausal quantities
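A minimal sketch of an authority-list membership test for two of the "good" classes above, assuming a small hand-built list per answer type plus a few variant lexical forms. The names `AUTHORITY_LISTS`, `VARIANTS`, `is_member`, and `filter_candidates` are hypothetical; filtering candidate answers by a list is one use the tutorial mentions.

```python
# Hypothetical authority lists for two small, closed classes.
AUTHORITY_LISTS = {
    "DAY_OF_WEEK": {"monday", "tuesday", "wednesday", "thursday",
                    "friday", "saturday", "sunday"},
    "PLANET": {"mercury", "venus", "earth", "mars", "jupiter",
               "saturn", "uranus", "neptune"},
}

# A few alternative lexical forms mapped to their canonical member.
VARIANTS = {"mon": "monday", "tue": "tuesday", "tues": "tuesday",
            "wed": "wednesday", "thu": "thursday", "thurs": "thursday",
            "fri": "friday"}


def is_member(term, answer_type):
    """Test a term for membership in the authority list of answer_type."""
    word = term.lower().strip(" .")
    word = VARIANTS.get(word, word)
    return word in AUTHORITY_LISTS.get(answer_type, set())


def filter_candidates(candidates, answer_type):
    """Keep only candidate answers that the authority list confirms."""
    return [c for c in candidates if is_member(c, answer_type)]


print(filter_candidates(["Mars", "Titan", "Venus", "the Moon"], "PLANET"))
# ['Mars', 'Venus']
```

The variant table is what keeps such lists practical: the fewer lexical forms a class has, the closer a list can get to 100% recall.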


Essence of Text-based QA

Need to find a passage that answers the question. Steps:

Find a candidate passage (search)

Check that the semantics of the passage and the question match

Extract the answer

Extract the answer


Basic Structure of a QA System

See for example Abney et al., 2000; Clarke et al., 2001; Harabagiu et al.; Hovy et al., 2001; Prager et al., 2000

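[Figure: basic QA system architecture. Question → Question Analysis, which produces a Query and an Answer Type; the Query drives a Search over a Corpus or the Web, returning Documents/passages; Answer Extraction uses these passages and the Answer Type to produce the Answer.]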

Essence of Text-based QA

Search

For a very small corpus, every passage can be considered a candidate, but this is not interesting.

Need to perform a search to locate good passages.

If the search is too broad, not much has been achieved, and there is lots of noise.

If the search is too narrow, good passages will be missed.

Two broad possibilities:

Optimize search

Use iteration
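One way to realize the "use iteration" option is to start with a narrow Boolean AND query and relax it until enough passages come back. The sketch below assumes documents are token sets, uses a common smoothed IDF formula, and drops the lowest-IDF (least informative) keyword at each step; the function names and the `min_hits` parameter are hypothetical, not a description of any particular system.

```python
import math


def idf(term, docs):
    """Smoothed inverse document frequency of a term over token-set documents."""
    df = sum(1 for d in docs if term in d)
    return math.log((len(docs) + 1) / (df + 1)) + 1.0


def iterative_search(keywords, docs, min_hits=2):
    """Boolean AND search, relaxed one keyword at a time.

    Starts narrow (every keyword required). While fewer than min_hits
    documents match, drops the keyword with the lowest IDF, i.e. the least
    informative one, and searches again.
    """
    query = sorted(keywords, key=lambda t: idf(t, docs), reverse=True)
    while query:
        hits = [i for i, d in enumerate(docs) if all(t in d for t in query)]
        if len(hits) >= min_hits:
            return query, hits
        query = query[:-1]  # relax: drop the lowest-IDF keyword
    return [], []


docs = [set(s.split()) for s in [
    "in 1971 amtrak began operations",
    "amtrak trains began running",
    "airfone began operations in 1984",
    "amtrak began operations in 1971",
]]
print(iterative_search(["amtrak", "began", "operations"], docs, min_hits=3))
# (['amtrak'], [0, 1, 3])
```

Dropping low-IDF terms first broadens the query in small steps; the same IDF values could instead be used as weights in a ranked (non-Boolean) query, which is the "optimize search" alternative.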


Essence of Text-based QA

Match

Need to test whether the semantics of the passage match the semantics of the question

Approaches:

Count question words present in passage

Score based on proximity

Score based on syntactic relationships

Prove match
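The first two approaches (counting question words, scoring by proximity) can be sketched in a few lines. Here proximity is measured as the shortest token window covering all matched question terms, and the two are combined multiplicatively; both the window measure and the combination are assumptions for illustration, and the function names are hypothetical.

```python
def term_overlap(question_terms, passage_tokens):
    """Approach 1: fraction of (distinct) question terms present in the passage."""
    q = set(question_terms)
    return len(q & set(passage_tokens)) / len(q)


def min_span(question_terms, passage_tokens):
    """Approach 2 helper: length of the shortest window of passage tokens that
    contains every question term occurring in the passage (None if none do)."""
    wanted = set(question_terms) & set(passage_tokens)
    if not wanted:
        return None
    counts, left, best = {}, 0, None
    for right, tok in enumerate(passage_tokens):
        if tok in wanted:
            counts[tok] = counts.get(tok, 0) + 1
        while len(counts) == len(wanted):  # window [left, right] covers all terms
            span = right - left + 1
            best = span if best is None else min(best, span)
            ltok = passage_tokens[left]
            if ltok in counts:
                counts[ltok] -= 1
                if counts[ltok] == 0:
                    del counts[ltok]
            left += 1
    return best


def match_score(question_terms, passage_tokens):
    """Overlap scaled by proximity (equals the overlap when matched terms are adjacent)."""
    span = min_span(question_terms, passage_tokens)
    if span is None:
        return 0.0
    matched = len(set(question_terms) & set(passage_tokens))
    return term_overlap(question_terms, passage_tokens) * matched / span


q = ["amtrak", "began", "operations"]
p1 = "in 1971 amtrak began operations".split()
p2 = "amtrak said operations will expand and airfone began service".split()
print(match_score(q, p1), match_score(q, p2))  # 1.0 0.375
```

Both passages contain every question word, so word counting alone cannot separate them; proximity does. Neither measure notices that in the second passage it is Airfone that began, which is what the syntactic-relationship and proof-based approaches address.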


Essence of Text-based QA

Answer Extraction

Find candidate answers of the same type as the answer type sought in the question.

This has implications for the size of the type hierarchy.


Essence of Text-based QA

High-Level View of Recall

There are three broad locations in the system where expansion takes place, for purposes of matching passages.

Where is the right trade-off?

Question Analysis

Expand individual terms to synonyms (hypernyms, hyponyms, related terms)

Reformulate the question (paraphrases)

In the Search Engine

At indexing time

Stemming/lemmatization
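A minimal sketch of expansion at question-analysis time combined with stemming: each question term becomes an OR-group of stemmed variants, and a passage matches if every group is represented. The synonym table is hypothetical (a real system might consult WordNet), and `crude_stem` is a deliberately naive suffix stripper, not the Porter stemmer or a lemmatizer.

```python
# Hypothetical synonym table keyed by stem; a real system might consult WordNet.
SYNONYMS = {
    "create": ["develop", "make"],
    "car": ["automobile"],
}


def crude_stem(word):
    """Deliberately crude suffix stripper (NOT the Porter stemmer)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def expand_query(terms):
    """Question-analysis expansion: one OR-group of stemmed variants per term."""
    groups = []
    for term in terms:
        base = crude_stem(term.lower())
        variants = {base} | {crude_stem(s) for s in SYNONYMS.get(base, [])}
        groups.append(sorted(variants))
    return groups


def passage_matches(groups, passage):
    """True if every OR-group has at least one member among the passage stems."""
    stems = {crude_stem(w) for w in passage.lower().split()}
    return all(any(v in stems for v in group) for group in groups)


groups = expand_query(["create", "Mosaic"])
print(groups)  # [['create', 'develop', 'make'], ['mosaic']]
print(passage_matches(groups, "NCSA developed Mosaic"))  # True
```

Expanding in question analysis keeps the index simple but multiplies query terms; stemming at indexing time moves part of that cost into the search engine, which is the trade-off the slide asks about.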


Essence of Text-based QA

High-Level View of Precision

There are three broad locations in the system where narrowing/filtering/matching takes place.

Where is the right trade-off?

Question Analysis

Include all question terms in query, vs. allow partial matching

Use IDF-style weighting to indicate preferences

Search Engine

Possibly store POS information for polysemous terms

Answer Extraction

Reward (penalize) passages/answers that (don’t) pass matching test


Answer Types and Modifiers

Name 5 French Cities

Most likely there is no type for “French Cities”

Cf. Wikipedia

So will look for CITY

include “French/France” in bag of words, and hope for the best

include “French/France” in bag of words, retrieve documents, and look for evidence (deep parsing, logic)

If you have a list of French cities, could either

Filter results by list

Use Answer-Based QA (see later)

Domain Model: Use longitude/latitude information of cities and countries – practical for domain-oriented systems (e.g. geographical)


Answer Types and Modifiers

Name a female figure skater

Most likely there is no type for “female figure skater”

Most likely there is no type for “figure skater”

Look for PERSON, with query terms {figure, skater}

What to do about “female”? Two approaches:

1. Include “female” in the bag-of-words.

• Relies on the logic that if “femaleness” is an interesting property, it might well be mentioned in answer passages.

• Does not apply to, say, “singer”.

2. Leave out “female” but test candidate answers for gender.

• Needs either an authority file or a heuristic test, e.g. look for she, her, …

• Test may not be definitive.
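A minimal sketch of the heuristic test in approach 2: count female versus male pronouns shortly after each mention of a candidate's surname. The pronoun sets, the 10-token window, taking the last word of the name as the surname, and the example sentences are all assumptions for illustration; as the slide warns, the result is not definitive.

```python
import re

FEMALE = {"she", "her", "hers", "herself"}
MALE = {"he", "him", "his", "himself"}


def guess_gender(candidate, passages, window=10):
    """Heuristic gender test for a PERSON candidate (illustrative only).

    Counts female vs. male pronouns among the `window` tokens that follow
    each mention of the candidate's surname (assumed to be the last token
    of the name). Returns "female", "male", or None when undecided.
    """
    surname = candidate.split()[-1].lower()
    female = male = 0
    for text in passages:
        tokens = re.findall(r"[a-z]+", text.lower())
        for i, tok in enumerate(tokens):
            if tok == surname:
                following = tokens[i + 1 : i + 1 + window]
                female += sum(t in FEMALE for t in following)
                male += sum(t in MALE for t in following)
    if female > male:
        return "female"
    if male > female:
        return "male"
    return None  # the test is not definitive


print(guess_gender("Jane Smith", ["Jane Smith won the free skate; her jumps were clean."]))
# female
```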


Named Entity Recognition

BBN’s IdentiFinder (Bikel et al. 1999)

Hidden Markov Model

Sheffield GATE (http://www.gate.ac.uk/)

Development Environment for IE and other NLP activities

IBM’s Textract/Resporator (Byrd & Ravin, 1999; Wacholder et al. 1997; Prager et al. 2000)

FSMs and Authority Files

+ others

The inventory of semantic classes recognized by the NER is closely related to the set of answer types the system can handle


Answer Extraction

Also called Answer Selection/Pinpointing

Given a question and candidate passages, the process of selecting and ranking candidate answers.

Usually, candidate answers are those terms in the passages which have the same answer type as that generated from the question

Ranking the candidate answers depends on assessing how well the passage context relates to the question

3 Approaches:

Heuristic features

Shallow parse fragments

Logical proof


Answer Extraction using Features

Heuristic feature sets (Prager et al. 2003+). See also (Radev et al. 2000)

Calculate feature values for each candidate answer (CA), and then calculate a linear combination using weights learned from training data.

Features are generic/non-lexicalized and question-independent (vs. supervised IE)

Ranking criteria:

Good global context:

the global context of a candidate answer evaluates the relevance of the passage from which the candidate answer is extracted to the question.

Good local context:

the local context of a candidate answer assesses the likelihood that the answer fills in the gap in the question.

Right semantic type:

the semantic type of a candidate answer should either be the same as or a subtype of the answer type identified by the question analysis component.

Redundancy:

the degree of redundancy for a candidate answer increases as more instances of the answer occur in retrieved passages.


Answer Extraction using Features (cont.)

Features for Global Context

KeywordsInPassage: the ratio of keywords present in a passage to the total number of keywords issued to the search engine.

NPMatch: the number of words in noun phrases shared by both the question and the passage.

SEScore: the ratio of the search engine score for a passage to the maximum achievable score.

FirstPassage: a Boolean value which is true for the highest ranked passage returned by the search engine, and false for all other passages.

Features for Local Context

AvgDistance: the average distance between the candidate answer and keywords that occurred in the passage.

NotInQuery: the number of words in the candidate answer that are not query keywords.
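The sketch below computes several of the features defined above for one candidate answer and combines them linearly. NPMatch is omitted because it needs a noun-phrase chunker; AvgDistance is measured from the first token of the candidate; and the weights in `WEIGHTS` are made up for illustration, whereas the approach described here learns them from training data. `Passage`, `extract_features`, and `score` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    tokens: list        # lower-cased passage tokens
    se_score: float     # score assigned by the search engine
    rank: int           # 0 for the highest-ranked passage


def extract_features(passage, cand_start, cand_len, keywords, max_se_score):
    """Compute some of the global- and local-context features above for the
    candidate answer occupying tokens[cand_start : cand_start + cand_len]."""
    tokens = passage.tokens
    cand_tokens = tokens[cand_start:cand_start + cand_len]
    kw_positions = [i for i, t in enumerate(tokens) if t in keywords]
    if kw_positions:
        avg_distance = sum(abs(i - cand_start) for i in kw_positions) / len(kw_positions)
    else:
        avg_distance = float(len(tokens))  # no keywords: treat as maximally distant
    return {
        # global context
        "KeywordsInPassage": len({tokens[i] for i in kw_positions}) / len(keywords),
        "SEScore": passage.se_score / max_se_score,
        "FirstPassage": 1.0 if passage.rank == 0 else 0.0,
        # local context
        "AvgDistance": avg_distance,
        "NotInQuery": sum(1 for t in cand_tokens if t not in keywords),
    }


# Hypothetical weights; AvgDistance gets a negative weight (closer is better).
WEIGHTS = {"KeywordsInPassage": 2.0, "SEScore": 1.0, "FirstPassage": 0.5,
           "AvgDistance": -0.2, "NotInQuery": 0.3}


def score(features, weights=WEIGHTS):
    """Linear combination of feature values."""
    return sum(weights[name] * value for name, value in features.items())


p = Passage("in 1971 amtrak began operations".split(), se_score=8.0, rank=0)
kw = {"amtrak", "began", "operations"}
f = extract_features(p, cand_start=1, cand_len=1, keywords=kw, max_se_score=10.0)
print(f["KeywordsInPassage"], f["AvgDistance"], f["NotInQuery"])  # 1.0 2.0 1
print(round(score(f), 2))  # 3.2
```

Because the features are non-lexicalized, the same weight vector applies to any question, which is what makes learning it from a modest training set feasible.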


Answer Extraction using Relationships

Can be viewed as additional features

Computing ranking scores: linguistic knowledge is used to compute passage and candidate answer scores

Perform syntactic processing on question and candidate passages

Extract predicate-argument & modification relationships from parse

Question: “Who wrote the Declaration of Independence?”

Relationships: [X, write], [write, Declaration of Independence]

Answer Text: “Jefferson wrote the Declaration of Independence.”

Relationships: [Jefferson, write], [write, Declaration of Independence]

Compute scores based on number of question relationship matches


Answer Extraction using Relationships (cont.)

Example: When did Amtrak begin operations?

Question relationships

[Amtrak, begin], [begin, operation], [X, begin]

Compute passage scores: passages and relationships

In 1971, Amtrak began operations,…

[Amtrak, begin], [begin, operation], [1971, begin]…

“Today, things are looking better,” said Claytor, expressing optimism about getting the additional federal funds in future years that will allow Amtrak to begin expanding its operations.

[Amtrak, begin], [begin, expand], [expand, operation], [today, look]…

Airfone, which began operations in 1984, has installed air-to-ground phones…. Airfone also operates Railfone, a public phone service on Amtrak trains.

[Airfone, begin], [begin, operation], [1984, operation], [Amtrak, train]…
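A minimal sketch of scoring passages by the number of question relationships they contain, using the Amtrak example above. Relationships are (head, dependent) pairs of lemmas, already extracted by a parser (not shown). The rule that the variable X may only bind to a term that is not itself a question term is an assumption added here so that [Amtrak, begin] does not count as an answer to [X, begin]; `match_relationships` is a hypothetical name.

```python
def match_relationships(question_rels, passage_rels, var="X"):
    """Count how many question relationships the passage supports.

    Relationships are (head, dependent) pairs of lemmas. A pair containing
    the variable `var` matches any passage pair that agrees on the other
    slot, as long as the term filling `var` is not itself a question term;
    the terms that fill `var` are returned as candidate answers.
    """
    question_terms = {t for rel in question_rels for t in rel if t != var}
    matched, candidates = 0, set()
    for rel in question_rels:
        if var not in rel:
            if rel in passage_rels:
                matched += 1
            continue
        slot = rel.index(var)
        other = 1 - slot
        fillers = {p[slot] for p in passage_rels
                   if p[other] == rel[other] and p[slot] not in question_terms}
        if fillers:
            matched += 1
            candidates |= fillers
    return matched, candidates


q = [("Amtrak", "begin"), ("begin", "operation"), ("X", "begin")]
p1 = [("Amtrak", "begin"), ("begin", "operation"), ("1971", "begin")]
p2 = [("Amtrak", "begin"), ("begin", "expand"), ("expand", "operation"), ("today", "look")]
p3 = [("Airfone", "begin"), ("begin", "operation"), ("1984", "operation"), ("Amtrak", "train")]
for rels in (p1, p2, p3):
    print(match_relationships(q, rels))
# (3, {'1971'})
# (1, set())
# (2, {'Airfone'})
```

The first passage wins, as intended. The third still scores 2 and proposes Airfone, which the answer-type check (Airfone is not a DATE) would then reject; relationship scores are best treated as additional features rather than the sole criterion.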


Answer Extraction using Logic

Logical Proof

Convert question to a goal

Convert passage to a set of logical forms representing individual assertions

Add predicates representing subsumption rules and real-world knowledge

Prove the goal

See section on LCC next


LCC

Moldovan & Rus, 2001

Uses Logic Prover for answer justification

Question logical form

Candidate answers in logical form

XWN (eXtended WordNet) glosses

Linguistic axioms

Lexical chains

Inference engine attempts to verify the answer by negating the question and proving a contradiction

If the proof fails, predicates in the question are gradually relaxed until the proof succeeds or the associated proof score falls below a threshold.
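The relaxation loop can be sketched in a much simplified form. Instead of a refutation prover with axioms and lexical chains, the sketch below matches a conjunctive goal directly against ground facts (with backtracking over variable bindings), and relaxation means dropping whole predicates, each costing a fixed penalty. The predicate encoding, `penalty`, and `threshold` values are hypothetical and only illustrate the control flow, not LCC's actual prover.

```python
from itertools import combinations


def unify_all(goals, facts, binding=None):
    """Find one variable binding under which every goal matches some fact.

    Goals and facts are tuples such as ("create", "?e", "?x", "mosaic");
    terms starting with "?" are variables. Returns a dict, or None.
    """
    binding = dict(binding or {})
    if not goals:
        return binding
    first, rest = goals[0], goals[1:]
    for fact in facts:
        if len(fact) != len(first):
            continue
        trial, ok = dict(binding), True
        for g, f in zip(first, fact):
            if g.startswith("?"):
                ok = trial.setdefault(g, f) == f  # bind, or check existing binding
            else:
                ok = g == f
            if not ok:
                break
        if ok:
            result = unify_all(rest, facts, trial)  # backtrack on failure
            if result is not None:
                return result
    return None


def prove_with_relaxation(goals, facts, penalty=0.25, threshold=0.5):
    """Try to prove all goals; on failure, drop 1, 2, ... goals (relaxation),
    lowering the proof score each time, until a proof succeeds or the score
    would fall below the threshold. Returns (score, binding)."""
    for dropped in range(len(goals)):
        score = 1.0 - penalty * dropped
        if score < threshold:
            break
        for kept in combinations(goals, len(goals) - dropped):
            binding = unify_all(list(kept), facts)
            if binding is not None:
                return score, binding
    return 0.0, None


# "Which organization created Mosaic?" against a passage that never says
# NCSA is an organization: the proof succeeds only after relaxation.
goals = [("organization", "?x"), ("create", "?e", "?x", "mosaic")]
facts = [("create", "e2", "ncsa", "mosaic")]
print(prove_with_relaxation(goals, facts))  # (0.75, {'?e': 'e2', '?x': 'ncsa'})
```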


LCC: Lexical Chains

Q:1518 What year did Marco Polo travel to Asia?

Answer: Marco polo divulged the truth after returning in 1292 from his

travels, which included several months on Sumatra

Lexical Chains:

(1) travel_to:v#1 -> GLOSS -> travel:v#1 -> RGLOSS -> travel:n#1

(2) travel_to#1 -> GLOSS -> travel:v#1 -> HYPONYM -> return:v#1

(3) Sumatra:n#1 -> ISPART -> Indonesia:n#1 -> ISPART -> Southeast_Asia:n#1 -> ISPART -> Asia:n#1

Q:1570 What is the legal age to vote in Argentina?

Answer: Voting is mandatory for all Argentines aged over 18.

Lexical Chains:

(1) legal:a#1 -> GLOSS -> rule:n#1 -> RGLOSS -> mandatory:a#1

(2) age:n#1 -> RGLOSS -> aged:a#3

(3) Argentine:a#1 -> GLOSS -> Argentina:n#1
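Finding a lexical chain amounts to a path search over a graph of word senses whose edges are labelled relations (GLOSS, RGLOSS, HYPONYM, ISPART, …). The sketch below runs a breadth-first search over a tiny hand-built graph whose edges are copied from the Marco Polo chains above; a real system would search WordNet/XWN, and the names `EDGES` and `lexical_chain` and the `max_len` limit are hypothetical.

```python
from collections import deque

# Toy graph fragment: edges copied from the chains above, labelled by relation.
EDGES = {
    "Sumatra:n#1": [("ISPART", "Indonesia:n#1")],
    "Indonesia:n#1": [("ISPART", "Southeast_Asia:n#1")],
    "Southeast_Asia:n#1": [("ISPART", "Asia:n#1")],
    "travel_to:v#1": [("GLOSS", "travel:v#1")],
    "travel:v#1": [("RGLOSS", "travel:n#1"), ("HYPONYM", "return:v#1")],
}


def lexical_chain(source, target, max_len=4):
    """Breadth-first search for the shortest chain of labelled relations
    from source to target (at most max_len hops); None if there is none."""
    queue = deque([(source, [source])])
    seen = {source}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return " -> ".join(path)
        if (len(path) - 1) // 2 >= max_len:  # path alternates node, relation, node
            continue
        for rel, nxt in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [rel, nxt]))
    return None


print(lexical_chain("Sumatra:n#1", "Asia:n#1"))
# Sumatra:n#1 -> ISPART -> Indonesia:n#1 -> ISPART -> Southeast_Asia:n#1 -> ISPART -> Asia:n#1
```

Limiting the chain length matters in practice: in a full WordNet graph almost any two senses are connected by some long path, so short chains are the ones worth trusting.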


LCC: Logic Prover

Question

Which company created the Internet Browser Mosaic?

QLF: (_organization_AT(x2) ) & company_NN(x2) & create_VB(e1,x2,x6) & Internet_NN(x3) & browser_NN(x4) & Mosaic_NN(x5) & nn_NNC(x6,x3,x4,x5)

Answer passage

... Mosaic, developed by the National Center for Supercomputing Applications (NCSA) at the University of Illinois at Urbana-Champaign ...

ALF: ... Mosaic_NN(x2) & develop_VB(e2,x2,x31) & by_IN(e2,x8) & National_NN(x3) & Center_NN(x4) & for_NN(x5) & Supercomputing_NN(x6) & application_NN(x7) & nn_NNC(x8,x3,x4,x5,x6,x7) & NCSA_NN(x9) & at_IN(e2,x15) & University_NN(x10) & of_NN(x11) & Illinois_NN(x12) & at_NN(x13) & Urbana_NN(x14) & nn_NNC(x15,x10,x11,x12,x13,x14) & Champaign_NN(x16) ...

Lexical chains: develop <-> make and make <-> create

exists x2 x3 x4 all e2 x1 x7 (develop_vb(e2,x7,x1) <-> make_vb(e2,x7,x1) & something_nn(x1) & new_jj(x1) & such_jj(x1) & product_nn(x2) & or_cc(x4,x1,x3) & mental_jj(x3) & artistic_jj(x3) & creation_nn(x3)).

all e1 x1 x2 (make_vb(e1,x1,x2) <-> create_vb(e1,x1,x2) & manufacture_vb(e1,x1,x2) & man-made_jj(x2) & product_nn(x2)).

Linguistic axioms

all x0 (mosaic_nn(x0) -> internet_nn(x0) & browser_nn(x0))


USC-ISI

TextMap system

Ravichandran and Hovy, 2002

Hermjakob et al. 2003

Use of Surface Text Patterns

When was X born ->

Mozart was born in 1756

Gandhi (1869-1948)

Can be captured in expressions

<NAME> was born in <BIRTHDATE>

<NAME> (<BIRTHDATE>

These patterns can be learned

Similar in nature to DIRT, using the Web as a corpus

Developed in the QA application context
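To make the two example patterns concrete, the sketch below instantiates a pattern for a given question term as a regular expression and extracts whatever fills the answer slot. It assumes <BIRTHDATE> is a four-digit year and treats the rest of the pattern as literal text; `TEMPLATES`, `compile_pattern`, and `apply_patterns` are hypothetical names, and TextMap's own matching is not described here.

```python
import re

# The two example patterns above, for the question type "When was X born?"
TEMPLATES = ["<NAME> was born in <BIRTHDATE>", "<NAME> (<BIRTHDATE>"]


def compile_pattern(template, name):
    """Instantiate a surface pattern for one question term as a regex.

    Assumes <BIRTHDATE> is a four-digit year; everything else is literal text.
    """
    regex = ""
    for part in re.split(r"(<NAME>|<BIRTHDATE>)", template):
        if part == "<NAME>":
            regex += re.escape(name)
        elif part == "<BIRTHDATE>":
            regex += r"(\d{4})"  # capture the answer
        else:
            regex += re.escape(part)
    return re.compile(regex)


def apply_patterns(templates, name, text):
    """Return every answer captured by any pattern, in pattern order."""
    answers = []
    for template in templates:
        answers.extend(m.group(1) for m in compile_pattern(template, name).finditer(text))
    return answers


print(apply_patterns(TEMPLATES, "Mozart", "Mozart was born in 1756 in Salzburg."))  # ['1756']
print(apply_patterns(TEMPLATES, "Gandhi", "Gandhi (1869-1948) led the independence movement."))  # ['1869']
```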


USC-ISI TextMap

Use bootstrapping to learn patterns.

For an identified question type (“When was X born?”), start with known answers for some values of X

Mozart 1756

Gandhi 1869

Newton 1642

Issue Web search engine queries (e.g. “+Mozart +1756” )

Collect top 1000 documents

Filter, tokenize, smooth etc.

Use suffix tree constructor to find best substrings, e.g.

Mozart (1756-1791)

Filter

Mozart (1756-

Replace query strings with e.g. <NAME> and <ANSWER>

Determine precision of each pattern

Find documents with just question term (Mozart)

Apply patterns and calculate precision
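The precision step can be sketched as follows: fill <NAME> with the question term, leave <ANSWER> open (here a run of word characters, an assumption), and count how often the captured string is the known answer. The sentences are toy data standing in for documents retrieved with the question term alone; `pattern_precision` is a hypothetical name.

```python
import re


def pattern_precision(template, name, answer, sentences):
    """Precision of one learned pattern for a known (name, answer) pair.

    <NAME> is filled in with the question term and <ANSWER> is left open
    (here: a run of word characters). Precision = correct matches / all matches.
    """
    before, after = template.split("<ANSWER>")
    regex = re.compile(re.escape(before.replace("<NAME>", name))
                       + r"(\w+)"
                       + re.escape(after.replace("<NAME>", name)))
    fired = correct = 0
    for sentence in sentences:
        for m in regex.finditer(sentence):
            fired += 1
            if m.group(1) == answer:
                correct += 1
    return correct / fired if fired else 0.0


# Toy sentences from documents retrieved with the question term (Mozart) alone.
sentences = [
    "Mozart was born in 1756.",
    "Mozart was born in Salzburg.",
    "Mozart (1756-1791) was a composer.",
]
print(pattern_precision("<NAME> was born in <ANSWER>", "Mozart", "1756", sentences))  # 0.5
print(pattern_precision("<NAME> (<ANSWER>-", "Mozart", "1756", sentences))  # 1.0
```

The general-looking pattern fires on a birthplace as well as a birth year, so it earns lower precision than the more specific parenthesized pattern; precision is then used to rank patterns when answering new questions.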


USC-ISI TextMap

Finding Answers

Determine Question type

Perform IR Query

Do sentence segmentation and smoothing

Replace question term by question tag

i.e. replace Mozart with <NAME>

Search for instances of patterns associated with the question type

Select words matching <ANSWER>

Assign scores according to precision of pattern
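The answer-finding steps above can be strung together in a short sketch: segment the text into sentences, replace the question term with the <NAME> tag, apply each pattern, and score every extracted answer by the precision of the best pattern that produced it. The regex-based sentence splitter, the `(\w+)` answer slot, taking the maximum rather than summing, and the precision values are all assumptions; smoothing is skipped. `find_answers` is a hypothetical name.

```python
import re


def find_answers(question_term, text, patterns):
    """Apply learned patterns to retrieved text and score candidate answers.

    patterns: (template, precision) pairs; templates contain <NAME> and one
    <ANSWER>. Each answer keeps the highest precision of any pattern that
    extracted it. Returns (answer, score) pairs, best first.
    """
    # Crude sentence segmentation: split after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text)
    scores = {}
    for sentence in sentences:
        # Replace the question term by the question tag.
        tagged = sentence.replace(question_term, "<NAME>")
        for template, precision in patterns:
            before, after = template.split("<ANSWER>")
            regex = re.escape(before) + r"(\w+)" + re.escape(after)
            for m in re.finditer(regex, tagged):
                answer = m.group(1)
                scores[answer] = max(scores.get(answer, 0.0), precision)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical pattern precisions, e.g. as estimated in the previous step.
patterns = [("<NAME> (<ANSWER>-", 1.0), ("<NAME> was born in <ANSWER>", 0.5)]
text = ("Mozart was born in 1756. Mozart (1756-1791) wrote operas. "
        "Mozart was born in Salzburg.")
print(find_answers("Mozart", text, patterns))  # [('1756', 1.0), ('Salzburg', 0.5)]
```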


Additional Linguistic Phenomena


Negation (1)

Q: Who invented the electric guitar?

A: While Mr. Fender did not invent the electric guitar, he did revolutionize and perfect it.

Note: Not all instances of “not” will invalidate a passage.


Negation (2)

Name a US state where cars are manufactured.

versus

Name a US state where cars are not manufactured.

Certain kinds of negative events or instances are rarely asserted explicitly in text, but must be deduced by other means


Other Adverbial Modifiers (Only, Just etc.)

Name an astronaut who nearly made it to the moon

To answer such questions satisfactorily, one needs to know the different ways in which events can fail to happen. In this case there are several.


Attention to Details

Tenses

Who is the Prime Minister of Japan?

Number

What are the largest snakes in the world?



Jeopardy Examples - Correct

Literary Character

Wanted for killing Sir Danvers Carew;

Seems to have a split personality

Hyde – correct (Dr. Jekyll and Mr. Hyde)

Category: Olympic Oddities

Milorad Cavic almost upset this man's perfect 2008 Olympics, losing to him by 1/100th of a second

Michael Phelps

(identified answer type: “man”)


Jeopardy Examples - Wrong

Name the decade:

The first modern crossword puzzle is published

& Oreo cookies are introduced

Watson: 1920s (57% confidence), which is wrong; the correct answer, 1910s, had only 30%

largest US airport named after a World War II hero

Watson: Toronto, the name of a Canadian city (wrong).

(Watson missed that “US airport” means the airport is in the US, or that Toronto is not in the US.)


General Perspective on Semantic Applications

Semantic applications as “text matching”

Matching between target texts and

Supervised: training texts

Unsupervised: user input (e.g. question)

Cf. the textual entailment paradigm
