Top-down classification


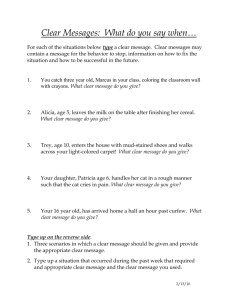

Information Access I: Information Representation and Text Searching

GSLT, Göteborg, September 2003
Barbara Gawronska, Högskolan i Skövde

Requirements on information representation:

- discriminating power
- descriptive power
- similarity identification
- ambiguity minimization
- conciseness

These requirements may conflict with one another.

Traditional descriptors:

- classification codes (e.g. Universal Decimal Classification)
- subject headings
- key words

Problems:

- standardized lists of subject headings are needed
- different spelling conventions
- morphology: inflection, derivation and compounding
- semantic relations

Strategies for linking related words and phrases

Different spelling conventions: spelling checkers; for proper names, counting the number of identical letters or identical bigrams (letter pairs) that two strings share. This could be improved by adding some phonological knowledge (metathesis etc.), e.g. BARBARA GAWRONSKA vs. BARBRO GRAVONSKA.

Relations on the morphological level:

Truncation: finding the common part of a string, with no language-specific morphological knowledge. Problem: too many unrelated words may pass through, e.g.

- `ren#`: renen, renar, rena, rent, renad...
- `ren$$`: renen, renar, renad...

Strategies for linking related words and phrases (2)

Lemmatization: identifying the lexical form.

Stemming: a strategy between truncation and lemmatization. The general principle for English (Lovins 1968, Paice 1990): remove the ending, and transform the ending of the remaining string if needed.

Stemming algorithms are language-dependent. Consider e.g. Indonesian, where the active form is built with a prefix whose nasal may replace the first consonant of the base form (tawar → menawar, but beri → memberi), so a stemmer must strip prefixes and restore that consonant:

| Infinitive | Active | Meaning |
|---|---|---|
| tawar | menawar | "bargain" |
| pikir | memikir | "think" |
| beri | memberi | "give" |
| sewa | menyewa | "rent" |

Strategies for linking related words and phrases (3)

Multi-word entries: context operators, e.g.
- exact distance between words: `retrieval$information` matches "retrieval of information" and "retrieval with information loss"
- maximal distance between words: `text##retrieval` matches "text retrieval" and "text and data retrieval"
- unspecified word order: `information#,retrieval` matches "information retrieval" and "retrieval of information"

These can be complemented by the word-pair co-occurrence rate.

Strategies for linking related words and phrases (4)

Semantic relations: thesauri, lexicons and semantic nets as tools for term expansion. Some examples:

- ERIC Thesaurus of Descriptors (the Dialog Corporation)
- Roget's Thesaurus
- KL-ONE
- WordNet...

Normally used relations: broader/narrower term, related term, synonym, "used for"/"use" (identifies a preferred synonym); some resources also use entailment (WordNet) or role (KL-ONE).

Thesauri: top-down classification

Monohierarchy:

- cat
  - wildcat
    - panther
    - ocelot
  - domestic cat
    - Siamese cat
    - Angora

Thesauri: polyhierarchy

A narrower term may have more than one broader term: here Angora appears under both domestic cat and mouser.

Thesauri: polydimensional hierarchy

Without facets, domestic cat simply has the narrower terms mouser, stray, Burmese cat, Siamese cat and Angora. Grouped by dimension:

- domestic cat
  - function/life style
    - mouser
    - stray
  - breed
    - Angora
    - Siamese cat
    - Burmese cat

Thesauri: WordNet, some problems

WordNet glosses for cat and its two narrower terms:

- cat: "feline mammal usually having thick soft fur and being unable to roar; domestic cats; wildcats"
  - domestic cat: "any domesticated member of the genus Felis"
  - wildcat: "any small or medium-sized cat resembling the domestic cat and living in the wild"

Glosses of the narrower terms listed under domestic cat:

- female cat
- a long-haired breed similar to the Persian cat
- a slender short-haired blue-eyed breed of cat having a pale coat with dark ears paws face and tail tip
- Siamese cat having a bluish cream body and dark gray points
- a cat proficient at mousing
- a long-haired breed of cat
- a short-haired breed with body similar to the Siamese cat but having a solid dark brown or gray coat
- a short-haired bluish-gray cat breed
- a small slender short-haired breed of African origin having brownish
fur with a reddish undercoat
- homeless cat
- …

At a single level, these narrower terms mix breed, sex (female cat), function (a cat proficient at mousing) and life style (homeless cat), the dimensions that a polydimensional hierarchy keeps apart.

Glosses of the narrower terms listed under wildcat:

- widely distributed wildcat of Africa and Asia Minor
- long-bodied long-tailed tropical American wildcat
- small spotted wildcat found from Texas to Brazil
- bushy-tailed European wildcat resembling the domestic tabby and regarded as the ancestor of the domestic cat
- medium-sized wildcat of Central and South America having a dark-striped coat
- small Asiatic wildcat
- a desert-dwelling wildcat
- …
- short-tailed wildcats with usually tufted ears; valued for their fur
  - of northern Eurasia
  - of southern Europe
  - small lynx of North America
  - of deserts of northern Africa and southern Asia
  - of northern North America

Thesauri: bottom-up classification

| Attribute | Values |
|---|---|
| A: size | A1: middle, A2: small, A3: big |
| B: fur | B1: short, B2: long |
| C: colour | C1: pale, C2: dark, C3: striped |
| D: eye colour | D1: blue, D2: green |

Finding significant words

[Figure: significance as a function of rank (Luhn 1958), logarithmic axes]

A simple frequency-based indexing method: take the frequent words, remove those on a stop list, then apply truncation/conflation.

Finding significant words (2)

Term weighting (Salton & McGill 1983): the "tf × idf" method (term frequency × inverse document frequency):

$$Weight_{ij} = Frequency_{ij} \cdot \log_2 \frac{n}{DocFreq_j}$$

where $Frequency_{ij}$ is the number of occurrences of term $j$ in document $i$, $DocFreq_j$ is the number of documents containing term $j$, and $n$ is the number of documents in the collection.

"Tf × idf" can be combined with similarity measures, e.g.
the vector space model.

Similarity measures

Models for comparing texts normally make use of the words the texts have in common. Some models also take into account the size of the documents and/or the number of words the texts do not have in common.

Similarity measures (2)

$$DocSim_1(D_1, D_2) = \sum_{j=1}^{T} t_{D_1 j} \, t_{D_2 j}$$

where $t_{D_i j}$ is the weight of an occurrence of term $j$ in document $D_i$, and $T$ is the maximum number of terms in both documents combined. No attention is paid to the size of a document.

Similarity measures (3)

Dice's coefficient:

$$DocSim_2(D_1, D_2) = \frac{2 \sum_{j=1}^{T} t_{D_1 j} \, t_{D_2 j}}{\sum_{j=1}^{T} t_{D_1 j} + \sum_{j=1}^{T} t_{D_2 j}}$$

Jaccard's coefficient:

$$DocSim_3(D_1, D_2) = \frac{\sum_{j=1}^{T} t_{D_1 j} \, t_{D_2 j}}{\sum_{j=1}^{T} t_{D_1 j} + \sum_{j=1}^{T} t_{D_2 j} - \sum_{j=1}^{T} t_{D_1 j} \, t_{D_2 j}}$$

Similarity measures (4)

The cosine coefficient (the cosine of the angle between the two document vectors):

$$DocSim_4(D_1, D_2) = \frac{\sum_{j=1}^{T} t_{D_1 j} \, t_{D_2 j}}{\left( \sum_{j=1}^{T} t_{D_1 j}^2 \cdot \sum_{j=1}^{T} t_{D_2 j}^2 \right)^{1/2}}$$

Similarity measures (5)

- Clustering by similarity matrices (Jaccard's coefficient applied to attribute/value matrices)
- Document signature matching (documents coded into very compact binary representations, so-called signatures)
- Discriminator words (Williams 1963): the discrimination coefficient assigns high values to words whose probability of occurrence differs greatly from the mean probability
- Latest advances in document clustering: wait for Hercules Dalianis' lecture!

Which words should count as common to both documents?

> As summer turns to fall, many brewers start to plan their Oktoberfest brewing. This installment of "Brewing in Styles" looks at the materials and techniques used for brewing traditional and modern Maerzen beers and offers some radical tips for brewing Oktoberfest-like ales. Ein prosit!
>
> Several people called in response to the last installment of "Brewing in Styles" ("American Wheat," BrewingTechniques 1 [1], May/June 1993) to say that they were confused because many pubs and micros in the Midwest brew wheat beers in the traditional German manner, complete with the 4-vinylguaiacol clovelike character. Many fine German-style Weizenbiers are brewed in America.
* * *

> Republished from BrewingTechniques' July/August 1993.
>
> What to do with that unfortunate mistake of a recipe? Design another beer that is out of balance in an opposite and complementary way.
>
> It invariably happens, even to the best of us. The beer that should have been so good ends up out of balance and undrinkable. Not being the type to accept less-than-perfect products graciously, I decided to take a page from the Belgian book of brewing. Belgian brewers have long used the practice of blending to even out inconsistent, wild fermentations

Relevance estimation

The Retrieval Status Value (rsv) is the measure of closeness between the query and the document.

- In strictly Boolean systems, the rsv is either 0 or 1.
- In fuzzy (weighted) Boolean retrieval, it takes values between 0 and 1; however, "false drops" are very probable because of how the retrieval functions are defined:

$$QR_3 = QR_1 \wedge QR_2: \quad rsv_{i,3} = \min(rsv_{i,1}, rsv_{i,2})$$

$$QR_3 = QR_1 \vee QR_2: \quad rsv_{i,3} = \max(rsv_{i,1}, rsv_{i,2})$$

Relevance estimation (2)

The vector space model: the closeness of the query vector and the document vector, computed with one of the similarity measures introduced earlier (Dice, Jaccard or cosine):

$$rsv_{i,k} = \frac{\sum_{j=1}^{MT} dtw_{i,j} \cdot qtw_{j,k}}{\text{normalization factor}}$$

where $dtw_{i,j}$ is the weight of term $j$ in document $i$ and $qtw_{j,k}$ the weight of term $j$ in query $k$, summed over all $MT$ terms.

Relevance estimation (3)

The probabilistic model (a feedback model):

$$qtw_{j,k} = \frac{r_j \, (MD - n_j - R_k + r_j)}{(n_j - r_j)(R_k - r_j)}$$

$$rsv_{i,k} = \sum_{j \in Q_k \cap D_i} qtw_{j,k}$$

where

- $qtw_{j,k}$ is the weight of term $j$ in query $k$
- $r_j$ is the number of relevant documents in which term $j$ occurs
- $MD$ is the total number of documents
- $n_j$ is the number of documents in which term $j$ occurs
- $R_k$ is the total number of documents that are relevant for query $q_k$ (a non-trivial problem!)

so the rsv of document $D_i$ is the sum of the weights of the query terms that also occur in it.
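The bigram counting suggested earlier for proper names can be sketched in Python. This is a minimal sketch under stated assumptions: the overlap is normalized Dice-style (twice the shared bigrams divided by all bigrams), bigrams are taken within words only, the function names are hypothetical, and no phonological knowledge is included.

```python
from collections import Counter


def bigrams(name):
    """Multiset of letter pairs within each word of `name` (case-insensitive)."""
    pairs = Counter()
    for word in name.upper().split():
        pairs.update(word[i:i + 2] for i in range(len(word) - 1))
    return pairs


def bigram_similarity(a, b):
    """Dice-style score: 2 * shared bigrams / (bigrams in a + bigrams in b)."""
    ba, bb = bigrams(a), bigrams(b)
    shared = sum((ba & bb).values())  # multiset intersection keeps minimum counts
    total = sum(ba.values()) + sum(bb.values())
    return 2 * shared / total if total else 0.0


print(round(bigram_similarity("BARBARA GAWRONSKA", "BARBRO GRAVONSKA"), 2))  # 0.67
```

The two spellings share 9 of their 14 + 13 bigrams. The moved R in GAWRONSKA/GRAVONSKA breaks several pairs, which is where the phonological knowledge mentioned in the slides (metathesis) would help.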
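The truncation operators in the `ren#` / `ren$$` examples can be emulated with regular expressions. The meaning of the symbols is inferred from those examples, so treat it as an assumption: `#` matches any number of characters and each `$` exactly one character, which is why `ren$$` keeps renen, renar and renad but not rena or rent.

```python
import re


def truncation_regex(pattern):
    """'#' = any number of characters, '$' = exactly one character (assumed meanings)."""
    parts = []
    for ch in pattern:
        if ch == "#":
            parts.append(".*")
        elif ch == "$":
            parts.append(".")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts))


def truncate_match(pattern, words):
    """Words matched in full by the truncation pattern."""
    rx = truncation_regex(pattern)
    return [w for w in words if rx.fullmatch(w)]


words = ["renen", "renar", "rena", "rent", "renad"]
print(truncate_match("ren#", words))   # ['renen', 'renar', 'rena', 'rent', 'renad']
print(truncate_match("ren$$", words))  # ['renen', 'renar', 'renad']
```

Both patterns still mix Swedish "reindeer" (renen, renar) with "clean/purify" (rena, rent, renad), which is the problem the slide points out: truncation has no morphological knowledge.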
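The stemming principle for English (remove the ending, then transform the end of the remaining string) can be sketched as follows. The ending list and recoding rules are a tiny hypothetical sample chosen for the demonstration, not the rule sets of Lovins (1968) or Paice (1990).

```python
# Hypothetical mini rule sets for illustration; real stemmers use far larger lists.
ENDINGS = ["ation", "ion", "ing", "ive", "ed", "es", "e", "s"]
RECODE = [("iev", "ief"), ("rpt", "rb")]  # stem-final strings to transform
DOUBLES = set("bdglmnprst")  # final doubled consonants to undouble


def stem(word, min_stem=2):
    word = word.lower()
    # 1. Remove the longest listed ending, keeping at least `min_stem` letters.
    for ending in sorted(ENDINGS, key=len, reverse=True):
        if word.endswith(ending) and len(word) - len(ending) >= min_stem:
            word = word[: -len(ending)]
            break
    # 2. Transform the end of the remaining string: undouble, then recode.
    if len(word) >= 2 and word[-1] == word[-2] and word[-1] in DOUBLES:
        word = word[:-1]
    for old, new in RECODE:
        if word.endswith(old):
            word = word[: -len(old)] + new
            break
    return word


for w in ["believes", "belief", "absorption", "absorbing", "stopped", "stops"]:
    print(w, "->", stem(w))
```

Removing the ending alone would leave "believ" and "absorpt"; the recoding step is what lets them meet "belief" and "absorb".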
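The context operators for multi-word entries can be checked on tokenized text. This sketch assumes, from the slide's examples, that each `$` stands for exactly one intervening word, each `#` for at most one, and the comma for either word order; the `near` helper is hypothetical.

```python
import re


def tokens(text):
    return re.findall(r"\w+", text.lower())


def near(text, first, second, min_gap, max_gap, ordered=True):
    """True if `first` and `second` occur with min_gap..max_gap words between them.

    ordered=True requires `first` to come before `second`; ordered=False allows both orders.
    """
    toks = tokens(text)
    pos_first = [i for i, t in enumerate(toks) if t == first]
    pos_second = [i for i, t in enumerate(toks) if t == second]
    for i in pos_first:
        for j in pos_second:
            if ordered and j <= i:
                continue
            if min_gap <= abs(j - i) - 1 <= max_gap:
                return True
    return False


# retrieval$information: exactly one word in between
print(near("retrieval with information loss", "retrieval", "information", 1, 1))  # True
# text##retrieval: at most two words in between
print(near("text and data retrieval", "text", "retrieval", 0, 2))  # True
# information#,retrieval: at most one word in between, either order
print(near("retrieval of information", "information", "retrieval", 0, 1, ordered=False))  # True
```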
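Term expansion with a thesaurus amounts to following broader/narrower links. A sketch, assuming the hierarchy is stored as a hypothetical dictionary of narrower terms built from the cat examples; the extra `mouser` entry gives Angora its second broader term, as in the polyhierarchy.

```python
from collections import deque

# Narrower-term links from the cat monohierarchy, plus 'mouser' -> 'Angora'
# from the polyhierarchy example.
NARROWER = {
    "cat": ["wildcat", "domestic cat"],
    "wildcat": ["panther", "ocelot"],
    "domestic cat": ["Siamese cat", "Angora"],
    "mouser": ["Angora"],
}


def broader_index(narrower):
    """Invert narrower-term links into broader-term links."""
    bt = {}
    for parent, children in narrower.items():
        for child in children:
            bt.setdefault(child, []).append(parent)
    return bt


def expand(term, links):
    """Return `term` plus every term reachable through `links` (breadth-first)."""
    seen, queue, order = {term}, deque([term]), [term]
    while queue:
        for nxt in links.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


print(expand("cat", NARROWER))
# ['cat', 'wildcat', 'domestic cat', 'panther', 'ocelot', 'Siamese cat', 'Angora']
print(expand("Angora", broader_index(NARROWER)))
# ['Angora', 'domestic cat', 'mouser', 'cat']
```

Expanding downwards broadens recall for a general query; walking upwards shows why a polyhierarchy matters, since Angora reaches both of its broader terms.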
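The simple frequency-based indexing method (frequent words, minus a stop list, plus truncation/conflation) might look like this. The stop list, the prefix length used for conflation, and the frequency threshold are all illustrative assumptions; the stop list plays the role of an upper cut-off for very common words on Luhn's curve.

```python
import re
from collections import Counter

STOP_WORDS = {"a", "and", "in", "of", "the", "to"}  # hypothetical, tiny stop list


def index_terms(text, min_freq=2, prefix_len=5):
    """Frequent words after stop-word removal, conflated by truncation to `prefix_len` letters."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w[:prefix_len] for w in words if w not in STOP_WORDS)
    return sorted(term for term, freq in counts.items() if freq >= min_freq)


text = ("Brewers plan their Oktoberfest brewing. "
        "Brewing traditional beers and brewing modern beers.")
print(index_terms(text, prefix_len=4))  # ['beer', 'brew']
```

Truncation to four letters conflates "brewers" and "brewing"; it would also conflate unrelated words, as the `ren#` example shows.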
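The tf × idf weighting translates directly into code. A minimal sketch, assuming documents are already tokenized lists of terms; the toy collection is made up for illustration.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Weight_ij = Frequency_ij * log2(n / DocFreq_j) for each term j of each document i."""
    n = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        freq = Counter(doc)
        weights.append({t: f * math.log2(n / doc_freq[t]) for t, f in freq.items()})
    return weights


docs = [
    ["wheat", "beer", "beer"],
    ["lager", "beer"],
    ["wheat", "ale"],
    ["stout", "ale"],
]
w = tf_idf(docs)
print(w[0])  # {'wheat': 1.0, 'beer': 2.0}
```

A term that occurs in every document gets weight 0, since log2(n/n) = 0, while a term concentrated in few documents ("lager", 2.0) is favored.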
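The four similarity measures DocSim1 to DocSim4 can be written over sparse weight vectors (dicts from term to weight, with absent terms weighted 0). A sketch; the function names and the toy documents are hypothetical.

```python
import math


def _dot(d1, d2):
    """Sum over shared terms of t_D1j * t_D2j."""
    return sum(w * d2.get(t, 0.0) for t, w in d1.items())


def doc_sim1(d1, d2):
    """Plain inner product; ignores document size."""
    return _dot(d1, d2)


def dice(d1, d2):
    total = sum(d1.values()) + sum(d2.values())
    return 2 * _dot(d1, d2) / total if total else 0.0


def jaccard(d1, d2):
    common = _dot(d1, d2)
    denom = sum(d1.values()) + sum(d2.values()) - common
    return common / denom if denom else 0.0


def cosine(d1, d2):
    norm = math.sqrt(sum(w * w for w in d1.values()) * sum(w * w for w in d2.values()))
    return _dot(d1, d2) / norm if norm else 0.0


d1 = {"beer": 1, "wheat": 1, "german": 1}
d2 = {"beer": 1, "wheat": 1, "american": 1, "pub": 1}
for f in (doc_sim1, dice, jaccard, cosine):
    print(f.__name__, round(f(d1, d2), 3))
```

With binary weights, Dice and Jaccard reduce to their familiar set forms (here 4/7 and 2/5). With weights above 1 the linear-denominator forms can exceed 1 (Jaccard's denominator can even turn negative), so they suit binary or normalized weights; the cosine stays within [0, 1] for non-negative weights.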
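Fuzzy (weighted) Boolean retrieval applies min for AND and max for OR, as in the rsv formulas above. A sketch, assuming queries are nested tuples (a hypothetical representation) and each document's term weights lie between 0 and 1.

```python
def fuzzy_rsv(query, weights):
    """rsv of one document for a fuzzy Boolean query.

    query: a term, or a tuple ("and" | "or", subquery, subquery, ...);
    weights: term -> weight between 0 and 1 for this document (absent terms count as 0).
    """
    if isinstance(query, str):
        return weights.get(query, 0.0)
    op, *parts = query
    values = [fuzzy_rsv(part, weights) for part in parts]
    if op == "and":
        return min(values)  # QR1 AND QR2 -> min
    if op == "or":
        return max(values)  # QR1 OR QR2 -> max
    raise ValueError(f"unknown operator: {op!r}")


doc = {"beer": 0.9, "wheat": 0.1, "ale": 0.4}
print(fuzzy_rsv(("and", "beer", ("or", "wheat", "ale")), doc))  # 0.4
```

Because min keeps only the weakest weight, a document with weights (0.9, 0.1) scores the same 0.1 under AND as one with (0.1, 0.1); each result depends on a single operand, one reason the ranking can be misleading.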
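For the vector space model, the sketch below ranks documents by rsv, using the cosine denominator as the normalization factor; that choice is an assumption, since the formula leaves the factor open. The documents and query weights are made up for illustration.

```python
import math


def vector_rsv(dtw, qtw):
    """Sum of dtw_j * qtw_j over the query terms, divided by the cosine normalization."""
    dot = sum(w * dtw.get(term, 0.0) for term, w in qtw.items())
    norm = (math.sqrt(sum(w * w for w in dtw.values()))
            * math.sqrt(sum(w * w for w in qtw.values())))
    return dot / norm if norm else 0.0


def rank(docs, qtw):
    """(document id, rsv) pairs, highest rsv first."""
    scores = {doc_id: vector_rsv(dtw, qtw) for doc_id, dtw in docs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


docs = {
    "d1": {"wheat": 1.0, "beer": 2.0},
    "d2": {"lager": 2.0, "beer": 1.0},
    "d3": {"ale": 1.0},
}
query = {"wheat": 1.0, "beer": 1.0}
for doc_id, score in rank(docs, query):
    print(doc_id, round(score, 3))
```

Unlike the Boolean models, every document gets a graded score, so the output is a ranking rather than a set.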
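A sketch of the probabilistic feedback weights, assuming the relevance counts $r_j$ and $R_k$ are already known from user judgments. The formula divides by zero when a term occurs only in relevant documents ($n_j = r_j$) or in all of them ($r_j = R_k$), so the sketch adds 0.5 to each count by default; this correction is common practice, not part of the slide. Many formulations also take the logarithm of the ratio before summing; the sketch follows the formula as given.

```python
def query_term_weight(r, n, R, MD, correction=0.5):
    """qtw_{j,k} = r(MD - n - R + r) / ((n - r)(R - r)), with `correction` added to each count.

    r: relevant documents containing term j; n: documents containing term j;
    R: documents relevant for query k; MD: documents in the collection.
    """
    c = correction
    return ((r + c) * (MD - n - R + r + c)) / ((n - r + c) * (R - r + c))


def prob_rsv(doc_terms, query_weights):
    """rsv_{i,k}: sum of qtw_{j,k} over the query terms that occur in document i."""
    return sum(w for term, w in query_weights.items() if term in doc_terms)


# Hypothetical counts: 100 documents, 10 of them relevant to the query.
weights = {
    "wheat": query_term_weight(r=8, n=20, R=10, MD=100, correction=0),  # 26.0
    "beer": query_term_weight(r=5, n=50, R=10, MD=100, correction=0),   # 1.0
}
print(prob_rsv({"wheat", "beer", "ale"}, weights))  # 27.0
```

A weight of 1.0 ("beer") means the term is as likely in relevant as in non-relevant documents, so it carries no evidence; "wheat", concentrated in the relevant set, dominates the score.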