
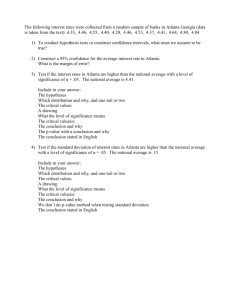

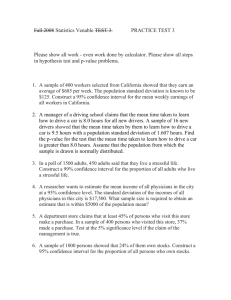


Based on a sample x1, x2, … , x12 of 12 values from a population that is presumed normal, Genevieve tested H0: μ = 20 versus H1: μ ≠ 20 at the 5% level of significance. She got a t statistic of 2.23, and Minitab reported the p-value as 0.048. Genevieve properly rejected H0. But Genevieve is a little concerned. Why?

(a) She is worried that she might have made a Type II error.
(b) She is concerned about whether the 95% confidence interval might contain the value 20.
(c) The p-value is close to 0.05, and she worries that she might have made a Type I error.
(d) She wonders if using a test at the 10% level of significance might have given her an even smaller p-value.

Since Genevieve rejected H0, a Type II error is not in play, so choice (a) is incorrect. As for (b), we know for sure that the comparison value 20 is outside the 95% confidence interval for μ, because H0 was rejected at the 5% level. Thus (b) is also incorrect. The best answer is (c). The hypothesis test, with a small sample size, just barely rejected H0, so it is certainly possible that a Type I error is involved. Choice (d) is completely misguided. The p-value is computed from the data, and its calculation has nothing to do with the level of significance. Keep in mind that the p-value can be compared to a specified significance level for the simple purpose of telling whether H0 would have been rejected at that level. For example, using α = 0.05 and getting p = 0.0381 from the data would mean rejecting H0, since 0.0381 < 0.05.

Based on a sample x1, x2, … , x19,208 of 19,208 observations from a population that is presumed normal, Karl tested H0: μ = 140 versus H1: μ ≠ 140. He got a t statistic of −2.18, and Minitab reported the p-value as 0.029. Karl properly rejected H0. But Karl is a little concerned. Why?

(a) He is considering whether a sample size of around 40,000 might give him a smaller p-value.
(b) He is concerned that his results would not be found significant at the 0.01 level of significance.
(c) He is concerned that his printed t table did not have a line for 19,207 degrees of freedom.
(d) He is worried that his significant result might not be useful.

The best answer is (d). These results are useless! Observe that

$$ t = \frac{\bar{x} - 140}{s/\sqrt{19{,}208}} = -2.18 \quad\Longrightarrow\quad \frac{\bar{x} - 140}{s} = \frac{-2.18}{\sqrt{19{,}208}} \approx -0.0157 $$

The data suggest that the true mean μ is only about 1.57% of a standard deviation below the target 140. No one will notice or care! Statement (a) happens to be true, but it should not be the cause of any concern. Yes, a bigger sample size would likely produce a smaller p-value, but the results with a sample size over 19,000 are already useless. It would be madness to run up the expenses for a sample of 40,000! Statement (b) is also true, but it is not the proper cause of any concern. Yes, it is awkward to run such a large experiment and not get significance at the 1% level, but it is already clear that the results are useless, so this kind of concern is not helpful. As for (c), the value of t0.025; 19,207 is very, very close to 1.96 (Minitab gives it as 1.96009), so the normal table serves perfectly well.

Impossible … or … could happen?

Alicia tested H0: μ = 405 against H1: μ ≠ 405 and rejected H0 with a p-value of 0.0278. Alicia was unaware of the true value of μ and committed a Type I error.

Yes, this could happen (it requires the true μ to be exactly 405). Whenever we reject H0, we might be making a Type I error.

Impossible … or … could happen?

Celeste tested H0: p = 0.70 versus H1: p ≠ 0.70, where p is the population probability that a randomly selected subject will like Citrus-Ola orange juice better than Tropicana. She had 85 subjects, and she ended up rejecting H0 with α = 0.05. Lou worked the next day with 105 subjects (all 85 that Celeste had, plus an additional 20), and Lou accepted H0 with α = 0.05.

This could happen, for example if the 20 new subjects pulled the sample proportion back toward 0.70. It is not expected, however. Usually, enlarging the sample size makes it even easier to reject H0.

Impossible … or … could happen?
In an experiment with two groups of subjects, it happened that
sx = standard deviation of x-group = 4.0
sy = standard deviation of y-group = 7.1
sp = pooled standard deviation = 7.8

This is impossible, as sp must be between sx and sy. The pooled variance sp² is a weighted average of sx² and sy².

Impossible … or … could happen?

In an experiment with two groups of subjects, it happened that
sx = standard deviation of x-group = 4.0
sy = standard deviation of y-group = 7.1
sz = standard deviation of all subjects combined = 7.8

This can happen. The combined standard deviation also reflects the spread between the two group means, so it is usually larger than the two separate standard deviations.

True … or … false?

Alvin always uses α = 0.05 in his hypothesis testing problems. In the long run, Alvin will reject about 5% of all the hypotheses he tests.

False! Alvin's rejection rate depends on which hypotheses he chooses to test. What is true is that, in the long run, Alvin will reject about 5% of the TRUE hypotheses that he tests.

True … or … false?

If you perform 40 independent hypothesis tests, each at the significance level 0.05, and if all 40 null hypotheses are true, you will commit exactly two Type I errors.

False. The number of Type I errors is a binomial random variable with n = 40 and p = 0.05. The expected number of errors is two, but the actual count can be anything from 0 to 40.

True … or … false?

The test of H0: μ = 0 versus H1: μ ≠ 0 at level 0.05 with data x1, x2, … , x50 will have a larger Type II error probability than the test of H0: μ = 0 versus H1: μ ≠ 0 at level 0.05 with data x1, x2, … , x100.

True. All else being equal, the major benefit of enlarging the sample size is reducing the Type II error probability.

True … or … false?

Steve had a binomial random variable X based on n = 84 trials. He tested H0: p = 0.60 versus H1: p ≠ 0.60 with observed value x = 65, and this resulted in a p-value of 0.001. The probability is 99.9% that H0 is false.

This is false. This is a very serious misinterpretation of the p-value. Remember that the p-value is P[data at least as extreme as these | H0 true], not P[H0 true | these data].

True … or … false?
You are testing H0: p = 0.70 versus H1: p ≠ 0.70 at significance level α = 0.05. A sample of size n = 200 will allow you to claim a smaller Type I error probability than a sample of size n = 150.

This is false. The probability of Type I error is α = 0.05, the value you specify, whatever the sample size.

From Museum of Bad Art, Somerville, Massachusetts.
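Several answers above can be checked with a few lines of arithmetic. The Python sketch below is illustrative and not part of the original problems. It reproduces Karl's standardized distance of −0.0157 and treats the number of Type I errors in 40 tests as Binomial(40, 0.05). It also checks that a pooled standard deviation falls between sx and sy, while a combined-sample standard deviation can exceed both. Some inputs are hypothetical choices rather than values from the problems: the group sizes (10 and 15), the toy data sets, and the assumed true effect of 0.3 standard deviations for a known-σ z test in the Type II comparison.

```python
import math
from statistics import NormalDist, stdev

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def standardized_distance(t: float, n: int) -> float:
    """(xbar - mu0) / s implied by a one-sample t statistic, i.e. t / sqrt(n)."""
    return t / math.sqrt(n)


def prob_k_type1_errors(k: int, m: int = 40, alpha: float = 0.05) -> float:
    """Binomial P(exactly k Type I errors) in m independent tests, all nulls true."""
    return math.comb(m, k) * alpha**k * (1 - alpha) ** (m - k)


def pooled_sd(sx: float, nx: int, sy: float, ny: int) -> float:
    """Pooled SD: square root of the (n - 1)-weighted average of the two variances."""
    return math.sqrt(((nx - 1) * sx**2 + (ny - 1) * sy**2) / (nx + ny - 2))


def type2_prob(n: int, effect: float, alpha: float = 0.05) -> float:
    """Type II error probability of a two-sided z test with known sigma, when the
    true mean sits `effect` standard deviations away from the null value
    (a z test is used here as a stand-in for the t test)."""
    z = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect * math.sqrt(n)
    power = STD_NORMAL.cdf(shift - z) + STD_NORMAL.cdf(-shift - z)
    return 1 - power


# Karl: t = -2.18 with n = 19,208 observations
karl = standardized_distance(-2.18, 19_208)
print(f"Karl: (xbar - 140)/s = {karl:.4f}")  # -0.0157

# 40 true nulls, each tested at alpha = 0.05
p_two = prob_k_type1_errors(2)
mean_errors = sum(k * prob_k_type1_errors(k) for k in range(41))
print(f"P(exactly 2 Type I errors) = {p_two:.3f}, expected count = {mean_errors:.2f}")

# Pooled SD with hypothetical group sizes 10 and 15: lands between 4.0 and 7.1
sp = pooled_sd(4.0, 10, 7.1, 15)
print(f"pooled sd = {sp:.3f}")

# Hypothetical toy data: the combined SD exceeds both group SDs
x_group = [0.0, 2.0]
y_group = [10.0, 12.0]
print(f"sx = {stdev(x_group):.3f}, sy = {stdev(y_group):.3f}, "
      f"combined = {stdev(x_group + y_group):.3f}")

# Hypothetical true effect of 0.3 sd: doubling n from 50 to 100 lowers beta
beta50, beta100 = type2_prob(50, 0.3), type2_prob(100, 0.3)
print(f"beta(n=50) = {beta50:.3f}, beta(n=100) = {beta100:.3f}")
```

The z test stands in for the t test in the Type II comparison only to keep the sketch within Python's standard library. The direction of the conclusion (larger n, smaller Type II error probability) is the same for the t test.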