Lothar Bauerdick

advertisement

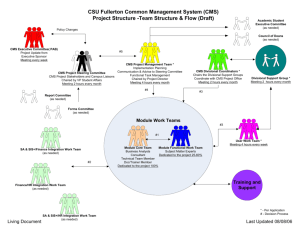

US CMS Software and Computing Project
US CMS Collaboration Meeting at FSU, May 2002
Lothar A T Bauerdick, Fermilab, Project Manager
U.S. CMS Collaboration Meeting, May 11, 2002

Scope and Deliverables
• Provide computing infrastructure in the U.S. (this needs R&D)
• Provide software engineering support for CMS
Mission is to develop and build “User Facilities” for CMS physics in the U.S.:
• To provide the enabling IT infrastructure that will allow U.S. physicists to fully participate in the physics program of CMS
• To provide the U.S. share of the framework and infrastructure software
Tier-1 center at Fermilab provides computing resources and support:
• User support for the “CMS physics community”, e.g. software distribution, help desk
• Support for Tier-2 centers, and for the physics analysis center at Fermilab
Five Tier-2 centers in the U.S.:
• Together will provide the same CPU/disk resources as the Tier-1
• Facilitate “involvement of the collaboration” in S&C development
• Prototyping and test-bed effort very successful
• Universities will “bid” to host a Tier-2 center, taking advantage of existing resources and expertise
• Tier-2 centers to be funded through the NSF program for “empowering Universities”
• Proposal to the NSF submitted Nov 2001

Project Milestones and Schedules
• Prototyping, test-beds, R&D started in 2000: “Developing the LHC Computing Grid” in the U.S.
• R&D systems, funded in FY2002 and FY2003: used for the “5% data challenge” (end 2003); release of the Software and Computing TDR (technical design report)
• Prototype T1/T2 systems, funded in FY2004: for the “20% data challenge” (end 2004); end of “Phase 1”, Regional Center TDR, start of deployment
• Deployment: 2005-2007, with 30%, 30%, 40% of costs: fully functional Tier-1/2 funded in FY2005 through FY2007, ready for the LHC physics run, start of the physics program
• S&C Maintenance and Operations: 2007 on

US CMS S&C Since UCR
• Consolidation of the project, shaping out the R&D program
• Project baselined in Nov 2001: workplan for CAS, UF, Grids endorsed
• CMS has become “lead experiment” for Grid work (Koen, Greg, Rick): US Grid projects PPDG, GriPhyN and iVDGL; EU Grid projects DataGrid, DataTAG; LHC Computing Grid Project
• Fermilab UF team, Tier-2 prototypes, US CMS testbed
• Major production efforts, PRS support
• Objectivity goes, LCG comes
• We do have a working software and computing system! Physics analysis
• CCS will drive much of the common LCG Application Area
Major challenges to manage and execute the project:
• Since fall 2001 we knew the LHC start would be delayed; new date April 2007
• Proposal to NSF in Oct 2001; things are probably moving now
• New DOE funding guidance (and lack thereof from NSF) is starving us in 2002-2004
• Very strong support for the project from individuals in CMS, Fermilab, Grids, FA

Other New Developments
• NSF proposal guidance AND DOE guidance are for (S&C+M&O) combined
• That prompted a change in US CMS line management: the Program Manager will oversee both the Construction Project and the S&C Project
• New DOE guidance for S&C+M&O is much below the S&C baseline + M&O request
• Europeans have achieved major UF funding, significantly larger relative to the U.S.
• LCG started, and expects the U.S. to partner with European projects
• LCG Application Area possibly imposes issues on the CAS structure
• Many developments and changes that invalidate or challenge much of what the PM tried to achieve
• Opportunity to take stock of where we stand in US CMS S&C before we try to understand where we need to go

Vivian has left S&C
• Thanks and appreciation for Vivian’s work of bringing the UF project to the successful baseline
• New scientist position opened at Fermilab for UF L2 manager and physics!
Other assignments:
• Hans Wenzel: Tier-1 Manager
• Jorge Rodriguez, U.Florida: pT2 L3 manager
• Greg Graham: CMS GIT Production Task Lead
• Rick Cavanaugh: US CMS Testbed Coordinator

Project Status
User Facilities status and successes:
• US CMS prototype systems: Tier-1, Tier-2, testbed
• Intense collaboration with US Grid projects, Grid-enabled MC production system
• User Support: facilities, software, operations for PRS studies
Core Application Software status and successes:
• See Ian’s talk
Project Office started:
• Project Engineer hired, to work on WBS, schedule, budget, reporting, documenting
• SOWs in place with CAS universities; MOUs, subcontracts, invoicing are coming
• In the process of signing the MOUs
• Have a draft MOU with iVDGL on prototype Tier-2 funding

Successful Base-lining Review
• “The Committee endorses the proposed project scope, schedule, budgets and management plan”
• Endorsement for the “scrubbed” project plan following the DOE/NSF guidance: $3.5M DOE + $2M NSF in FY2003 and $5.5M DOE + $3M NSF in FY2004!

CMS Produced Data in 2001
Simulated events, TOTAL = 8.4 M:
Caltech 2.50 M, FNAL 1.65 M, Bristol/RAL 1.27 M, CERN 1.10 M, INFN 0.76 M, Moscow 0.43 M, IN2P3 0.31 M, Helsinki 0.13 M, Wisconsin 0.07 M, UCSD 0.06 M, UFL 0.05 M
Reconstructed with pile-up, TOTAL = 29 TB:
CERN 14 TB, FNAL 12 TB, Caltech 0.60 TB, Moscow 0.45 TB, INFN 0.40 TB, Bristol/RAL 0.22 TB, UCSD 0.20 TB, IN2P3 0.10 TB, Wisconsin 0.05 TB, Helsinki/UFL 0.08 TB
Typical event sizes:
• Simulated: 1 CMSIM event = 1 OOHit event = 1.4 MB
• Reconstructed: 1 “10^33” event = 1.2 MB; 1 “2x10^33” event = 1.6 MB; 1 “10^34” event = 5.6 MB
These fully simulated data samples are essential for physics and trigger studies, and for the Technical Design Report for DAQ and Higher Level Triggers.
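The typical event sizes above turn an event count directly into a storage estimate. Below is a minimal sketch of that conversion. It assumes decimal units (1 TB = 10^6 MB) and ignores database and catalog overhead. The dictionary keys are illustrative labels, not CMS identifiers.

```python
# Typical per-event sizes (MB) from the "CMS Produced Data in 2001" slide.
# The dictionary keys are illustrative labels, not CMS identifiers.
EVENT_SIZE_MB = {
    "simulated": 1.4,   # 1 CMSIM event = 1 OOHit event
    "reco_1e33": 1.2,   # reconstructed, luminosity 10^33 cm^-2 s^-1
    "reco_2e33": 1.6,   # reconstructed, 2x10^33
    "reco_1e34": 5.6,   # reconstructed, 10^34
}

MB_PER_TB = 1_000_000  # assumption: decimal units


def storage_tb(n_events, kind):
    """Rough storage in TB for n_events of one kind, ignoring database overhead."""
    return n_events * EVENT_SIZE_MB[kind] / MB_PER_TB


if __name__ == "__main__":
    for kind in EVENT_SIZE_MB:
        print(f"1M {kind} events: {storage_tb(1_000_000, kind):.1f} TB")
```

For example, one million events reconstructed at 10^34 take about 5.6 TB, compared with about 1.2 TB at 10^33.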
Production Operations
• Production efforts are manpower intensive!
• At Fermilab (US CMS, PPDG): ∑ = 1.7 FTE sustained effort to fill those 8 roles (Fermilab Tier-1 production operations), plus the system support people that need to help if something goes wrong!!! Greg Graham, Shafqat Aziz, Yujun Wu, Moacyr Souza, Hans Wenzel, Michael Ernst, Shahzad Muzaffar + staff
• At U Florida (GriPhyN, iVDGL): Dimitri Bourilkov, Jorge Rodriguez, Rick Cavanaugh + staff
• At Caltech (GriPhyN, PPDG, iVDGL, USCMS): Vladimir Litvin, Suresh Singh et al.
• At UCSD (PPDG, iVDGL): Ian Fisk, James Letts + staff
• At Wisconsin: Pam Chumney, R. Gowrishankara, David Mulvihill + Peter Couvares, Alain Roy et al.
• At CERN (USCMS): Tony Wildish + many

US CMS Prototypes and Test-beds
• Tier-1 and Tier-2 prototypes and test-beds operational
• Facilities for event simulation including reconstruction
• Sophisticated processing for pile-up simulation
• User cluster and hosting of data samples for physics studies
• Facilities and Grid R&D

Tier-1 Equipment
[Photos: Chimichanga, Chocolat, Chalupa, Winchester RAID, IBM servers, CMSUN1, Dell servers, snickers]

Tier-1 Equipment
[Photos: frys (user), gyoza (test), popcorns (MC production)]

Using the Tier-1 System: User System
• Until the Grid becomes reality (maybe soon!), people who want to use computing facilities at Fermilab need to obtain an account
• That requires registration as a Fermilab user (DOE requirement)
• We will make sure that turn-around times are reasonably short; we have not heard complaints yet
• Go to http://computing.fnal.gov/cms/ and click on the "CMS Account" button, which will guide you through the process:
Step 1: Get a valid Fermilab ID
Step 2: Get a fnalu account and CMS account
Step 3: Get a Kerberos principal and krypto card
Step 4: Information for first-time CMS account users
• http://consult.cern.ch/writeup/form01/
• Got > 100 users, currently about 1 new user per week

US CMS User Cluster
[Diagram: nodes FRY1-FRY8 on 100 Mbps links to a GigaBit switch; server BIGMAC with SCSI 160 RAID, 250 GB]
• R&D on “reliable i/a service”: OS: Mosix? Batch system: FBSNG? Storage: disk farm?
• To be released June 2002! nTuple, Objy analysis etc.

User Access to Tier-1 Data
• Hosting of Jets/Met data; Muons will be coming soon
• Working on providing a powerful disk cache
[Diagram components: users, network, Snickers (IDE RAID, 1 TB), AMD server, AMD/Enstore interface, Enstore STKEN silo (> 10 TB)]
• Host redirection protocol allows adding more servers → scaling + load balancing

US CMS T2 Prototypes and Test-beds
• Tier-1 and Tier-2 prototypes and test-beds operational

California Prototype Tier-2 Setup
[Photos: UCSD, Caltech]
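The “User Access to Tier-1 Data” slide says a host redirection protocol lets more disk-cache servers be added for scaling and load balancing. Below is a minimal sketch of that idea, in which a redirector sends each client to the least-loaded server. The class, method and server names are hypothetical, and least-loaded selection is an assumed policy; the slide does not say how the real protocol chooses a server.

```python
class Redirector:
    """Hypothetical host redirector for a pool of disk-cache servers.

    Each client request is sent to the server with the fewest active
    transfers, so a newly added (idle) server absorbs new load first.
    """

    def __init__(self, servers):
        self.load = {name: 0 for name in servers}  # active transfers per server

    def add_server(self, name):
        self.load.setdefault(name, 0)

    def redirect(self):
        # min() returns the first least-loaded server in insertion order.
        target = min(self.load, key=self.load.get)
        self.load[target] += 1
        return target

    def release(self, name):
        # Called when a client finishes its transfer.
        if self.load[name] > 0:
            self.load[name] -= 1


if __name__ == "__main__":
    r = Redirector(["cache1", "cache2"])
    print([r.redirect() for _ in range(4)])  # alternates between the two servers
    r.add_server("cache3")
    print(r.redirect())                      # the new, idle server is chosen
```

With this scheme, adding a server requires no client changes: the redirector simply starts sending requests to the new server.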
Benefits Of US Tier-2 Centers
• Bring computing resources close to user communities: provide dedicated resources to regions (of interest and geographical); more control over localized resources, more opportunities to pursue physics goals
• Leverage additional resources, which exist at the universities and labs
• Reduce computing requirements of CERN (supposed to account for 1/3 of total LHC facilities!)
• Help meet the LHC computing challenge: provide a diverse collection of sites, equipment and expertise for development and testing; provide much-needed computing resources
• US CMS plans for about 2 FTE at each Tier-2 site + equipment funding, supplemented with Grid, university and lab funds (BTW: no I/S costs in the US CMS plan)
• Problem: how do you run a center with only two people that will have much greater processing power than CERN has currently?
• This involves facilities and operations R&D to reduce the operations personnel required to run the center, e.g. investigating cluster management software

U.S. Tier-1/2 System Operational
• CMS Grid integration and deployment on the U.S. CMS Test Bed
• Data challenges and production runs on Tier-1/2 prototype systems
• “Spring Production 2002” finishing: physics, trigger, detector studies; produce 10M events and 15 TB of data, plus 10M minimum-bias events, fully simulated including pile-up and fully reconstructed; large assignment to U.S. CMS
• Successful production in 2001: 8.4M events fully simulated, including pile-up, 50% in the U.S.; 29 TB of data processed, 13 TB in the U.S.

US CMS Prototypes and Test-beds
• All U.S. CMS S&C institutions are involved in DOE and NSF Grid projects
• Integrating Grid software into CMS systems
• Bringing CMS production onto the Grid
• Understanding the operational issues
• CMS directly profits from Grid funding
• Deliverables of Grid projects become useful for LHC in the “real world”
• Major success: MOP, GDMP

Grid-enabled CMS Production
• Successful collaboration with Grid projects!
• MOP (Fermilab, U.Wisconsin/Condor): remote job execution with Condor-G, DAGMan
• GDMP (Fermilab, European DataGrid WP2): file replication and replica catalog (Globus)
• Successfully used on the CMS testbed
• First real CMS production use finishing now!

Recent Successes with the Grid
• Grid-enabled CMS production environment. NB: MOP = “Grid-ified” IMPALA, a vertically integrated CMS application
• Brings together US CMS with all three US Grid projects. PPDG: Grid developers (Condor, DAGMan), GDMP (with WP2). GriPhyN: VDT, in the future also the virtual data catalog. iVDGL: pT2 sites and US CMS testbed.
• CMS Spring 2002 production assignment of 200k events to MOP: half-way through, transfer back to CERN next week
• This is being considered a major success for US CMS and Grids!
• Many bugs in Condor and Globus found and fixed
• Many operational issues needed, and still need, to be sorted out
• MOP will be moved into the production Tier-1/Tier-2 environment

Successes: Grid-enabled Production
Major milestone for US CMS and PPDG. From the PPDG internal review of MOP:
“From the Grid perspective, MOP has been outstanding. It has both legitimized the idea of using Grid tools such as DAGMAN, Condor-G, GDMP, and Globus in a real production environment outside of prototypes and trade show demonstrations. Furthermore, it has motivated the use of Grid tools such as DAGMAN, Condor-G, GDMP, and Globus in novel environments leading to the discovery of many bugs which would otherwise have prevented these tools from being taken seriously in a real production environment.
From the CMS perspective, MOP won early respect for taking on real production problems, and is soon ready to deliver real events. In fact, today or early next week we will update the RefDB at CERN which tracks production at various regional centers. This has been delayed because of the numerous bugs that, while being tracked down, involved several cycles of development and redeployment. The end of the current CMS production cycle is in three weeks, and MOP will be able to demonstrate some grid enabled production capability by then. We are confident that this will happen. It is not necessary at this stage to have a perfect MOP system for CMS Production; IMPALA also has some failover capability and we will use that where possible. However, it has been a very useful exercise and we believe that we are among the first team to tackle Globus and Condor-G in such a stringent and HEP specific environment.”

Successes: File Transfers
• In 2001 we were observing typical rates for large data transfers of e.g. CERN - FNAL 4.7 GB/hour
• After network tuning, using Grid tools (Globus URLcopy), we gain a factor of 10!
• Today we are transferring 1.5 TByte of simulated data from UCSD to FNAL at rates of 10 MByte/second! That almost saturates the network interfaces out of Fermilab (155 Mbps) and at UCSD (FastEthernet)…
• The ability to transfer a TeraByte in a day is crucial for the Tier-1/Tier-2 system
Many operational issues remain to be solved:
• GDMP is a Grid tool for file replication, developed jointly between the US and EU; a “show case” application for EU DataGrid WP2: data replication
• Needs more work and strong support from the VDT team (PPDG, GriPhyN, iVDGL), e.g. CMS “GDMP heartbeat” for debugging new installations and monitoring old ones
• Installation and configuration issues: releases of underlying software like Globus
• Issues with site security and e.g. firewalls
• Uses the Globus Security Infrastructure, which demands a “VO” Certification Authority infrastructure for CMS
• Etc. pp…
• This needs to be developed, tested and deployed, and shows that the USCMS testbed is invaluable!

DOE/NSF Grid R&D Funding for CMS (2001-2006)
• GriPhyN: total, including CS and all experiments: 2543, 2543, 2543, 2241; CMS staff: 582 per year
• iVDGL: total, including CS and all experiments: 2650, 2750, 2750, 2750, 2750; CMS equipment and CMS staff: 232, 234, 192, 336, 187, 358, 57, 390, 65, 390
• PPDG: total, including CS and all experiments: 3180 in each of three years; CMS: Caltech 187, FNAL 132, UCSD 80, total CMS 399 per year

Farm Setup
• Almost any computer can run the CMKIN and CMSIM steps using the CMS binary distribution system (US CMS DAR)
• As long as ample storage is available, the problem scales well
• This step is “almost trivially” put on the Grid, almost…

e.g. on the 13.6 TF, $53M TeraGrid?
[Figure: TeraGrid/DTF sites NCSA, SDSC, Caltech, Argonne, with site resources, HPSS/UniTree archives and external networks; NCSA/PACI 8 TF, 240 TB; SDSC 4.1 TF, 225 TB; www.teragrid.org]

Farm Setup for Reconstruction
• The first step of the reconstruction is Hit Formatting, where simulated data is taken from the Fortran files, formatted and entered into the Objectivity database.
• The process is sufficiently fast, and involves enough data, that more than 10-20 jobs will bog down the database server.

Pile-up simulation! (+30 minimum bias events)
• Unique at LHC due to high luminosity and short bunch-crossing time
• Up to 200 “minimum bias” events overlaid on interesting triggers
• Leads to “pile-up” in detectors, which needs to be simulated!
[Figure: all charged tracks with pt > 2 GeV; reconstructed tracks with pt > 25 GeV]
• This makes a CPU-limited task (event simulation) VERY I/O intensive!

Farm Setup for Pile-up Digitization
• The most advanced production step is digitization with pile-up. The response of the detector is digitized, and the physics objects are reconstructed and stored persistently. At full luminosity, 200 minimum bias events are combined with the signal events.
• Due to the large number of minimum bias events, multiple Objectivity AMS data servers are needed. Several configurations have been tried.

Objy Server Deployment: Complex
• 4 production federations at FNAL (uses catalog only to locate database files)
• 3 FNAL servers plus several worker nodes used in this configuration: 3 federation hosts with attached RAID partitions, 2 lock servers, 4 journal servers, 9 pileup servers

Example of CMS Physics Studies
• Resolution studies for jet reconstruction
[Figure panels: QCD 2-jet events, no FSR, with no pile-up, no track reconstruction, no HCAL noise; QCD 2-jet events with FSR, full simulation with tracks and HCAL noise]
• Full detector simulation is essential to understand jet resolutions
• Indispensable to design realistic triggers and understand rates at high luminosity
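The “Farm Setup for Reconstruction” slide notes that more than 10-20 concurrent Hit Formatting jobs bog down the Objectivity database server. A common remedy is to cap the number of simultaneous writers. Below is a minimal sketch using a bounded semaphore. The class is hypothetical, the limit of 10 is taken from the lower end of the slide's range, and a short sleep stands in for the real database writes.

```python
import threading
import time


class ThrottledFormatter:
    """Caps how many (hypothetical) Hit Formatting jobs write to the database at once."""

    def __init__(self, max_writers=10):
        self._slots = threading.BoundedSemaphore(max_writers)
        self._lock = threading.Lock()
        self.active = 0  # jobs currently holding a database slot
        self.peak = 0    # highest number of simultaneous writers observed

    def format_hits(self):
        with self._slots:  # blocks while max_writers jobs are already writing
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            time.sleep(0.01)  # placeholder for reading Fortran files and writing to the database
            with self._lock:
                self.active -= 1

    def run(self, n_jobs):
        """Start n_jobs concurrent jobs and wait for all; return the observed peak."""
        threads = [threading.Thread(target=self.format_hits) for _ in range(n_jobs)]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return self.peak


if __name__ == "__main__":
    formatter = ThrottledFormatter(max_writers=10)
    print("peak concurrent writers:", formatter.run(50))
```

Any number of jobs can be submitted, but at most `max_writers` hit the database at the same time.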
Pile up & Jet Energy Resolution
• Pile-up contributions to jets are large and have large variations
• Can be estimated event-by-event from the total energy in the event
• Large improvement if the pile-up correction is applied (red curve), e.g. 50% → 35% at ET = 40 GeV
[Figure: jet energy resolution]
• Physics studies depend on full, detailed detector simulation: realistic pile-up processing is essential!

Tutorial at UCSD
• Very successful 4-day tutorial with ~40 people attending
• Covering use of CMS software, including CMKIN/CMSIM, ORCA, OSCAR, IGUANA
• Covering physics code examples from all PRS groups
• Covering production tools and environment, and Grid tools
• Opportunity to get people together: UF and CAS engineers with PRS physicists, Grid developers and CMS users
• The tutorials have been very well thought through and are very useful for self-study, so they will be maintained
• It is amazing what we already can do with CMS software, e.g. impressive to see the IGUANA visualization environment, including “home made” visualizations
• However, our system is (too?, still too?) complex
• We maybe need more people taking a day off to go through the self-guided tutorials

FY2002 UF Funding
• Excellent initial effort and DOE support for User Facilities. Fermilab is established as the Tier-1 prototype and a major Grid node for LHC computing. Tier-2 sites and testbeds are operational and contribute to production and R&D. The head start for U.S. efforts has pushed CERN's commitment to support remote sites.
• The FY2002 funding has given major headaches to the PM: DOE funding of $2.24M was insufficient to ramp the Tier-1 to base-line size; the NSF contribution is unknown as of today
• According to plan we should have more people and equipment at the Fermilab T1: need some 7 additional FTEs and more equipment funding
• This has been strongly endorsed by the baseline reviews
• All European RCs (DE, FR, IT, UK, even RU!) have support at this level of effort

Allocation for FY2002 (costs in AY$ x 1000)
                                     Caltech  UCSD   FNAL   NEU  Princeton  UC Davis  UFlorida   TOTAL
Core Applications Software (CAS) FTE     2.0     -    2.0   3.0        1.0       1.0         -     9.0
User Facilities (UF) FTE                 1.0   1.0    5.5     -          -         -       1.5     9.0
TOTAL FTE                                3.0   1.0    7.5   3.0        1.0       1.0       1.5    18.0
CAS personnel (salary, PC, travel,...)   310     -    270   620        150       185         -   1,535
UF personnel (salary, PC, travel,...)    155   155    795     -          -         -       232   1,337
UF Tier 1 equipment                        -     -    140     -          -         -         -     140
UF Tier 2 equipment                      120     -      -     -          -         -       112     232
Project Office, Management Reserve         -     -    390   274          -         -         -     664
TOTAL COST                               585   155  1,595   894        150       185       344   3,908

Plans For 2002 - 2003
• Finish Spring Production challenge until June
• User cluster, user federations
• Upgrade of facilities ($300k)
• Develop CMS Grid environment toward LCG Production Grid
• Move CMS Grid environment from testbed to facilities
• Prepare for first LCG-USUF milestone, November?
• Tier-2, iVDGL milestones with ATLAS, SC2002
• LCG-USUF Production Grid milestone in May 2003
• Bring Tier-1/Tier-2 prototypes up to scale
• Serving user community: user cluster, federations, Grid-enabled user environment
• UF studies with persistency framework
• Start of physics DCs and computing DCs
• CAS: LCG “everything is on the table, but the table is not empty”. Persistency framework: prototype in September 2002, release in July 2003. DDD and OSCAR/Geant4 releases. New strategy for visualization / IGUANA. Develop a distributed analysis environment with Caltech et al.

Funding for UF R&D Phase
• There is lack of funding and lack of guidance for 2003-2005
• NSF proposal guidance AND DOE guidance are for (S&C+M&O) combined
• New DOE guidance for S&C+M&O is much below the S&C baseline + M&O request
• Fermilab USCMS projects oversight has proposed minimal M&O for 2003-2004 and large cuts for S&C, given the new DOE guidance
• The NSF has “ventilated the idea” to apply a rule of NSF funding = 81/250 × DOE funding
• This would lead to very serious problems in every year of the project: we would lack 1/3 of the requested funding ($14.0M/$21.2M)

DOE/NSF funding shortfall
[Figure: left panel, DOE project costs (11/2001) vs DOE-FNAL funding profile (4/2002); right panel, NSF project costs (11/2001) vs NSF guidance using the 81/250 rule. Million AY$ by FY 2002-2007, stacked by CAS, UF Tier-1 labor and h/w, UF Tier-2 labor and h/w, Project Office and Management Reserve]
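The 81/250 rule on the “Funding for UF R&D Phase” slide and the “lack 1/3 of the requested funding” claim are simple ratios, so they can be checked directly. Below is a minimal sketch; the function names are illustrative. The DOE inputs 2.24, 2.9 and 3.5 M$ are the FY2002 figure quoted on the FY2002 UF Funding slide and values that survive on the chart.

```python
from fractions import Fraction

# Ratio floated by the NSF: NSF funding = 81/250 x DOE funding.
NSF_PER_DOE = Fraction(81, 250)


def nsf_under_rule(doe_musd):
    """NSF amount (M$) implied by the 81/250 rule for a given DOE amount (M$)."""
    return doe_musd * NSF_PER_DOE


def shortfall(available_musd, requested_musd):
    """Fraction of the request that would not be funded."""
    return 1 - available_musd / requested_musd


if __name__ == "__main__":
    for doe in (2.24, 2.9, 3.5):
        print(f"DOE {doe:.2f} M$ -> NSF {nsf_under_rule(doe):.2f} M$")
    print(f"shortfall: {shortfall(14.0, 21.2):.0%}")
```

The rule gives about 0.73, 0.94 and 1.13 M$, matching the NSF-guidance values that remain on the chart. $14.0M out of $21.2M leaves a shortfall of about 34%, i.e. roughly one third.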
FY2003 Allocation à la Nov2001
[Figure: FY2003 budget allocation, 11/2001. Total costs $5.98M (DOE $4.53M, NSF $1.45M), broken down into Management Reserve, Project Office, Facilities Equipment, Facilities Labor and Software Labor, with DOE, NSF and iVDGL shares; axis in $M]

Europeans Achieved Major UF Funding
• Funding for European User Facilities in their countries now looks significantly larger than UF funding in the U.S.
• This statement is true relative to the size of their respective communities
• It is in some cases even true in absolute terms!!
• Given our funding situation: are we going to be a partner for those efforts?
• BTW: USATLAS proposes major cuts in UF/Tier-1 “pilot flame” at BNL

How About The Others: DE
Regional Data and Computing Centre Germany (RDCCG), Forschungszentrum Karlsruhe Technik und Umwelt
RDCCG evolution (available capacity, LHC+nLHC):
month/year      11/2001  4/2002  4/2003  4/2004  2005  2007  2007+
CPU / kSI95           1       9      19      46   134   896   +276
Disk / TByte          7      45     113     203   437  1421   +450
Tape / TByte          7     111     211     345   806  3737  +2818
30% rolling upgrade each year after 2007.
Networking evolution 2002 - 2005:
• RDCCG to CERN/FermiLab/SLAC (permanent point to point): 1 GBit/s - 10 GBit/s; 2 GBit/s could be arranged on a very short timescale
• RDCCG to general Internet: 34 MBit/s - 100 MBit/s; current situation, generally less affordable than the point-to-point links
FTE evolution 2002 - 2005: Support 5 - 30; Development 8 - 10
New office building to accommodate 130 FTE in 2005

How About The Others: IT
TIER1 resources, personnel (N.):
Manager 1; Deputy 1; LHC Experiments Software 2; Programs, Tools, Procedures 2; FARM Management & Planning 2; ODB & Data Management 2; Network (LAN+WAN) 2; Other Services (Web, Security, etc.) 2; Administration 2; System Managers & Operators 6; Total 22
New: 9 in total; Outsource: 6 in total
Tier2 personnel is of the same order of magnitude.

How About The Others: RU
Russian Tier2-Cluster
• Cluster of institutional computing centers with Tier2 functionality and summary resources at the 50-70% level of the canonical Tier1 center for each experiment (ALICE, ATLAS, CMS, LHCb): analysis; simulations; users data support
• Participating institutes: Moscow: SINP MSU, ITEP, KI, …; Moscow region: JINR, IHEP, INR RAS; St. Petersburg: PNPI RAS, …; Novosibirsk: BINP SB RAS
• Coherent use of distributed resources by means of DataGrid technologies
• Active participation in the LCG Phase 1 prototyping and data challenges (at the 5-10% level)
            2002 Q1  2002 Q4  2004 Q4  2007
CPU, kSI95        5       10    25-35   410
Disk, TB          6       12    50-70   850
Tape, TB         10       20   50-100  1250
• Network, LCG/commodity: 155/… Mbps; 2-3/10; 20-30/15-30 Gbps/…
• FTE: 10-12 (2002 Q1), 12-15 (2002 Q2), 25-30 (2004 Q4)
How About The Others: UK
UK Tier1/A Status (John Gordon, LCG, 13 March 2002)
• Current EDG testbed setup: 14 dual 1 GHz PIII, 500 MB RAM, 40 GB disks; Compute Element (CE), Storage Element (SE), User Interfaces (UI), Information Node (IN) + Worker Nodes (WN)
• Central facilities (non-Grid): 250 CPUs, 10 TB disk, 35 TB tape (capacity 330 TB)
• Hardware purchase for delivery today: 156 dual 1.4 GHz, 1 GB RAM, 30 GB disks (312 CPUs); 26 disk servers (dual 1.266 GHz), 1.9 TB disk each; expand the capacity of the tape robot by 35 TB
Projected staff effort [SY], GridPP:
• WP1 Workload Management 0.5 [IC]
• WP2 Data Management 1.5++ [Ggo]
• WP3 Monitoring Services 5.0++ [RAL, QMW]
• Security ++ [RAL]
• WP4 Fabric Management 1.5 [Edin., L'pool]
• WP5 Mass Storage 3.5++ [RAL, L'pool]
• WP6 Integration Testbed 5.0++ [RAL/M'cr/IC/Bristol]
• WP7 Network Services 2.0 [UCL/M'cr]
• WP8 Applications 17.0: ATLAS/LHCb (Gaudi/Athena) 6.5 [Oxf, Cam, RHUL, B'ham, RAL]; CMS 3.0 [IC, Bristol, Brunel]; CDF/D0 (SAM) 4.0 [IC, Ggo, Oxf, Lanc]; BaBar 2.5 [IC, M'cr, Bristol]; UKQCD 1.0 [Edin.]
• Tier1/A 13.0 [RAL]
• Total: GridPP 49.0++, @CERN 10.0 -> 25.0, CS 6.0 (2.0 [IC], 1.0 [Oxf], 1.0 [HW], 1.0 [Oxf], 1.0 [UCL]); = 80++

How About The Others: FR
CC-IN2P3 (Denis Linglin, LCG workshop, 13 March 2002)
• ONE computing centre for IN2P3-CNRS & DSM-CEA (HEP, astroparticle, NP, ...): 45 people
• National: 18 laboratories, 40 experiments, 2500 people/users
• International: Tier-1 / Tier-A status for several US, CERN and astroparticle experiments
• 0.5 PBytes databases, hierarchical storage; ~700 CPUs, 20 kSI-95; 40 TB disk
• Network & QoS; custom services "à la carte"
• Budget: ~6-7 M€/year, plus ~2 M€/year for personnel
FY2002 - FY2004 Are Critical in US
• Compared to European efforts, the US CMS UF efforts are very small
• In FY2002 the US CMS Tier-1 is sized at 4 kSI CPU and 5 TB storage
• The Tier-1 effort is 5.5 FTE; in addition there are 2 FTE CAS and 1 FTE Grid
• S&C baseline 2003/2004: the Tier-1 effort needs to be at least $1M/year above FY2002 to sustain the UF R&D and to become a full part of the LHC Physics Research Grid
• Need some 7 additional FTEs and more equipment funding at the Tier-1; part of this effort would go directly into user support
• Essential areas are insufficiently covered now and need to be addressed in 2003 at the latest: fabric management, storage resource management, networking, system configuration management, collaborative tools, interfacing to Grid i/s, system management & operations support
• This has been strongly endorsed by the S&C baseline review in Nov 2001
• All European RCs (DE, FR, IT, UK, even RU!) have support at this level of effort
Country  #CMS collaborators  Tier-1 equipment  Tier-1 personnel   Other
USA      463                 4 kSI, 5 TB       5.5 Op, 4 Dev
UK       98                  20 kSI, 50 TB     13 Op, 20 Dev      GridPP: >25M£
RU       271                 10 kSI, 12 TB     13 Op
NL       -                   9 kSI, 20 TB      10 Op
IT       262                 20 kSI, 80 TB     13 Op, >> Dev      ItGrid; 5 Mio€ to CERN
FR       137                 40 kSI, 55 TB     47 Op/Dev @ Lyon
DE       95                  9 kSI, 45 TB      5 Op, 8 Dev
(x2 for LHC total)

The U.S. User Facilities Will Seriously Fall Behind European Tier-1 Efforts Given The Funding Situation!
To keep US leadership and not put US-based science at a disadvantage, additional funding is required: at least $1M/year at Tier-1 sites.

LHC Computing Grid Project
• $36M project 2002-2004, half equipment, half personnel: “Successful” RRB
• Expect to ramp to >30 FTE in 2002, and ~60 FTE in 2004
• About $2M/year equipment
• e.g. UK delivers 26.5% of LCG funding AT CERN ($9.6M)
• The US CMS has requested $11.7M IN THE US + CAS $5.89M; current allocation (assuming CAS, iVDGL) would be $7.1M IN THE US
• Largest personnel fraction in the LCG Applications Area
• “All” personnel to be at CERN: “People staying at CERN for less than 6 months are counted at a 50% level, regardless of their experience.”
• CCS will work on LCG AA projects; US CMS will contribute to LCG
This brings up several issues that US CMS S&C should deal with:
• Europeans have decided to strongly support the LCG Application Area
• But at the same time we do not see more support for the CCS efforts
• CMS and US CMS will have to do, at some level, a rough accounting of LCG AA vs CAS and of LCG facilities vs US UF

Impact of LHC Delay
• Funding shortages in FY2001 and FY2002 already led to significant delays
• Others have done more; we are seriously understaffed and do not do enough now
• We lack 7 FTE already this year, and can start hiring only in FY2003
• This has led to delays and will further delay our efforts
• Long term: unknown; predictions of equipment costs are too uncertain to evaluate possible cost savings due to a delay of roughly a year. However, schedules become more realistic.
• Medium term: major facilities (LCG) milestones shift by about 6 months. The 1st LCG prototype grid moved to end of 2002, which is more realistic now. End of R&D moves from end 2004 to mid 2005. A detailed schedule and work plan are expected from the LCG project and CMS CCS (June).
• No significant overall cost savings for the R&D phase
• We are already significantly delayed, and not even at half the effort of what other countries are doing (UK, IT, DE, RU!!)
• Catching up on our delayed schedule is feasible if we can manage to hire 7 people in FY2003 and to support this level of effort in FY2004
• Major issue with lack of equipment funding: re-evaluation of equipment deployment will be done during 2002 (PASTA)

US S&C Minimal Requirements
The DOE funding guidance for the preparation of the US LHC research program approaches adequate funding levels around when the LHC starts in 2007. However, it is heavily back-loaded and does not accommodate the baselined software and computing project or the needs for pre-operations of the detector in 2002-2005. We take up the charge to better understand the minimum requirements, and to consider non-standard scenarios for reducing some of the funding shortfalls, but ask the funding agencies to explore all available avenues to raise the funding level.
The LHC computing model of a worldwide distributed system is new and needs significant R&D. The experiments are approaching this with a series of "data challenges" that will test the developing systems and will eventually yield a system that works. US CMS S&C has to be part of the data challenges (DC) and provide support for trigger and detector studies (UF subproject). It also has to deliver engineering support for CMS core software (CAS subproject).

UF Needs
The UF subproject is centered on a Tier-1 facility at Fermilab, which will drive the US CMS participation in these Data Challenges. The prototype Tier-2 centers will become integrated parts of the US CMS Tier-1/Tier-2 facilities. Fermilab will be a physics analysis center for CMS. LHC physics with CMS will be an important component of Fermilab's research program. Therefore Fermilab needs to play a strong role as a Tier-1 center in the upcoming CMS and LHC data challenges.
The minimal Tier-1 effort would require at least doubling the current Tier-1 FTEs at Fermilab and granting at least $300k of equipment funding per year. This level represents the critical threshold. The yearly cost of this minimally sized Tier-1 center at Fermilab would approach $2M after an initial $1.6M in FY03 (lower because of hiring delays).
The minimal Tier-2 prototypes would need $400k for operations support; the rest would come out of iVDGL funds.

CAS Needs
Ramping down the CAS effort is not an option, as it would have very adverse effects on CMS. CCS manpower is now needed even more, to drive and profit from the new LCG project: there is no reason to believe that the LCG will provide a “CMS-ready” solution without CCS being heavily involved in the process. We can afford slips or delays even less.
Possible savings from the new close collaboration between CMS and ATLAS through the LCG project could give the engineering effort some contingency, which is to date missing from the project plan. That contingency (which would first have to be earned) could not be released before the end of 2005.
The yearly cost of keeping CAS at its current level is about $1.7M (DOE $1000k, NSF $700k), including escalation and reserve.
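The yearly figures quoted on the "UF Needs" and "CAS Needs" slides can be added up to see what the minimal Tier-1, Tier-2 and CAS lines come to per year. The Python sketch below is illustrative only: it assumes that "approach $2M" means exactly $2000k, models only FY03 to FY05 (the R&D phase), and leaves out the project office line. All names in it (`minimal_cost_k` and the constants) are hypothetical.

```python
# Illustrative sketch: minimal yearly S&C cost in $k, from the figures on the
# "UF Needs" and "CAS Needs" slides. Assumptions (not from the slides):
# "approach $2M" is taken as exactly 2000k, only FY03-FY05 are modeled,
# and the project office line is not included.

MINIMAL_TIER1_K = {2003: 1600, 2004: 2000, 2005: 2000}  # Tier-1 at Fermilab
TIER2_OPS_K = 400  # Tier-2 operations support; the rest comes from iVDGL
CAS_DOE_K = 1000   # CAS engineering, DOE share
CAS_NSF_K = 700    # CAS engineering, NSF share


def minimal_cost_k(fiscal_year: int) -> dict:
    """Return per-item and total minimal cost in $k for one modeled fiscal year.

    Raises KeyError for years outside the modeled FY03-FY05 range.
    """
    items = {
        "tier1": MINIMAL_TIER1_K[fiscal_year],
        "tier2": TIER2_OPS_K,
        "cas": CAS_DOE_K + CAS_NSF_K,
    }
    # Sum the three items before the "total" key is added.
    items["total"] = sum(items.values())
    return items


if __name__ == "__main__":
    for fy in sorted(MINIMAL_TIER1_K):
        costs = minimal_cost_k(fy)
        print(f"FY{fy}: total ${costs['total']}k {costs}")
```

Under these assumptions the sketch gives $3700k for FY03 and $4100k for each of FY04 and FY05, before any project office costs.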
Minimal US CMS S&C until 2005
Definition of minimal: if we cannot afford even this, the US will not participate in the CMS Data Challenges and LCG milestones in 2002-2004.
For US CMS S&C the minimal funding for the R&D phase (until 2005) would include (PRELIMINARY):
- Tier1: $1600k in FY03 and $2000k in the following years
- Tier2: $400k per year from the NSF to sustain the support for Tier2 manpower
- CAS: $1M from DOE and $700k from the NSF
- Project Office: $300k (includes reserve)
A failure to provide this level of funding would lead to severe delays and inefficiencies in the US LHC physics program. Considering the large investments in the detectors and the large yearly costs of the research program, such an approach would be neither cost-efficient nor productive.
The ramp-up of the UF to the final system, beyond 2005, will need to be aligned with the plans of CERN and other regional centers. After 2005 the funding profile seems to approach the demand.

Where do we stand?
Setup of an efficient and competent s/w engineering support for CMS
- David is happy and CCS is doing well
- “proposal-driven” support for det/PRS engineering support
Setup of a User Support organization out of UF (and CAS) staff
- PRS is happy (but needs more)
- “proposal driven” provision of resources: data servers, user cluster
- Staff to provide data sets and nTuples for PRS, small specialized production
- Accounts, software releases, distribution, help desk, etc.
- Tutorials done at Tier-1 and Tier-2 sites
Implemented & commissioned a first Tier-1/Tier-2 system of RCs
- UCSD, Caltech, U.Florida, U.Wisconsin, Fermilab
- Showed that Grid tools can be used in “production” and contribute greatly to the success of Grid projects and middleware
- Validated use of network between Tier-1 and Tier-2: 1TB/day!
Developing a production-quality Grid-enabled User Facility
- “impressive organization” for running production in US
- Team at Fermilab and individual efforts at Tier-2 centers
- Grid technology helps to reduce the effort
- Close collaboration with Grid projects infuses additional effort into US CMS
- Collaboration between sites (including ATLAS, like BNL) on facility issues
Starting to address many of the “real” issues of Grids for Physics
- Code/binary distribution and configuration, remote job execution, data replication
- Authentication, authorization, accounting and VO services
- Remote database access for analysis

What have we achieved?
- We are participating in and driving a worldwide CMS production DC
- We are driving a large part of the US Grid integration and deployment work; this goes beyond the LHC and even HEP
- We have shown that the Tier-1/Tier-2 User Facility system in the US can work!
- We definitely are on the map for LHC computing and the LCG
- We are also threatened with being starved of funding over the next years
- The FA have failed to recognize the opportunity for continued US leadership in this field, as others like the UK are realizing and supporting!
- We are thrown back to a minimal funding level, and even that has been challenged
- But this is the time when our partners at CERN will expect to see us deliver and work with the LCG

Conclusions
- The US CMS S&C Project looks technically pretty sound
- Our customers (CCS and US CMS Users) appear to be happy, but want more
- We also need more R&D to build the system, and we need to do more to measure up to our partners
- We started in 1998 with some supplemental funds; we are a DOE line item now
- We have received less than requested for a couple of years now, but in FY2002 the project has become bitterly under-funded; cf.
the reviewed and endorsed baseline
- The funding agencies have failed to provide funding for the US S&C and to provide FA guidance for US User Facilities
- The ball is in our (US CMS) court now
- It is not an option to do “just a little bit” of S&C
- The S&C R&D is a project: baseline plans, funding profiles, change control
- It is up to US CMS to decide
- I ask you to support my request to build up the User Facilities in the US

THE END

UF Equipment Costs
Detailed information on Tier-1 facility costing: see document in your handouts!
All numbers in FY2002 $k

Fiscal Year                   2002    2003    2004    2005    2006    2007   Total  2008 (Ops)
1.1 T1 Regional Center           0       0       0   2,866   2,984   2,938   8,788       2,647
1.2 System Support              29      23       0      35       0       0      87          15
1.3 O&M                          0       0       0       0       0       0       0           0
1.4 T2 Regional Centers        232     240     870   1,870   1,500   1,750   6,462       1,250
1.5 T1 Networking               61      54      42     512     462     528   1,658         485
1.6 Computing R&D              511     472     492       0       0       0   1,476           0
1.7 Det. Con. Support           84      53      52       0       0       0     189           0
1.8 Local Comp. Supp.           12      95     128      23      52      23     333          48
Total                          929     938   1,584   5,306   4,998   5,239  18,992       4,446
Total T1 only                  697     698     714   3,436   3,498   3,489  12,530       3,196

Total Project Costs (AY$M)

Fiscal Year                 2002    2003    2004    2005    2006    2007
Project Office              0.32    0.48    0.49    0.51    0.52    0.54
  DOE                       0.15    0.31    0.32    0.33    0.34    0.35
  NSF                       0.17    0.17    0.18    0.18    0.19    0.19
Software Personnel          1.49    1.72    2.14    2.25    2.36    2.48
  DOE                       0.87    0.91    0.96    1.01    1.06    1.11
  NSF                       0.62    0.81    1.18    1.24    1.30    1.37
UF Personnel                1.14    2.26    3.00    5.26    6.99    7.89
  for Tier-1 (DOE)          0.83    1.97    2.48    4.33    5.42    6.28
  for Tier-2 (NSF)          0.31    0.29    0.52    0.93    1.57    1.62
UF Equipment                0.45    0.75    1.51    5.35    5.19    5.52
  for Tier-1 (DOE)          0.45    0.70    0.71    3.44    3.50    3.49
  for Tier-2 (NSF)          0.00    0.05    0.80    1.91    1.69    2.03
Management Reserve          0.34    0.77    0.71    1.34    1.51    1.96
  DOE                       0.23    0.64    0.45    0.91    1.03    1.44
  NSF                       0.11    0.13    0.27    0.43    0.47    0.52
Total Costs                 3.73    5.98    7.86   14.71   16.57   18.39
  Total DOE                 2.53    4.53    4.91   10.02   11.35   12.67
  Total NSF                 1.20    1.45    2.94    4.69    5.22    5.73

U.S. CMS Tier-1 RC Installed Capacity

Fiscal Year                 2002    2003    2004    2005    2006    2007
Simulation CPU (SI95)      2,000   3,000   4,000   7,200  28,800  72,000
Analysis CPU (SI95)          750   2,100   4,000   8,000  32,000  80,000
Server CPU (SI95)             50     140     270   1,500   6,000  15,000
Disk (TB)                     16      31      46      65     260     650

Total resources, U.S. CMS Tier-1 and all Tier-2: CPU 310,000 SI95, disk 1,400 TB (310 kSI95 today is ~10,000 PCs)

Alternative Scenarios
Q: revise the plans so as not to give CMS and ATLAS identical scope?
- This has never been tried in HEP: experiments have always been competitive
- The UF model is NOT to run computer centers, but to have an experiment-driven effort to get the physics environment in place
- S&C is engineering support for the physics project: outsourcing engineering to a non-experiment-driven (common) project would mean a complete revision of the physics activities. This would require fundamental changes to experiment management and structure, which are not in the purview of the US part of the collaboration
- Specifically, the data challenges are not done only, or even primarily, for the S&C project; they will be conducted as a coherent effort of the physics, detector AND S&C groups, with the goal of advancing the physics, detector AND S&C efforts
- The DC are why we are here. If we cannot participate, there would be no point in going for an experiment-driven UF

Alternative Scenarios
Q: are Tier-2 resources spread too thin?
- The Tier-2 efforts should be as broad as we can afford.
We are including university (non-funded) groups, like Princeton.
- If the role of the Tier-2 centers were just to provide computing resources, we would not distribute them but would concentrate on the Tier-1 center. Instead, the model is to put some resources at the prototype T2 centers, which allows us to pull in additional resources at these sites. This model seems to be rather successful.
- iVDGL funds are being used for much of the effort at prototype T2 centers. Hardware investments at the Tier-2 sites up to now have been small. The project planned to fund 1.5 FTE at each site (this funding is not yet there). In CMS we see several FTE of additional manpower at those sites, which comes out of the base program and is being attracted from CS and other communities through involvement in Grid projects

Alternative Scenarios
Q: additional software development activities to be combined?
- This will certainly happen. Concretely, we have already started to plan the first large-scale ATLAS-CMS common software project, the new persistency framework.
- Do we expect significant savings in the manpower efforts? These could be on the order of 20-30%, if these efforts could be closely managed. However, the management is not in US hands, but in the purview of the LCG project. Also, this project itself is ADDITIONAL effort that was not necessary when Objectivity was meant to provide the persistency solution.
- Generally, we do not expect very significant changes in the estimates for the total engineering manpower required to complete the core software efforts; the possible savings would give a minimal contingency to the engineering effort, which is to date missing in the project plan (to be earned first, then released in 2005)

Alternative Scenarios
Q: are we losing? Are there real cost benefits?
- Any experiment that does not have a kernel of people to run the data challenges will lose significantly
- The commodity is people, not equipment
- Sharing of resources is possible (and will happen), but we need to keep minimal R&D equipment. $300k/year for each T1 is very little funding for doing that. Below that we should just go home…
- Tier2: the mission of the Tier2 centers is to enable universities to be part of the LHC research program. That function will be cut to the same extent that its funding is cut.
- To separate the running of the facilities from the experiment’s effort: this is a model that we are developing for our interactions with Fermilab CD; this is the ramping to “35 FTE” in 2007, not the “13 FTE” now; some services are already being “effort-reported” to CD-CMS. We have to get the structures in place to get this right, and there will be overheads involved
- I do not see real cost benefits in any of these for the R&D phase. I prefer not to discuss the model for 2007 now, but we should stay open-minded. However, if we want to approach unconventional scenarios, we need to prepare for them carefully. That may start in 2003-2004?

UF + PM
- Control room logbook
- Code dist, dar, role for grid
- T2 work
- CCS schedule? More comments on the job
- Nucleation point vs T1 user community
- New hires
- Tony’s assignment, prod running
- Disk tests, benchmarking, common work w/ BNL and iVDGL facility grp
- Monitoring, ngop, ganglia, iosif’s stuff
- Mention challenges to test bed/MOP: config, certificates, installations, and help we get from grid projects: VO, ESNET CA, VDT
- UF workplan