Abstract

advertisement

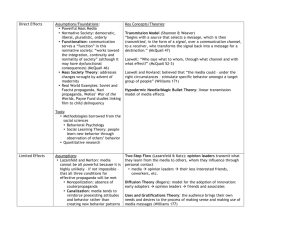

Data Analysis in Survey Research: Contesting ‘Methodological Hegemony’ Through the Choices of Paul Lazarsfeld

More than 70 years have passed since Paul Lazarsfeld and his colleagues interviewed registered voters in Erie County, Ohio, about decision-making processes during a presidential election campaign. The results of that study, conducted in 1940 and subsequently published in The People’s Choice (Lazarsfeld, Berelson & Gaudet, 1948), led to a paradigm shift in mass-communication theory and research, from the hypodermic-needle model to the two-step flow (Rogers, 1994; Schramm, 1997): political information, the study suggested, flowed from the media to opinion leaders and then from opinion leaders to those less engaged in politics. While scholars have questioned the validity of that shift (Chaffee & Hochheimer, 1985), few have disputed its perceived existence and reification (see Glynn et al., 1999, p. 399; Katz, 1987). The present study is concerned less with the two-step flow than with the quantitative research philosophy of Paul Lazarsfeld. Regarding much of his work as exploratory, Lazarsfeld did not believe in applying tests of statistical significance to the data that he and his colleagues gathered through survey research (Leahey, 2005, p. 18). He conceptualized social research as an avenue for formulating substantive hypotheses about human behavior (Rogers, 1994, pp. 300-301; Selvin, 1957, p. 527), and his cross-tabulations reflected that conceptualization (see Lazarsfeld, 1955). Seeking to contribute both theoretically and methodologically to the study of political communication and public opinion, this study applies log-linear analysis to data gathered in the 2008 National Election Studies (NES). It demonstrates how analyses of contingency tables can reduce violations of statistical assumptions while preserving substantive information.
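The abstract does not specify which log-linear models are fitted to the NES data. As a minimal illustration of what log-linear analysis of a contingency table involves, the sketch below fits the simplest case, the independence model log(m_ij) = u + u_A(i) + u_B(j) for a two-way table, and tests it with the likelihood-ratio statistic G² = 2 Σ n_ij log(n_ij / m_ij) on (I - 1)(J - 1) degrees of freedom. The function names (`independence_fit`, `chi2_sf`) and the counts are hypothetical and are not drawn from the 2008 NES; the chi-square tail probability is computed with a plain series expansion so the example needs only the standard library.

```python
import math


def independence_fit(table):
    """Fit the log-linear independence model to a two-way table of counts.

    Model (illustrative notation): log(m_ij) = u + u_A(i) + u_B(j).
    Its maximum-likelihood fitted counts are row_total * col_total / n.
    Returns (fitted, g2, df).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    fitted = [[r * c / n for c in col_totals] for r in row_totals]
    # Likelihood-ratio statistic G^2; empty cells contribute 0 (n log n -> 0).
    g2 = 2.0 * sum(
        obs * math.log(obs / fit)
        for obs_row, fit_row in zip(table, fitted)
        for obs, fit in zip(obs_row, fit_row)
        if obs > 0
    )
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return fitted, g2, df


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square(df) variable at x.

    Uses the series for the regularized lower incomplete gamma function
    P(df/2, x/2); adequate for illustration, not a replacement for a
    statistics library.
    """
    if x <= 0:
        return 1.0
    s, z = df / 2.0, x / 2.0
    term = 1.0 / s
    total = term
    for k in range(1, 10_000):
        term *= z / (s + k)
        total += term
        if term < total * 1e-15:
            break
    lower = math.exp(s * math.log(z) - z - math.lgamma(s)) * total
    return max(0.0, 1.0 - lower)


# Hypothetical 2 x 2 table of counts (rows: variable A, columns: variable B).
counts = [[10, 20], [30, 40]]
fitted, g2, df = independence_fit(counts)
print(fitted, round(g2, 3), df, round(chi2_sf(g2, df), 3))
```

A small G² relative to its degrees of freedom (a large tail probability) means the independence model reproduces the observed table well; a large G² indicates an association between the two variables that the model omits.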
Through its methodological approach, the study aims to inform analyses of secondary data more generally, providing a framework for testing relationships among nominal and ordinal variables. Ordinal measures, in particular, are frequently treated as “quasi-interval” without appropriate checks of the randomness, normality and equal-variance assumptions. But as this study suggests, survey data need not be analyzed with techniques that are merely “close enough” when techniques offering an equal or superior treatment of the data are available.
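The abstract does not spell out the framework itself. One common way to test a relationship between two survey items while holding a third variable fixed is to fit a hierarchical log-linear model such as [AB][AC], which states that B and C are independent within each level of A, by iterative proportional fitting (IPF), and then to compare observed and fitted counts with G² on I(J - 1)(K - 1) degrees of freedom. The sketch below assumes that approach; the function name `fit_ab_ac`, the tolerance settings and the counts are hypothetical. Note that this model treats every variable as nominal; ordinal-specific log-linear models (for example, linear-by-linear association models with assigned category scores) would need a different fitting routine.

```python
import math


def fit_ab_ac(table, tol=1e-10, max_iter=100):
    """Fit the hierarchical log-linear model [AB][AC] to an I x J x K table
    (indexed table[i][j][k]) by iterative proportional fitting (IPF).

    [AB][AC] says B and C are independent within each level of A.
    Returns (fitted, g2, df).
    """
    I, J, K = len(table), len(table[0]), len(table[0][0])
    fitted = [[[1.0] * K for _ in range(J)] for _ in range(I)]
    for _ in range(max_iter):
        before = [v for plane in fitted for row in plane for v in row]
        # Scale so the fitted A x B margin (summed over C) matches the data.
        for i in range(I):
            for j in range(J):
                cur = sum(fitted[i][j])
                f = sum(table[i][j]) / cur if cur > 0 else 0.0
                fitted[i][j] = [v * f for v in fitted[i][j]]
        # Scale so the fitted A x C margin (summed over B) matches the data.
        for i in range(I):
            for k in range(K):
                cur = sum(fitted[i][j][k] for j in range(J))
                obs = sum(table[i][j][k] for j in range(J))
                f = obs / cur if cur > 0 else 0.0
                for j in range(J):
                    fitted[i][j][k] *= f
        after = [v for plane in fitted for row in plane for v in row]
        # Stop once a full cycle no longer changes the fitted counts.
        if max(abs(a - b) for a, b in zip(before, after)) < tol:
            break
    # Likelihood-ratio statistic G^2; empty observed cells contribute 0.
    g2 = 2.0 * sum(
        table[i][j][k] * math.log(table[i][j][k] / fitted[i][j][k])
        for i in range(I) for j in range(J) for k in range(K)
        if table[i][j][k] > 0
    )
    df = I * (J - 1) * (K - 1)
    return fitted, g2, df


# Hypothetical 2 x 2 x 2 counts: A = stratum, B and C = two survey items.
counts = [[[30, 10], [15, 25]], [[12, 18], [20, 30]]]
fitted, g2, df = fit_ab_ac(counts)
print(round(g2, 3), df)
```

For [AB][AC] the fitted counts also have the closed form n_ij+ n_i+k / n_i++, so IPF converges after one cycle. The iterative loop is kept because the same scaling steps extend to models such as [AB][AC][BC], which have no closed-form estimates.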