ppt - Department of Computer Science


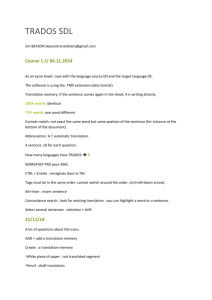

Empiricism from TMI-1992 to AMTA-2002 to AMTA-2012
Have IBM Models 1-5 failed to solve all the world’s problems?
Kenneth W. Church
AT&T Labs-Research
church@att.com

The organizers asked me…
• What's changed since TMI-92 (if anything)?
  – TMI-92: great excitement over the use of aligned parallel corpora to help human translators (translation tools)
  – Also, much controversy over IBM Models 1-5
• So what's happened since 1992?
  – Empiricism has come of age
    • Textbooks: Charniak, Jelinek, Manning & Schütze, Jurafsky & Martin
    • Textbooks → courses in many universities around the world
  – What used to be considered radical is now accepted practice
    • Evaluation is practically required for publication
  – Mercer’s fighting words: More data is better data!
    • Aren’t as shocking when Brill makes the case a decade later
  – The new field of Machine Learning has absorbed many good (and formerly controversial) ideas including
    • IBM Models 1-5
    • Yarowsky's Word Sense Disambiguation
      – Grew out of Machine Translation,
      – But is now widely cited in Machine Learning as an early example of co-training

October 10, 2002 AMTA 2

Has the pendulum swung too far?
• What happened since TMI-1992 (if anything)?
  – 1980-2000: Revival of Empirical Methods
• Have empirical methods become too popular?
  – Has too much happened since TMI-1992?
• I worry that the pendulum has swung so far that
  – we are no longer training students for the possibility
    • that the pendulum might swing the other way
• We ought to be preparing students with a broad education including:
  – Statistics and Machine Learning
  – as well as Linguistic Theory

Empiricism: Academia → Commercial Practice
• Empiricism has not only come of age in academic venues (e.g., conferences, textbooks)
  – but also in commercial venues
• Translation tools (e.g., alignment):
  – Academia → commercial practice (Trados)
• Good Applications for Crummy MT
  – Even better apps:
    • CLIR (cross-language information retrieval)
    • MT in web search engines (Systran & AltaVista)

So, what do I expect to happen over the next decade?
• Scale, stupid:
  – There is a lot of excitement about the web
    • Not only large and growing and sexy
    • But also contains a rich structure of hypertext links
  – I will propose a bait and switch strategy
    • Bait: public Internet
    • Switch: the real target is something larger and more valuable
      – but more elusive
• Good Apps for Crummy NLP:
  – Spend more time on:
    • what we can do with what we have
    • and not spend all our resources on the core technology
  – There is a lot to a killer app:
    • Great technology helps, but there is a lot more
• Similar arguments apply beyond MT to much of NLP and speech

Overview
Historical rational reconstruction emphasizing empiricism & business
• Before TMI-1992: How to Cook a Demo
• TMI-1992 Debate: Rationalism v. Empiricism
• Hybrid/Tools:
  – Kay’s Workstation
  – Good Apps for Crummy MT
  – Trados
• What happened to the IBM-Approach to MT?
  – Support for human translators (Translation Tools: Trados)
  – Fully automatic apps (CLIR)
  – Academia (Machine Learning)
• Revival of Empiricism: A Personal Perspective
  – The IBM-approach to MT was always controversial
  – But there are lots of less controversial spin-offs:
    • Tools, lexicography apps, word sense, machine learning
• Future (AMTA-2012): Bait and Switch Strategy
  – Bait: Use Public Internet to develop and test and socialize new ways of extracting value
  – Switch: Apply learnings to larger and more valuable private linguistic repositories
• Market sizing: translation business is too small for fortune-500 companies
  – Major lasting contribution of IBM-Approach: academic (machine learning)
  – MT Strategy: work on MT because it is fun, but apply learnings elsewhere to pay the bills

How To Cook A Demo (TMI-1992)
• Great fun!
• Effective demos
  – Theater, theater, theater
  – Production quality matters
  – Entertainment >> evaluation
  – Strategic vision >> technical correctness
• Maturity: Many fields have come of age since 1950s
  – Computer Science, Artificial Intelligence, Machine Learning, Natural Language, Machine Translation, Empiricism
• Success/Catastrophe
  – Warning: demos can be too effective
  – Dangerous to raise unrealistic expectations
• Seeds of empiricism
  – Empirical methods: speech → language

Let’s go to the video tape! (Lesson: manage expectations)
• Lots of predictions
  – Entertaining in retrospect
  – Nevertheless, many of these people went on to very successful careers: president of MIT, Microsoft exec, etc.
1. Machine Translation (1950s) (video)
  – Classic example of a demo embarrassment in retrospect
2. Translating telephone (late 1980s) (video)
  – Pierre Isabelle pulled a similar demo because it was so effective
  – The limitations of the technology were hard to explain to public
    • But well understood by research community
    • We aren’t asking what happened to translating telephones (if anything)
3. Apple (~1990) (video)
  – Still having trouble setting appropriate expectations
  – Strategy: Point & Click → Speech recognition
  – What happened to that (if anything)… Has it moved to Microsoft?
4. Andy Rooney (~1990): reset expectations (video)

TMI-1992 Debate: Rationalism v. Empiricism
• Self-organizing systems (IBM)
  – Statistics do it all (no human intuition)
• Stone Soup (Wilks)
  – Statistics don’t do nothing (all human intuition)
• Hybrid/Tools (Kay’s Workbench)
  – Proper Place of Men and Machines in MT
    • Use people for what they are good at
      – Easy vocabulary and easy grammar
    • Use machines for what they are good at
      – Technical terminology, translation memories, re-use of previously translated texts
  – Good Application of Crummy MT
  – Trados
  – Pragmatism: low hanging fruit
    • Supply: do what we can do (with or without stats)
    • Demand: do what is worth doing

Stone Soup Debate (mid-1990s)
• IBM-style MT is obnoxious
  – Agreed
• It has all been done before
  – Agreed
• Stone soup: they’ve been adding intuition to their stats
  – Agreed
• It doesn’t work (Systran is better)
  – Systran is also better than Pangloss
• It isn’t about empiricism, evaluation, etc.
  – Martin Kay’s advice about debating
• Natural Ceiling
  – Chomsky used this argument against Shannon
  – In the part of speech case, the ceiling was broken with stats
• Lack of data (lots of Canadian Hansards, but not much else)
  – We don’t hear this argument so much any more…
• The Future: Hybrid Approaches
  – Agreed

Bottom line: Hybrid/Tools
• Yorick will get the last word
• But from his abstract, it looks like he’s going to tell us that I was right all along
• And just in case he doesn’t…
  – Let me say it now: I told you so!

Kay’s Workstation (1980)
Proper Place of Men and Machines in MT
• The translator’s [workstation] will not run before it can walk.
  – It will be called on only for that for which its masters have learned to trust it.
  – It will not require constant infusions of new ad hoc devices that only expensive vendors can supply.
• It is a framework that will gracefully accommodate the future contributions
  – that linguistics and computer science are able to make.
• One day it will be built because
  – its very modesty assures its success.
• It is to be hoped that it will be built with taste by people
  – who understand languages and computers well enough to know how little it is that they know.

CWARC: Canadian Workplace Automation Research Center (1989)
• A PC
• Network access to the Termium terminology database CD-ROM
• WordPerfect
• CompareRite (a diff tool)
• TextSearch (a concordance tool)
• Mercury/Termex (terminology)
• Procomm (remote access to data banks via telephone modem)
• Seconde Memoire (French verb inflections)
• Software Bridge (tool for converting word processing files from one commercial format into another)

Good Apps for Crummy MT
• It should set reasonable expectations
• It should make sense economically
• It should be attractive to the intended users
• It should exploit the strengths of the machine
  – and not compete with the strengths of the human
• It should be clear to the users what the system can and cannot do, and
• It should encourage the field to move forward toward a sensible long-term goal.

Evaluation: MultiLingual 13:6
A Trade Magazine for Translators

| Page | Description of Article | Self-Organizing / Stone Soup / Hybrid/Tools |
|---|---|---|
| 18 | Reviews: Reverso Pro 5, Reverso Expert Products offer useful new features | √ |
| 21 | T-Remote Memory | √ |
| 24 | Content Management: Systems for Managing Content Transformations | √ |
| 31 | Comparing Tools Used in Software Localization | √ |
| 37 | Working With Machine Translation | √* |
| 42 | Integrating Translation Tools With Document Creation (CMU → Déjà Vu) | √ |
| 49 | A Look At Two Web Translation Portals | √ |
| 53 | Going Global With Lingo Systems | √* |

MultiLingual 13:6 Very Positive on Tools
• T-Remote Memory (p. 21)
  – Combination of translation memory, workflow & distributed work centers (work at home)
    • Moore’s Law: large revenues → better technology
  – “Hold on to your seats, translators and agencies, our industry is about to change again… Forecast shakeup: extreme.”
• Comparing Tools Used in Software Localization (p. 31): A Consumer Reports-like Review
  – Presupposition: tools are ready for wide-spread use
    • “Finally, I am told that there are people out there for whom price does matter.”
  – ATA Conference (~1990): the translator and the MT salesman

MultiLingual 13:6 is mostly positive on technology, but…
• Working With Machine Translation (p. 37)
  – Although the formatting of the source text is extremely simple, the machine translation output requires a lot of painstaking postediting
    • Grim reality: mark-up is more valuable than translation
  – The verdict is unanimous
  – The translators, who have gained a lot of experience of EU topics, prefer to work without Systran
• A Look at Two Web Translation Portals (p. 49)
  – At first I thought it was a machine translation site..., but soon discovered that it was not one of those “free translation” sites.
    • ≈ $0.25/word

Machine Translation + Post-Editing: Long History of Mixed Results
• Positive:
  – Magnusson-Murray (1985, p. 180): Although you can expect to at least double your translator’s output, the real cost-saving in MT lies in complete electronic transfer of information and the integration into a fully electronic publishing system.
  – Lawson (1984, p. 6): Substantial rises in translations output, by as much as 75 per cent in one case, are being reported by users of the Logos machine translation (MT) system after only a few months.
  – Tschira (1985): For one type of text (data description manuals), we observed an increase in throughput of 30 per cent.
Surprisingly, automation (MT + Post-editing) can be more expensive than manual baseline
• Negative:
  – Macklovitch (1991, p. 3): The HT production chain was significantly faster than the MT production chain.
  – Kay (1980): Proper Place of Men and Machines in MT
  – ALPAC (1966, p. 19): The postedited translation took slightly longer to do and was more expensive than conventional human translation… Dr. J. C. R. Licklider of IBM and Dr. Paul Garvin of Bunker-Ramo said they would not advise their companies to establish such a service.
• Credibility gap:
  – Why so little consistency? 200%? 75%? 30%? 0%?
  – Why haven’t these products done better in the marketplace?
  – The tools argument (terminology and translation memories) works better with translators than post-editing
  – Translators may be biased
    • but they have considerable expertise (and influence)
    • Automation will be easier if they believe in it

What has happened to the IBM-Approach to Machine Translation?
• Support for human translators (MultiLingual 13:6)
  1. Terminology: translators don’t need help with the easy vocabulary and the easy grammar
  2. Translation Memory: translators are often asked to translate the same material again and again (e.g., revisions of manuals)
  3. Alignment
• Fully automatic
  – CLIR: cross-language information retrieval
  – Translating web pages
• Academic fields
  – Machine Learning: most important contribution
  – Corpus-based Lexicography: spreading into lots of other fields including politics (Nunberg)

Use of Political Labels in Major Newspapers
Geoffrey Nunberg, Commentary broadcast on "Fresh Air," March 19, 2002

| Legislator | Total instances in newspapers database | Pct. within 7 words of relevant label | Total instances in "liberal" papers | Pct. within 7 words of label in "liberal" papers |
|---|---|---|---|---|
| Liberal (average) | | 4.8% | | 3.78% |
| Paul Wellstone | 2939 | 10.90% | 578 | 8.48% |
| Barney Frank | 8501 | 4.70% | 1439 | 3.89% |
| Tom Harkin | 10,147 | 3.70% | 1784 | 2.02% |
| Ted Kennedy | 17,197 | 3.00% | 2444 | 2.74% |
| Barbara Boxer | 8977 | 2.00% | 3093 | 1.78% |
| Conservative (average) | | 3.6% | | 2.89% |
| Jesse Helms | 19,874 | 9.10% | 4718 | 6.02% |
| Tom DeLay | 6351 | 3.60% | 1859 | 2.90% |
| John Ashcroft | 10,187 | 2.10% | 1157 | 3.03% |
| Dick Armey | 9222 | 2.10% | 1460 | 1.44% |
| Trent Lott | 18,048 | 1.40% | 4976 | 1.05% |

Surprisingly, liberals are more likely to be labeled as such than conservatives
• In fact, I [Nunberg] did find a big disparity in the way the press labels liberals and conservatives,
  – but not in the direction that Goldberg claims.
• On the contrary: the average liberal legislator has a 30% greater likelihood of being identified with a partisan label than the average conservative does.
• The press describes
  – Barney Frank as a liberal 2.5 times as frequently
  – as it describes Dick Armey as a conservative.
• It gives Barbara Boxer a partisan label
  – almost twice as often as it gives one to Trent Lott.
• And while it isn't surprising that the press applies the label conservative to Jesse Helms more often than to any other Republican in the group,
  – it describes Paul Wellstone as a liberal
  – 20% more frequently than that.

1990s Revival of Empiricism
• Empiricism was at its peak in the 1950s
  – Dominating a broad set of fields
    • Ranging from psychology (behaviorism)
    • To electrical engineering (information theory)
• At the time, it was common practice in linguistics to classify words not only by meaning but also by collocations (word associations)
  – Firth: “You shall know a word by the company it keeps”
  – Collocations: Strong tea v. powerful computers
  – Word Associations: bread and butter, doctor/nurse
• Regrettably, interest in empiricism faded
  – with Chomsky’s criticism of n-grams in Syntactic Structures (1957)
  – and Minsky and Papert’s criticism of neural networks in Perceptrons (1969).
• Availability of massive amounts of data (even before the web)
  – “More data is better data”
  – Quantity >> Quality (balance)
• Pragmatic focus:
  – What can we do with all this data?
  – Better to do something than nothing at all
• Empirical methods (and focus on evaluation): Speech → Language

Shannon’s Noisy Channel Model
• I → Noisy Channel → O
• $I' \approx \arg\max_I \Pr(I \mid O) = \arg\max_I \Pr(I)\,\Pr(O \mid I)$
  – Language Model: $\Pr(I)$
  – Channel Model: $\Pr(O \mid I)$

Language Model

| Word | Rank | More likely alternatives |
|---|---|---|
| We | 9 | The This One Two A Three Please In |
| need | 7 | are will the would also do |
| to | 1 | |
| resolve | 85 | have know do… |
| all | 9 | The This One Two A Three Please In |
| of | 2 | The This One Two A Three Please In |
| the | 1 | |
| important | 657 | document question first… |
| issues | 14 | thing point to |

Channel Model

| Application | Input | Output |
|---|---|---|
| Speech Recognition | writer | rider |
| OCR (Optical Character Recognition) | all | a1l |
| Spelling Correction | government | goverment |

Using (Abusing) Shannon’s Noisy Channel Model: Part of Speech Tagging and Machine Translation
• Speech
  – Words → Noisy Channel → Acoustics
• OCR
  – Words → Noisy Channel → Optics
• Spelling Correction
  – Intended Text → Noisy Channel → Typos
• Part of Speech Tagging (POS):
  – POS → Noisy Channel → Words
• Machine Translation:
  – English → Noisy Channel → French

Statistical MT
• E → Noisy Channel → F
• $E' = \arg\max_E \Pr(E)\,\Pr(F \mid E)$
• Language Model, Pr(E):
  – Trigram model (borrowed from speech recog)
• Channel Model, Pr(F|E):
  – Based on aligned parallel corpora
  – Models 1-5: alignment
• Mercer & Church (Computational Linguistics, 1993)
  – Statistical MT may fail for reasons advanced by Chomsky
  – Regardless of its ultimate success or failure,
  – There is a growing community of researchers in corpus-based linguistics who believe it will produce valuable lexical resources
    • Bilingual concordances
    • Translation tools
    • Training & testing material for word sense disambig (senseval)

Word Sense Disambiguation
• Knowledge Acquisition Bottleneck
  – Bar-Hillel (1960)
  – Expert systems don’t scale
  – Sense-tagged text: expensive
  – Parallel text!
• Translation = sense-tagged text
  – Sentence (judicial sense) → peine
  – Sentence (syntactic sense) → phrase
• Yarowsky: bilingual → monolingual
• One sense per discourse
• Machine Learning: early example of co-training

| | Rationalism | Empiricism |
|---|---|---|
| Well-known advocates | Chomsky, Minsky | Shannon, Skinner, Firth, Harris |
| Model | Competence Model | Noisy Channel Model |
| Contexts of Interest | Phrase-Structure | N-Grams |
| Goals | All and Only; Explanatory; Theoretical | Minimize Prediction Error (Entropy); Descriptive; Applied |
| Linguistic Generalizations | Agreement & Wh-movement | Collocations & Word Associations |
| Parsing Strategies | Principle-Based, CKY (Chart), ATNs, Unification | Forward-Backward (HMMs), Inside-outside (PCFGs) |
| Applications | Understanding: Who did what to whom | Recognition: Noisy Channel Applications |

Revival of Empiricism: A Personal Perspective
• At MIT, I was solidly opposed to empiricism
  – But that changed soon after moving to AT&T Bell Labs (1983)
• Letter-to-Sound Rules (speech synthesis)
  – Names: Letter stats → Etymology → Pronunciation (video)
  – NetTalk: Neural Nets (video)
    • Demo: great theater → unrealistic expectations
    • Self-organizing systems v. empiricism
    • Machine Learning v. Corpus-based Linguistics
    • I did it, I did it, I did it, but…
• Part of Speech Tagging (1988)
• Word Associations (Hanks)
  – Mutual info → collocations & word associations
    • Collocations: Strong tea v. powerful computers
    • Word Associations: bread and butter, doctor/nurse
• Good-Turing Smoothing (Gale)
• Aligning Parallel Corpora (inspired by MT)
• Word Sense Disambiguation
  – Bilingual → Monolingual
• Even if IBM’s approach fails for MT → lasting benefit (tools, linguistic resources, academic contributions to machine learning)

Strategy is Important
www.elsnet.org
• Our field is doing better and better!
  – It used to be hard to prepare a talk for a CL audience
    • because there was almost nothing that you could assume everyone knew.
  – The field will really have arrived when a course in speech and language processing is a normal part of every undergraduate and graduate Computer Science, Electronic Engineering, and Linguistics programme
    • and we're a long way from that
• But things are improving…
• Now have several textbooks: Manning & Schütze, Jurafsky and Martin
  – A quick search of the web: textbooks → courses (around the world)
• There were, of course, many other obstacles
  – that limited the size of the field.
• It used to be hard to join in on the fun
  – because only a few large industrial labs could afford to collect data
• Thanks to data collection efforts such as LDC and ELSNET and the web
  – Data is no longer the problem it used to be
  – Of course, you can never have too much of a good thing…
• Tools also used to be a problem….

Learn from Theoretical Computer Science
• There is always, though, more
  – we could do to promote our field
• Learn from Theoretical Computer Science
  – Theory has paid more attention to teaching
    • than we have
  – They have also worked hard on strategy
    • www.research.att.com/~dsj/nsflist.html
• The theory community regularly exchanges lists of open problems along with difficulty ratings
  – Students know before they solve a problem whether it is worth a conference paper or a superstar award

Strategy: not urgent, but important
• Many orgs (.edu, .com, .gov) work hard on strategy
• Plenty of examples on the web:
  – www.nsf.gov/pubs/2001/nsf0104/strategy.htm
  – www.darpa.mil/body/mission.html
  – medg.lcs.mit.edu/doyle/publications/sdcr96.pdf
  – www.gridforum.org/L_About/about.htm
• Hard to say why strategy is important
  – But I have noticed, at least within AT&T, that groups that work hard on strategy have grown and prospered over the years
  – Strategy is never as urgent as the next conference paper deadline, but it is probably more important

Strategy documents have impact (even if it appears that they are being ignored)
• Organizations may or may not follow their own recommendations
• The discussion that produces the strategy document is extremely valuable, nevertheless,
  – perhaps more so than anything that happens after the doc is finalized
• Strategy panels offer a forum for people to meet
  – and look at the field from a broader perspective
• In addition, the theory community has observed that even after the people involved in the original discussion have long since forgotten the outcome
  – Recommendations continue to live on
  – and broaden the best and most aggressive students for years

Strategy Discussions in Our Field
• There are a few discussions of strategy within our field:
  – http://www.elsnet.org/about.html
  – http://www.ldc.upenn.edu/ldc/about/ldc_intro.html
  – http://www-nlpir.nist.gov/projects/duc/papers/
  – LREC workshops (to order proceedings, see www.lrec-conf.org)
• LDC link developed a decade ago
  – Largely responsible for the success of LDC
  – If more groups in our field put the same kind of energy into strategy,
    • There would be more success stories like the LDC
• A delightful "near miss" is Martin Kay's reflections on ICCL and COLING
  – Establishes direction for the format of Coling conferences in a “classic” Martin-style
• Proposal: convince Martin to write a doc in the same delightful style
  – Establish direction for the field rather than atmosphere for Coling

Bait and Switch Strategy
www.elsnet.org
• Bait: public Internet
  – Large, sexy, available, rich hypertext structure
• Switch: as large as the web is
  – There are larger & more valuable private repositories
    • Private Intranets & telephone networks
  – Exclusivity → Value
    • No one cares about data that everyone can have
    • Just as Groucho Marx doesn’t want to be in a club that…
• Strategy: Use the public Internet to develop, test and socialize new ways to extract value from large linguistic repositories
  – Value to society: Apply solutions to private repositories
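
A rough sense of scale for the claim that private telephone traffic is larger than the public web can be worked out from figures quoted elsewhere in the talk (200 million US telephone lines, 1 hour/day/line, roughly 1 word per second, Google ≈ 100 billion words, BNC ≈ 100 million words, perhaps 10% recordable). The sketch below only redoes that back-of-envelope arithmetic; the constant and function names are illustrative, and the inputs are the talk's order-of-magnitude estimates rather than measurements.

```python
# Back-of-envelope estimate using the figures quoted in the talk.
# All inputs are rough, order-of-magnitude numbers, not measurements.

TELEPHONE_LINES = 200_000_000            # US telephones (about 1 line/person)
SECONDS_PER_LINE_PER_DAY = 60 * 60       # usage: 1 hour/day/line
WORDS_PER_SECOND = 1                     # assumption from the talk: 1 sec ~ 1 word
GOOGLE_WORDS = 100_000_000_000           # Google collection: ~100 billion words
BNC_WORDS = 100_000_000                  # British National Corpus: ~100 million words
RECORDABLE_FRACTION = 0.10               # "perhaps 10% can be recorded"


def telephone_words_per_day() -> int:
    """Words spoken over US telephone lines per day under the assumptions above."""
    return TELEPHONE_LINES * SECONDS_PER_LINE_PER_DAY * WORDS_PER_SECOND


def in_google_units(words: float) -> float:
    """Express a word count as a multiple of the Google collection size."""
    return words / GOOGLE_WORDS


spoken = telephone_words_per_day()
print(f"Google / BNC size ratio:        {GOOGLE_WORDS // BNC_WORDS}")
print(f"Telephone words per day:        {spoken:.2e}")
print(f"Google-sized collections/day:   {in_google_units(spoken):.1f}")
print(f"Recordable collections per day: {in_google_units(spoken * RECORDABLE_FRACTION):.2f}")
```

Under these assumptions the telephone network carries about 7 Google-sized collections per day, which the talk rounds to 10; even the recordable tenth is most of a Google collection every day.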
Call Centers: An Intelligence Bonanza
• Some companies are collecting information with technology designed to monitor incoming calls for service quality.
• Last summer, Continental Airlines Inc. installed software from Witness Systems Inc. to monitor the 5,200 agents in its four reservation centers.
• But the Houston airline quickly realized that the system, which records customer phone calls and information on the responding agent's computer screen, also was an intelligence bonanza, says André Harris, reservations training and quality-assurance director.

Bait: Use Web to establish: More data is better data
• Shocking at TMI-92 (Mercer), but less so a decade later (Brill)
• EMNLP-02 best paper: Using the Web to Overcome Data Sparseness
  – Larger corpora (Google) >> smaller corpora (British National Corpus) for predicting psycholinguistic judgements.
  – Suggested in the conclusions that web counts are better than standard smoothing techniques (back-off) for language modelling
  – Really exciting! Performance on a broad range of computational linguistics tasks will improve as we collect more and more data
  – The rising tide of data will lift all boats!
• Brill (AskMSR Question Answering):
  – One can do remarkably well in TREC question answering competitions by using a search engine like Google and very little else
• Norvig (ACL-02 invited talk): ditto
• Google is also very good at finding collocations/associations
  – http://labs1.google.com/sets
    • Cat & dog → animals
    • Cat & more → Unix commands!
  – We used to try to do similar things a decade ago, but the results were not as good, probably because we were working with relatively tiny corpora in the sub-billion-word range
  – Unix commands and many other subjects (esp taboo subjects) are overrepresented on web
• Quantity v. quality/corpus size v. balance
  – Is collecting more data better than smoothing?
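
The collocation and word-association work mentioned in the talk (mutual information, "strong tea" v. "powerful computers") can be illustrated with a small pointwise mutual information (PMI) score over adjacent word pairs. This is a minimal sketch on a made-up toy corpus, not the method or data used in any of the cited work; `bigram_pmi` and the token list are hypothetical names introduced here.

```python
import math
from collections import Counter


def bigram_pmi(tokens):
    """Return PMI (in bits) for every adjacent word pair in `tokens`.

    PMI(x, y) = log2( P(x, y) / (P(x) * P(y)) ), with probabilities
    estimated by relative frequency. No smoothing is applied, so the
    scores are unreliable for rare pairs (one reason corpus size matters).
    """
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_unigrams = len(tokens)
    n_bigrams = len(tokens) - 1
    scores = {}
    for (x, y), count in bigrams.items():
        p_xy = count / n_bigrams
        p_x = unigrams[x] / n_unigrams
        p_y = unigrams[y] / n_unigrams
        scores[(x, y)] = math.log2(p_xy / (p_x * p_y))
    return scores


# Toy corpus (invented for illustration): "strong tea" and
# "powerful computers" recur; "powerful tea" appears once.
toy_tokens = (
    "strong tea strong tea strong tea "
    "powerful computers powerful computers powerful tea"
).split()

pmi = bigram_pmi(toy_tokens)
for pair in [("strong", "tea"), ("powerful", "computers"), ("powerful", "tea")]:
    print(pair, round(pmi[pair], 2))
```

On this toy input the habitual pairs score higher than "powerful tea"; with real text the same ranking only becomes stable once counts are large, which is the point the slide makes about corpus size.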
Question Answering & Google

http://labs1.google.com/sets
• Cat: Dog, Horse, Fish, Bird, Rabbit, Cattle, Rat, Livestock, Mouse, Human
• more: cat, ls, rm, mv, cd, cp, mkdir, man, tail, pwd
• England: France, Germany, Italy, Ireland, Spain, Scotland, Belgium, Canada, Austria, Australia
• Japan: China, India, Indonesia, Malaysia, Korea, Taiwan, Thailand, Singapore, Australia, Bangladesh

How Large is Large?
• Web → Renewed Excitement
  – Large, rich hypertext structure & publicly available
  – Google = 1000 * BNC
    • Google: 100 Billion Words
    • British National Corpus (BNC): 100 Million Words
• It is often said that the web is the largest repository but…
• Changes to copyright laws could unlock vast quantities of data: www.lexisnexis.com
• Private Intranets and telephone networks >> Public Web
  – FCC (trends.html): 200 million telephones in USA (1 line/person)
    • Usage: 1 hour/day/line
    • Assume 1 sec ≈ 1 word → 10 Google collections/day
  – Currently, Intranets (data) ≈ telephones (voice)
    • But data is growing faster than voice
• Admittedly, much of the data on Intranets cannot be distributed
  – And much of the speech on the telephone networks cannot be recorded
  – But attitudes are changing
    • It used to be considered rude to have a telephone answering machine
    • Now it is considered rude not to have one
  – Between answering machines and call centers, perhaps 10% can be recorded

In the past, recording all this data would have been prohibitively expensive
• Thanks to Moore’s Law
  – Storage costs have been falling faster than transport
  – And will continue to do so for some time
• Even at current prices, transport >> storage
  – Long-distance telephone calls: $0.05/min
  – Disk space: $0.005/min
• If I am willing to pay for a call
  – I might as well keep the speech online for a long time
• Similar comments hold for data (web pages)
  – If I am willing to pay to fetch a web page
    • I might as well cache it for a long time
    • Why flush a page if there is any chance that it might be requested again?
  – Web caches → crawlers
    • Go find the pages that I might ask for and keep them forever
    • Storage is cheap (compared to transport)

Recommendations: Bait and Switch Strategy
• Papers:
  – Keep up the good work!
  – There is considerable interest in eval on corpora
  – There will be more interest in how well methods port to new corpora
  – More interest in how performance scales with size
  – Hopefully corpus size helps
    • but of course, all the data in the world will not solve all the world’s problems
  – Need to understand when more data will help
    • And when it is better to do something else
      – revival of linguistics

More Bait and Switch Recommendations: Investments in infrastructure
• In addition to traditional data collection efforts focused on publicly available linguistic repositories
  – We ought to think about private repositories, as well.
• Potential: Huge impact on size of private repositories
  – By making it more convenient to capture private data, and
  – By demonstrating that there is value in doing so.
• For example, most of us do not keep voice mail for long
  – though I have been using Scanmail to copy voice mail to email
  – and like many people, I keep a lot of email online for a long time
• Unfortunately, tools for searching email and other private repositories are not as good as the tools for searching public repositories (Google)

Market Opportunities for Translation
• CACM (with Rau): Commercial Opportunities for NLP
  – Do we count garage outfits funded by grants?
  – Fortune-500 perspective: min of $100 million
  – Identified two application areas
    • Word Processing (Microsoft)
    • Information Retrieval (Lexis-Nexis/Web)
  – Did not identify translation
• How large is translation market?
Huge estimates:
  – $10+ Billion (Eurolang)
  – Comparable to AT&T’s revenues for consumer services
  – Telcos are a major employer (unlike translation)
• Estimates of Market Size
  – English-only (ASCII):
    • Pairs of English Speakers
  – Monolingual (ISO/Unicode)
    • Pairs of speakers who share a language
  – Multi-lingual (translation)
    • Pairs of speakers
[Figure: regions labeled English, Monolingual, Multi-lingual]

Surprisingly Little Demand for Multi-lingual Applications: Translation & Interpretation
• AT&T Language Line Lesson:
  – Surprisingly, monolingual market >> multi-lingual market
    • Lots of demand for telephone service where both parties speak the same language
    • We thought there would be even more demand for a translating telephone
    • Because there are more pairs of people who don’t share a common language than do
    • But people don’t talk (much) to people they don’t know
  – AT&T Language Line Service:
    • Speech to speech interpretation over the phone (low tech except conf calling/work-at-home)
    • Plus a traditional writing to writing translation service
  – Interpretation market: focused on emergencies: police, hospital (too small for AT&T)
  – Translation market: focus on technical manuals (also, too small for AT&T)
  – Surprise: interpretation market ≠ translation market; demand for language pair
    • Interpretation (speech to speech): depends on number of domestic speakers
    • Translation (writing to writing): depends on world-wide GNP
• Putnam: Bowling Alone; Bridging and Bonding
• Employment opportunities for translators
  – Not Good
  – Markup is more valuable than translation
  – Desktop publishing is a better business
  – Business case: Adobe >> Trados
[Figure: regions labeled English, Monolingual, Multi-lingual]

Summary
Historical rational reconstruction emphasizing empiricism & business
• Before TMI-1992: How to Cook a Demo
• TMI-1992 Debate: Rationalism v. Empiricism
• Hybrid/Tools:
  – Kay’s Workstation
  – Good Apps for Crummy MT
  – Trados
• What happened to the IBM-Approach to MT?
  – Support for human translators (Translation Tools: Trados)
  – Fully automatic apps (CLIR)
  – Academia (Machine Learning)
• Revival of Empiricism: A Personal Perspective
  – The IBM-approach to MT was always controversial
  – But there are lots of less controversial spin-offs:
    • Tools, lexicography apps, word sense, machine learning
• Future (AMTA-2012): Bait and Switch Strategy
  – Bait: Use Public Internet to develop and test and socialize new ways of extracting value
  – Switch: Apply learnings to larger and more valuable private linguistic repositories
• Market sizing: translation business is too small for fortune-500 companies
  – Major lasting contribution of IBM-Approach: academic (machine learning)
  – MT Strategy: work on MT because it is fun, but apply learnings elsewhere to pay the bills
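
The noisy channel decoding rule from the Shannon and Statistical MT slides (pick the input I that maximizes Pr(I) · Pr(O|I)) can be sketched for the spelling-correction row of the channel-model table. Everything specific here is an assumption made for illustration: the three-word vocabulary, its probabilities, and the crude channel score that charges a fixed penalty per edit. It is not the IBM models or any published speller.

```python
import math

# Toy language model Pr(I): hypothetical relative frequencies.
LANGUAGE_MODEL = {"government": 0.7, "governments": 0.2, "govern": 0.1}

# Hypothetical per-edit penalty standing in for a real channel model.
LOG_PROB_PER_EDIT = math.log(0.01)


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def channel_log_score(observed: str, intended: str) -> float:
    """Unnormalized stand-in for log Pr(O | I): fixed cost per edit."""
    return edit_distance(observed, intended) * LOG_PROB_PER_EDIT


def decode(observed: str) -> str:
    """Return argmax over I of log Pr(I) + log Pr(O | I)."""
    return max(
        LANGUAGE_MODEL,
        key=lambda intended: math.log(LANGUAGE_MODEL[intended])
        + channel_log_score(observed, intended),
    )


print(decode("goverment"))
```

The same skeleton covers the other rows of the table by swapping the two components: a trigram model for Pr(E) and an alignment-based model for Pr(F|E) in the MT case, as the Statistical MT slide describes.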