IDPD


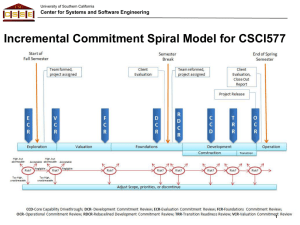

Incremental Development Productivity Decline
Ramin Moazeni
4/14/2014
Copyright © USC-CSSE

Outline
• Introduction
• Incremental Development Background
• Incremental Development Productivity Decline Background
• Research Hypotheses
• Research Approach
• Research Results
• Summary of Contributions

Introduction: Incremental Development Productivity Decline (IDPD)
• Incremental development is the most common development paradigm; it reduces risk by allowing flexibility per increment.
• IDPD: a phenomenon in which there is an overall decline in the productivity of successive increments.
• IDPD factor: the percentage of decline in software productivity from one increment to the next.
• Reasons for the decline: previous-increment breakage, usage feedback, and increased integration and testing effort, all charged to the current increment's budget.

Incremental Development: Definition for This Research
Any development effort with:
• More than one development step
• More than one released build
• Each step building on previous ones, so that it could not stand alone without the steps that came before it
Increments have to:
• Contribute a significant amount of new functionality
• Add a significant amount of size (not less than 1/10th of the previous increment)
• Not be just a bug fix of the previous increment (otherwise it is counted as part of that one)

Effects of IDPD on Number of Increments
• Model relating productivity decline to the number of builds needed to reach an 8M SLOC Full Operational Capability
• Assume Build 1 production of 2M SLOC at 100 SLOC/PM: 20,000 PM / 24 months = 833 developers
• Constant staff size for all builds
• Going from IDPD = 10% to IDPD = 20% increases the number of builds from 5 to 8, and hence the schedule by 8/5 = 1.6, or 60%

Exploration of IDPD Factor
Sources of variation, based on experience on several projects.
Higher IDPD (less productivity):
• Effort to maintain previous increments: bug fixing, COTS upgrades, interface changes (all reused SLOC, not ESLOC)
• Next increment requires previous-increment modifications
• Next increment has more previous increments to integrate/interact with
• Staff turnover reduces experience level
• Next increment software more complex
• Next increment spun out to more platforms
• Previous increments incompletely developed, tested, integrated
Lower IDPD (more productivity):
• Next increment touches less of previous increments
• Current staff more experienced, productive
• Next increment software less complex
• Previous increments did much of next increment's requirements, architecture

IDPD Type Characteristics (software category: impact on IDPD factor)
• Non-Deployable Support Software: throw-away code; low build-to-build integration; high reuse. IDPD factor lower than for any type of deployable/operational software.
• Infrastructure Software: often the most difficult software; developed early in the program; touched by all application software. IDPD factor likely to be the highest.
• Application Software: builds upon infrastructure software. IDPD expected to be medium to high.
• Platform Software: developed by HW manufacturers; single vendor, experienced staff in a single controlled environment; integration effort is primarily with HW. IDPD will be lower due to these benefits.
• Firmware (Single Build): IDPD factor not applicable; single-build increment.
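The IDPD factor definition and the "Effects of IDPD on Number of Increments" model can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the author's model code: it assumes constant staff, 24-month builds, and that each build delivers (1 - IDPD) times the SLOC of the build before it (a geometric decline). The function names `idpd_factors` and `builds_to_reach` are hypothetical.

```python
from typing import Optional


def idpd_factors(productivity: list[float]) -> list[float]:
    """IDPD factor of each increment after the first: the fractional
    productivity decline relative to the previous increment."""
    return [(prev - curr) / prev for prev, curr in zip(productivity, productivity[1:])]


def builds_to_reach(target_sloc: float, first_build_sloc: float, idpd: float,
                    max_builds: int = 100) -> Optional[int]:
    """Builds needed to accumulate target_sloc when staff and build length are
    constant and each build's output is (1 - idpd) times the previous build's."""
    total, output = 0.0, first_build_sloc
    for build in range(1, max_builds + 1):
        total += output
        if total >= target_sloc:
            return build
        output *= 1.0 - idpd  # productivity, hence output, drops by the IDPD factor
    return None  # target not reached within max_builds


# Slide assumptions: Build 1 = 2M SLOC at 100 SLOC/PM over 24 months, target 8M SLOC.
TARGET, BUILD1, SLOC_PER_PM, MONTHS = 8_000_000, 2_000_000, 100, 24
print(f"staff: {BUILD1 / SLOC_PER_PM / MONTHS:.0f} developers")  # 20,000 PM / 24 months
for idpd in (0.0, 0.10, 0.20):
    n = builds_to_reach(TARGET, BUILD1, idpd)
    print(f"IDPD={idpd:.0%}: {n} builds, {n * MONTHS} months")
```

Run as is, this reports 833 developers and 4, 5 and 8 builds for IDPD of 0%, 10% and 20%, matching the 8/5 ratio on the slide. Because total output is bounded by 2M/IDPD, any IDPD of 25% or more never reaches 8M SLOC, which is why `builds_to_reach` can return `None`. `idpd_factors([100, 95, 76])` yields 5% and then 20%, the kind of non-constant decline Hypothesis 2 anticipates.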
Theoretical Foundations of IDPD - 1
Lehman Laws of Software Evolution:
• Software evolution is a predictable process with invariances; to preserve quality, responsible organizations need to perform regular, organized maintenance on their existing software and "mental maintenance" through training.
Technical Debt: short-term decisions causing long-term rework
• Brittle, point-solution architecture
• Unscalable, incompatible COTS products and services
• Deferred "ilities": security, scalability, availability

Theoretical Foundations of IDPD - 2
Maintenance Phenomena:
• Maintenance is necessary due to factors such as technological progress, discovery of bugs, and changing external interfaces.
• There are situations where peaks occur, e.g., Y2K (addressed by fixing the date format and the handling of years) and Sarbanes-Oxley (changes in accounting standards).
• Code-base evolution consists of adding, modifying and deleting code. An enhanced maintenance and reuse cost model suggests that deleting code also requires effort.

Research Hypotheses
• Productivity Decline, Hypothesis 1: In incrementally developed software projects that have coherence and dependency between their increments, productivity declines over the course of the project.
• Build-to-Build Behavior, Hypothesis 2: The rate of productivity decline from increment to increment is not constant. Although some projects and "laws" suggest a statistically invariant percentage of productivity decline across increments, this may not be the case in general.
• Domain, Hypothesis 3: For different domains (IDPD types), the average decline in productivity over the course of a project varies significantly.
These hypotheses are also used to evaluate current software evolution "laws".

Research Approach: Behavioral Analysis
• Analyze the sources of effort and the activities that go on during incremental development, and identify their likely impact on productivity.
• Collect the attributes of increments, parameters of the projects, quantitative data on the increments (SLOC, dates), and environmental data (cost drivers, scale factors).

Research Approach: Data Collection and Analysis - 1
Main sources of data collection:
• Software industry
• Controlled experiments
• Open source
Criteria of projects used for data collection:
• Starting and ending dates are clear.
• Has at least two increments of significant capability that have been integrated with other software (from other sources) and shown to work in operationally relevant situations.
• Has well-substantiated sizing and effort data by increment.
• Less than an order of magnitude difference in size or effort per increment.
• Realistic reuse factors for COTS and modified code.
• Uniformly code-counted source code.
• Effort pertaining just to increment deliverables.

Research Approach: Data Collection Challenges
• Inaccurate, inadequate or missing information on modified code (size provided), size change or growth, average or peak staffing, personnel experience, schedule, and effort.
• Inconsistent size measurement (different tools for different increments).
• Replicated duration (the same start and end dates) across all increments.
• Low number of increments (fewer than 3).
• Unknown data history.

Research Approach: Contextual Analysis
• Identify classes of projects that exhibit different patterns of IDPD, and provide rationales for their varying behavior.
• Separate projects into domains by their position in the software hierarchy (applications at the top and firmware at the bottom, with some consideration given to support software).
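Several of the data collection criteria and challenges above can be checked mechanically before a project enters the analysis. The sketch below is a hypothetical screening helper, not the study's actual procedure. The `Increment` record, its field names, and the default minimum of three increments (taken from the "fewer than 3" challenge) are assumptions. Judgment calls such as realistic reuse factors or uniform code counting are left to the analyst.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Increment:  # hypothetical per-increment record
    start: Optional[date]
    end: Optional[date]
    added_ksloc: float  # size added by this increment
    effort_pm: float    # effort charged to this increment's deliverables


def screening_problems(incs: list[Increment], min_increments: int = 3) -> list[str]:
    """Return the mechanically checkable criteria that a project fails."""
    problems = []
    if len(incs) < min_increments:
        problems.append(f"fewer than {min_increments} increments")
    if any(i.start is None or i.end is None for i in incs):
        problems.append("unclear start or end dates")
    elif len(incs) > 1 and len({(i.start, i.end) for i in incs}) == 1:
        problems.append("replicated duration across all increments")
    # Each increment must add at least 1/10th of the previous increment's size.
    if any(curr.added_ksloc < prev.added_ksloc / 10 for prev, curr in zip(incs, incs[1:])):
        problems.append("an increment adds less than 1/10th of the previous one")
    # Size and effort per increment must stay within one order of magnitude.
    for label, values in (("size", [i.added_ksloc for i in incs]),
                          ("effort", [i.effort_pm for i in incs])):
        if values and (min(values) <= 0 or max(values) / min(values) >= 10):
            problems.append(f"order-of-magnitude difference in {label}")
    return problems


project = [  # hypothetical numbers, for illustration only
    Increment(date(2010, 1, 1), date(2010, 12, 31), 40.0, 300.0),
    Increment(date(2011, 1, 1), date(2011, 12, 31), 30.0, 280.0),
    Increment(date(2012, 1, 1), date(2012, 12, 31), 2.0, 250.0),
]
print(screening_problems(project))
```

For this made-up project, the helper flags the undersized third increment and the resulting 20x spread in size, while effort and dates pass.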
Research Approach: Statistical Analysis
Linear correlation:
• Measures the strength of the linear association between two paired sets of data.
• Reported as a correlation coefficient together with its significance level.
T-test:
• Compares the means of two sets of data to assess how likely the observed difference is under the null hypothesis (no difference in means).
• Variants used: paired t-test, two-tailed t-test.

Research Approach: Statistical Analysis (Cont.), Analysis of Variance (ANOVA)
• Does the IDPD of the three categories differ in a statistically significant way? ANOVA determines whether group means differ significantly.
• F test: detects whether any significant difference exists among the means. F is the between-groups variance divided by the within-groups variance.
  Within-groups variance: the variance within individual groups, i.e., variance that is not due to the independent variable.
  Between-groups variance: the explained variance that is due to the independent variable, i.e., the differences among the categories.

Case Studies
• Projects 1 and 2 from "Balancing Agility and Discipline"
• Quality Management Platform (QMP)

Projects 1 and 2
• Two web-based client-server systems developed in Java.
• Data mining systems.
• Agile process similar to XP, with several short iteration cycles and customer-supplied stories.
• Productivity measured as new SLOC per user story. Assumption: every user story takes the same time to implement.
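The case-study slides compare logarithmic, power and exponential trend lines fitted to per-build data by their R². A minimal least-squares sketch follows. It assumes spreadsheet-style trend lines: the logarithmic fit is linear in ln x, while the power and exponential fits are linear regressions on ln y, with R² computed in that transformed space. The function names are hypothetical. An exact-fit polynomial through n points always has R² = 1, so a high R² alone says nothing about predictive quality.

```python
import math


def linear_fit(xs, ys):
    """Ordinary least squares y = a*x + b; returns (a, b, r_squared)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    ss_res = sum((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    r2 = 1.0 - ss_res / ss_tot if ss_tot else 1.0
    return a, b, r2


def fit_trend_lines(builds, values):
    """Fit the trend-line families compared on the slides.
    Returns {name: (equation string, r_squared)}; values must be positive."""
    lx = [math.log(x) for x in builds]
    ly = [math.log(y) for y in values]
    fits = {}
    a, b, r2 = linear_fit(builds, values)
    fits["linear"] = (f"y = {a:.4f}x + {b:.4f}", r2)
    a, b, r2 = linear_fit(lx, values)          # y = a ln x + b
    fits["logarithmic"] = (f"y = {a:.4f}ln(x) + {b:.4f}", r2)
    a, b, r2 = linear_fit(lx, ly)              # ln y = a ln x + ln c
    fits["power"] = (f"y = {math.exp(b):.4f}x^{a:.4f}", r2)
    a, b, r2 = linear_fit(builds, ly)          # ln y = a x + ln c
    fits["exponential"] = (f"y = {math.exp(b):.4f}e^({a:.4f}x)", r2)
    return fits


# Synthetic points lying exactly on Project 1's logarithmic trend line (not project data).
builds = [1, 2, 3, 4, 5, 6]
synthetic = [-0.233 * math.log(x) + 0.8975 for x in builds]
best = max(fit_trend_lines(builds, synthetic).items(), key=lambda kv: kv[1][1])
print(best[0], best[1][0])
```

On these synthetic points, the logarithmic family wins with R² of essentially 1 and recovers the slope -0.2330 and intercept 0.8975. Real per-build data would instead give the imperfect R² values shown on the slides.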
Polynomial Trend Line
[Figure: new development effort of Project 1 by build (builds 1-6) with a polynomial trend line: $y = -0.023x^5 + 0.3966x^4 - 2.5291x^3 + 7.321x^2 - 9.5311x + 5.2174$, $R^2 = 1$]

Polynomial Trend Line (Cont.)
[Figure: the same polynomial trend line for Project 1 extended to build 7 (y-axis from -5.00 to 2.00). The 5th-order polynomial passes through all six points, but extrapolated to build 7 it predicts a negative effort of about -4.6.]

Comparison of Trend Lines
[Figure: new development efforts of Project 1 (builds 1-6) with fitted trend lines:
Logarithmic: $y = -0.233\ln x + 0.8975$, $R^2 = 0.6012$
Power: $y = 0.9141x^{-0.364}$, $R^2 = 0.4982$
Exponential: $y = 0.9445e^{-0.124x}$, $R^2 = 0.4563$]

Comparison of Trend Lines (Cont.)
[Figure: new development efforts of Project 2 (builds 1-7) with fitted trend lines:
Logarithmic: $y = -0.392\ln x + 1.0218$, $R^2 = 0.7731$
Exponential: $y = 1.2393e^{-0.251x}$, $R^2 = 0.585$
Power: $y = 1.175x^{-0.782}$, $R^2 = 0.5687$]

Quality Management Platform: QMP Project Information
• Web-based application.
• Facilitates process improvement initiatives in many small and medium software organizations.
• 6 builds over 6 years, with different increment durations.
• Size after the 6th build: 548 KSLOC, mostly in Java.
• Average staff on project: ~20

Comparison of Trend Lines: QMP
[Figure: QMP productivity by build (builds 1-6) with fitted trend lines:
Linear: $y = -0.7989x + 8.5493$, $R^2 = 0.3693$
Logarithmic: $y = -2.708\ln x + 8.7233$, $R^2 = 0.5326$]

Trend Line Summary
• A logarithmic trend line is the best fit in most observed real-world cases.
• A trend line alone is not enough for a reasonably precise prediction of the effort for the next increment; additional predictors are needed.
• COCOMO II cost drivers and scale factors (e.g., CPLX, PCON, RELY, RESL) can influence the decline for a given next increment.

Trend Line Summary (Cont.)
[Figure: normalized productivity by build (builds 1-8) with linear trend lines for Cisco streaming, Cisco unnamed, XP 1, XP 2, QMP, System of Systems, ODA and Vu 5]

Results - Statistical Correlation for Productivity Decline Hypothesis 1

Results - T-Test for Build-to-Build Behavior Hypothesis 2

Results - ANOVA Testing for Domain Hypothesis 3
[Figure: average IDPD (%) by domain for Application, Infrastructure and Platform projects; bar values shown: 18%, 9% and 5%]

Results - ANOVA Testing for Domain Hypothesis 3 (Cont.)
• Average IDPD differed significantly across the three domains: the p-value is 0.002 < 0.05. Therefore, there is a statistically significant difference in mean average IDPD among the domains.
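The three statistics behind the hypothesis tests (correlation for Hypothesis 1, a paired t-test for Hypothesis 2, one-way ANOVA for Hypothesis 3) can be computed with the Python standard library. This sketch returns only the test statistics and degrees of freedom. Turning them into p-values such as the 0.002 reported above additionally requires the t or F distribution, for example from a statistics package or tables, so that step is not shown. All example inputs are hypothetical.

```python
import math
from statistics import mean, stdev


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two paired samples."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def paired_t(before, after):
    """Paired t statistic and degrees of freedom; compare |t| with a
    two-tailed critical value for the chosen significance level."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def one_way_anova_f(groups):
    """F = between-groups mean square / within-groups mean square."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = mean([v for g in groups for v in g])
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Hypothetical inputs, for illustration only.
print(round(pearson_r([1, 2, 3, 4, 5], [10.0, 9.0, 8.5, 7.0, 6.5]), 3))  # about -0.988
print(one_way_anova_f([[0.10, 0.12, 0.08], [0.20, 0.18, 0.22], [0.05, 0.04, 0.06]]))
```

A strongly negative correlation between increment number and productivity is the pattern Hypothesis 1 looks for. The ANOVA groups here stand in for per-domain IDPD values.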
Results - ANOVA Testing for Domain Hypothesis 3 (Cont.)
[Figure: number of projects per domain (Application, Infrastructure, Platform) falling into the IDPD ranges 0%-10%, 11%-20% and 21%-30%]

Results - ANOVA Testing for Domain Hypothesis 3 (Cont.)
[Figure: IDPD (0%-35%) per increment (increments 0-9) for Application, Infrastructure and Platform projects]

Constructive Incremental Cost Model (COINCOMO)
• Along with COPSEMO (or its equivalent in some other cost model), COCOMO II (or some other cost model) had to be used for each build, because their incremental development models assume no change in cost drivers by increment.

COINCOMO Component

Summary of Contributions
• Confirmed the nontrivial existence of IDPD (Hypothesis 1)
• Rejected (confirmed null hypothesis) build-to-build constancy of IDPD (Hypothesis 2); Lehman "laws" 3 and 4 on statistical invariance not confirmed
• Confirmed IDPD variation by domain (Hypothesis 3)
• Developed the COINCOMO cost estimation model, supporting cost driver variation by increment

Publications
• Moazeni, R., Link, D., Chen, C., & Boehm, B., "Software Domains in Incremental Development Productivity Decline," accepted for publication, ICSSP 2014.
• Moazeni, R., Link, D., & Boehm, B., "COCOMO II Parameters and IDPD: Bilateral Relevances," accepted for publication, ICSSP 2014.
• Moazeni, R., Link, D., & Boehm, B., "Incremental development productivity decline," in Proceedings of the 9th International Conference on Predictive Models in Software Engineering (p. 7), ACM, 2013.
• Moazeni, R., Link, D., & Boehm, B., "Lehman's laws and the productivity of increments: Implications for productivity," in APSEC 2013, Bangkok, Thailand, 2013.

Publications (Cont.)
• Tan, T., Li, Q., Boehm, B., Yang, Y., He, M., & Moazeni, R., "Productivity trends in incremental and iterative software development," in Proceedings of the 2009 3rd International Symposium on Empirical Software Engineering and Measurement (pp. 1-10), IEEE Computer Society, 2009.
• Brown, A. W., Moazeni, R., & Boehm, B., "Realistic Software Cost Estimation for Fractionated Space Systems," AIAA, 2009.
• Moazeni, R., Brown, A. W., & Boehm, B., "Productivity Decline in Directed System of Systems Software Development," ISPA/SCEA, 2009.
• Brown, A. W., Moazeni, R., & Boehm, B., "Software Cost Estimation for Fractionated Space Systems," AIAA, 2008.

Questions?

Backup

Lehman's Laws of Software Evolution
• S-type: S-type (static-type) systems have a formal, verifiable set of specifications with easy-to-understand solutions (their specifications do not change). Examples: square root, planetary orbits.
• P-type: P-type (practical-type) systems are defined precisely and formally, but the solution is not immediately apparent to the user. Examples: bridges, highways.
• E-type: E-type (embedded-type) systems are defined as all programs that "operate in or address a problem or activity of the real world". The laws focus on E-type systems, which constitute most of the world's software.

Lehman's Laws and IDPD - 1
• 1st Law: Continuing Change
  Description: continuing change, or loss of quality/usefulness.
  Application to IDPD: maintenance is necessary to keep up quality/usefulness. The validity of this "law" will be tested by Hypothesis 1: if IDPD = 0, then no effort was needed to maintain the quality and value of the earlier increments.
• 2nd Law: Increasing Complexity
  Description: complexity increases unless effort is applied to reduce it.
  Application to IDPD: work needs to be done in terms of integration, documentation and adaptation. Again, the validity of this "law" will be tested by Hypothesis 1.

Lehman's Laws and IDPD - 2
• 3rd Law: Fundamental Law of Program Evolution
  Description: evolutionary dynamics are self-regulating. Any change or variance in one system attribute will also be relevant for all others.
  Application to IDPD: similar parameters should yield similar results, so the decline in productivity over increments should be predictable. For example, time spent on making a system secure will take away time spent on improving the UI. The validity of this "law" will be tested by Hypothesis 2: statistical invariance implies that IDPD will be relatively constant across increments.
• 4th Law: Conservation of Organizational Stability
  Description: the global activity rate is statistically invariant.
  Application to IDPD: beyond a certain upper limit, adding more resources cannot benefit the system, so IDPD cannot be escaped by committing them. Again, statistical invariance will be tested by Hypothesis 2.
Lehman's Laws and IDPD - 3
• 5th Law: Conservation of Familiarity
  Description: "mental maintenance" has to be performed.
  Application to IDPD: mastery of the system has to keep up with the increments, and there is an upper bound to beneficial effort (4th law), so training will reduce productivity.
• 6th Law: Continuing Growth
  Description: functionality must continually increase to maintain user satisfaction.
  Application to IDPD: existing older increments must increase functionality to the detriment of newer ones, taking away productivity.

Lehman's Laws and IDPD - 4
• 7th Law: Declining Quality
  Description: system quality will appear to decline without rigorous maintenance and adaptation to environmental changes.
  Application to IDPD: same as continuing change. Hypothesis 1 will test this over the long run. Hypothesis 2 will test "continuality": there may be increments that focus more on improving quality than on adding functionality.
• 8th Law: Feedback System
  Description: evolution processes are multi-level, multi-loop, multi-agent feedback systems; this restates the definition of an E-type system.
  Application to IDPD: the parameters of all increments are relevant within the increments and to other increments. The validity of this will be tested by Hypothesis 3.

Research Approach: Data Collection and Analysis - 1 (Backup)
[Figure: number of increments per project in the data set (axis 0-40)]

References
• Nguyen, V., "Improved Size and Effort Estimation Models for Software Maintenance," PhD Dissertation, University of Southern California, 2010.
• Larman, C., Agile and Iterative Development: A Manager's Guide. Addison-Wesley Professional, 2004.
• Lehman, M. M., "Programs, life cycles, and laws of software evolution," Proceedings of the IEEE 68.9 (1980): 1060-1076.
• Boehm, B., et al., "Future Software Sizing Metrics and Estimation Challenges," 15th Annual Practical Systems and Software Measurement (PSM) Users' Group Conference, 2011.
• Boehm, B., Software Engineering Economics. Englewood Cliffs, NJ: Prentice-Hall, 1981.
• Boehm, B., & Turner, R., Balancing Agility and Discipline: A Guide for the Perplexed. Addison-Wesley Professional, 2003.
• Defense Cost and Resource Center (2012, June 5). Understanding the Software Resource Data Report (SRDR) Requirements. Available at: http://dcarc.cape.osd.mil/Files/Training/CSDR_Training/DCARC%20Training%20X.%20SRDR%20102012.pdf

Constructive Incremental Cost Model
• When calculating the total schedule in a multi-build approach, only the parts up to an overlap are counted.
• Total efforts are additive.
• Schedule is cumulative (at the longest subsystem build).

COINCOMO Naming Conventions: COINCOMO Systems, Sub-Systems and Components
• System is a conceptual aggregator of Sub-Systems
• Sub-System is an aggregator of (software) Components
• Component = COCOMO Project
• Sub-Component = COCOMO Module

MBASE/RUP Concurrent Activities
[Figure: concurrent MBASE/RUP activities with milestones LCO, LCA, CCD, IOC, PRR and IRR]

Overlap across Builds
[Figure: possible overlapping software development spirals, each made of Inception, Elaboration, Construction and Transition phases: traditional deliver and enhance (spirals in sequence); evolve during transition (after software IOC); evolve after architecture complete (elaboration with evolutionary requirements, followed by successively overlapping spirals)]
COINCOMO Vision
• Build a base to integrate all of the components of the COCOMO "suite" of software development estimation tools
• Cover all software development activities
• Accommodate multiple builds (or deliveries) and systems, including those from different organizations
• Use the COCOMO model as a base: it estimates the software effort (PM) and schedule (M) for each module
• Use the COPSEMO model to separate the staff loading across the Elaboration and Construction phases
• Use the COPSEMO model to add effort and schedule for the Inception and Transition phases

Multi-Build COINCOMO
[Figure: Build x consists of new code (new, reused and COTS). Build x+1 consists of Build x carried forward, modifications to Build x, and new code (new, reused and COTS). Build x+2 consists of Build x+1 carried forward, modifications to Build x+1, and new code, and so on. Box sizes are notional for effort.]

COINCOMO COPSEMO

Controlled Experiment - 1
• Testing the IDPD hypotheses has been problematic due to challenges in data collection; hence the controlled experiment (Fall 2012, Spring 2013).
• Setup of the Fall 2012 experiment: 21 graduate Computer Science students with varying degrees of skill in software engineering and programming. The students had committed to between one and four units of directed research and were expected to work five hours per week per unit.
• Determination of skills: a questionnaire survey about their programming experience (programming languages, with skill levels and years of experience). The results were used in team formation.
Controlled Experiment - 2
• Projects: 3 web applications and 1 desktop application.
  Requirements were rolled out to teams weekly.
  A working new increment was expected each week (code compiles, no showstopper runtime errors).
• Changes and manipulations:
  Personnel turnover: some members left because they dropped the directed research (DR) course, and some students were moved from one team to another.
  The focus was on the cost drivers and scale factors whose manipulation was both possible and promised to change the productivity of the increment significantly.

Controlled Experiment - 3
• Requirement change: Teams 1 and 2, as well as Teams 3 and 4, had their projects and codebases swapped with each other.
  Teams 1 and 2 had the same requirements, except that one was creating a web application and the other a desktop application. This meant a significant requirements change and the need to analyze the code.
  Teams 3 and 4 had completely different projects, so these projects had a complete change of personnel.

Controlled Experiment - 4
• Requirements:
  In some weeks, teams were flooded with requirements, i.e., given more requirements than they would be able to fulfill.
  All teams were given two bogus (but official-looking) requirements that were objectively impossible to fulfill.
• Data collection: time sheets; web surveys (cost drivers / scale factors); report at the end of the semester.

Controlled Experiment - 5
External validity:
• People: students instead of industry professionals; weaker motivation (no real threat of losing a job or failing the course); less time spent per week; split attention; nobody can be fired; TAs/RAs instead of actual customers.
• Time: weekly increments; overall limited to one semester.
• Situations: all requirements imposed by the researchers (not negotiated); certain cost drivers cannot be simulated (e.g., RELY cannot be simulated at all, CPLX only within a range).