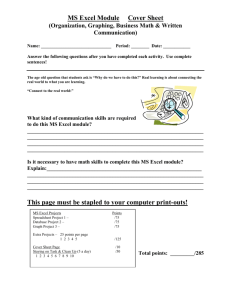

Washington DC 2012 Poster


Managerial Accounting Excel Assignments: A Potential Leading Indicator of Exam Performance

Michael J. Krause

INTRODUCTION

GENERAL OBSERVATIONS

During the spring 2012 semester, I was a visiting professor at Ithaca College, where I taught two sections of Managerial Accounting. In the college catalog, the course description for ACCT 226 specifically says “projects include the use of basic computerized spreadsheets.” In the course objectives, I listed the third objective as “to enhance skills in computerized spreadsheets.” To implement this objective and to reflect the course’s catalog description, I assigned nine graded Excel exercises. These exercises could earn students 30 points, which amounted to 10% of their total grade calculation. Rather than dropping the lowest grade, I awarded three bonus points to those who did well on all homework submitted. The bonus points also served to offset any reasonable errors made on individual assignments. Grading was based more on effort and setup than on absolute mathematical accuracy. I tried to convince students to organize their worksheets so as to place problem data at the top and then feed the data into the main body of the worksheet. The idea was to design a worksheet template usable for more than one application of a particular topic.

This poster covers the first two exams and the related assigned Excel projects for each unit. Both exams had two companion Excel assignments. Both assignments in the first unit required students to calculate the cost of goods manufactured and the resulting cost of goods sold. The repetitive nature of the first-unit assignments was designed to emphasize to students the power of Excel, which is revealed when attempting a second iteration of a particular topic. The second-unit Excel assignments covered different topics. One assignment generated a five-step approach to assign cost in a process costing environment.
The other assignment dealt with the cost-volume-profit (C-V-P) model, with an ultimate payoff calculation of a point of indifference between two business models with significantly different operating leverage strategies. All Excel problems appeared in some shape or form on the unit exams.

Student performance on the two observed exams was measured and evaluated based on the multiple choice questions for two reasons. First, using Bloom’s Taxonomy to guide question selection from the text’s test bank establishes a balance in the exams’ expectations (see the chart below). Second, multiple choice questions provide an objective measure.

| Bloom’s Taxonomy Categories for Multiple Choice | Exam #1 Multiple Choice Existence % | Exam #1 Multiple Choice Error rate % | Exam #2 Multiple Choice Existence % | Exam #2 Multiple Choice Error rate % |
|---|---|---|---|---|
| Knowledge and Comprehension | 52.40% | 46.00% | 42.90% | 44.90% |
| Application | 47.60% | 54.00% | 57.10% | 55.10% |
| Total | 100.00% | 100.00% | 100.00% | 100.00% |

ADDITIONAL OBSERVATIONS

EXHIBIT 1 – Exam #1 Multiple Choice Error Rate vs. Excel Homework Grade, Spring 2012

| | Total Class Performance | Achievers (Excel Grade > 4) | Non-Achievers (Excel Grade < 5) |
|---|---|---|---|
| Number of students observed | 75 | 63 | 12 |
| Average multiple choice error rate (max = 21) | 5.95 | 5.54 | 8.08 |
| Excel homework score (max = 8) | 6.12 | 6.90 | 2.00 |

EXHIBIT 2 – Exam #1 Multiple Choice Error Rate vs. Excel HW (Common-Sized), Spring 2012

| | Total Class Performance | Achievers (Excel Grade > 4) | Non-Achievers (Excel Grade < 5) |
|---|---|---|---|
| Number of students observed | 100.00% | 84.00% | 16.00% |
| Average multiple choice error rate | 100.00% | 93.11% | 135.80% |
| Excel homework score | 100.00% | 112.75% | 32.68% |

EXHIBIT 3 – Exam #2 Multiple Choice Error Rate vs. Excel Homework Grade, Spring 2012

| | Total Class Performance | Achievers (Excel Grade > 5) | Non-Achievers (Excel Grade < 6) |
|---|---|---|---|
| Number of students observed | 75 | 64 | 11 |
| Average multiple choice error rate (max = 21) | 6.44 | 5.95 | 9.27 |
| Excel homework score (max = 10) | 7.73 | 8.64 | 2.45 |

EXHIBIT 4 – Exam #2 Multiple Choice Error Rate vs. Excel HW (Common-Sized), Spring 2012

| | Total Class Performance | Achievers (Excel Grade > 5) | Non-Achievers (Excel Grade < 6) |
|---|---|---|---|
| Number of students observed | 100.00% | 85.33% | 14.67% |
| Average multiple choice error rate | 100.00% | 92.39% | 143.94% |
| Excel homework score | 100.00% | 111.77% | 31.69% |

EXHIBIT 5 – Detailed Analysis of Class Performance on the Exams

Exam #1 – Spring 2012

| | Top Third | Middle Third | Bottom Third |
|---|---|---|---|
| Multiple Choice Errors (Max = 21) | 3.36 | 5.64 | 8.84 |
| Excel HW Grade (Max = 8) | 7.16 | 6.24 | 4.96 |
| Non-Excel Achievers (Max = 75) | 0 | 4 | 8 |

Exam #2 – Spring 2012

| | Top Third | Middle Third | Bottom Third |
|---|---|---|---|
| Multiple Choice Errors (Max = 21) | 2.00 | 5.88 | 11.44 |
| Excel HW Grade (Max = 9) | 8.96 | 8.40 | 5.84 |
| Non-Excel Achievers (Max = 75) | 1 | 1 | 9 |

EXHIBIT 6 – Class Test Performance on Assigned Excel Subject Matter

Exam #1 – Spring 2012

| | Top Third | Middle Third | Bottom Third |
|---|---|---|---|
| Test Score on Subject Matter Assigned for Excel HW (Max = 16) | 14.72 | 11.44 | 5.72 |
| Excel HW Grade (Max = 8) | 6.92 | 5.92 | 5.52 |
| Multiple Choice Errors (Max = 21) | 4.72 | 6.00 | 7.12 |
| Non-Excel Achievers (Max = 75) | 1 | 5 | 6 |

CONCLUSIONS

The proposal for this poster admitted that Excel use is no longer deemed innovative. However, Excel use can cause one to reflect on the theme of this convention, “seeds of innovation.” If students fail to put forth effort with a simple tool like Excel, should future pedagogical research prioritize identifying unwilling students? In this poster, Excel non-achievers are defined as those who fail to obtain 50% of available homework points when grading is not based upon simple math accuracy.

1. The exams’ credibility is established in the poster’s introductory chart, which used Bloom’s Taxonomy as a benchmark to measure errors along with test achievement goals.
2. Exhibits 2 and 4 suggest that Excel non-achievers (less than 17% of the class) lowered the total class test performance average while earning homework grades that were less than 33% of the total class average. (See also Exhibits 1 and 3.)
3. Exhibits 5 and 6 give conflicting insights.
When sorting performance by multiple choice errors, a considerable majority of Excel non-achievers ended up in the bottom third of the class. But when sorting performance by a test question that mirrored the Excel homework assignment, only 50% of non-achievers were found in the bottom third.

The conflict in point 3 forms a new research question: if students are willing to memorize but not willing to use other learning methods, will this fact presage poor results on multiple choice testing, since that format requires more critical thinking skills?
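
The common-sized figures in Exhibits 2 and 4 appear to follow from dividing each group's value in Exhibits 1 and 3 by the corresponding total-class value. The following is a minimal Python sketch of that arithmetic, not the worksheet used for the poster; the names `EXHIBIT_1`, `EXHIBIT_3`, and `common_size` are hypothetical, and the inputs are transcribed from the exhibits.

```python
# Values transcribed from Exhibits 1 and 3 of this poster.
# Each tuple is (total class, Excel achievers, Excel non-achievers).
EXHIBIT_1 = {
    "students": (75, 63, 12),
    "mc_errors": (5.95, 5.54, 8.08),
    "excel_score": (6.12, 6.90, 2.00),
}
EXHIBIT_3 = {
    "students": (75, 64, 11),
    "mc_errors": (6.44, 5.95, 9.27),
    "excel_score": (7.73, 8.64, 2.45),
}


def common_size(exhibit):
    """Express each value as a percentage of the total-class value in its row.

    Hypothetical helper: the total-class value is assumed to be the first
    entry of every tuple.
    """
    return {
        row: tuple(round(100 * value / values[0], 2) for value in values)
        for row, values in exhibit.items()
    }


exhibit_2 = common_size(EXHIBIT_1)  # common-sized Exam #1 figures
exhibit_4 = common_size(EXHIBIT_3)  # common-sized Exam #2 figures

for name, table in (("Exhibit 2", exhibit_2), ("Exhibit 4", exhibit_4)):
    print(name)
    for row, percentages in table.items():
        print(f"  {row}: " + ", ".join(f"{p:.2f}%" for p in percentages))
```

Under these assumptions, the non-achiever columns show the pattern cited in conclusion 2: a multiple choice error rate well above the class average, paired with a homework score of roughly one third of the class average.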