Robustness


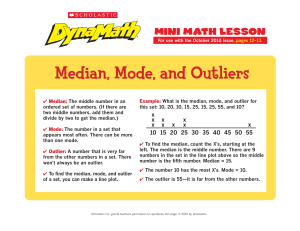

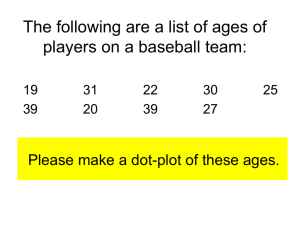

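Robust Statistics
Osnat Goren-Peyser, 25.3.08

Outline
1. Introduction
2. Motivation
3. Measuring robustness
4. M estimators
5. Order statistics approaches
6. Summary and conclusions

1. Introduction

Problem definition
Let $\mathbf{x} = (x_1, x_2, \ldots, x_n)$ be a set of i.i.d. variables with distribution $F$, sorted in increasing order so that $x_{(1)} \le x_{(2)} \le \cdots \le x_{(n)}$; $x_{(m)}$ is the value of the $m$-th order statistic.
An estimator $\hat\theta = \hat\theta(x_1, x_2, \ldots, x_n)$ is a function of the observations. We are looking for estimators such that $\hat\theta \approx \theta$.

Asymptotic values of an estimator
Define $\hat\theta_\infty(F)$ such that $\hat\theta_n \xrightarrow{p} \hat\theta_\infty(F)$, where $\hat\theta_\infty(F)$ is the asymptotic value of the estimator $\hat\theta_n$ at $F$.
The estimator $\hat\theta_n$ is consistent for $\theta$ if $\hat\theta_\infty(F) = \theta$.
We say that $\hat\theta_n$ is asymptotically normal with parameters $\theta, V(\theta)$ if $\sqrt{n}\,(\hat\theta_n - \theta) \xrightarrow{d} N(0, V(\theta))$.

Efficiency
An unbiased estimator is efficient if $I(\theta)\,\mathrm{var}(\hat\theta) = 1$, where $I(\theta)$ is the Fisher information of the sample.
An unbiased estimator is asymptotically efficient if $\lim_{n\to\infty} I(\theta)\,\mathrm{var}(\hat\theta) = 1$.

Relative efficiency
For a fixed underlying distribution, assume two unbiased estimators $\hat\theta_1$ and $\hat\theta_2$ of $\theta$. We say that $\hat\theta_1$ is more efficient than $\hat\theta_2$ if $\mathrm{var}(\hat\theta_1) < \mathrm{var}(\hat\theta_2)$.
The relative efficiency (RE) of the estimator $\hat\theta_2$ with respect to $\hat\theta_1$ is defined as the ratio of their variances:
$$\mathrm{RE}(\hat\theta_2; \hat\theta_1) = \frac{\mathrm{var}(\hat\theta_1)}{\mathrm{var}(\hat\theta_2)}$$

Asymptotic relative efficiency
The asymptotic relative efficiency (ARE) is the limit of the RE as the sample size $n \to \infty$. For two estimators $\hat\theta_1, \hat\theta_2$ which are each consistent for $\theta$ and also asymptotically normal [1]:
$$\mathrm{ARE}(\hat\theta_2; \hat\theta_1) = \lim_{n\to\infty} \frac{\mathrm{var}(\hat\theta_1)}{\mathrm{var}(\hat\theta_2)}$$

The location model
$$x_i = \mu + u_i, \quad i = 1, 2, \ldots, n,$$
where $\mu$ is the unknown location parameter, $u_i$ are the errors, and $x_i$ are the observations. The errors $u_i$ are i.i.d. random variables, each with the same distribution function $F_0$.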
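The following sketch makes the RE definition concrete. It estimates RE(median; mean) by Monte Carlo under the location model, assuming standard normal errors $u_i$ (that is, $F_0 = N(0,1)$). The function name, sample size, number of replications and seed are arbitrary illustrative choices. For large $n$ the ratio should come out near $2/\pi \approx 0.64$, the asymptotic value for the median at the normal.

```python
import random
import statistics

def relative_efficiency_median_vs_mean(n=101, reps=2000, mu=0.0, seed=1):
    """Monte Carlo estimate of RE(median; mean) = var(mean) / var(median).

    Each replication draws x_i = mu + u_i, i = 1..n, with u_i ~ N(0, 1);
    the normal error distribution F0 is an assumption of this sketch.
    """
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        x = [mu + rng.gauss(0.0, 1.0) for _ in range(n)]
        means.append(statistics.fmean(x))
        medians.append(statistics.median(x))
    # RE(theta2; theta1) = var(theta1) / var(theta2) with theta1 = mean, theta2 = median
    return statistics.variance(means) / statistics.variance(medians)

if __name__ == "__main__":
    ratio = relative_efficiency_median_vs_mean()
    print(f"estimated RE(median; mean) = {ratio:.3f}, 2/pi = 0.637")
```

Because both estimators shift by exactly $\mu$, the ratio does not depend on $\mu$. Only the error distribution $F_0$ matters.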
In the location model, the observations $x_i$ are i.i.d. random variables with common distribution function $F(x) = F_0(x - \mu)$.

Normality
Classical statistical methods rely on the assumption that $F$ is exactly known. The assumption that $F = N(\mu, \sigma^2)$ is a normal distribution is commonly used. But normality often holds only approximately, which calls for robust methods.

Approximate normality
The majority of observations are normally distributed; some observations follow a different pattern (not normal) or no pattern at all. Suggested model: a mixture model.

A mixture model
To formalize the idea of $F$ being approximately normal, assume that a proportion $1-\varepsilon$ of the observations is generated by the normal model, while a proportion $\varepsilon$ is generated by an unknown model. The mixture model is
$$F = (1-\varepsilon)G + \varepsilon H.$$
$F$ is a contamination "neighborhood" of $G$, also called the gross-error model. $F$ is called a normal mixture model when both $G$ and $H$ are normal.

2. Motivation

Outliers
An outlier is an atypical observation that is well separated from the bulk of the data. Statistics derived from data sets that include outliers will often be misleading: even a single outlier can have a large distorting influence on classical statistical methods.
[Figure: histograms of a sample without the outliers and of the same sample with the outliers, plotted against the observation values.]
Estimators that are not sensitive to outliers are said to be robust.

Mean and standard deviation
The sample mean is defined by
$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,$$
a classical estimate of the location (center) of the data. For $F = N(\mu, \sigma^2)$ the sample mean is unbiased, with $\bar{x} \sim N(\mu, \sigma^2/n)$.
The sample standard deviation (SD) is defined by
$$s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2},$$
a classical estimate of the dispersion of the data.
How much influence can a single outlier have on these classical estimators?
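The gross-error model is easy to simulate. This is a minimal sketch in which the function name, the choices $G = N(\mu, 1)$ and $H = N(\mu, \tau^2)$, the values $\varepsilon = 0.1$ and $\tau = 10$, and the seed are all illustrative assumptions. It draws from the normal mixture model and reports the sample mean and sample SD defined above.

```python
import random
import statistics

def sample_gross_error(n, eps, tau, mu=0.0, seed=0):
    """Draw n values from F = (1 - eps) G + eps H with G = N(mu, 1), H = N(mu, tau^2).

    Both components are normal, so this is the normal mixture model; the
    specific choice of G and H is an illustrative assumption.
    """
    rng = random.Random(seed)
    sample = []
    for _ in range(n):
        # with probability eps the observation comes from the contaminating H
        sd = tau if rng.random() < eps else 1.0
        sample.append(rng.gauss(mu, sd))
    return sample

clean = sample_gross_error(10_000, eps=0.0, tau=10.0)
contaminated = sample_gross_error(10_000, eps=0.1, tau=10.0)

for label, x in [("eps = 0.0", clean), ("eps = 0.1", contaminated)]:
    # statistics.stdev uses the n - 1 divisor, matching the definition of s
    print(label, "mean:", round(statistics.fmean(x), 3), "SD:", round(statistics.stdev(x), 3))
```

With $\varepsilon = 0.1$ and $\tau = 10$, the SD of $F$ is $\sqrt{0.9 + 0.1 \cdot 100} \approx 3.3$. The sample SD therefore comes out roughly three times larger than in the clean case, even though 90% of the data are standard normal.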
Example 1 – the flour example
Consider the following 24 determinations of the copper content in wholemeal flour (in parts per million), sorted in ascending order [6]:
2.20, 2.20, 2.40, 2.40, 2.50, 2.70, 2.80, 2.90,
3.03, 3.03, 3.10, 3.37, 3.40, 3.40, 3.40, 3.50,
3.60, 3.70, 3.70, 3.70, 3.70, 3.77, 5.28, 28.95
The value 28.95 is considered an outlier. Two cases:
- Case A: taking into account the whole data
- Case B: deleting the suspicious outlier

Example 1 – PDFs
- Case A (using the whole data for estimation): $\bar{x} = 4.28$, $s = 5.30$
- Case B (deleting the outlier): $\bar{x} = 3.21$, $s = 0.69$
[Figure: probability of each observation value with the sample mean marked; Case A spans observation values 0 to 30 with the outlier at 28.95, Case B spans 2 to 5.5.]

Example 1 – arising question
Question: how much influence can a single outlier have on the sample mean and the sample SD?
Assume the outlier value 28.95 is replaced by an arbitrary value varying from −∞ to +∞:
- The value of the sample mean changes from −∞ to +∞.
- The value of the sample SD grows without bound (to +∞) as the replacement value tends to either −∞ or +∞.
Conclusion: a single outlier has an unbounded influence on these two classical estimators! This is related to the sensitivity curve and the influence function, as we will see later.

Handling outliers: approaches
- Detect and remove outliers from the data set:
  - Manual screening
  - The normal Q-Q plot
  - The "three-sigma edit" rule
- Robust estimators!

Manual screening
Why is screening the data and removing outliers not sufficient?
- Users do not always screen the data.
- Outliers are not always errors! Outliers may be correct, and very important for seeing the whole picture, including extreme cases.
- It can be very difficult to spot outliers in multivariate or highly structured data.
- It is a subjective decision: without any unified criterion, different users get different results.
- It is difficult to determine the statistical behavior of the complete procedure.

The normal Q-Q plot
This is a manual screening tool for an underlying normal distribution. It is a quantile-quantile plot of the sample quantiles of $X$ versus the theoretical quantiles of a normal distribution. If the distribution of $X$ is normal, the plot will be close to linear.

The "three-sigma edit" rule
This is an outlier detection tool for an underlying normal distribution. Define the ratio between the distance of $x_i$ to the sample mean and the sample SD:
$$t_i = \frac{x_i - \bar{x}}{s}.$$
The three-sigma edit rule: observations with $|t_i| > 3$ are deemed suspicious.
Example 1: the largest observation in the flour data has $t_i = 4.65$, and so is suspicious.
Disadvantages:
- In very small samples the rule is ineffective. In fact, $|t_i| \le (n-1)/\sqrt{n}$, so with $n \le 10$ no observation can ever be flagged.
- Masking: when there are several outliers, their effects may interact in such a way that some or all of them remain unnoticed.

Example 2 – velocity of light
Consider the following 20 determinations of the time (in microseconds) needed for light to travel a distance of 7442 m [6]:
28, 26, 33, 24, 34, −44, 27, 16, 40, −2,
29, 22, 24, 21, 25, 30, 23, 29, 31, 19
The actual times are the table values × 0.001 + 24.8. The values −2 and −44 are suspected to be outliers.

Example 2 – QQ plot
[Figure: QQ plot of the sample data (actual times, 24.75 to 24.84) versus standard normal quantiles, with the observations −2 and −44 marked as outliers.]

Example 2 – masking
Results: $|t_i| = 1.35$ for $x_i = -2$ and $|t_i| = 3.73$ for $x_i = -44$.
Based on the three-sigma edit rule, the value of $|t_i|$ for the observation −2 does not indicate that it is an outlier: the value −44 "masks" the value −2.

Detect and remove outliers
There are many other methods for detecting outliers.
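This minimal sketch applies the three-sigma edit rule to the velocity-of-light table values of Example 2 and reproduces the masking effect. With both suspicious values present, only −44 exceeds the cutoff; −2 is flagged only after −44 is removed. Using the raw table values instead of the rescaled times does not change any $t_i$, because $t_i$ is invariant under the map $x \mapsto 0.001x + 24.8$. The function names, and the idea of re-applying the rule after a deletion, are hypothetical illustrations.

```python
import statistics

# Example 2: velocity-of-light table values (actual time = value * 0.001 + 24.8 microseconds)
LIGHT = [28, 26, 33, 24, 34, -44, 27, 16, 40, -2,
         29, 22, 24, 21, 25, 30, 23, 29, 31, 19]

def t_value(x, value):
    """t = (value - sample mean) / sample SD, with the SD using the n - 1 divisor."""
    return (value - statistics.fmean(x)) / statistics.stdev(x)

def three_sigma_flags(x, cutoff=3.0):
    """Observations deemed suspicious by the three-sigma edit rule (|t_i| > cutoff)."""
    xbar = statistics.fmean(x)
    s = statistics.stdev(x)
    return [xi for xi in x if abs(xi - xbar) / s > cutoff]

if __name__ == "__main__":
    print(round(t_value(LIGHT, -2), 2), round(t_value(LIGHT, -44), 2))  # -1.35 -3.73
    print(three_sigma_flags(LIGHT))                                     # [-44]
    without_44 = [xi for xi in LIGHT if xi != -44]
    print(three_sigma_flags(without_44))                                # [-2]
```

Removing −44 shrinks the sample SD from about 17.6 to about 8.7. That is what finally pushes $|t_i|$ for −2 above 3.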
Deleting an outlier poses a number of problems:
- It affects the distribution theory.
- It leads to underestimating data variability.
- It depends on the user's subjective decisions.
- It is difficult to determine the statistical behavior of the complete procedure.
Robust estimators provide automatic ways of detecting and removing outliers.

Example 1 – comparing the sample median to the sample mean
Case C: testing the median estimator.
[Figure: probability of each flour observation value with the sample mean and the sample median marked for Case A and Case B (observation values 2 to 5.5).]
- Case A: med = 3.3850
- Case B: med = 3.3700
- The sample median fits the bulk of the data in both cases.
- The value of the sample median does not change from −∞ to +∞, as was the case for the sample mean.
- The sample median is a good robust alternative to the sample mean.

Robust alternative to the mean
The sample median is a very old method for estimating the "middle" of the data. For some integer $m$, the sample median is defined by
$$\mathrm{Med}(\mathbf{x}) = \begin{cases} x_{(m)} & \text{if } n \text{ is odd},\ n = 2m - 1, \\ \dfrac{x_{(m)} + x_{(m+1)}}{2} & \text{if } n \text{ is even},\ n = 2m. \end{cases}$$
For large $n$ and $F = N(\mu, \sigma^2)$, the sample median is approximately $N\!\left(\mu, \dfrac{\pi\sigma^2}{2n}\right)$.
At the normal distribution: ARE(median; mean) $= 2/\pi \approx 64\%$.

Effect of a single outlier
- The sample mean can be upset completely by a single outlier.
- The sample median is little affected by a single outlier.
- The median is resistant to gross errors, whereas the mean is not.
- The median will tolerate up to 50% gross errors before it can be made arbitrarily large.
Breakdown point: median 50%, mean 0%.

Mean & median – robustness vs. efficiency
For the normal mixture model $F = (1-\varepsilon)N(\mu, 1) + \varepsilon N(\mu, \tau^2)$, the asymptotic variances ($n\,\mathrm{var}$) of the sample mean and the sample median are:

| τ | mean, ε = 0 | median, ε = 0 | mean, ε = 0.05 | median, ε = 0.05 | mean, ε = 0.10 | median, ε = 0.10 |
|---|---|---|---|---|---|---|
| 3 | 1 | 1.57 | 1.40 | 1.68 | 1.80 | 1.80 |
| 4 | 1 | 1.57 | 1.75 | 1.70 | 2.50 | 1.84 |
| 5 | 1 | 1.57 | 2.20 | 1.70 | 3.40 | 1.86 |
| 6 | 1 | 1.57 | 2.75 | 1.71 | 4.50 | 1.87 |
| 10 | 1 | 1.57 | 5.95 | 1.72 | 10.9 | 1.90 |
| 20 | 1 | 1.57 | 20.9 | 1.73 | 40.9 | 1.92 |

The sample mean variance is $n\,\mathrm{var}(\bar{x}) = (1-\varepsilon) + \varepsilon\tau^2$. At the normal ($\varepsilon = 0$) it equals 1, while the sample median variance is approximately $n\,\mathrm{var}(\mathrm{Med}) \approx \pi/2 \approx 1.57$.
The gain in robustness due to using the median is paid for by a loss in efficiency when $F$ is very close to normal.

So why not always use the sample median?
If the data do not contain outliers, the sample median has statistical performance which is poorer than that of the classical sample mean.
Robust estimation goal: "the best of both worlds". We shall develop estimators which combine the low variance of the mean at the normal with the robustness of the median under contamination.

3. Measuring robustness

Analysis tools:
- Sensitivity curve (SC)
- Influence function (IF)
- Breakdown point (BP)

Sensitivity curve
The SC measures the effect of different locations of an outlier on the estimate. The sensitivity curve of an estimator $\hat\theta$ for the sample $x_1, x_2, \ldots, x_n$ is
$$\mathrm{SC}(x_0) = \hat\theta(x_1, x_2, \ldots, x_n, x_0) - \hat\theta(x_1, x_2, \ldots, x_n),$$
where $x_0$ is the location of a single outlier. A bounded SC($x_0$) means high robustness!

SC of mean & median
[Figure: SC($x_0$) of the sample mean and of the sample median for $n = 200$, $F = N(0,1)$, with the outlier location $x_0$ ranging from −10 to 10.]

Standardized sensitivity curve
The standardized sensitivity curve is defined by
$$\mathrm{SC}_n(x_0) = \frac{\hat\theta(x_1, x_2, \ldots, x_n, x_0) - \hat\theta(x_1, x_2, \ldots, x_n)}{1/(n+1)}.$$
What happens if we add one more observation to a very large sample?

Influence function
The influence function of an estimator $\hat\theta$ (Hampel, 1974) is an asymptotic version of its sensitivity curve. It is an approximation to the behavior of $\hat\theta_\infty$ when the sample contains a small fraction $\varepsilon$ of identical outliers.
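The sensitivity curve can be computed directly from its definition. This minimal sketch uses hypothetical helper names. It starts from the flour data of Example 1 without the value 28.95 (Case B, $n = 23$), adds a single outlier $x_0$, and compares the SC of the sample mean with that of the sample median. It also prints the Case A and Case B summaries reported earlier.

```python
import statistics

# Flour data of Example 1 (copper content in wholemeal flour, ppm), sorted
FLOUR = [2.20, 2.20, 2.40, 2.40, 2.50, 2.70, 2.80, 2.90,
         3.03, 3.03, 3.10, 3.37, 3.40, 3.40, 3.40, 3.50,
         3.60, 3.70, 3.70, 3.70, 3.70, 3.77, 5.28, 28.95]
CASE_B = FLOUR[:-1]  # Case B: the suspicious value 28.95 removed (n = 23)

def sensitivity_curve(estimator, sample, x0):
    """SC(x0) = estimate(sample with x0 appended) - estimate(sample)."""
    return estimator(sample + [x0]) - estimator(sample)

def standardized_sc(estimator, sample, x0):
    """SC_n(x0) = SC(x0) / (1 / (n + 1)), where n = len(sample)."""
    return (len(sample) + 1) * sensitivity_curve(estimator, sample, x0)

if __name__ == "__main__":
    for label, x in [("Case A", FLOUR), ("Case B", CASE_B)]:
        print(label, "mean", round(statistics.fmean(x), 2),
              "SD", round(statistics.stdev(x), 2),
              "median", round(statistics.median(x), 3))
    for x0 in [-1000.0, -10.0, 3.3, 10.0, 1000.0]:
        sc_mean = sensitivity_curve(statistics.fmean, CASE_B, x0)
        sc_med = sensitivity_curve(statistics.median, CASE_B, x0)
        print(f"x0 = {x0:8.1f}   SC(mean) = {sc_mean:9.3f}   SC(median) = {sc_med:7.3f}")
```

Here the SC of the mean equals $(x_0 - \bar{x})/24$, a straight line that grows without bound. The SC of the median stays between −0.135 and 0.015 for every $x_0$, because adding one point can only move the median to a neighbouring order statistic. For the mean, the standardized version $\mathrm{SC}_n(x_0)$ is simply $x_0 - \bar{x}$.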
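The influence function is defined as
$$\mathrm{IF}_{\hat\theta}(x_0, F) = \lim_{\varepsilon \downarrow 0} \frac{\hat\theta_\infty\big((1-\varepsilon)F + \varepsilon\delta_{x_0}\big) - \hat\theta_\infty(F)}{\varepsilon} = \frac{\partial}{\partial\varepsilon}\,\hat\theta_\infty\big((1-\varepsilon)F + \varepsilon\delta_{x_0}\big)\Big|_{\varepsilon \downarrow 0},$$
where $\delta_{x_0}$ is the point mass at $x_0$ and "$\downarrow$" stands for "limit from the right".

IF main uses
$\hat\theta_\infty\big((1-\varepsilon)F + \varepsilon\delta_{x_0}\big)$ is the asymptotic value of the estimate when the underlying distribution is $F$ and a fraction $\varepsilon$ of outliers is equal to $x_0$.
The IF has two main uses:
- Assessing the relative influence of individual observations on the value of the estimate. An unbounded IF means less robustness.
- Allowing a simple heuristic assessment of the asymptotic variance of an estimate.

IF as the limit version of SC
The SC is a finite-sample version of the IF. If $\varepsilon$ is small,
$$\frac{\hat\theta_\infty\big((1-\varepsilon)F + \varepsilon\delta_{x_0}\big) - \hat\theta_\infty(F)}{\varepsilon} \approx \mathrm{IF}_{\hat\theta}(x_0, F),$$
so the bias is
$$\hat\theta_\infty\big((1-\varepsilon)F + \varepsilon\delta_{x_0}\big) - \hat\theta_\infty(F) \approx \varepsilon\,\mathrm{IF}_{\hat\theta}(x_0, F).$$
For large $n$: $\mathrm{SC}_n(x_0) \approx \mathrm{IF}_{\hat\theta}(x_0, F)$, where $\varepsilon = 1/(n+1)$.

Breakdown point
The BP is the proportion of arbitrarily large observations an estimator can handle before giving an arbitrarily large result.
- Maximum possible BP = 50%.
- A high BP means more robustness!
As seen before: mean 0%, median 50%.

Summary
- The SC measures the effect of different outliers on the estimate.
- The IF is the asymptotic behavior of the SC.
- The IF and the BP consider extreme situations in the study of contamination: the IF deals with "infinitesimal" values of $\varepsilon$, while the BP deals with the largest $\varepsilon$ an estimate can tolerate.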
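The breakdown point can be explored empirically with a finite-sample analogue of its definition. Replace $m$ of the $n$ observations by a huge value, and find the smallest $m$ for which the estimate itself becomes huge. In this sketch, the stand-ins $10^9$ for "arbitrarily large" and $10^6$ for "arbitrarily large result", the sample $1, \ldots, 21$, and the function name are all arbitrary assumptions.

```python
import statistics

def breakdown_count(estimator, sample, big=1e9, limit=1e6):
    """Smallest m such that replacing m observations by `big` gives |estimate| > limit.

    `big` and `limit` are stand-ins for "arbitrarily large"; returns None if the
    estimator never exceeds `limit`.
    """
    for m in range(1, len(sample) + 1):
        corrupted = [big] * m + list(sample[m:])  # the first m points are replaced
        if abs(estimator(corrupted)) > limit:
            return m
    return None

data = [float(i) for i in range(1, 22)]  # n = 21 well-behaved observations

if __name__ == "__main__":
    for label, est in [("mean", statistics.fmean), ("median", statistics.median)]:
        m = breakdown_count(est, data)
        print(f"{label}: breaks when m = {m} of n = {len(data)} points are replaced "
              f"(fraction {m / len(data):.2f})")
```

The mean breaks with a single replaced point (1 of 21). The median needs 11 of 21, just over half. These match the asymptotic breakdown points of 0% and 50%.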
4. M estimators

Maximum likelihood of μ
Consider the location model, and assume that $F_0$ has density $f_0$. The likelihood function is
$$L(x_1, x_2, \ldots, x_n; \mu) = \prod_{i=1}^{n} f_0(x_i - \mu).$$
The maximum likelihood estimate (MLE) of $\mu$ is
$$\hat\mu = \arg\max_{\mu} L(x_1, x_2, \ldots, x_n; \mu).$$

M estimators of location (μ)
M estimators are MLE-like estimators that generalize ML estimators. If the density $f_0$ is everywhere positive, the MLE would solve
$$\hat\mu = \arg\min_{\mu} \sum_{i=1}^{n} \rho(x_i - \mu), \qquad (*)$$
with $\rho = -\log f_0$. Let $\psi = \rho'$; if it exists, then
$$\sum_{i=1}^{n} \psi(x_i - \hat\mu) = 0. \qquad (**)$$
An M estimator is defined by (*) or (**) for a general function $\rho$ or $\psi$, and can almost equivalently be described by either one:
- If $\rho$ is everywhere differentiable and $\psi$ is monotonic, then the forms (*) and (**) are equivalent [6].
- If $\psi$ is continuous and increasing, the solution is unique [6].

Special cases
- The sample mean: $\rho(x) = x^2/2$, $\psi(x) = x$. Then $\sum_{i=1}^{n} (x_i - \hat\mu) = 0 \Rightarrow \hat\mu = \bar{x}$.
- The sample median: $\rho(x) = |x|$, $\psi(x) = \mathrm{sign}(x) = I(x > 0) - I(x < 0)$, which is $1$ for $x > 0$, $0$ for $x = 0$ and $-1$ for $x < 0$. Then $\sum_{i=1}^{n} \mathrm{sign}(x_i - \hat\mu) = 0 \Leftrightarrow \#(x_i > \hat\mu) = \#(x_i < \hat\mu) \Rightarrow \hat\mu = \mathrm{Med}(\mathbf{x})$.

Special cases: ρ and ψ
[Figure: ρ(x) and ψ(x) for squared errors (sample mean) and absolute errors (sample median), for x from −4 to 4.]

Asymptotic behavior of location M estimators
For a given distribution $F$, assume $\rho$ is differentiable and $\psi$ is increasing, and define $\mu_0 = \mu_0(F)$ as the solution of
$$E_F\,\psi(x - \mu_0) = 0.$$
For large $n$, $\hat\mu \xrightarrow{p} \mu_0$ and the distribution of the estimator is approximately $N(\mu_0, v/n)$, with
$$v = \frac{E_F\,\psi(x - \mu_0)^2}{\big(E_F\,\psi'(x - \mu_0)\big)^2}. \qquad (***)$$
If $\mu_0$ is uniquely defined, then $\hat\mu$ is consistent for $\mu_0$ at $F$ [3].

Desirable properties
- M estimators are robust to large proportions of outliers:
  - The IF is proportional to $\psi$.
  - The $\psi$ function may be chosen to bound the influence of outliers and achieve high efficiency.
- M estimators are asymptotically normal.
- When $\psi$ is odd, bounded and monotonically increasing, the BP is 0.5.
- They can also be consistent for $\mu$.
- M estimators can be chosen to completely reject outliers (called redescending M estimators), while maintaining a large BP and high efficiency.

Disadvantages
- They are in general only implicitly defined and must be found by iterative
search.
- In general, they are not scale equivariant.

Huber functions
The Huber estimator is a popular family of M estimators (Huber, 1964). Its $\psi$ function is odd and nondecreasing. It minimizes the asymptotic variance among all estimators satisfying $|\mathrm{IF}(x)| \le c$ for a given bound $c$.
Advantages:
- It combines the sample mean for small errors with the sample median for gross errors.
- $\psi$ is bounded.

Huber ρ and ψ functions
$$\rho_k(x) = \begin{cases} x^2 & \text{if } |x| \le k, \\ 2k|x| - k^2 & \text{if } |x| > k, \end{cases}$$
with derivative $\rho_k'(x) = 2\psi_k(x)$, where
$$\psi_k(x) = \begin{cases} x & \text{if } |x| \le k, \\ k\,\mathrm{sign}(x) & \text{if } |x| > k. \end{cases}$$
[Figure: Huber ρ(x) and ψ(x). Source: Ricardo A. Maronna, R. Douglas Martin and Víctor J. Yohai, Robust Statistics: Theory and Methods, 2006, John Wiley & Sons.]

Huber functions – robustness & efficiency tradeoff
Special cases: $k \to 0$ gives the sample median and $k \to \infty$ gives the sample mean. Moving from the median toward the mean (increasing $k$) decreases robustness.
[Figure: asymptotic variances of the Huber estimator at the normal mixture model with $G = N(0, 1)$ and $H = N(0, 10)$. Source: Maronna, Martin and Yohai (2006).]
The larger the asymptotic variance, the less efficient the estimator, but the more robust it is: efficiency comes at the expense of robustness.

Redescending M estimators
Redescending M estimators have $\psi$ functions which are nondecreasing near the origin but then decrease toward the axis far from the origin. They usually satisfy $\psi(x) = 0$ for all $|x| \ge r$, where $r$ is the minimum rejection point.
Besides their ability to reject outliers completely, they:
- do not suffer from the masking effect;
- have the potential to achieve a high BP;
- can have $\psi$ functions chosen to redescend smoothly to zero, so that information in moderately large outliers is not ignored completely, which improves efficiency!
A popular family of redescending M estimators (Tukey) is called the bisquare or biweight.

Bisquare ρ and ψ functions
$$\rho_k(x) = \begin{cases} 1 - \big[1 - (x/k)^2\big]^3 & \text{if } |x| \le k, \\ 1 & \text{if } |x| > k, \end{cases}$$
with derivative $\rho_k'(x) = 6\psi_k(x)/k^2$, where
$$\psi_k(x) = x\left[1 - \left(\frac{x}{k}\right)^2\right]^2 I(|x| \le k).$$
[Figure: bisquare ρ(x) and ψ(x). Source: Ricardo A. Maronna, R. Douglas Martin and Víctor J. Yohai, Robust Statistics: Theory and Methods, 2006, John Wiley & Sons.]
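The slides only say that M estimates must be found by iterative search. One common approach, assumed here, is iteratively reweighted averaging with weights $w(r) = \psi(r)/r$. A fixed point of the iteration solves $\sum_i \psi((x_i - \mu)/\sigma) = 0$. Dividing residuals by a scale estimate $\sigma$ is the usual remedy for the missing scale equivariance noted above; using the normalized MAD (median absolute deviation divided by 0.6745) as $\sigma$ is a further assumption. The sketch applies the Huber ($k = 1.37$) and bisquare ($k = 4.68$) weights, the tuning constants used in the summary section, to the flour data of Example 1. All function names are hypothetical.

```python
import statistics

# Flour data of Example 1 (copper content, ppm)
FLOUR = [2.20, 2.20, 2.40, 2.40, 2.50, 2.70, 2.80, 2.90,
         3.03, 3.03, 3.10, 3.37, 3.40, 3.40, 3.40, 3.50,
         3.60, 3.70, 3.70, 3.70, 3.70, 3.77, 5.28, 28.95]

def mad_scale(x):
    """Normalized MAD: Med(|x_i - Med(x)|) / 0.6745 (assumed scale estimate)."""
    med = statistics.median(x)
    return statistics.median([abs(xi - med) for xi in x]) / 0.6745

def huber_weight(r, k=1.37):
    """w(r) = psi_k(r) / r for the Huber psi: 1 inside [-k, k], k/|r| outside."""
    return 1.0 if abs(r) <= k else k / abs(r)

def bisquare_weight(r, k=4.68):
    """w(r) = psi_k(r) / r for the bisquare psi: (1 - (r/k)^2)^2 inside, 0 outside."""
    return (1.0 - (r / k) ** 2) ** 2 if abs(r) <= k else 0.0

def m_location(x, weight, tol=1e-10, max_iter=500):
    """Location M estimate solving sum_i psi((x_i - mu) / sigma) = 0.

    Iteratively reweighted averaging: mu <- sum(w_i x_i) / sum(w_i) with
    w_i = w((x_i - mu) / sigma). A fixed point satisfies sum_i w_i (x_i - mu) = 0,
    which is the psi equation because w(r) * r = psi(r).
    """
    sigma = mad_scale(x)
    if sigma == 0:
        raise ValueError("MAD is zero, so the scale cannot be estimated")
    mu = statistics.median(x)  # robust starting point
    for _ in range(max_iter):
        w = [weight((xi - mu) / sigma) for xi in x]
        new_mu = sum(wi * xi for wi, xi in zip(w, x)) / sum(w)
        if abs(new_mu - mu) < tol * sigma:
            return new_mu
        mu = new_mu
    return mu

if __name__ == "__main__":
    print("mean     ", round(statistics.fmean(FLOUR), 3))
    print("median   ", round(statistics.median(FLOUR), 3))
    print("Huber    ", round(m_location(FLOUR, huber_weight), 3))
    print("bisquare ", round(m_location(FLOUR, bisquare_weight), 3))
```

With this scale, the Huber estimate comes out at about 3.21. The bisquare estimate, which gives 28.95 zero weight, also stays with the bulk of the data. Both contrast with the sample mean of 4.28. Starting from the median matters for the bisquare, because a redescending $\psi$ can have several roots.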
Bisquare function – efficiency
ARE(bisquare; MLE) at the normal distribution for several values of $k$:

| ARE | 0.80 | 0.85 | 0.90 | 0.95 |
|---|---|---|---|---|
| k | 3.14 | 3.44 | 3.88 | 4.68 |

An ARE close to 1 can be achieved.

Choice of ψ and ρ
In practice, the choice of the $\rho$ and $\psi$ functions is not critical to gaining a good robust estimate (Huber, 1981).
- Redescending and bounded $\psi$ functions are to be preferred.
- Bounded $\rho$ functions are to be preferred.
- The bisquare function is a popular choice.

5. Order statistics approaches

The β-trimmed mean
Let $\beta \in [0, 0.5)$ and $m = [\beta(n-1)]$. The β-trimmed mean is defined by
$$\bar{x}_\beta = \frac{1}{n - 2m}\sum_{i=m+1}^{n-m} x_{(i)},$$
where $[\cdot]$ stands for the integer part and $x_{(i)}$ denotes the $i$-th order statistic. $\bar{x}_\beta$ is the sample mean after the $m$ largest and the $m$ smallest observations have been discarded.

The β-trimmed mean – cont.
Limit cases:
- $\beta = 0$: the sample mean
- $\beta \to 0.5$: the sample median
Distribution of the trimmed mean:
- The exact distribution is intractable.
- For large $n$, the distribution under the location model is approximately normal.
The BP of the β-trimmed mean is β.

Example 1 – trimmed mean

| | All data | Outlier deleted |
|---|---|---|
| Mean | 4.28 | 3.2 |
| Median | 3.38 | 3.37 |
| Trimmed mean 10% | 3.2 | 3.11 |
| Trimmed mean 25% | 3.17 | 3.17 |

The median and the trimmed mean are less sensitive to the presence of outliers.

The W-Winsorized mean
The W-Winsorized mean $\bar{x}_{w,\beta}$ is defined by
$$\bar{x}_{w,\beta} = \frac{1}{n}\left[(m+1)\,x_{(m+1)} + \sum_{i=m+2}^{n-m-1} x_{(i)} + (m+1)\,x_{(n-m)}\right].$$
The $m$ smallest observations are replaced by the $(m+1)$-st smallest observation, and the $m$ largest observations are replaced by the $(m+1)$-st largest observation.

Trimmed and W-Winsorized means: pros and cons
- Advantage: they use more information from the sample than the sample median.
- Disadvantage: unless the underlying distribution is symmetric, they are unlikely to produce an unbiased estimator for either the mean or the median.
- Disadvantage: their exact (finite-sample) distribution is not normal.

L estimators
The trimmed and Winsorized means are special cases of L estimators. An L estimator is defined as a linear combination of order statistics:
$$\hat\theta = \sum_{i=1}^{n} \alpha_i\, x_{(i)},$$
where the $\alpha_i$ are given constants. For the β-trimmed mean:
$$\alpha_i = \frac{1}{n - 2m}\, I(m+1 \le i \le n-m).$$
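This minimal sketch implements the two order-statistics estimators with the conventions used above: $m = [\beta(n-1)]$ and 1-based order statistics. The trimmed mean is computed through the general L estimator form with the weights $\alpha_i$ given for the β-trimmed mean. The Winsorized mean is computed by direct clipping. Helper names are hypothetical. On the flour data with β = 0.1 (so $m = 2$), it gives a trimmed mean of 3.205 and a Winsorized mean of 3.185. Other rounding conventions for $m$ give slightly different values.

```python
import math

# Flour data of Example 1 (copper content, ppm)
FLOUR = [2.20, 2.20, 2.40, 2.40, 2.50, 2.70, 2.80, 2.90,
         3.03, 3.03, 3.10, 3.37, 3.40, 3.40, 3.40, 3.50,
         3.60, 3.70, 3.70, 3.70, 3.70, 3.77, 5.28, 28.95]

def trim_count(n, beta):
    """m = [beta (n - 1)], the number of observations removed at each end."""
    if not 0.0 <= beta < 0.5:
        raise ValueError("beta must lie in [0, 0.5)")
    return math.floor(beta * (n - 1))

def l_estimator(x, alphas):
    """L estimator: sum_i alpha_i * x_(i), with x_(1) <= ... <= x_(n)."""
    return sum(a * xi for a, xi in zip(alphas, sorted(x)))

def trimmed_mean(x, beta):
    """beta-trimmed mean as an L estimator, alpha_i = I(m+1 <= i <= n-m) / (n - 2m)."""
    n = len(x)
    m = trim_count(n, beta)
    alphas = [1.0 / (n - 2 * m) if m + 1 <= i <= n - m else 0.0
              for i in range(1, n + 1)]  # i is the 1-based rank
    return l_estimator(x, alphas)

def winsorized_mean(x, beta):
    """W-Winsorized mean: the m smallest become x_(m+1), the m largest become x_(n-m)."""
    xs = sorted(x)
    n = len(xs)
    m = trim_count(n, beta)
    clipped = [xs[m]] * m + xs[m:n - m] + [xs[n - m - 1]] * m  # 0-based indexing
    return sum(clipped) / n

if __name__ == "__main__":
    for beta in (0.0, 0.1, 0.25):
        print(beta, round(trimmed_mean(FLOUR, beta), 3), round(winsorized_mean(FLOUR, beta), 3))
```

With β = 0 both functions reduce to the sample mean. As β approaches 0.5, the trimmed mean keeps only the middle one or two order statistics, which is the median.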
L vs. M estimators
M estimators:
- are more flexible;
- can be generalized straightforwardly to multi-parameter problems;
- have a high BP.
L estimators are less efficient because they completely ignore part of the data.

6. Summary & conclusions

SC of location M estimators
[Figure: SC of the trimmed mean (α = 25%), the Huber estimator (k = 1.37) and the bisquare estimator (k = 4.68), for n = 20 and $x_i \sim N(0,1)$.]

The effect of increasing contamination on a sample
Replace $m$ points by a fixed value $x_0 = 1000$ and compute the biased SC:
$$\mathrm{SC}(m) = \hat\theta(x_0, \ldots, x_0, x_{m+1}, \ldots, x_n) - \hat\theta(x_1, x_2, \ldots, x_n).$$
[Figure: biased SC as a function of $m$ for the trimmed mean (α = 8.5%), the Huber estimator (k = 1.37) and the bisquare estimator (k = 4.68), for n = 20 and $x_i \sim N(0,1)$.]

IF of location M estimators
The IF is proportional to $\psi$ (Huber, 1981). In general,
$$\mathrm{IF}_{\hat\mu}(x_0, F) = \frac{\psi(x_0 - \mu_0)}{E_F\,\psi'(x - \mu_0)}.$$

BP of location M estimators
In general, when $\psi$ is odd, bounded and monotonically increasing, the BP is 50%. More generally, assume $k_1 = -\psi(-\infty)$ and $k_2 = \psi(\infty)$ are finite; then the BP is
$$\varepsilon^* = \frac{\min(k_1, k_2)}{k_1 + k_2}.$$
Special cases: sample mean 0%, sample median 50%.

Comparison between different location estimators

| Estimator | BP | SC/IF/ψ | Redescending ψ | Efficiency in mixture model |
|---|---|---|---|---|
| Mean | 0% | unbounded | No | low |
| Median | 50% | bounded | No | low |
| Huber | 50% | bounded | No | high |
| Bisquare | 50% | bounded, returns to 0 | Yes | high |
| x% trimmed mean | x% | bounded | No | |

Conclusions
- Robust statistics provides an alternative approach to classical statistical methods.
- Robust statistics seeks to provide methods that emulate classical methods, but which are not unduly affected by outliers or other small departures from model assumptions.
- In order to quantify the robustness of a method, it is necessary to define some measures of robustness.

Efficiency vs. robustness
- Efficiency can be achieved by taking $\psi$ proportional to the derivative of the log-likelihood defined by the density $f$ of $F$: $\psi(x) = -c\,(f'/f)(x)$, where $c \ne 0$ is a constant.
- Robustness is achieved by choosing a $\psi$ that is smooth and bounded, to reduce the influence of a small proportion of observations.

References
1. Robert G. Staudte and Simon J. Sheather, Robust Estimation and Testing, Wiley, 1990.
2. Elvezio Ronchetti, "The Historical Development of Robust Statistics", ICOTS-7, University of Geneva, Switzerland, 2006.
3. P. Huber, Robust Statistics, New York: Wiley, 1981.
4. F. R. Hampel, E. M. Ronchetti, P. J. Rousseeuw and W. A. Stahel, Robust Statistics: The Approach Based on Influence Functions, New York: Wiley, 1986.
5. Tukey
6. Ricardo A. Maronna, R. Douglas Martin and Víctor J. Yohai, Robust Statistics: Theory and Methods, John Wiley & Sons, 2006.
7. B. D. Ripley, M.Sc. in Applied Statistics MT2004, Robust Statistics, 1992–2004.
8. Robust statistics, from Wikipedia.
