Temple University – CIS Dept. CIS661 – Principles of Data

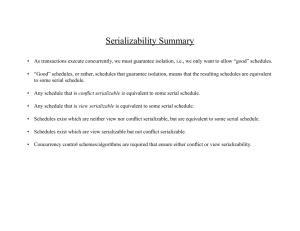

Temple University – CIS Dept. CIS661 – Principles of Data Management

V. Megalooikonomou

Transactions (based on slides by C. Faloutsos at CMU)

General Overview
- Relational model - SQL
- Functional Dependencies & Normalization
- Physical Design & Indexing
- Query optimization
- Transaction processing
  - concurrency control
  - recovery

Transactions - dfn

= unit of work, e.g., move $10 from savings to checking
- Atomicity (all or none): ensured by recovery
- Consistency
- Isolation (as if alone): ensured by concurrency control
- Durability (changes persist): ensured by recovery

Operational details
- ‘read(x)’: fetches ‘x’ from disk to main memory (= buffer)
- ‘write(x)’: writes ‘x’ to disk (sometime later)
- power failure -> troubles!
- Also, could lead to inconsistencies...

Durability
- transactions should survive failures (after a transaction completes successfully, the changes in the DB persist)

Atomicity
- straightforward: both of the following updates take effect, or neither does
  - Checking = Checking + 10
  - Savings = Savings - 10

Consistency
- e.g., the total sum of $ is the same, before and after (but not necessarily during)

Isolation
- Other transactions should not affect us
- counter-example: lost update problem. Two transactions, in time order:
  1. T1: read(N)
  2. T2: read(N)
  3. T1: N=N-1
  4. T2: N=N-1
  5. T1: write(N)
  6. T2: write(N)

Transaction states
- active
- partially committed
- failed
- committed
- aborted

Outline
- concurrency control (-> isolation)
  - ‘correct’ interleavings
  - how to achieve them
- recovery (-> durability, atomicity)

Concurrency
- why do we want it?
- Example of interleaving:
  - T1: moves $10 from savings (X) to checking (Y)
  - T2: adds 10% interest to everything

Interleaved execution
- T1: Read(X); X=X-10; Write(X); Read(Y); Y=Y+10; Write(Y)
- T2: Read(X); X=X*1.1; Write(X); Read(Y); Y=Y*1.1; Write(Y)
- the operations of T1 and T2 are interleaved: ‘correct’?

How to define correctness?
- Let’s start from something definitely correct: serial executions

Serial execution
- first T1: Read(X); X=X-10; Write(X); Read(Y); Y=Y+10; Write(Y)
- then T2: Read(X); X=X*1.1; Write(X); Read(Y); Y=Y*1.1; Write(Y)
- ‘correct’ by definition

How to define correctness?
A: Serializability:
- A schedule (= interleaving) is ‘correct’ if it is serializable, i.e., equivalent to a serial interleaving (regardless of the exact nature of the updates)
- examples and counter-examples:

Example: ‘Lost-update’ problem
- Schedule, in time order:
  1. T1: Read(N)
  2. T2: Read(N)
  3. T1: N=N-1
  4. T2: N=N-1
  5. T1: Write(N)
  6. T2: Write(N)
- not equivalent to any serial execution (why not?) -> incorrect!
- More details: ‘conflict serializability’

Conflict serializability
- r/w: e.g., object X read by Ti and written by Tj
- w/w: object X written by Ti and written by Tj
- PRECEDENCE GRAPH:
  - Nodes: transactions
  - Arcs: r/w or w/w conflicts (arc Ti -> Tj when Ti's conflicting operation comes first in the schedule)

Precedence graph (for the lost-update schedule)
- T1 -> T2 on N: T1 reads N before T2 writes N
- T2 -> T1 on N: T2 reads N before T1 writes N
- Cycle -> not serializable

Example
- Schedule, in time order:
  1. T1: Read(A) … Write(A)
  2. T3: Read(A) … Write(A)
  3. T2: Read(B) … Write(B)
  4. T1: Read(B) … Write(B)
- Precedence graph: T1 -> T3 (on A), T2 -> T1 (on B)
- serial execution?
- A: T2, T1, T3 (Notice that T3 should go after T2, although it starts before it!)
- Q: algo for generating serial execution from (acyclic) precedence graph?
- A: Topological sorting

Topological sorting
- A topological sort of a DAG G=(V,E) is a linear ordering of all its vertices such that if G contains an edge (u,v), then u appears before v in the ordering.
- …it is an ordering of the vertices along a horizontal line so that all directed edges go from left to right.
- …topologically sorted vertices appear in reverse order of their finishing times according to depth-first search (DFS)

Serializability
- Ignore ‘view serializability’
- We assume ‘no blind writes’, i.e., ‘read before write’

(counter) example: ‘Inconsistent analysis’
- Schedule, in time order:
  1. T1: Read(A); A=A-10; Write(A)
  2. T2: Read(A); Sum = A; Read(B); Sum += B
  3. T1: Read(B); B=B+10; Write(B)
- Precedence graph?

Conclusions
- ‘ACID’ properties of transactions
- recovery for ‘A’, ‘D’
- concurrency control for ‘I’
- correct schedule -> serializable
- precedence graph acyclic -> serializable
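
The precedence-graph test and the topological sort can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the slides: the function names `precedence_graph` and `serial_order`, and the encoding of a schedule as `(transaction, operation, object)` tuples with `'R'`/`'W'` operations, are assumptions made for this example. It builds the arcs from r/w and w/w conflicts, then runs a DFS and reverses the finishing order, returning `None` when a cycle is found.

```python
from collections import defaultdict


def precedence_graph(schedule):
    """Build the precedence graph of a schedule.

    schedule: list of (txn, op, obj) tuples in time order, op is 'R' or 'W'.
    Returns (nodes, edges), where edges[ti] is the set of tj with an arc ti -> tj.
    """
    nodes = []
    for txn, _, _ in schedule:
        if txn not in nodes:
            nodes.append(txn)
    edges = defaultdict(set)
    for i, (ti, op_i, obj_i) in enumerate(schedule):
        for tj, op_j, obj_j in schedule[i + 1:]:
            # Conflict: different transactions, same object, at least one write.
            if ti != tj and obj_i == obj_j and "W" in (op_i, op_j):
                edges[ti].add(tj)
    return nodes, edges


def serial_order(nodes, edges):
    """Topological sort by DFS (reverse finishing order).

    Returns an equivalent serial order, or None if the graph has a cycle.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the DFS stack, finished
    color = {n: WHITE for n in nodes}
    finished = []

    def visit(u):
        color[u] = GRAY
        for v in sorted(edges.get(u, ())):
            if color[v] == GRAY:  # back edge: cycle
                return False
            if color[v] == WHITE and not visit(v):
                return False
        color[u] = BLACK
        finished.append(u)
        return True

    for n in nodes:
        if color[n] == WHITE and not visit(n):
            return None
    return finished[::-1]


# Lost-update schedule: both transactions read N before either writes it.
lost_update = [("T1", "R", "N"), ("T2", "R", "N"),
               ("T1", "W", "N"), ("T2", "W", "N")]

# Three-transaction example: T1 on A, then T3 on A, then T2 on B, then T1 on B.
three_txn = [("T1", "R", "A"), ("T1", "W", "A"),
             ("T3", "R", "A"), ("T3", "W", "A"),
             ("T2", "R", "B"), ("T2", "W", "B"),
             ("T1", "R", "B"), ("T1", "W", "B")]

print(serial_order(*precedence_graph(lost_update)))  # -> None (cycle)
print(serial_order(*precedence_graph(three_txn)))    # -> ['T2', 'T1', 'T3']
```

The pairwise conflict scan is quadratic in the schedule length, which is fine for slide-sized examples; the point is only to show that an acyclic graph yields a serial order and a cyclic one does not.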