Maximum Likelihood Estimation


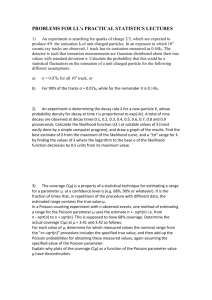

MAXIMUM LIKELIHOOD
ALISON BOWLING

GENERAL LINEAR MODEL
• $Y_i = B_0 + B_1 X_{1i} + B_2 X_{2i} + B_3 X_{3i} + \dots + B_k X_{ki} + \varepsilon_i$
• $\varepsilon_i \sim \text{i.i.d. } N(0, \sigma^2)$
• Residuals are
  • Independent and identically distributed
  • Normally distributed
  • Mean 0, variance $\sigma^2$
• What to do when the normality assumption does not hold?
  • We can fit an alternative distribution
  • This requires Maximum Likelihood methods.

ALTERNATIVE DISTRIBUTIONS
• Binomial (proportions)
  • P (event occurring), 1 − P (event not occurring)
• Poisson (count data)

MAXIMUM LIKELIHOOD
• Myung, J. (2003). Tutorial on maximum likelihood estimation. Journal of Mathematical Psychology, 47, 90–100.
• Standard approach to parameter estimation and inference in statistics
• Many of the inference methods in statistics are based on MLE.
  • Chi-square test
  • Bayesian methods
  • Modelling of random effects

PROBABILITY DISTRIBUTIONS
• Imagine a biased coin, with probability of heads w = 0.7, that is tossed 10 times.
• The probability of each possible number of heads can be computed from the binomial distribution.
• [Figure: probability of result, f(y), plotted against number of heads (0 to 10)]
• This is a probability distribution:
  • the probability of obtaining each particular outcome for 10 tosses of a coin with w = .7
  • 7 heads is more likely to occur than any other number of heads

LIKELIHOOD FUNCTION
• Suppose we don't know w, but have tossed the coin 10 times and obtained y = 7 heads.
• What is the most likely value of w?
• This may be obtained from the likelihood function.
  • This is a function of the parameter, w, given the data, y.
  • The most likely value of w is at the peak of this function.

MAXIMUM LIKELIHOOD ESTIMATION
• We are interested in finding the probability distribution that underlies the data that have been collected.
• We are consequently interested in finding the parameter value(s) that correspond to the desired probability distribution.
• The maximum likelihood estimate (MLE) is the parameter value at the maximum (peak) of the likelihood function.
• This may be obtained by setting the first derivative of the likelihood function to zero.
• To make sure this is a peak (and not a valley), the second derivative is also checked.

ITERATIVE METHOD
• For very simple scenarios, the maximum can be obtained using calculus as in the example.
• This is usually not possible, especially when the model involves many parameters.
• Instead, the maximum is found by an iterative series of trial-and-error steps.
  • Start with a value of the parameter, w, and compute the likelihood of the observed data given that value.
  • Then try another value, and see if the likelihood is higher.
  • If so, keep going.
  • Stop when the maximum is found (the solution converges).

MLE ALGORITHMS
• Different algorithms are used to obtain the result
  • EM: expectation-maximisation algorithm
  • Newton-Raphson
  • Fisher scoring
• SPSS uses both the Newton-Raphson and the Fisher scoring methods.

LOG LIKELIHOOD
• The computation of the likelihood involves multiplying the probabilities for each individual outcome.
• This can be computationally intensive.
• For this reason, the log of the likelihood is computed instead.
• Instead of the probabilities being multiplied, their logs are added.
  • log(A × B) = log A + log B
• We maximise the log of the likelihood rather than the likelihood itself, for computational convenience.

-2LL
• The log likelihood is the sum of the log probabilities associated with the predicted and actual outcomes.
• It is analogous to the residual sum of squares in OLS regression.
• The log likelihood is usually negative; the further it lies below zero, the greater the unexplained variation.
• Multiplying by -2 makes the value positive, and differences in this value between nested models approximately follow a chi-square distribution, which lets us obtain p values to compare models.
• This value is -2LL.

EVALUATING MODELS
• Using OLS we use $R^2$ to evaluate models.
  • i.e. does the addition of a predictor produce a significant increase in $R^2$?
• $R^2$ is based on Sums of Squares, which we do not have when using ML.
• We use the -2LL, Deviance, and Information Criteria to evaluate models using ML.
• Unlike $R^2$, -2LL is not meaningful in its own right.
  • It is used to compare with other models.

DEVIANCE
• Deviance is a measure of lack of fit.
  • It measures how much worse the model is than a perfectly fitting model.
• Deviance can be used to obtain a measure of pseudo-$R^2$
• $\text{pseudo-}R^2 = 1 - \dfrac{\text{deviance (fitted model)}}{\text{deviance (intercept only)}}$

LIKELIHOOD RATIO STATISTIC
• $LR = (-2LL_R) - (-2LL_F)$
  • $LL_R$ = log likelihood of the reduced model (without the parameters)
  • $LL_F$ = log likelihood of the full model (with the parameters)
• $LR \sim \chi^2_r$, where $r = df_{full} - df_{reduced}$
• $G^2$ compares the fitted model with the intercept-only model.

MAXIMUM LIKELIHOOD IN SPSS
• Logistic regression.
  • Used with a binomial outcome variable
  • E.g. yes, no; correct, incorrect; married, not married.
• Generalised Linear Models
  • Allow a range of non-linear models to be fitted.

BAR-TAILED GODWIT DATA
• Dependent variable is a count:
  • Maximum number of birds observed at each estuary for each year
• Independent variables
  • Estuary: Richmond, Hastings, Clarence, Hunter, Tweed
    • Categorical
  • Year: 1981–2014
    • Continuous (centred to 0 at 1981).
• Research question:
  • Does the number of Bar-tailed Godwits in the Richmond Estuary remain stable, or improve, compared to the other estuaries?

STEP 1: GRAPH THE DATA
It is obvious that these data have problems. Counts in the Hunter estuary are much higher than in the other estuaries, and have much greater variance.

STEP 2: DUMMY CODE THE ESTUARY DATA
Use Richmond as the comparison category. Each of the other estuaries may be compared in turn with Richmond.

| Estuary  | Clarence | Hunter | Hastings | Tweed |
|----------|----------|--------|----------|-------|
| Richmond | 0        | 0      | 0        | 0     |
| Clarence | 1        | 0      | 0        | 0     |
| Hunter   | 0        | 1      | 0        | 0     |
| Hastings | 0        | 0      | 1        | 0     |
| Tweed    | 0        | 0      | 0        | 1     |

STEP 3: RUN OLS ANALYSIS OF THE DATA
• I will just include Hunter in this analysis to illustrate.
• Model:
  • $Godwit_i = B_0 + B_1 Hunter_i + B_2 Year0_i + B_3 (Year0_i \times Hunter_i) + \varepsilon_i$
• Including just the Year0:
  • There is a non-significant change in Godwit numbers over the years.
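
Steps 2 and 3 can be sketched in code. This is only an illustration in Python, not the SPSS procedure used in these slides; the function names `dummy_code` and `design_row` and the label `HunterXYear0` are hypothetical names chosen for the sketch.

```python
# Illustrative sketch only: names below are assumptions, not SPSS output.
ESTUARIES = ["Richmond", "Clarence", "Hunter", "Hastings", "Tweed"]
REFERENCE = "Richmond"  # comparison category: all of its dummies are 0
BASE_YEAR = 1981        # Year0 is centred to 0 at 1981


def dummy_code(estuary):
    """Return one 0/1 indicator for each non-reference estuary."""
    if estuary not in ESTUARIES:
        raise ValueError(f"unknown estuary: {estuary}")
    return {name: int(name == estuary)
            for name in ESTUARIES if name != REFERENCE}


def design_row(estuary, year):
    """Predictors for the Step 3 model: Hunter, Year0 and their product."""
    hunter = dummy_code(estuary)["Hunter"]
    year0 = year - BASE_YEAR
    return {"Hunter": hunter, "Year0": year0, "HunterXYear0": hunter * year0}


# Richmond is the reference, so every indicator is 0.
richmond_codes = dummy_code("Richmond")
# A Hunter observation from 1991 has Year0 = 10 and interaction = 10.
hunter_1991 = design_row("Hunter", 1991)
```

Because Richmond is all zeros, the intercept and the Year0 slope describe Richmond, and the Hunter terms describe how Hunter differs from it.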
OLS DATA ANALYSIS
• Including the estuary and estuary * Year0 interaction.
• There is a significant increase in $R^2$ when Hunter and the Hunter * Year0 interaction are included in the model.

INTERPRETATION OF THE FULL MODEL
• At Year0 = 0, the predicted Godwit count for Richmond = 292 birds
• Change in numbers over the years for Richmond = -4.4
• At Year0 = 0, difference between numbers in the Hunter and Richmond = 1449.7 (p < .001)
• Over 24 years, difference in rate of change for Hunter, compared with Richmond, is -15.2 (p = .031)
  • i.e. there is a steeper decline in bird numbers in the Hunter estuary than in the Richmond estuary.

CHECKING RESIDUALS
• Residuals are not normally distributed.
• The assumptions for a linear model are not met!

WHAT TO DO?
• We could try a transformation of the DV
  • A square root transformation is better, but not perfect
• We could use a non-linear model
  • The data are counts, and we could use either a Poisson or Negative Binomial distribution
  • We will use a Negative Binomial (for reasons that will be explained later)
  • Use Generalized Linear Models for the analysis.

INTERCEPT ONLY MODEL
• No predictors are included, and the model simply tests whether the overall number of BT Godwits is different to zero.
• The log likelihood is -827.26
• -2LL = 1654.53

MODEL WITH THREE PARAMETERS
• Running the model including Year0, Hunter and Hunter*Year0 gives the following Goodness of Fit measures
  • Log likelihood = -781.3
  • -2LL = 1562.6

COMPARING THE TWO MODELS
• -2LL for intercept only model = 1654.53
• -2LL for full model (with parameters) = 1562.6
• Likelihood ratio ($G^2$) = 1654.5 − 1562.6 = 91.9
• df = 3, p < .001
• Therefore the model including the three parameters is a better fit to the data than the intercept only model.
• Limitations:
  1. The models must be nested (one model must be contained within the other)
  2. Data sets must be identical

INFORMATION CRITERIA
• Akaike's Information Criterion: AIC = -2LL + 2k
• Schwarz's Bayesian Criterion: BIC = -2LL + k ln(N)
  • k = number of parameters
  • N = number of participants
• Can be used with non-nested models
• These IC are similar to adjusted $R^2$
  • The more parameters you have, the better a model is likely to fit the data.
  • The IC take this into account by penalising for additional parameters and/or participants.
• Better fitting models have lower values of the IC.

ANALYSIS OF COUNT DATA
• Coxe, S., West, S.G. and Aiken, L. (2009). The analysis of count data: a gentle introduction to Poisson regression and its alternatives. Journal of Personality Assessment, 91, 121–136.
  • Poisson regression
  • Overdispersed Poisson regression models
  • Negative binomial regression models
  • Models which address problems with zeros.

ANALYSIS OF COUNT DATA
• Count data are discrete numbers
• Usually not normally distributed.
• E.g. number of drinks on a Saturday night.
• Modelled by a Poisson distribution.
  • This has one parameter, $\mu$.
  • $P(Y = y) = \dfrac{\mu^{y} e^{-\mu}}{y!}$

POISSON MODEL
• $\ln(\mu) = B_0 + B_1 X_1 + B_2 X_2 + B_3 X_3 + \dots + B_k X_k$
• Assumptions: $(Y \mid X) \sim \text{Poi}(\mu)$, $\text{Var}(Y \mid X) = \phi\mu$, $\phi = 1$
• i.e. given the predictors, the counts have a Poisson distribution around the predicted mean.

EXAMPLE: DRINKS DATA
• Coxe et al. Poisson dataset in SPSS format.
• Sensation: mean score on a sensation seeking scale (1-7)
• Gender (0 = female, 1 = male)
• Y: number of drinks on a Saturday night.

OLS REGRESSION
• Intercept < 0
  • When sensation = 0, the predicted number of drinks is negative!
• Residuals are not normally distributed.
• OLS has problems!

POISSON REGRESSION: PARAMETERS
• Sensation only
• When sensation = 0, drinks = $e^{-.14}$ = .86
• For every 1 unit change in sensation, number of drinks is multiplied by $e^{.231}$ = 1.26.
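
The interpretation of the sensation-only coefficients can be checked with a few lines of Python. This is a minimal sketch that takes the two rounded coefficients reported on the slide as given; the names `B0`, `B1`, `expected_drinks`, `rate_ratio` and `poisson_pmf` are labels invented for the illustration.

```python
import math

# Rounded coefficients as reported on the slide (sensation-only model).
B0 = -0.14   # intercept, on the log scale
B1 = 0.231   # change in log(expected drinks) per unit of sensation


def expected_drinks(sensation):
    """Expected count under the log link: mu = exp(B0 + B1 * sensation)."""
    return math.exp(B0 + B1 * sensation)


def rate_ratio(coefficient):
    """Multiplicative change in the expected count per one-unit increase."""
    return math.exp(coefficient)


def poisson_pmf(y, mu):
    """P(Y = y) = mu**y * exp(-mu) / y! for a Poisson variable with mean mu."""
    return mu ** y * math.exp(-mu) / math.factorial(y)


# About 0.87 from the rounded intercept; the slide reports .86,
# presumably computed from the unrounded coefficient.
at_zero = expected_drinks(0)
# About 1.26: each extra unit of sensation multiplies the expected count.
ratio = rate_ratio(B1)
```

Because the model is linear on the log scale, effects are multiplicative on the count scale: `expected_drinks(x + 1) / expected_drinks(x)` equals `rate_ratio(B1)` for any `x`.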
POISSON REGRESSION: MODEL FIT
• Sensation only: Model fit
  • $G^2$ = 35.07
  • Model fits better than the intercept only model
• Deviance = 1151
• -2LL = -2 × (-1037.5) = 2075
• BIC = 2087
• Deviance for the intercept-only model = 1186 (check)
• $\text{Pseudo-}R^2 = 1 - \dfrac{\text{deviance (sensation)}}{\text{deviance (intercept only)}} = 1 - \dfrac{1151}{1186} = .03$

POISSON REGRESSION: PARAMETERS
• Sensation and Gender as predictors
• What is the effect of gender on number of drinks consumed (holding sensation constant)?

EFFECT OF GENDER
• Intercept = -.789 (for gender = 0; female)
  • Exp(-.789) = .45
  • Females are predicted to drink .45 drinks on a Saturday night (when sensation seeking = 0)
• B = .839 (gender = 1: male)
  • Exp(.839) = 2.3
  • Males drink 2.3 times as many drinks as females (at the same level of sensation seeking).

POISSON REGRESSION: MODEL FIT
• -2LL = -2 × (-941.4) = 1882.8
• BIC = 1900.77
• Model including gender is a substantially better fit than the sensation model alone
  • (1900 vs 2087)
• $\text{Pseudo-}R^2 = 1 - \dfrac{\text{deviance (sensation and gender)}}{\text{deviance (intercept only)}} = 1 - \dfrac{959.5}{1186} = .19$

MODEL ADEQUACY
• Save deviance residuals and predicted values, and plot the residuals against predicted values.

OVERDISPERSION
• A Poisson distribution has only one parameter, $\mu$, which is both the mean and the variance of the distribution.
• Often the variance of a set of data is greater than the mean
  • The data are overdispersed.

OVERDISPERSED POISSON REGRESSION MODELS
• A second parameter, $\phi$, is estimated to scale the variance.
• The parameter estimates from the overdispersed model are the same as with the simple model, but standard errors are larger.
• Use information criteria to compare models

NEGATIVE BINOMIAL MODELS
• Negative binomial models use a Poisson distribution, but allow for individuals to vary in the distribution fitted.

HOMEWORK
• Use PGSI Data.sav (Leigh's Honours data)
  • DV = PGSI (Score on Problem Gambling Severity Scale)
  • Predictors = GABS, FreqCoded
• Run a Poisson regression to predict PGSI from GABS
• Does GABS significantly predict PGSI score?
  • Look at the likelihood ratio ($G^2$)
• Interpret the coefficients for the intercept and GABS
• Run a second regression including FreqCoded (as a continuous variable) in the model.
• Does this second predictor improve the model fit?
  • (hint: look at the BIC for the two models)
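
When checking output by hand, the model-comparison quantities used in these slides can be computed with a short Python sketch. It is an illustration under stated assumptions rather than what SPSS does internally: all function names are hypothetical, and `chi2_sf` is a hand-rolled upper-tail chi-square probability that assumes integer df. The worked numbers are the Godwit and drinks values quoted in the slides.

```python
import math


def minus2ll(log_likelihood):
    """-2LL from a log likelihood."""
    return -2.0 * log_likelihood


def likelihood_ratio(minus2ll_reduced, minus2ll_full):
    """G2: drop in -2LL when moving from the reduced to the full model."""
    return minus2ll_reduced - minus2ll_full


def chi2_sf(x, df):
    """P(X > x) for a chi-square variable with integer df (assumption)."""
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    # Start from df = 2 (exp(-x/2)) or df = 1 (erfc(sqrt(x/2))), then add
    # one term per extra 2 df using Q(a+1, z) = Q(a, z) + z**a e**-z / Gamma(a+1).
    if df % 2 == 0:
        a, q = 1.0, math.exp(-half)
    else:
        a, q = 0.5, math.erfc(math.sqrt(half))
    while a < df / 2.0:
        q += half ** a * math.exp(-half) / math.gamma(a + 1.0)
        a += 1.0
    return q


def aic(m2ll, k):
    """AIC = -2LL + 2k, with k parameters."""
    return m2ll + 2 * k


def bic(m2ll, k, n):
    """BIC = -2LL + k ln(N), with k parameters and N participants."""
    return m2ll + k * math.log(n)


def pseudo_r2(deviance_fitted, deviance_intercept_only):
    """1 - deviance(fitted) / deviance(intercept only)."""
    return 1.0 - deviance_fitted / deviance_intercept_only


# Godwit example: intercept-only vs three-parameter model.
g2 = likelihood_ratio(1654.53, 1562.6)   # about 91.9
p_value = chi2_sf(g2, 3)                 # far below .001

# Drinks example: pseudo-R2 for sensation + gender.
r2 = pseudo_r2(959.5, 1186)              # about .19
```

For the homework, the same helpers apply: take the log likelihoods and deviances from the two PGSI models, then compare $G^2$ and BIC (lower BIC indicates the better-fitting model).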