ee3.cma - Computer Architecture


EE3004 (EE3.cma) - Computer Architecture

Roger Webb

R.Webb@surrey.ac.uk

University of Surrey

http://www.ee.surrey.ac.uk/Personal/R.Webb/l3a15

also link from Teaching/Course page

3/16/2016

EE3.cma - Computer Architecture

1

Introduction

Book List

Computer Architecture - Design & Performance

Barry Wilkinson, Prentice-Hall 1996

(nearest to course)

Advanced Computer Architecture

Richard Y. Kain, Prentice-Hall 1996

(good for multiprocessing + chips + memory)

Computer Architecture

Behrooz Parhami, Oxford Univ Press, 2005

(good for advanced architecture and Basics)

Computer Architecture

Dowsing & Woodhouse

(good for putting the bits together..)

Microprocessors & Microcomputers - Hardware & Software

Ambrosio & Laskowski

(good for DRAM, SRAM timing diagrams etc.)

Computer Architecture & Design

Van de Goor

(for basic Computer Architecture)

Wikipedia is as good as anything...!


Introduction

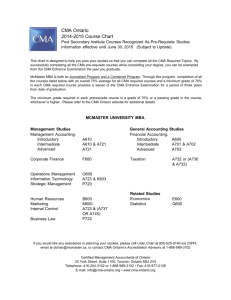

Outline Syllabus

Memory Topics

• Memory Devices

• Interfacing/Graphics

• Virtual Memory

• Caches & Hierarchies

Instruction Sets

• Properties & Characteristics

• Examples

• RISC v CISC

• Pipelining & Concurrency

Parallel Architectures

• Performance Characteristics

• SIMD (vector) processors

• MIMD (message-passing)

• Principles & Algorithms


Computer Architectures - an overview

What are computers used for?

3 ranges of product cover the majority of processor

sales:

• Appliances (consumer electronics)

• Communications Equipment

• Utilities (conventional computer systems)


Computer Architectures - an overview

Consumer Electronics

This category covers a huge range of processor performance

• Micro-controlled appliances

– washing machines, time switches, lamp dimmers

– lower end, characterised by:

• low processing requirements

• microprocessor replaces logic in small package

• low power requirements

• Higher Performance Applications

– Mobile phones, printers, fax machines, cameras, games

consoles, GPS, TV set-top boxes, video/DVD/HD

recorders…...

• High bandwidth - 64-bit data bus

• Low power - to avoid cooling

• Low cost - < $20 for the processor

• Small amounts of software - small cache (tight program loops)

Computer Architectures - an overview

Communications Equipment

has become the major market – WWW, mobile comms

• Main products containing powerful processors are:

– LAN products - bridges, routers, controllers in computers

– ATM exchanges

– Satellite & Cable TV routing and switching

– Telephone networks (all-digital)

• The main characteristics of these devices are:

– Standardised application (IEEE, CCITT etc.) - means

competitive markets

– High bandwidth interconnections

– Wide processor buses - 32 or 64 bits

– Multi-processing (either per-box, or in the distributed

computing sense)


Computer Architectures - an overview

Utilities (Conventional Computer Systems)

Large scale computing devices will, to some extent, be replaced by

greater processing power on the desk-top.

• But some centralised facilities are still required, especially

where data storage is concerned

– General-purpose computer servers; supercomputers

– Database servers - often safer to maintain a central corporate

database

– File and printer servers - again simpler to maintain

– Video on demand servers

• These applications are characterised by huge memory

requirements and:

– Large operating systems

– High sustained performance over wide workload variations

– Scalability - as workload increases

– 64 bit (or greater) data paths, multiprocessing, large caches


Computer Architectures - an overview

Computer System Performance

• Most manufacturers quote performance of their processors in terms of

the peak rate - MIPS (MOPS) or MFLOPS.

• Most of the applications above depend on the continuous supply of

data or results - especially for video images

• Thus the critical criterion is the sustained throughput of instructions

– (MPEG image decompression algorithm requires 1 billion

operations per second for full-quality widescreen TV)

– Less demanding VHS quality requires 2.7Mb per second of

compressed data

– Interactive simulations (games etc) must respond to a user input

within 100ms - re-computing and displaying the new image

• Important measures are:

– MIPS per dollar

– MIPS per Watt


Computer Architectures - an overview

User Interactions

Consider how we interact with our computers:

[Figure: % of CPU time spent managing interaction (0 to 100) plotted against year (1955 to 2005). Interface styles in order of increasing CPU share: Lights & Switches; Punched Card & Tape; Timesharing; Menus, Forms; WYSIWYG, Mice, Windows; Virtual Reality, Cyberspace.]

What does a typical CPU do?

• 70% User interface; I/O processing

• 20% Network interface; protocols

• 9% Operating system; system calls

• 1% User application

Computer Architectures - an overview

Sequential Processor Efficiency

The current state-of-the-art of large microprocessors includes:

• 64-bit memory words, using interleaved memory

• Pipelined instructions

• Multiple functional units (integer, floating point, memory

fetch/store)

• 5 GHz practical maximum clock speed

• Multiple processors

• Instruction set organised for simple decoding (RISC?)

However as word length increases, efficiency may drop:

• many operands are small (16 bit is enough for many VR tasks)

• many literals are small - loading 00….00101 as 64 bits is a waste

• may be worth operating on several literals per word in parallel


Computer Architectures - an overview

Example - reducing the number of instructions

Perform a 3D transformation of a point (x,y,z) by multiplying the 4-element

matrix (x,y,z,1) by a 4x4 transformation matrix A. All operands are 16-bits

long.

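$$
\begin{pmatrix} x & y & z & 1 \end{pmatrix}
\begin{pmatrix}
a & b & c & d \\
e & f & g & h \\
i & j & k & l \\
m & n & o & p
\end{pmatrix}
=
\begin{pmatrix} x' & y' & z' & r \end{pmatrix}
$$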

Conventionally this requires 20 loads, 16 multiplies, 12 adds and 4 stores, using

16-bit operands on a 16-bit CPU.

On a 64-bit CPU with instructions dealing with groups of four parallel 16-bit

operands, as well as a modest amount of pipelining, all this can take just 7

processor cycles.
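The idea of operating on four 16-bit operands inside one 64-bit word can be sketched in software. The following is a minimal illustration only, assuming unsigned 16-bit lanes that wrap modulo 2^16; the helper names (`pack`, `lane_mul_scalar`, `lane_add`, `transform`) are hypothetical and do not correspond to any particular instruction set. Each row of the matrix is held in one packed word, so the whole transform needs four lane-wise multiplies and three lane-wise adds.

```python
MASK16 = 0xFFFF   # one 16-bit lane
LANES = 4         # four lanes fill a 64-bit word


def pack(lanes):
    """Pack four 16-bit values into one 64-bit word (lane 0 in the low bits)."""
    word = 0
    for i, value in enumerate(lanes):
        word |= (value & MASK16) << (16 * i)
    return word


def unpack(word):
    """Split a 64-bit word back into its four 16-bit lanes."""
    return [(word >> (16 * i)) & MASK16 for i in range(LANES)]


def lane_mul_scalar(word, scalar):
    """Multiply every lane by the same scalar, wrapping each lane to 16 bits."""
    return pack([(lane * scalar) & MASK16 for lane in unpack(word)])


def lane_add(a, b):
    """Add two packed words lane by lane, with no carry between lanes."""
    return pack([(x + y) & MASK16 for x, y in zip(unpack(a), unpack(b))])


def transform(point, rows):
    """Compute (x, y, z, 1) * A, where rows holds the four packed rows of A."""
    x, y, z = point
    acc = lane_mul_scalar(rows[0], x)
    acc = lane_add(acc, lane_mul_scalar(rows[1], y))
    acc = lane_add(acc, lane_mul_scalar(rows[2], z))
    acc = lane_add(acc, lane_mul_scalar(rows[3], 1))
    return unpack(acc)


# Example matrix (an assumption for illustration): translation by (5, 6, 7).
A = [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 1, 0],
     [5, 6, 7, 1]]
rows = [pack(r) for r in A]
result = transform((1, 2, 3), rows)
print(result)  # [6, 8, 10, 1]
```

Real hardware would perform each lane-wise operation in a single instruction; the Python loops here only model the arithmetic.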


Computer Architectures - an overview

The Effect of Processor Intercommunication Latency

In a multiprocessor, and even in a uniprocessor, the delays associated with

communicating and fetching data (latency) can dominate the processing times.

Consider:

[Figure: a symmetrical multiprocessor (several CPUs and memories joined by an interconnection network) alongside a uniprocessor (CPU, cache and memory).]

Delays can be minimised by placing components closer together and:

• Add caches to provide local data storage

• Hide latency by multi-tasking - needs fast context switching

• Interleave streams of independent instructions - scheduling

• Run groups of independent instructions together (each ending with long latency

instruction)


Computer Architectures - an overview

Memory Efficiency

Quote from 1980s “Memory is free”

By the 2000s the cost per bit is no longer falling so fast and

consumer electronics market is becoming cost sensitive

Renewed interest in compact instruction sets and data

compactness - both from the 1960s and 1970s

Memory cost: 1977 - £3000/Mb; 1994 - £4/Mb; Now - <1p/Mb

Instruction Compactness

RISC CPUs have a simple register-based instruction encoding

• Can lead to codebloat - as can poor coding and compiler design

• Compactness gets worse as the word size increases

e.g. INMOS (1980s) transputer had a stack based register scheme

• needed 60% of the code of an equivalent register based cpu

• led to smaller cache needs for instruction fetches & data


Computer Architectures - an overview

Cache Efficiency

• Designer should aim to optimise the instruction

performance whilst using the smallest cache possible

• Hiding latency (using parallelism & instruction

scheduling) is an effective alternative to minimising it

(by using large caches)

• Instruction scheduling can initiate cache pre-fetches

• Switch to another thread if the cache is not ready to

supply data for the current one

• In video and audio processing, especially, unroll the

inner code loops – loop unrolling (more on that later)
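As a rough illustration of loop unrolling, the sketch below scales a buffer of samples, first with a plain loop and then with the body repeated four times per iteration. The function names and the unroll factor of 4 are assumptions chosen for the example; in practice a compiler or hand-written assembly would do this, and Python itself gains little from it.

```python
def scale_rolled(samples, gain):
    """Plain loop: one multiply and one loop test per sample."""
    out = [0] * len(samples)
    for i in range(len(samples)):
        out[i] = samples[i] * gain
    return out


def scale_unrolled4(samples, gain):
    """Unrolled by four: one loop test per four samples."""
    n = len(samples)
    out = [0] * n
    i = 0
    # Main body handles four samples per iteration.
    while i + 4 <= n:
        out[i] = samples[i] * gain
        out[i + 1] = samples[i + 1] * gain
        out[i + 2] = samples[i + 2] * gain
        out[i + 3] = samples[i + 3] * gain
        i += 4
    # Clean-up loop handles the 0 to 3 samples left over.
    while i < n:
        out[i] = samples[i] * gain
        i += 1
    return out


data = list(range(10))
print(scale_unrolled4(data, 3) == scale_rolled(data, 3))  # True
```

The unrolled body is longer but predictable, which fits the point above about sizing the cache for known inner loops.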


Computer Architectures - an overview

Predictable Codes

In many applications (e.g. video and audio processing) much is

known about the code which will be executed. Techniques

which are suitable for these circumstances include:

• Partition the cache separately for code and different data

structures

• The cache requirements of the inner code loops can be predetermined, so cache usage can be optimised

• Control the amounts of a data structure which are cached

• Prevent interference between threads by careful scheduling

• Notice that a conventional cache’s contents are destroyed by a

single block copy instruction


Computer Architectures - an overview

Processor Engineering Issues

• Power consumption must be minimised (to simplify on-chip and in-box cooling issues)

– Use low-voltage processors (2V instead of 3.3V)

– Don’t over-clock the processor

– Design logic carefully to avoid propagation of redundant signals

– Tolerance of latency allows lower performance (cheaper)

subsystems to be used

– Explicit subsystem control allows subsystems to be powered down

when not in use

– Eliminate redundant actions - eg speculative pre-fetching

– Provide non-busy synchronisation to avoid the need for spin-locks

• Battery design is advancing slowly - power stored per unit weight or

volume will quadruple (over NiCd) within 5-10 years


Computer Architectures - an overview

Processor Engineering Issues

• Speed to market is increasing, so processor design is becoming

critical. Consider the time for several common devices to

become established:

– 70 years: Telephone (0% to 60% of households)

– 40 years: Cable Television

– 20 years: Personal Computer

– 10 years: Video Recorders

– <10 years: Web based video

• Modularity and common processor cores provide design

flexibility

– reusable cache and CPU cores

– product-specific interfaces and co-processors

– common connection schemes


Computer Architectures - an overview

Interconnect Schemes

Wide data buses are a problem:

• They are difficult to route on printed circuit boards

• They require huge numbers of processor and memory pins

(expensive to manufacture on chips and PCBs)

• Clocking must accommodate the slowest bus wire.

• Parallel back-planes add to loading and capacitance,

slowing signals further and increasing power consumption

Serial chip interconnects offer 1Gbit/s performance using just a

few pins and wires. Can we use a packet routing chip as a

back-plane?

• Processors, memories, graphic devices, networks, slow

external interfaces all joined to a central switch


Memory Devices

Regardless of scale of computer the memory is similar.

Two major types:

• Static

• Dynamic

Larger memories get cheaper as production increases and

smaller memories get more expensive - you pay more for

less!

See:

http://www.educypedia.be/computer/memoryram.htm

http://www.kingston.com/tools/umg/default.asp

http://www.ahinc.com/hhmemory.htm


Memory Devices

Static Memories

• made from static logic elements - an array of flip-flops

• don’t lose their stored contents until clocked again

• may be driven as slowly as needed - useful for single

stepping a processor

• Any location may be read or written independently

• Reading does not require a re-write afterwards

• Writing data does not require the row containing it to be

pre-read

• No housekeeping actions are needed

• The address lines are usually all supplied at the same time

• Fast - 15ns was possible in Bipolar and 4-15ns in CMOS

Not used anymore – too much power for little gain in speed


Memory Devices

[Figure: HM6264 - 8K*8 static RAM organisation. Blocks: Row Decoder, Memory Matrix (256x256), Column I/O, Column Decoder, Input Data Control, Timing Pulse Generator, Read Write Control. Pins: address inputs, I/O0 to I/O7, CS1, CS2, WE, OE, Vcc, Gnd.]

Memory Devices

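HM6264 - 8K*8 static RAM organisation

HM6264 Read Cycle

[Figure: read cycle timing diagram showing Address, CS1, CS2, OE and Dout (Data Valid), annotated with tRC, tAA, tCO1, tCO2, tOE, tLZ1, tLZ2, tOLZ, tHZ1, tHZ2, tOHZ and tOH.]

| Item | Symbol | min | max | Unit |
|---|---|---|---|---|
| Read Cycle Time | tRC | 100 | - | ns |
| Address Access Time | tAA | - | 100 | ns |
| Chip Selection to Output (CS1) | tCO1 | - | 100 | ns |
| Chip Selection to Output (CS2) | tCO2 | - | 100 | ns |
| Output Enable to Output Valid | tOE | - | 50 | ns |
| Chip Selection to Output in Low Z (CS1) | tLZ1 | 10 | - | ns |
| Chip Selection to Output in Low Z (CS2) | tLZ2 | 10 | - | ns |
| Output Enable to Output in Low Z | tOLZ | 5 | - | ns |
| Chip Deselection to Output in High Z (CS1) | tHZ1 | 0 | 35 | ns |
| Chip Deselection to Output in High Z (CS2) | tHZ2 | 0 | 35 | ns |
| Output Disable to Output in High Z | tOHZ | 0 | 35 | ns |
| Output Hold from Address Change | tOH | 10 | - | ns |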

Memory Devices

HM6264 - 8K*8 static RAM organisation

HM6264 Write Cycle

[Figure: write cycle timing diagram showing Address, CS1, CS2, OE, WE, Dout and Din (data sampled by memory), annotated with tWC, tCW, tAS, tAW, tWP, tWR1, tWR2, tOHZ, tDW and tDH.]

| Item | Symbol | min | max | Unit |
|---|---|---|---|---|
| Write Cycle Time | tWC | 100 | - | ns |
| Chip Selection to End of Write | tCW | 80 | - | ns |
| Address set up time | tAS | 0 | - | ns |
| Address valid to End of Write | tAW | 80 | - | ns |
| Write Pulse Width | tWP | 60 | - | ns |
| Write Recovery Time (CS1, WE) | tWR1 | 5 | - | ns |
| Write Recovery Time (CS2) | tWR2 | 15 | - | ns |
| Write to Output in High Z | tWHZ | 0 | 35 | ns |
| Data to Write Time Overlap | tDW | 40 | - | ns |
| Data Hold from Write Time | tDH | 0 | - | ns |
| Output Enable to Output in High Z | tOHZ | 0 | 35 | ns |
| Output Active from End of Write | tOW | 5 | - | ns |

Memory Devices

Dynamic Memories

• information stored on a capacitor - discharges with time

• Only one transistor required to control - 6 for SRAM

• must be refreshed (0.1-0.01 pF needs refresh every 2-8ms)

• memory cells are organised so that cells can be refreshed a

row at a time to minimise the time taken

• row and column organisation lends itself to multiplexed row

and column addresses - fewer pins on chip

• Use RAS and CAS to latch row and column addresses

sequentially

• DRAM consumes high currents when switching transistors

(1024 columns at a time). Can cause nasty voltage transients


Memory Devices

[Figure: HM50464 - 64K*4 dynamic RAM organisation. Blocks: Memory Arrays 1 to 4 with X Decoders and Y Decoders, X Address and Y Address buffers fed from address inputs Ai, RAS Clock, CAS Clock, WE Clock, OE Clock, R/W Switch, Input Buffer and Output Buffer (I/O 1-4), Refresh Address Counter. Inset: dynamic memory cell with row select and bit line.]

Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

HM50464 Read Cycle

[Figure: read cycle timing diagram showing RAS, CAS, Address (row, then column), WRITE, OE and IO (valid output).]

Dynamic memory read operation is as follows

• The memory read cycle starts by setting all bit lines (columns) to a suitable sense voltage - pre-charging

• The required row address is applied and RAS (row address strobe) is asserted

• The selected row is decoded and opens transistors (one per column). This dumps their capacitors' charge into high feedback amplifiers which recharge the capacitors - RAS must remain low

• Simultaneously the column address is applied and CAS is asserted. The requested bits are decoded and gated to the output, which is driven off-chip when OE is active


Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

HM50464 Write Cycle

[Figure: Early Write Cycle timing diagram showing RAS, CAS, Address (row, then column), WRITE and IO (valid input).]

Similar to the read cycle, except that the fall of WRITE signals the time to latch

input data.

During the “Early Write” cycle WRITE falls before CAS, which ensures

that the memory device keeps its data outputs disabled (otherwise, when CAS

goes low, they could output data!)

Alternatively, in a “Late Write” cycle the sequence is reversed and the OE

line is kept high - this can be useful in common address/data bus

architectures


Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

Refresh Cycle

For a refresh no output is needed. A read with a valid RAS and row

address pulls the data out; all we need to do is put it back again by de-asserting RAS.

This needs to be repeated for all 256 rows (on the HM50464) every 4ms.

There is an on chip counter which can be used to generate refresh

addresses.
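The refresh requirement above translates into a simple time budget. The sketch below works it through for 256 rows every 4ms; the time taken by one row refresh (`ROW_REFRESH_CYCLE_S`, set to 200ns) is a hypothetical value chosen only for illustration and is not taken from the HM50464 data.

```python
ROWS = 256                    # rows to refresh (from the text)
REFRESH_PERIOD_S = 4e-3       # every row must be refreshed within 4 ms
ROW_REFRESH_CYCLE_S = 200e-9  # assumed duration of one row refresh

# If refreshes are spread evenly, one row is refreshed this often.
interval_per_row_s = REFRESH_PERIOD_S / ROWS

# Fraction of memory time lost to refresh under the assumed cycle time.
refresh_overhead = ROWS * ROW_REFRESH_CYCLE_S / REFRESH_PERIOD_S

print(f"one row refresh every {interval_per_row_s * 1e6:.3f} us")
print(f"refresh overhead {refresh_overhead * 100:.2f}% of memory time")
```

With these figures a row refresh is due roughly every 15.6 microseconds, and the overhead stays close to 1% of the available memory cycles.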

Page Mode Access [“Fast Page Mode DRAM”] – standard DRAM

The RAS cycle time is relatively long so optimisations have been made

for common access patterns

The row address is supplied just once and latched with RAS. Then column

addresses are supplied and latched using CAS, and data is written or read using WRITE

or OE. CAS and the column address can then be cycled to access other bits in the

same row. The cycle ends when RAS goes high again.

Care must be taken to continue to refresh the other rows of memory at the

specified rate if needed


Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

[Figure: Page Mode DRAM access - nibble and static column mode are similar. Timing diagram showing RAS, CAS, Address (row, then three column addresses) and IO (one data word per column address).]

Nibble Mode

Rather than supplying the second and subsequent column addresses they

can be calculated by incrementing the initial address - first column

address stored in register when CAS goes low then incremented and

used in next low CAS transition - less common than Page Mode.

Static Column Mode

Column addresses are treated statically and when CAS is low the outputs

are read if OE is low as well. If the column address changes the outputs

change (after a propagation delay). The frequency of address changes

can be higher as there is no need to have an inactive CAS time


Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

[Figure: Extended Data Out DRAM access. Timing diagram showing RAS, CAS, Address (row, then three column addresses), OE and IO (three data words).]

Extended Data Out Mode (“EDO DRAM”)

EDO DRAM is very similar to page mode access, except that the data bus

outputs are controlled exclusively by the OE line. CAS can therefore be

taken high and low again without the data from the previous word being

removed from the data bus, so data can be latched by the processor whilst

a new column address is being latched by the memory. Overall cycle times

can be shortened.


Memory Devices

HM50464 - 64K*4 dynamic RAM organisation

[Figure: Simplified SDRAM burst read access. Timing diagram showing Clock, Command (Act, NOP, NOP, read, NOP, NOP, NOP, NOP, PChg, NOP, NOP, Act), Address (row, then col and bank, then the next row) and IO (D0 to D3). Annotations: activate DRAM row; read from column no. (3 cycle latency); read burst (4 words).]

Synchronous DRAM (“SDRAM”)

Instead of asynchronous control signals, SDRAMs accept one command

in each clock cycle. The different stages of an access are initiated by separate

commands (initial row address, reading etc.) and are all pipelined, so that a read

might not return a word for 2 or 3 cycles

Bursts of accesses to sequential words within a row may be requested by

issuing a burst-length command. Subsequent read or write

requests then operate in units of the burst length


Memory Devices

Summary DRAMs

• A whole row of the memory array must be read

• After reading the data must be re-written

• Writing requires the data to be read first (whole row has to be

stored if only a few bits are changed)

• Cycle time a lot slower than static RAM

• Address lines are multiplexed - saves package pin count

• Fastest DRAM commonly available has access time of

~60ns but a cycle time of 121ns

• DRAMs consume more current

• SDRAMS replace the asynchronous control mechanisms

Cycles Required

| Memory Type | Word 1 | Word 2 | Word 3 | Word 4 |
|---|---|---|---|---|
| DRAM | 5 | 5 | 5 | 5 |
| Page-Mode DRAM | 5 | 3 | 3 | 3 |
| EDO DRAM | 5 | 2 | 2 | 2 |
| SDRAM | 5 | 1 | 1 | 1 |
| SRAM | 2 | 1 | 1 | 1 |
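To compare the memory types over a four-word access, the per-word cycle counts can simply be summed. The sketch below takes them as 5-5-5-5 for plain DRAM, 5-3-3-3 for page mode, 5-2-2-2 for EDO, 5-1-1-1 for SDRAM and 2-1-1-1 for SRAM; that reading of the summary table is an assumption, and the dictionary name `CYCLES` is made up for the example.

```python
# Cycles needed for words 1 to 4 of a sequential access, per memory type.
CYCLES = {
    "DRAM": [5, 5, 5, 5],
    "Page-Mode DRAM": [5, 3, 3, 3],
    "EDO DRAM": [5, 2, 2, 2],
    "SDRAM": [5, 1, 1, 1],
    "SRAM": [2, 1, 1, 1],
}

# Total cycles for the four-word burst.
totals = {name: sum(cycles) for name, cycles in CYCLES.items()}

# Speed-up relative to plain DRAM for the same four words.
speedup = {name: totals["DRAM"] / total for name, total in totals.items()}

for name in CYCLES:
    print(f"{name:15s} {totals[name]:2d} cycles  x{speedup[name]:.2f}")
```

The first word costs the same for every DRAM variant; the gains all come from the second and later words in the same row.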


Memory Interfacing

Interfacing

Most processors rely on external memory

The unit of access is a word carried along the Data Bus

Ignoring caching and virtual memory, all memory belongs to

a single address space.

Addresses are passed on the Address Bus

Hardware devices may respond to particular addresses - Memory Mapped devices

External memory is a collection of memory chips.

All memory devices are joined to the same data bus

Main purpose of the addressing logic is to ensure only one

memory device is activated during each cycle


Memory Interfacing

Interfacing

The Data Bus has n lines - n = 8,16,32 or 64

The Address Bus has m lines - m = 16,20,24, 32 or 64

providing 2^m words of memory

The Address Bus is used at the beginning of a cycle and the

Data Bus at the end

It is therefore possible to multiplex (in time) the two buses

This can create all sorts of timing complications, but the benefit of a

reduced processor pin count makes it relatively common

Processor must tell memory subsystem what to do and when

to do it

Can do this either synchronously or asynchronously


Memory Interfacing

Interfacing

synchronously

• processor defines the duration of a memory cycle

• provides control lines for begin and end of cycle

• most conventional

• the durations and relationships might be determined at boot

time (available in 1980’s in the INMOS transputer)

asynchronously

• processor starts cycle, memory signals end of cycle

• Error recovery is needed - if non-existent memory is

accessed (Bus Error)


Memory Interfacing

Interfacing

synchronous memory scheme control signals

• Memory system active

– goes active when the processor is accessing external

memory.

– Used to enable the address decoding logic

• provides one active chip select to a group of chips

• Read Memory

– says the processor is not driving the data bus

– selected memory can return data to the data bus

– usually connected to the output enable (OE) of memory


Memory Interfacing

Interfacing

synchronous memory scheme control signals (cont’d)

• Memory Write

– indicates data bus contains data which selected memory

device should store

– different processors use leading or trailing edges of signal

to latch data into memory

– Processors with data bus wider than 8 bits have separate

memory write byte signal for each byte of data

– Memory write lines connected to write lines of memories

• Address Latch Enable (in multiplexed address machines)

– tells the addressing logic when to take a copy of the address

from multiplexed bus so processor can use it for data later

• Memory Wait

– causes processor to extend memory cycle

– allows fast and slow memories to be used together without

loss of speed


Memory Interfacing

Address Blocks

How do we place blocks of memory within the address space

of our processor?

Two methods of addressing memory:

• Byte addressing

– each byte has its own address

– good for 8-bit microprocessors and graphics systems

– if memory is 16 or 32 bits wide?

• Word addressing

– only address lines which number individual words

– select multi-byte word

– extra byte address bits retained in processor to

manipulate individual byte

– or use write byte control signals


Memory Interfacing

Address Blocks

How do we place blocks of memory within the address space

of our processor?

Often want different blocks of memory:

• Particular addresses might be special:

– memory mapped I/O ports

– location executed first after a reset

– fast on-chip memory

– diagnostic or test locations

• Also want

– SRAM and/or DRAM in one contiguous block

– memory mapped graphics screen memory

– ROM for booting and low level system operation

– extra locations for peripheral controller registers


Memory Interfacing

Address Blocks

How do we place blocks of memory within the address space

of our processor?

• Each memory block might be built from individual

memory chips

– address and control lines wired in parallel

– data lines brought out separately to provide n bit word

• Fit all the blocks together in overall address map

– easier to place similar sized blocks next to each other

so that they can be combined to produce a 2^(k+1) word area

– jumbling blocks of various sizes complicates address

decoding

– if contiguous blocks are not needed, place them at

major power of 2 boundaries - eg put base of SRAM at 0,

ROM half way up, lowest memory mapped peripheral at 7/8ths


Memory Interfacing

Address Decoding

address decoding logic determines which memory device to

enable depending upon address

• if each memory area stores a contiguous block of 2^k words

– all memory devices in that area will have k address

lines

– connected (normally) to the k least-significant lines

– remaining m-k examined to see if they provide most-significant (remaining) part of address of each area

3 schemes possible

– Full decoding - unique decoding

• All m-k bits are compared with exact values to make up

full address of that block

• only one block can become active


Memory Interfacing

Address Decoding

3 schemes possible (cont’d)

– Partial decoding

• only decode some of m-k lines so that a number of

blocks of addresses will cause a particular chip select to

become active

• eg ignoring one line will mean the same memory device

will be accessible at 2 places in the memory map

• makes decoding simpler

– Non-unique decoding

• connect different one of m-k lines directly to active low

chip select of each memory block

• can activate memory block by referencing that line

• No extra logic needed

• BUT can access 2 blocks at once this way…...
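The three decoding schemes can be modelled as small predicates on an address. This is a sketch under assumed parameters (a 16-bit address bus and 8k-word blocks, so m = 16 and k = 13); the function names are hypothetical and the chip selects are modelled as booleans rather than active-low signals.

```python
def full_decode(addr, base, k, m):
    """Full decoding: all m-k high address bits must match the block base."""
    high_mask = ((1 << m) - 1) & ~((1 << k) - 1)
    return (addr & high_mask) == (base & high_mask)


def partial_decode(addr, base, compared_bits):
    """Partial decoding: only the listed high bit positions are compared."""
    mask = sum(1 << bit for bit in compared_bits)
    return (addr & mask) == (base & mask)


def non_unique_selects(addr, select_lines):
    """Non-unique decoding: each block is selected when its own line is low."""
    return [((addr >> line) & 1) == 0 for line in select_lines]


M, K = 16, 13      # assumed bus width and block size (8k words)
BASE = 0x2000      # assumed base address of one block

# Full decoding: the block responds at its own address range only.
print(full_decode(0x2ABC, BASE, K, M), full_decode(0xAABC, BASE, K, M))

# Partial decoding ignoring A15: the same block also appears at 0xA000.
print(partial_decode(0x2ABC, BASE, [13, 14]), partial_decode(0xAABC, BASE, [13, 14]))

# Non-unique decoding on A13, A14, A15: two blocks are enabled at once here.
print(non_unique_selects(0x2000, [13, 14, 15]))
```

The last line shows the hazard noted above: with address 0x2000 both A14 and A15 are low, so two blocks are selected together.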


Memory Interfacing

Address Decoding - Example

A processor has a 32-bit data bus. It also provides a separate

30-bit word addressed address bus, which is labelled A2 to

A31 since it refers to memory initially using byte addressing,

where it uses A0 and A1 as byte addressing bits. It is desired

to connect 2 banks of SRAM (each built up from 128K*8

devices) and one bank of DRAM, built from 1M*4 devices,

to this processor. The SRAM banks should start at the

bottom of the address map, and the DRAM bank should be

contiguous with the SRAM. Specify the address map and

design the decoding logic.


Memory Interfacing

Address Decoding - Example

Each bank of SRAMs will require 4 devices to make up the 32 bit data bus.

Each Bank of DRAMs will require 8 devices.

Address map (word addresses in hexadecimal; each row is 32 bits wide):

| Address range | Contents | Size |
|---|---|---|
| 00040000 - 0013FFFF | DRAM bank (8 devices) | 1M words (20 bits) |
| 00020000 - 0003FFFF | SRAM bank (4 devices) | 128k words (17 bits) |
| 00000000 - 0001FFFF | SRAM bank (4 devices) | 128k words (17 bits) |

Memory Interfacing

Address Decoding - Example

[Figure: the CPU drives 17 address lines in parallel to all devices of each SRAM bank (128k*8 devices, 8 data lines to each device) and 20 address lines in parallel to all devices of the DRAM bank (1M*4 devices, 4 data lines to each device); the decoding logic generates CS1, CS2 and CS3.]

CS1 connects to chip select on SRAM bank 0

CS2 connects to chip select on SRAM bank 1

CS3 connects to chip select on DRAM bank

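$$
\begin{aligned}
CS1 &= \overline{A19} \cdot \overline{A20} \cdot \overline{A21} \cdot \overline{A22} \\
CS2 &= A19 \cdot \overline{A20} \cdot \overline{A21} \cdot \overline{A22} \\
CS3 &= A20 + A21 + A22
\end{aligned}
$$

(omitting all address lines A23 and above to simplify)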
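The select logic for this example can be checked with a short script. It is a sketch that assumes active-high selects and the reading consistent with the address map: CS1 is active when A19 to A22 are all low, CS2 when A19 is high and A20 to A22 are low, and CS3 when any of A20 to A22 is high. Address lines A23 and above are ignored, as in the example, and the function names are hypothetical.

```python
def chip_selects(byte_addr):
    """Return (CS1, CS2, CS3) for a byte address, using lines A19 to A22."""
    def a(n):
        return (byte_addr >> n) & 1

    upper_low = not (a(20) or a(21) or a(22))   # A20, A21, A22 all low
    cs1 = upper_low and not a(19)               # lower SRAM bank
    cs2 = upper_low and bool(a(19))             # upper SRAM bank
    cs3 = not upper_low                         # DRAM bank
    return cs1, cs2, cs3


def selects_for_word(word_addr):
    """Word address bit 0 is carried on A2, so shift left by two."""
    return chip_selects(word_addr << 2)


for word in (0x00000, 0x1FFFF, 0x20000, 0x3FFFF, 0x40000, 0x13FFFF):
    print(f"{word:06X}", selects_for_word(word))
```

Each boundary address in the map enables exactly one bank, which is the property the decoding logic has to guarantee.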


Memory Interfacing

Connecting Multiplexed Address and Data Buses

There are many multiplexing schemes but let’s choose 3

processor types and 2 memory types and look at the

possible interconnections:

• Processor types all 8-bit data and 16 bit address:

– No multiplexing - (eg Zilog Z80)

– multiplexes least significant address bits with data bus

(Intel 8085)

– multiplexes the most significant and least significant

halves of address bus

• Memory types:

– SRAM (8k *8) - no address multiplexing

– DRAM (16k*4) - with multiplexed address inputs


Memory Interfacing

CPU vs Static Memory Configuration

[Figure: CPU with non-multiplexed address bus connected to an 8k*8 SRAM. CPU signals A0…15 and D0…7; SRAM signals A0…12 and D0…7; an address decode block generates CS.]

Memory Interfacing

CPU vs Static Memory Configuration

[Figure: CPU with LS addresses multiplexed with data bus, connected to an 8k*8 SRAM. CPU signals A8…15 (MS) and AD0…7 (LS); a latch holds the LS address; an address decode block generates CS; SRAM signals A0…12 and D0…7.]

Memory Interfacing

CPU vs Static Memory Configuration

[Figure: CPU with time-multiplexed address bus connected to an 8k*8 SRAM. CPU signals MA0…7 and D0…7; a latch; an address decode block generates CS; SRAM signals A0…12 and D0…7.]

Memory Interfacing

CPU vs Dynamic Memory Configuration

[Figure: CPU with non-multiplexed address bus connected to 2 x 16k*4 DRAM. CPU signals A0…15 and D0…7; an address decode block generates RAS and CAS; a multiplexer (MPX) drives MA0…6 to both devices; the devices carry D0…3 and D4…7.]

Memory Interfacing

CPU vs Dynamic Memory Configuration

[Figure: CPU with LS addresses multiplexed with data bus, connected to 2 x 16k*4 DRAM. CPU signals A8…15 and AD0…7; a latch; an address decode block generates RAS and CAS; a multiplexer (MPX) drives MA0…6 to both devices; the devices carry D0…3 and D4…7.]

Memory Interfacing

CPU vs Dynamic Memory Configuration

[Figure: CPU with time-multiplexed address bus connected to 2 x 16k*4 DRAM. CPU signals MA0…7 and D0…7; an address decode block generates RAS and CAS; MA0…6 goes to both devices; the devices carry D0…3 and D4…7.]

Displays

Video Display Characteristics

• Consider a video display capable of producing 640*240 pixel

monochrome, non-interlaced images at a frame rate of 50Hz:

[Figure: displayed image of width h and height v; add 20% for line flyback and 20% for frame flyback.]

dot rate = (640*1.2)*(240*1.2)*50 Hz = 11MHz = 90 ns/pixel

For 1024*800 non-interlaced display:

dot rate = (1024*1.2)*(800*1.2)*50 Hz = 59MHz = 17 ns/pixel

add colour with 64 levels for rgb - 18 bits per pixel

Bandwidth now about 1060Mbit/s...
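The dot-rate arithmetic can be reproduced directly. The sketch below evaluates the formula exactly as written, with 20% added in each direction for flyback; the function name `dot_rate_hz` is made up for the example. Evaluated this way the formula gives roughly 11 MHz for the 640*240 display and roughly 59 MHz for the 1024*800 display.

```python
def dot_rate_hz(h_pixels, v_lines, frame_rate_hz, flyback=0.2):
    """Pixel clock needed, allowing extra time for line and frame flyback."""
    return h_pixels * (1 + flyback) * v_lines * (1 + flyback) * frame_rate_hz


small = dot_rate_hz(640, 240, 50)     # monochrome 640*240 at 50 Hz
large = dot_rate_hz(1024, 800, 50)    # 1024*800 at 50 Hz

BITS_PER_PIXEL = 18                   # 64 levels each for r, g and b
colour_bit_rate = large * BITS_PER_PIXEL

print(f"640*240:  {small / 1e6:.1f} MHz, {1e9 / small:.0f} ns/pixel")
print(f"1024*800: {large / 1e6:.1f} MHz, {1e9 / large:.0f} ns/pixel")
print(f"with 18-bit colour: {colour_bit_rate / 1e6:.0f} Mbit/s")
```

Changing the frame rate or the flyback allowance shows how quickly the required bandwidth grows with resolution and colour depth.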


Displays

Video Display Characteristics

• Problems with high bit rates:

– Memory mapping of the screen display within the processor map

couples CPU and display tightly - design together

– In order that screen display may be refreshed at the rates required

by video, display must have higher priority than processor for

DMA of memory bus - uses much of bandwidth

– In order to update the image the CPU may require very fast

access to screen memory too

– Megabytes of memory needed for large screen displays are still

relatively expensive - compared with CPU etc.


Displays

Bit-Mapped Displays

• Even 640*240 pixel display cannot be easily maintained

using DMA access to CPU’s RAM - except with multiple

word access

• Increase memory bandwidth for video display with special

video DRAM

– allows whole row of DRAM (256 or 1024 bits) in one

DMA access

• Many video DRAMs may be mapped to provide a single

bit of a multi-bit pixel in parallel - colour displays.


Displays

Character Based Displays

• limited to displaying one of a small number of images in fixed positions

– typically 24 lines of 80 characters

– normally 8-bit ASCII

• Character value is used to determine the image from a look-up table

– table often in ROM (RAM version allows font changes)

• For a character of 9 dots wide by 14 high

– 14 rows are generated for each row of characters

– In order to display a complete frame, pixels are drawn at a suitable dot rate:

dot rate = (80*9*1.2)*(24*14*1.2)*50 Hz

= 17.42 MHz

= 57ns/pixel


Displays

Character Based Displays

• A row of 80 characters must be read for every displayed line

– giving a line rate of 20.16kHz (similar to EGA standard)

– overall memory access rate needed ~1.6Mbytes/second (625ns/byte)

– barely supportable using DMA on small computers

– even at 4bytes at a time (32 bit machines) still major use of data bus

• To avoid reading each line of 80 characters again on the other 13 rows, the characters can be stored in a circular shift register on first access and used instead of memory access.

– only need 80*24*50 = 96,000 accesses/sec - in bursts

– about 10.4µs per byte on average - easily supported

– the whole 80 bytes can be read during flyback before start of new character row at full memory speed in one DMA burst - 80 * about 200ns at a rate of 24*50 times a second - less than 2% of bus bandwidth.
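The "less than 2%" figure can be checked with a short calculation. The sketch below assumes the numbers quoted on the slide (80 bytes per burst, about 200ns per byte, 24*50 bursts per second); the function name is hypothetical.

```c
/* Fraction of each second for which the bus is occupied by
 * display DMA bursts (0.0 = idle, 1.0 = fully used). */
double dma_bus_fraction(int bytes_per_burst, double ns_per_byte,
                        double bursts_per_sec)
{
    double burst_seconds = bytes_per_burst * ns_per_byte * 1e-9;
    return burst_seconds * bursts_per_sec;
}
```

With the slide's values, `dma_bus_fraction(80, 200.0, 24.0 * 50.0)` comes to a little under 0.02, i.e. just below 2% of the bus bandwidth.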


Displays

Character Based Displays

• Assuming that rows of 80 characters in the CPU’s memory map are

stored at 128-byte boundaries (simplifies addressing) the CPU memory

addresses are:

Field: address of screen memory | row | column
Width: n-12 bits | 5 bits | 7 bits
Range: (to address decode) | 0…23 | 0…79

• Address of character positions on the screen:

Field: row | line number in row | column | dot number across char
Width: 5 bits | 4 bits | 7 bits | 4 bits
Range: 0…23 | 0…13 | 0…79 | 0…8
Used by: Memory | Look-up Table | Memory | address of current bit in shift register

(The diagram marks three carries linking these counter fields.)
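As a rough illustration of the CPU-side layout described above, rows stored at 128-byte boundaries mean the offset of a character is simply the 5-bit row placed above the 7-bit column. The names below are hypothetical and the base address of screen memory is left out.

```c
#define COL_BITS 7u   /* 128-byte row pitch */
#define ROWS     24u
#define COLS     80u

/* Offset of character (row, col) from the start of screen memory,
 * or -1 if the position is off-screen. Only 80 of the 128 bytes
 * in each row are used. */
int screen_offset(unsigned row, unsigned col)
{
    if (row >= ROWS || col >= COLS)
        return -1;
    return (int)((row << COL_BITS) | col);
}
```

The cost of this simple addressing is the 48 unused bytes at the end of every 128-byte row.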

Displays

Character Based Displays

• An appropriate block diagram of the display would be:

[Block diagram: 12-bit screen address (r,c); screen memory supplying ASCII bytes; FIFO, 80*8 bits; character generator ROM, (16*256)*9 bits, addressed by the 8-bit character code and the 4-bit line number in the character; 9 to 1 bit shift register driven by the dot clock; video data out.]

Displays

Character Based Displays

• The problem with DMA fetching individual characters from display

memory is its interference with processor.

• Alternative is to use Dual Port Memories

Dual Port SRAMs

• provide 2 (or more) separate data and address pathways to each memory cell

• 100% of memory bandwidth can be used by display without affecting CPU

• Can be expensive - ~£25 for 4kbytes - makes Mb displays impractical. For

character based display would be OK

[Figure: dual-port SRAM: a single memory array accessed through two independent ports, each with its own address decode (row, col), address lines Ao…An, data lines Do..Dn, I/O block, and Write, CE and OE controls.]

Bit-Mapped Graphics & Memory Interleaving

Bit-Mapped Displays

• Instead of using an intermediate character generator can store all pixel

information in screen memory at pixel rates above.

• Even 640*240 pixel display cannot be maintained using DMA access to

CPU’s RAM - except with multiple word access

• Increase memory bandwidth for video display with special video

DRAM

– allows whole row of DRAM (256 or 1024 bits) in one DMA access

• Many video DRAMs may be mapped to provide a single bit of a

multi-bit pixel in parallel - colour displays.

• Use of video shift register limits clocking frequency to 25MHz - 40ns/pixel


Graphics Card consists of:

GPU – Graphics Processing Unit

microprocessor optimized for 3D graphics rendering

clock rate 250-850MHz with pipelining – converts 3D

images of vertices and lines into 2D pixel image

Video BIOS – program to operate card and interface timings etc.

Video Memory – can use computer RAM, but more often has its own video RAM (128MB - 2GB) – often multiport VRAM, now DDR (double data rate – uses rising and falling edge of clock)

RAMDAC – Random Access Memory Digital to Analog Converter, driving the CRT


Bit Mapped Graphics & Memory Interleaving

Using Video DRAMs

• To generate analogue signals for a colour display

– 3 fast DAC devices are needed

– each fed from 6 or 8 bits of data

– one each for red, green and blue video inputs

• To save storing so much data per pixel (24 bits) a Colour Look Up

Table (CLUT) device can be used.

– uses a small RAM as a look-up table

– E.g. a 256 entry table accessed by 8-bit values stored for each pixel - the

table contains 18 or 24 bits used to drive DACs

– Hence “256 colours may be displayed from a palette of 262144”

[Figure: CLUT: pixel data drives the address input of a small RAM; Dout feeds three DACs with 6 bits each, producing the red, green and blue outputs; a separate Din path carries data for updating the RAM.]
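The look-up step of a CLUT can be sketched in C as below. This is a software model only, assuming the 256-entry, 18-bit (6 bits per colour) table from the example; the type and function names are hypothetical.

```c
#include <stdint.h>

#define CLUT_ENTRIES 256

/* One output colour: each channel is a 6-bit value (0..63). */
typedef struct { uint8_t r, g, b; } Rgb6;

/* Each entry uses 18 bits: red in bits 17..12, green 11..6, blue 5..0. */
static uint32_t clut[CLUT_ENTRIES];

/* Update one table entry (the "data for updating RAM" path). */
void clut_set(uint8_t index, uint8_t r, uint8_t g, uint8_t b)
{
    clut[index] = ((uint32_t)(r & 0x3F) << 12) |
                  ((uint32_t)(g & 0x3F) << 6)  |
                   (uint32_t)(b & 0x3F);
}

/* Translate an 8-bit pixel value into the three DAC inputs. */
Rgb6 clut_lookup(uint8_t pixel)
{
    uint32_t e = clut[pixel];
    Rgb6 out = { (uint8_t)((e >> 12) & 0x3F),
                 (uint8_t)((e >> 6) & 0x3F),
                 (uint8_t)(e & 0x3F) };
    return out;
}

/* Number of distinct colours the DACs can produce. */
uint32_t palette_size(unsigned bits_per_channel)
{
    return 1u << (3u * bits_per_channel);
}
```

`palette_size(6)` is 262144, which is where the "256 colours from a palette of 262144" figure comes from.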

Bit Mapped Graphics & Memory Interleaving

Using Video DRAMs

• Addressing Considerations

– if the number of bits in the shift registers is not the same as the

number of displayed pixels, it is easier to ignore the extra ones - wasting memory may make addressing simpler

– processor’s screen memory bigger than displayable memory, gives a

scrollable virtual window.

Field: screen block select | row address | column address
Width: remaining bits | log2v bits | log2h bits
Range: - | 0..(v-1) | 0..(h-1)

(not all combinations used)

– Even though most 32 bit processors can access individual bytes

(used as pixels) this is not as efficient as accessing memory in word

(32bits) units


Bit Mapped Graphics & Memory Interleaving

Addressing Considerations (cont’d)

– Sometimes it might be better NOT to arrange the displayed pixels in ascending memory address order. The diagram shows three layouts:

• Each word defines 4 horizontally neighbouring pixels. Each set fully specifies its colour - most simple and common representation

• Each word defines 2 pixels horizontally and vertically with all colour data. Useful for text or graphics applications where small rectangular blocks are modified - might access fewer words for changes

• Each word defines one bit of 32 horizontally neighbouring pixels. 8 words (in 8 separate colour planes) need to be changed to completely change any pixel. Useful for adding or moving blocks of solid colour - CAD

Bit Mapped Graphics & Memory Interleaving

Addressing Considerations (cont’d)

• The video memories must now be arranged so that the bits

within the CPU’s 32-bit words can all be read or written to

their relevant locations in video memory in parallel.

– this is done by making sure that the pixels stored in each

neighbouring 32-bit word are stored in different memory

chips - interleaving


Bit Mapped Graphics & Memory Interleaving

Example

Design a 1024*512 pixel colour display capable of passing 8

bits per pixel to a CLUT. Use a video frame rate of 60Hz

and use video DRAMs with a shift register maximum

clocking frequency of 25MHz. Produce a solution that

supports a processor with an 8-bit data bus.


Bit Mapped Graphics & Memory Interleaving

Example

• 1024 pixels across the screen can be satisfied using 1 1024-bit shift

register (or 4 multiplexed 256-bit ones)

• The frame rate is 60Hz

• The number of lines displayed is 512

• The line rate becomes 60*512 = 30.72kHz - or 32.55µs/line

• 1024 pixels gives a dot rate of 30.72kHz*1024 = 31.46MHz

• Dot time is thus 32ns - too fast for one shift register! So we will have

to interleave 2 or more.

• Multiplexing the minimum 3 shift registers will make addressing

complicated, easier to use 4 VRAMs - each with 256 rows of 256

columns, addressed row/column intersection containing 4 bits

interfaced by 4 pins to the processor and to 4 separate shift registers


Bit Mapped Graphics & Memory Interleaving

Example

• Hence for 8 bit CPU:

[Figure: address fields for the 8-bit CPU case.

CPU memory address (BYTE address): screen block select (n-20 bits), row 0..511 (9 bits, 512 rows), column 0..1023 (10 bits, 1024 columns).

Video address (pixel counters): the row bits split into 1 bit to the top/bottom multiplexers and 8 bits to the RAS address i/p; the column bits split into 8 bits (implicit address of bits in cascaded shift registers), 1 bit selecting which VRAM (A+B, C+D, E+F, G+H) and 1 bit to the pixel multiplexer (odd/even pixels).]

Example

[Figure: eight 256*256*4 VRAMs, A to H. VRAMs A to D hold the even pixels and E to H the odd pixels; within each group the VRAMs are split between the top 256 lines and the bottom 256 lines on screen, with each VRAM supplying 4 of the 8 bits of a pixel. A select top/bottom multiplexer in each group passes 8 bits, a select odd/even pixel multiplexer interleaves the two groups into 8 bits of all pixels, and this value addresses the CLUT, which drives the R, G and B outputs. LHS and RHS of screen are marked on the diagram.]

Mass Memory Concepts

Disk technology

• unchanged for 50 years
• similar for CD, DVD
• 1-12 platters
• 3600-10000rpm
• double sided
• circular tracks
• subdivided into sectors
• recording density >3Gb/cm2
• innermost tracks not used – cannot be used efficiently
• inner tracks factor of 2 shorter than outer tracks
• hence more sectors in outer tracks
• cylinder – tracks with same diameter on all recording surfaces


Mass Memory Concepts

Access Time

• Seek time

– align head with

cylinder containing

track with sector inside

• Rotational Latency

– time for disk to rotate to

beginning of sector

• Data Transfer time

– time for sector to pass under head

Disk Capacity

= surfaces x tracks/surface x sectors/track x bytes/sector
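The capacity formula translates directly into code. The sketch below is illustrative only (the function name is an assumption); the sample figures in the note are the Seagate Barracuda values from the course's example disc table, using the average number of sectors per track.

```c
#include <stdint.h>

/* Disk capacity in bytes:
 * surfaces x tracks/surface x sectors/track x bytes/sector. */
uint64_t disk_capacity_bytes(uint64_t surfaces, uint64_t tracks_per_surface,
                             uint64_t sectors_per_track,
                             uint64_t bytes_per_sector)
{
    return surfaces * tracks_per_surface * sectors_per_track * bytes_per_sector;
}
```

For 24 surfaces, 24,247 cylinders, an average of 604 sectors per track and 512-byte sectors, this gives about 180 x 10^9 bytes, matching the quoted 180GB formatted capacity.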


Key Attributes of Example Discs

Attribute | Disc 1 | Disc 2 | Disc 3

Identity of disc
Manufacturer | Seagate | Hitachi | IBM
Series | Barracuda | DK23DA | Microdrive
Model Number | ST1181677LW | ATA-5 40 | DSCM-11000
Typical Application | Desktop | Laptop | Pocket device

Storage attributes
Formatted Capacity GB | 180 | 40 | 1
Recording surfaces | 24 | 4 | 2
Cylinders | 24,247 | 33,067 | 7167
Sector size B | 512 | 512 | 512
Avg sectors/track | 604 | 591 | 140
Max recording density Gb/cm2 | 2.4 | 5.1 | 2.4

Access attributes
Min seek time ms | 1 | 3 | 1
Max seek time ms | 17 | 25 | 19
External data rate MB/s | 160 | 100 | 13

Physical attributes
Diameter, inches | 3.5 | 2.5 | 1
Platters | 12 | 2 | 1
Rotation speed rpm | 7200 | 4200 | 3600
Weight kg | 1.04 | 0.10 | 0.04
Operating power W | 14.1 | 2.3 | 0.8
Idle power W | 10.3 | 0.7 | 0.5

Key Attributes of Example Discs

Samsung launch 1TB hard drive:

3 x 3.5” platters

334GB per platter

7200RPM

32MB cache

3Gb/s SATA interface

(SATA – serial Advanced Technology Attachment)

Highest density so far....


Mass Memory Concepts

Disk Organization

Data bits are small regions of magnetic coating magnetized in different

directions to give 0 or 1

Special encoding techniques maximize the storage density

eg rather than let data bit values dictate direction of magnetization can

magnetize based on change of bit value – nonreturn-to-zero (NRZ) –

allows doubling of recording capacity


Mass Memory Concepts

Disk Organization

• Sector preceded by sector number and followed by cyclic redundancy check - allows some errors and anomalies to be corrected

• Various gaps within and separating sectors allow processing to finish

• Unit of transfer is a sector – typically 512 to 2K bytes

• Sector address consists of 3 components:

– Disk address = Cylinder# (10-16 bits), Track# (1-5 bits), Sector# (6-10 bits) - 17-31 bits in total

– Cylinder# - actuator arm

– Track# - selects read/write head or surface

– Sector# - compared with sector number recorded as it passes

• Sectors are independent and can be arranged in any logical order

• Each sector needs some time to be processed – some sectors may pass before disk

is ready to read again, so logical sectors not stored sequentially as physical sectors

track i: 0 16 32 48 1 17 33 49 2 18 34 50 3 19 35 51 4 20 36 52 …..

track i+1: 30 46 62 15 31 47 0 16 32 48 1 17 33 49 2 18 34 50 3 19….

track i+2: 60 13 29 45 61 14 30 46 62 15 31 47 0 16 32 48 1 17 33 49…..


Mass Memory Concepts

Disk Performance

Disk Access Latency = Seek Time + Rotational Latency

• Seek Time – how far head travels from current cylinder

– mechanical motion – accelerates and brakes

• Rotational Latency – depends upon position

– Average rotational latency = time for half a rotation

– at 10,000 rpm = 3ms
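The latency figures above follow from the rotation speed. A minimal sketch, with hypothetical function names, and taking the seek time as a given input rather than modelling the head's acceleration and braking:

```c
/* Average rotational latency in ms: the time for half a rotation. */
double avg_rotational_latency_ms(double rpm)
{
    double ms_per_rotation = 60000.0 / rpm;
    return 0.5 * ms_per_rotation;
}

/* Disk access latency = seek time + average rotational latency. */
double access_latency_ms(double seek_ms, double rpm)
{
    return seek_ms + avg_rotational_latency_ms(rpm);
}
```

At 10,000 rpm a rotation takes 6ms, so the average rotational latency is 3ms as stated.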


Mass Memory Concepts

RAID - Redundant Array of Inexpensive (Independent) Disks.

• High capacity faster response without specialty hardware


Mass Memory Concepts

RAID0 – multiple disks appear as a single disk, with each item striped so that every disk holds and accesses part of it


Mass Memory Concepts

RAID1 – robustness added by mirroring contents on duplicate

disks – 100% redundancy


Mass Memory Concepts

RAID2 – robustness using error correcting codes – reducing

redundancy – Hamming codes – ~50% redundancy


Mass Memory Concepts

RAID3 – robustness using separate parity and spare disks –

reducing redundancy to 25%


Mass Memory Concepts

RAID4 – Parity/Checksum applied to sectors instead of bytes –

requires large use of parity disk


Mass Memory Concepts

RAID5 – Parity/Checksum distributed across disks – but 2 disk

failures can cause data loss
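The parity idea behind RAID3 to RAID5 can be illustrated with byte-wise XOR, a common choice for single-parity schemes. This is a simplified sketch that ignores striping layout and where the parity is placed; `parity_block` is a hypothetical helper name.

```c
#include <stddef.h>
#include <stdint.h>

/* XOR together nblocks blocks of len bytes each into out.
 * Called on all data blocks, it produces the parity block.
 * Called on the surviving data blocks plus the parity block,
 * it rebuilds the one missing block. */
void parity_block(const uint8_t *const *blocks, size_t nblocks,
                  size_t len, uint8_t *out)
{
    for (size_t i = 0; i < len; i++) {
        uint8_t p = 0;
        for (size_t d = 0; d < nblocks; d++)
            p ^= blocks[d][i];
        out[i] = p;
    }
}
```

Because one parity block yields only one equation per byte, a second simultaneous disk failure cannot be recovered, which is the data-loss case noted above.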


Mass Memory Concepts

RAID6 – Parity/Checksum distributed across disks and a second

checksum scheme (P+Q) distributed across different disks


Virtual Memory

In order to take advantage of the various performance and prices of

different types of memory devices it is normal for a memory

hierarchy to be used:

CPU register - fastest data storage medium

cache - for increased speed of access to DRAM

main RAM - normally DRAM for cost reasons; SRAM possible

disc - magnetic, random access

magnetic tape - serial access for archiving; cheap

• How and where do we find memory that is not RAM?

• How does a job maintain a consistent user image when there are

many others swapping resources between memory devices?

• How can all users pretend they have access to similar memory

addresses?


Virtual Memory

Paging

In a paged virtual memory system the virtual address is treated

as groups of bits which correspond to the Page number and

offset or displacement within the page

– often denoted as (P,D) pair.

• Page number can be looked up in a page table and

concatenated with the offset to give the real address.

• There is normally a separate page table for each virtual

machine which point to pages in the same memory.

• There are two methods used for page table lookup

– direct mapping

– associative mapping
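A directly mapped translation of a (P,D) pair might look like the sketch below. The page size, table size and all names are assumptions chosen for illustration; a real page table entry would also hold the backing store address for non-resident pages.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_BITS 10u   /* assumed: 1K-word pages */
#define NUM_PAGES 16u   /* assumed: a very small virtual address space */

typedef struct {
    bool     resident;  /* is the page currently in real memory? */
    uint32_t frame;     /* real page number when resident */
} PageEntry;

/* Split the virtual address into page number P and displacement D,
 * look P up in the table and concatenate the real page number with D.
 * Returns false on a page fault (page not resident or out of range). */
bool translate(const PageEntry table[NUM_PAGES], uint32_t vaddr,
               uint32_t *raddr)
{
    uint32_t p = vaddr >> PAGE_BITS;
    uint32_t d = vaddr & ((1u << PAGE_BITS) - 1u);
    if (p >= NUM_PAGES || !table[p].resident)
        return false;
    *raddr = (table[p].frame << PAGE_BITS) | d;
    return true;
}
```

Each virtual machine would hold its own `PageEntry` table, all pointing into the same real memory.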


Virtual Memory

Direct Mapping

• uses a page table with the same

number of entries as there are

pages of virtual memory.

• thus possible to look up the

entry corresponding to the

virtual page number to find

– the real address of the page

(if the page is currently

resident in real memory)

– or the address of that page

on the backing store if not

• This may not be economic for

large mainframes with many

users

• A large page table is expensive

to keep in RAM and may be

paged...


Virtual Memory

Content Addressable Memories

• when an ordinary

memory is given an

address it returns the

data word stored at that

location.

• A content addressable

memory is supplied data

rather than an address.

• It looks through all its

storage cells to find a

location which matches

the pattern and returns

which cell contained the

data - may be more than

one


Virtual Memory

Content Addressable Memories

• It is possible to perform a translation

operation using a content addressable

memory

• An output value is stored together

with each cell used for matching

• When a match is made the signal

from the match is used to enable the

register containing the output value

• Care needs to be taken so that only

one output becomes active at any

time


Virtual Memory

Associative Mapping

• Associative mapping uses a

content addressable memory to

find if the page number exists in

the page table

• If it does the rest of the entry

contains the real memory address

of the start of the page

• If not then page is currently in

backing store and needs to be

found from a directly mapped

page table on disc

• The associative memory only

needs to contain the same number

of entries as the number of pages

of real memory - much smaller

than the directly mapped table


Virtual Memory

Associative Mapping

• A combination of direct and associative mapping is often used.


Virtual Memory

Paging

• Paging is viable because programs tend to consist of loops and

functions which are called repeatedly from the same area of memory.

Data tends to be stored in sequential areas of memory and are likely to

be used frequently once brought into main memory.

• Some memory access will be unexpected, unrepeated and so wasteful

of page resources.

• It is easy to produce a program which mis-uses virtual memory, provoking frantic paging as it accesses memory over a wide area.

• When RAM is full, paging can not just read virtual pages from

backing store to RAM, it must first discard old ones to the backing

store.


Virtual Memory

Paging

• There are a number of algorithms that can be used to decide

which ones to move:

– Random replacement - easy to implement, but takes no

account of usage

– FIFO replacement - simple cyclic queue, similar to above

– First-In-Not-Used-First-Out - FIFO queue enhanced with

extra bits which are set when page is accessed and reset

when entry is tested cyclically.

– Least Recently Used - uses set of counters so that access

can be logged

– Working Set - all pages used in last x accesses are flagged

as working set. All other pages are discarded to leave

memory partially empty, ready for further paging
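The First-In-Not-Used-First-Out scheme can be sketched as a cyclic pointer over per-page "used" bits. The frame count and names below are assumptions for illustration only.

```c
#include <stdbool.h>
#include <stddef.h>

#define NUM_FRAMES 4u   /* assumed number of real page frames */

typedef struct {
    bool   used[NUM_FRAMES]; /* set when the page is accessed */
    size_t hand;             /* next frame to test, moves cyclically */
} Finufo;

/* Record an access to the page held in the given frame. */
void finufo_touch(Finufo *q, size_t frame)
{
    q->used[frame] = true;
}

/* Choose a frame to replace: step round the queue, resetting the
 * used bit of each frame tested, until one is found that has not
 * been accessed since it was last tested. */
size_t finufo_victim(Finufo *q)
{
    for (;;) {
        size_t f = q->hand;
        q->hand = (q->hand + 1u) % NUM_FRAMES;
        if (!q->used[f])
            return f;
        q->used[f] = false;
    }
}
```

The loop always terminates: after at most one full pass every bit has been reset, so the next frame tested is returned.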


Virtual Memory

Paging - general points

• Every process requires its own page table - so that it can make

independent translation of location of actual page

• Memory fragmentation under paging can be serious.

– as pages are set size, usage will not be for complete page

and last one of a set will not normally be full

– especially if page size is large to optimise disc usage

(reduce the number of head movements)

• Extra bits can be stored in page table with the real address - dirty

bit - to determine if page has been written to since it was copied

and hence if it needs to be copied back


Virtual Memory

Segmentation

• A virtual address in a segmented system is made from 2 parts

– segment number

– displacement within the segment, giving (S,D) pairs

• unlike pages, segments are not fixed length - they may be variable

• Segments store complete entities - pages allow objects to be split

• Each task has its own

segment table

• segment table contains base

address and length of

segment so that other

segments aren’t corrupted
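The role of the base and length fields can be shown with a short translation routine. This is a sketch with hypothetical names; it checks the displacement against the segment length before forming the real address.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    uint32_t base;    /* real address of the start of the segment */
    uint32_t length;  /* segment size, in addressable units */
} Segment;

/* Translate an (S,D) pair using a task's segment table.
 * Returns false if the segment number or displacement is out of
 * range, so a task cannot reach into another segment. */
bool seg_translate(const Segment *table, uint32_t nsegs,
                   uint32_t s, uint32_t d, uint32_t *raddr)
{
    if (s >= nsegs || d >= table[s].length)
        return false;
    *raddr = table[s].base + d;
    return true;
}
```

Unlike paging, the real address is formed by addition rather than concatenation, because segments can start at any address.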


Virtual Memory

Segmentation

• Segmentation doesn’t give rise to fragmentation in the same way - segments are of variable size so there is no wasted space within a segment.

• BUT as they are variable size it is not very easy to plan how to fit them into memory

• Keep a sorted table of vacant blocks of memory and combine

neighbouring blocks when possible

• Can keep information on the “type” a segment is - read-only executable

etc. as they correspond to complete entities.


Virtual Memory

Segmentation & Paging

• A combination of segmentation and Paging uses a triplet of virtual address

fields - the segment number, the page number within the segment and the

displacement within the page (S,P,D)

• More efficient than pure paging - use of space more flexible

• More efficient than pure segmentation - allows part of segment to be swapped


Virtual Memory

Segmentation & Paging

• It is easy to mis-use virtual memory through a simple difference in the way that some routines are coded: the 2 examples below perform exactly the same task, but the first one generates 1,000 page faults on a machine with 1K word pages, while the second generates 1,000,000. Most languages (except Fortran) store arrays in memory with the rows laid out sequentially, the right hand subscript varying most rapidly…..

/* declared static so that the 1000*1000 array is not placed on the stack */
static int array[1000][1000];

/* row order: consecutive accesses stay within the same page */
void order_by_rows(void)
{
    int ii, jj;
    for (ii = 0; ii < 1000; ii++) {
        for (jj = 0; jj < 1000; jj++) {
            array[ii][jj] = 0;
        }
    }
}

/* column order: each access falls on a different page */
void order_by_columns(void)
{
    int ii, jj;
    for (ii = 0; ii < 1000; ii++) {
        for (jj = 0; jj < 1000; jj++) {
            array[jj][ii] = 0;
        }
    }
}


Memory Caches

• Most general purpose microprocessor systems use DRAM for their bulk

RAM requirements because it is cheap and more dense than SRAM

• The penalty for this is that it is slower - SRAM has a 3-4 times

shorter cycle time

• To help some SRAM can be added:

– On-chip directly to the CPU for use as desired - use depends on

the compiler, not always easy to use efficiently but fast access

– Caching - between DRAM and CPU. Built using small fast

SRAM, copies of certain parts of the main memory are held

here. The method used to decide where to allocate cache

determines the performance.

– Combination of the two - on chip cache.


Memory Caches

Directly mapped cache - simplest form of memory cache.

• In which the real memory address is treated in three parts:

Address fields: block select | tag (t bits) | cache index (c bits)

• For a cache of 2^c words, the cache index section of the real memory address indicates which cache entry is able to store data from that address

• When cached the tag (msb of address) is stored in cache with data to indicate which page it came from

• Cache will store 2^c words from 2^t pages.

• In operation tag is compared in every memory cycle

– if tag matches a cache hit is achieved and cache data is passed

– otherwise a cache miss occurs and the DRAM supplies word and data with tag are stored in the cache

[Figure: directly mapped cache: the index (c bits) addresses the cache memory, which holds tags and data; the stored tag is compared with the address tag (t bits) to decide whether to use the cache or main memory.]
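The hit and miss behaviour of a directly mapped cache can be modelled in a few lines of C. The sketch below assumes a very small cache (c = 4) and word-addressed memory, and ignores the block select field; all names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define INDEX_BITS  4u                   /* c: assumed for illustration */
#define CACHE_WORDS (1u << INDEX_BITS)   /* 2^c cache entries */

typedef struct {
    bool     valid;
    uint32_t tag;    /* upper address bits of the cached word */
    uint32_t data;
} CacheLine;

typedef struct {
    CacheLine line[CACHE_WORDS];
    uint32_t  hits, misses;
} Cache;

/* Read one word through the cache. The index selects the only entry
 * that may hold this address; the stored tag says whether it does. */
uint32_t cache_read(Cache *c, const uint32_t *memory, uint32_t addr)
{
    uint32_t index = addr & (CACHE_WORDS - 1u);
    uint32_t tag   = addr >> INDEX_BITS;
    CacheLine *l = &c->line[index];

    if (l->valid && l->tag == tag) {     /* cache hit */
        c->hits++;
        return l->data;
    }
    c->misses++;                         /* cache miss: fetch from memory */
    l->valid = true;
    l->tag   = tag;
    l->data  = memory[addr];
    return l->data;
}
```

Two addresses with the same index but different tags (for example 3 and 19 here) evict each other on every alternate access, which is the weakness that set associative caches address.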

Memory Caches

Set Associative Caches.

• A 2-way cache contains 2 cache blocks, each capable of storing one word

and the appropriate tag.

• For any memory access the two stored tags are checked

• Require Associative memory with 2 entries for each of the 2^c cache lines

• Similarly a 4-way cache stores 4 cache entries for each cache index

[Figure: 2-way set associative cache: the index (c bits) addresses two cache blocks, each holding tags and data; both stored tags are compared with the address tag (t bits) to decide whether to use the appropriate cache block or main memory.]

Memory Caches

Fully Associative Caches

• A 2-way cache has two places which it must read and compare to

look for a tag

• This is extended to the size of the cache memory

– so that any main memory word can be cached at any location in

cache

• cache has no index (c=0) and contains longer tags and data

– notice as c (index length) decreases, t (tag length) must

increase to match

• all tags are compared on each memory access

• to be fast all tags must be compared in parallel

Address fields: block select | tag (t bits) | no cache index (c=0)

INMOS T9000 had such a cache on chip


Memory Caches

Degree of Set Associativity

• for any chosen size of cache, there is a choice between more

associativity or a larger index field width

• optimum can depend on workload and instruction decoding - accessible by simulation

In practice:

An 8kbyte (2k entries) cache, addressed directly, will produce a hit

rate of about 73%, a 32kbyte cache achieves 86% and a 128kbyte 2-way cache 89%

(all these figures depend on characteristics of the instruction set and

code executed, data used, etc. - these are for the Intel 80386)

• considering the greater complexity of the 2-way cache there doesn’t

seem to be a great advantage in applying it


Memory Caches

Cache Line Size

• Possible to have cache data entries wider than a single word – i.e. a line size > 1

• Then a real memory access causes 2, 4 etc. words to be read

– reading performed over n-word data bus

– or from page mode DRAM, capable of transferring multiple

words from same row in DRAM, by supplying extra column

addresses

– extra words are stored in the cache in an extended data area

– as most code (and data access) occurs sequentially, it is likely

that next word will come in useful…

– real memory address specifies which word in the line it wants

Address fields: block select | tag (t bits) | cache index (c bits) | line address (l bits)

Memory Caches

Writing Cached Memory

So far only really concerned with reading cache. But problem also exists to

keep cache and main memory consistent:

Unbuffered Write Through

• write data to relevant cache entry, update tag, also write data to location in

main memory - speed determined by main memory

Buffered Write Through

• Data (and address) is written to a FIFO buffer between CPU and main

memory, CPU continues with next access, FIFO buffer writes to DRAM

• CPU can continue to write at cache speeds, until FIFO is full, then slows

down to DRAM speed as FIFO empties

• If CPU wants to read from DRAM (instead of cache) need to empty FIFO

to ensure we have the correct data - can put long delay in.

• This delay can be shortened if FIFO has only one entry - simple latch

buffer


Memory Caches

[Figure: 4Mword Memory using 8kword Direct-Mapped cache with Write-Through writes. The microprocessor address bus A0-31 carries a 2-bit byte address and a 22-bit word address A0-21, split into a 9 bit tag and a 13 bit index. The index addresses the cache memory and the tag storage and comparison block, which produces Match; control logic takes the CPU timing signals and generates the WR, control and DRAM select signals; the 32-bit data bus D0-31 links CPU, cache memory and main DRAM memory. FIFOs (22 bits of address, 32 bits of data) in front of the DRAM are optional, for buffered write-through.]

Memory Caches

Writing Cached Memory (cont’d)

Deferred Write (Copy Back)

• data is written out to cache only, allowing the cached entry to be

different from main memory. If the cache system wants to overwrite a cache index with a different tag it looks to see if the current

entry has been changed since it was copied in. If so it writes the

new value to main memory before reading the new data to the

location in cache.

• More logic is required for this operation, but the performance gain

can be considerable as it allows the CPU to work at cache speed if

it stays within the same block of memory. Other techniques will

slow down to DRAM speed eventually.

• Adding a buffer to this allows CPU to write to cache before data is

actually copied back to DRAM
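The copy-back write path can be sketched as below: a write only touches the cache, and main memory is updated when a modified entry is displaced. The cache size, word addressing and names are assumptions for illustration, and the read path is omitted.

```c
#include <stdbool.h>
#include <stdint.h>

#define CB_INDEX_BITS 4u                    /* assumed small cache */
#define CB_WORDS      (1u << CB_INDEX_BITS)

typedef struct {
    bool     valid;
    bool     dirty;   /* written since it was brought into the cache? */
    uint32_t tag;
    uint32_t data;
} CbLine;

/* Write one word with deferred write (copy back). */
void cb_write(CbLine cache[CB_WORDS], uint32_t *memory,
              uint32_t addr, uint32_t value)
{
    uint32_t index = addr & (CB_WORDS - 1u);
    uint32_t tag   = addr >> CB_INDEX_BITS;
    CbLine *l = &cache[index];

    /* Displacing a modified entry with a different tag:
     * copy its value back to main memory first. */
    if (l->valid && l->dirty && l->tag != tag)
        memory[(l->tag << CB_INDEX_BITS) | index] = l->data;

    l->valid = true;
    l->dirty = true;
    l->tag   = tag;
    l->data  = value;
}
```

Repeated writes to the same address never reach main memory until the entry is displaced, which is why the CPU can keep running at cache speed while it stays within the same block.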


Memory Caches

[Figure: 4Mword Memory using 8kword Direct-Mapped cache with Copy-Back writes. The microprocessor address bus A0-31 carries a 2-bit byte address and a 22-bit word address A0-21, split into a 9 bit tag and a 13 bit index. The index addresses the cache memory and the tag storage and comparison block, which produces Match and holds a dirty bit with each tag. Latches on the address path and the 32-bit data path to main DRAM memory hold an entry while it is copied back. Control logic takes the CPU timing signals and generates the WR, control and DRAM select signals.]

Memory Caches

Cache Replacement Policies for non direct-mapped caches

• when CPU accesses a location which is not already in cache need to

decide which existing entry to send back to main memory

• needs to be a quick decision

• Possible schemes are:

– Random replacement - a very simple scheme where a frequently

changing binary counter is used to supply a cache set number for

rejection.

– First-In-First-Out - a counter is incremented every time a new

entry is brought into the cache, which is used to point to the next slot

to be filled

– Least Recently Used - good strategy as keeps often used values in

cache, but difficult to implement with a few gates in short times


Memory Caches

Cache Consistency

A problem occurs when DMA is used by other devices or processors.

• Simple solution is to attach cache to memory and make all devices

operate through it.

• Not best idea as DMA transfer will cause all cache entries to be

overwritten, even though it is unlikely to be needed again soon

• If the cache is placed on the CPU side of the DMA traffic then

cache might not mirror DRAM contents

Bus Watching - monitor access to the DRAM and invalidate the relevant cache tag entry if that DRAM location has been updated; the cache can then be kept on the CPU side


Instruction Sets

Introduction

Instruction streams control all activity in the processor. All

characteristics of the machine depend on design of instruction set

– ease of programming

– code space efficiency

– performance

Look at a few different instruction sets:

– Zilog Z80

– DEC Vax-11

– Intel family

– INMOS Transputer

– Fairchild Clipper

– Berkeley RISC-I


Instruction Sets

General Requirements of an Instruction Set

Number of conflicting requirements of an instruction set:

• Space Efficiency - control information should be compact

– the major part of all data moved between memory and CPU

– obtained by careful design of instruction set

• variable length coding can be used so that frequently used

instructions are encoded into fewer bits

• Code Efficiency - can only translate a task efficiently if it is

easy to pick needed instructions from set.

– various attempts at optimising instruction sets resulted in :

• CISC - rich set of long instructions - results in small number

of translated instructions

• RISC - very short instructions, combined at compile time to

produce same result


Instruction Sets

General Requirements of an Instruction Set (cont’d)

• Ease of Compilation - in some environments compilation is a more

frequent activity than on machines where demanding executables

predominate. Both want execution efficiency however.

– more time consuming to produce efficient code for CISC - more

difficult to map program to wide range of complex instructions

– RISC simplifies compilation

– Ease of compilation doesn’t guarantee better code…..

– Orthogonality of instruction set also affects code generation.

• regular structure

• no special cases

• thus all actions (add, multiply etc.) able to work with each

addressing mode (immediate, absolute, indirect, register).

• If not, compiler may have to treat different items differently - constants, arrays and variables
