Welcome Note! How To Survive SCU 2015 Europe!

Windows Server 2016

Markus Erlacher

CEO

itnetX AG

www.itnetx.ch

Bringing the cloud to you

What if you could have the control of the datacenter and the power of the cloud?

Reduced developer chaos and shadow IT

Simplified, abstracted experience

Added consistency to modern development

One portal, one user experience

Write once, deploy anywhere

Nano Server

Voice of the Customer

Reboots impact my business

Why do I have to reboot because of a patch to a component I never use?

When a reboot is required, the systems need to be back in service ASAP

Server images are too big

Large images take a long time to install and configure

Transferring images consumes too much network bandwidth

Storing images requires too much disk space

Infrastructure requires too many resources

If the OS consumes fewer resources, I can increase my VM density

Higher VM density lowers my costs and increases my efficiency & margins

I want just the components I need and nothing more

Microsoft Server Journey

[Figure: evolution of Windows Server installation options]

Windows NT to Windows Server 2003: Full Server (Server Roles/Features, GUI Shell)

Windows Server 2008 and Windows Server 2008 R2: Full Server, Server Core

Windows Server 2012 and Windows Server 2012 R2: Minimal Server Interface, Server Core

Why we need Nano Server

Azure

Patches and reboots interrupt service delivery

(VERY large # of servers) * (large OS resource consumption)

Provisioning large host images competes for network resources

Why we need Nano Server

Cloud Platform System (CPS)

Cloud-in-box running on 1-4 racks using System Center & Windows Server

Setup time needs to be shortened

Patches and reboots result in service disruption

Fully loaded CPS would live migrate > 16TB for every host OS patch

Network capacity could have otherwise gone to business uses

Reboots: Compute host ~2 minutes / Storage host ~5 minutes

We need a server configuration optimized for the cloud
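To put the 16 TB live-migration figure in perspective, the arithmetic below estimates the ideal transfer time at a given line rate. The 10 Gbps and 40 Gbps link speeds and the decimal-terabyte convention are assumptions chosen for illustration, not CPS specifications, and a real live migration adds protocol overhead on top.

```python
def transfer_hours(terabytes: float, link_gbps: float) -> float:
    """Ideal time in hours to move `terabytes` (decimal TB) over a `link_gbps` link."""
    bits = terabytes * 1e12 * 8          # decimal terabytes -> bits
    seconds = bits / (link_gbps * 1e9)   # ideal line rate, no overhead
    return seconds / 3600


# Hypothetical link speeds; the 16 TB figure is the one quoted on the slide.
for gbps in (10, 40):
    print(f"16 TB at {gbps} Gbps: {transfer_hours(16, gbps):.2f} h")
```

Even under these optimistic assumptions a single patch cycle ties up the network for hours, which is the capacity the slide says could otherwise have gone to business uses.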

Nano Server - Next Step in the Cloud Journey

A new headless, 64-bit only, deployment option for Windows Server

Deep refactoring focused on

CloudOS infrastructure

Born-in-the-cloud applications

Follow the Server Core pattern

[Figure: installation options shown as layers: Basic Client Experience, Server with Local Admin Tools, Server Core, Nano Server]

Nano Server - Roles & Features

Zero-footprint model

Server Roles and Optional Features live outside of Nano Server

Standalone packages that install like applications

Key Roles & Features

Hyper-V, Storage (SoFS), and Clustering

Core CLR, ASP.NET 5 & PaaS

Full Windows Server driver support

Antimalware Built-in

System Center and Apps Insight agents to follow

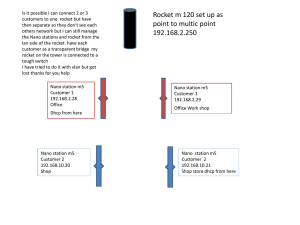

Nano Server in Windows Server 2016

An installation option, like Server Core

Not listed in Setup because the image must be customized with drivers

Separate folder on the Windows Server media

Available since the Windows Server Technical Preview 2 released at Ignite

Installing Drivers

For the leanest image, install just the drivers your hardware requires

Dism /Add-Driver /driver:<path>

Nano Server includes a package of all drivers in Server Core

Dism /Add-Package /PackagePath:.\packages\Microsoft-NanoServerOEM-Drivers-Package.cab

To run Nano Server as a VM, install

Dism /Add-Package /PackagePath:.\packages\Microsoft-NanoServer-

Deploying Nano Server

Generate a VHD from NanoServer.wim

Download Convert-WindowsImage.ps1 from the Script Center:

https://gallery.technet.microsoft.com/scriptcenter/ConvertWindowsImageps1-0fe23a8f

Run Convert-WindowsImage.ps1 -Sourcepath <path to wim> -VHD <path to new VHD file> -VHDformat VHD -Edition 1

Dism /Apply-Image

Installing Roles and Features

Nano Server folder has a Packages sub-folder

Dism /Add-Package /PackagePath:.\packages\<package>

Dism /Add-Package /PackagePath:.\packages\en-us\<package>

Installing Agents and Tools on Nano Server

No MSI support in Nano Server

Current builds of Nano Server require xcopy or a custom PowerShell script

Nano Server Installer in the works, which will provide

Install

Uninstall

Inventory

Online and offline installation support

Demo: Nano Server Deployment

Hyper-V

We are winning virtualization share

x86 Server Virtualization Share For The Past 5+ Years

| | Q1 CY2008 (Windows Server 2008 Released) | Q3 CY2009 (Windows Server 2008 R2 Released) | Q3 CY2012 (Windows Server 2012 Released) | Q3 CY2014 (Current) | Change Since Hyper-V Released |
| --- | --- | --- | --- | --- | --- |
| Microsoft Hyper-V Server | 0.0% | 11.8% | 25.9% | 30.6% | +30.6 Pts |
| ESX | 40.0% | 46.6% | 51.4% | 46.2% | +6.2 Pts |

Source: IDC WW Quarterly Server Virtualization Tracker, December 2014. Hyper-V and ESX + vSphere shares based on percent market share among all x86 new hypervisor deployments (nonpaid and paid). x86 hypervisor shipments include those sold on new servers, new nonpaid hypervisor deployments aboard new servers, and new hypervisor sales and nonpaid hypervisor deployments on installed base servers. Share gains for Hyper-V and ESX + vSphere come in part from market share transfers from older products from same vendors.

Security

Challenges in protecting high-value assets

Confidently protect sensitive customer data:

Designed for ‘zero-trust’ environments

Shielded VMs

Spotlight capabilities

Shielded Virtual Machines can only run in fabrics that are designated as owners of that virtual machine

Shielded Virtual Machines will need to be encrypted (by BitLocker or other means) in order to ensure that only the designated owners can run this virtual machine

You can convert a running Generation 2 virtual machine into a Shielded Virtual Machine

Host Guardian Service

Secure Boot Support for Linux

Providing kernel code integrity protections for Linux guest operating systems.

Works with:

• Ubuntu 14.04 and later

• SUSE Linux Enterprise Server 12

Secure Boot Support for Linux

PowerShell to enable this:

Set-VMFirmware "Ubuntu" -SecureBootTemplate MicrosoftUEFICertificateAuthority

Resiliency & Availability

Storage and Cluster Resiliency

Virtual Machine Storage Resiliency

A storage fabric outage no longer means that virtual machines crash

• Virtual machines pause and resume automatically in response to storage fabric problems

Virtual Machine Cluster Resiliency

VMs continue to run even when a node falls out of cluster membership

Resiliency to transient failures

Repeat offenders are “quarantined”

Shared VHDX

Improved Shared VHDX

Host Based Backup of Shared VHDX

Online Resize of Shared VHDX

Replica Support for Hot Add of VHDX

When you add a new virtual hard disk to a virtual machine that is being replicated, it is automatically added to the not-replicated set. This set can be updated online.

Set-VMReplication "VMName" -ReplicatedDisks (Get-VMHardDiskDrive "VMName")

Runtime Memory Resize

Dynamic memory is great, but more can be done.

For Windows Server 2016 guests, you can now increase and decrease the memory assigned to virtual machines while they are running.

Hot add / remove of network adapters

Network adapters can be added and removed from Generation 2 virtual machines while they are running.

Servicing & Upgrades

Rolling Cluster Upgrade

You can now upgrade a 2012 R2 Hyper-V cluster to Windows Server 2016 with:

• No new hardware

• No downtime

• The ability to roll-back safely if needed

New VM Upgrade Process

Windows Server 2016:

• Hyper-V will not automatically upgrade virtual machines

• Upgrading a virtual machine is a manual operation that is separate from upgrading the host

• Individual virtual machines can be moved back to earlier versions, until they have been manually upgraded

New VM Upgrade Process

Windows Server 2016:

PowerShell only:

Update-VMConfigurationVersion

Changing how we handle VM servicing

Windows Server 2016:

• VM drivers (integration services) updated when needed

• Require latest available VM drivers for that guest operating system

• Drivers delivered directly to the guest operating system via Windows Update

Scale Improvements

Evolving Hyper-V Backup

New architecture to improve reliability, scale and performance.

• Decoupling backing up virtual machines from backing up the underlying storage.

• No longer dependent on hardware snapshots for core backup functionality, but still able to take advantage of hardware capabilities when they are present.

Built in change tracking for Backup

Most Hyper-V backup solutions today implement kernel level file system filters in order to gain efficiency.

• Makes it hard for backup partners to update to newer versions of Windows

• Increases the complexity of Hyper-V deployments

Efficient change tracking for backup is now part of the platform

VM Configuration Changes

New virtual machine configuration file

• Binary format for efficient performance at scale

• Resilient logging for changes

• New file extensions: .VMCX and .VMRS

Operations

Production Checkpoints

Delivers the same Checkpoint experience that you had in Windows Server 2012 R2, but now fully supported for Production Environments

• Uses VSS instead of Saved State to create checkpoint

• Restoring a checkpoint is just like restoring a system backup

PowerShell Direct to Guest OS

You can now script PowerShell in the Guest OS directly from the Host OS

No need to configure PowerShell Remoting

Or even have network connectivity

Still need to have guest credentials

Network Adapter Identification

You can name individual network adapters in the virtual machine settings, and see the same name inside the guest operating system.

PowerShell in host:

Add-VMNetworkAdapter -VMName "TestVM" -SwitchName "Virtual Switch" -Name "Fred" -Passthru | Set-VMNetworkAdapter -DeviceNaming on

PowerShell in guest:

Get-NetAdapterAdvancedProperty | ?{$_.DisplayName -eq "Hyper-V Network Adapter Name"} | select Name, DisplayValue

ReFS Accelerated VHDX Operations

Taking advantage of an intelligent file system for:

• Instant fixed disk creation

• Instant disk merge operations

Isolation

Distributed Storage QoS

Windows Server 2016:

Leveraging Scale Out File Server to allow you to:

• Define IOPS reserves for important virtual hard disks

• Define an IOPS reserve and limit that is shared by a group of virtual machines / virtual hard disks
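The interplay of a reserve (minimum) and a limit (maximum) can be illustrated with a toy allocator. This is only a sketch under stated assumptions: the function name, the two-pass strategy, and the name-ordered handout of spare capacity are hypothetical and do not describe the actual Storage QoS scheduler in Windows Server 2016.

```python
def allocate_iops(demands, reserve, limit, capacity):
    """Split `capacity` IOPS across flows (illustrative model only).

    demands:  dict of flow name -> requested IOPS
    reserve:  minimum IOPS granted to a flow that asks for at least that much
    limit:    maximum IOPS any single flow may receive
    capacity: total IOPS the storage can deliver
    """
    # Pass 1: every flow receives min(demand, reserve).
    grant = {name: min(d, reserve) for name, d in demands.items()}
    remaining = capacity - sum(grant.values())
    if remaining < 0:
        raise ValueError("capacity cannot cover the reserves")
    # Pass 2: hand out the rest in name order, capped by demand and limit.
    for name in sorted(demands):
        want = min(demands[name], limit) - grant[name]
        extra = max(0, min(want, remaining))
        grant[name] += extra
        remaining -= extra
    return grant


# Hypothetical workload: one noisy neighbour and two well-behaved disks.
print(allocate_iops({"sql": 800, "web": 200, "noisy": 5000},
                    reserve=300, limit=1000, capacity=2000))
```

In this model the noisy disk is capped at the limit while the other disks still receive at least their reserve, which is the behaviour the two bullets above describe.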

Host Resource Protection

Dynamically identify virtual machines that are not “playing well” and reduce their resource allocation.

Networking

Software Defined Networking

Bringing Software Defined Networking to the next level

VXLAN and NVGRE support

Virtual Firewall

Software Load Balancer

Improved Gateways

RDMA using vNICs

And much much more…

One more thing….

Containers

What are Containers

LXC (Linux Containers) is an operating-system-level virtualization environment for running multiple isolated Linux systems (containers) on a single Linux control host. Containers provide operating-system-level virtualization through a virtual environment that has its own process and network space, instead of creating a full-fledged virtual machine.

Bare-Metal

Virtual Machines

Container Run-time

Container Ecosystem

Container Run-Time

Container Images

Image Repository

Applications

Linux

Application Frameworks

Containers

Deploying Containers

Requires Hyper-V Hypervisor

Demo: Containers

Software Defined Storage

The (v)Next Level

Current SDS with Server 2012 R2 (1 / 2)

Scale-Out File Server

- Access point for Hyper-V

- Scale-out data access

- Data access resiliency

Cluster Shared Volumes

- Single consistent namespace

- Fast failover

Storage Spaces

- Storage pooling

- Virtual disks

- Data Resiliency

Hardware

- Standard volume hardware

- Fast and efficient networking

- Shared storage enclosures

- SAS SSD

- SAS HDD

[Figure: Software Defined Storage System: Scale-Out File Server on top of Shared JBOD Storage]

Current SDS with Server 2012 R2 (2 / 2)

Tiered Spaces leverage file system intelligence

File system measures data activity at sub-file granularity

Heat follows files

Admin-controlled file pinning is possible

[Figure: I/O activity from the Hyper-V nodes accumulates heat at sub-file granularity]

Data movement

Automated promotion of hot data to SSD tier

Configurable scheduled task
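A minimal sketch of the heat-based promotion described above, assuming each I/O is tagged with the sub-file extent it touched. The function name, the `pinned` parameter, and the slot-count model of SSD capacity are hypothetical; this illustrates the idea only and is not how Storage Spaces implements its tiering task.

```python
from collections import Counter


def plan_ssd_tier(io_log, ssd_slots, pinned=()):
    """Return the set of extents that should live on the SSD tier.

    io_log:    iterable of extent ids, one entry per I/O (sub-file granularity)
    ssd_slots: how many extents fit on the SSD tier
    pinned:    extents an administrator pinned to the SSD tier
    """
    heat = Counter(io_log)                 # I/O activity accumulates heat
    chosen = list(pinned)[:ssd_slots]      # pinned extents are placed first
    for extent, _ in heat.most_common():   # then the hottest extents
        if len(chosen) >= ssd_slots:
            break
        if extent not in chosen:
            chosen.append(extent)
    return set(chosen)


# Hypothetical I/O trace: extent "a" is hot, "c" is warm, "b" is cold.
trace = ["a"] * 5 + ["b"] * 1 + ["c"] * 3
print(plan_ssd_tier(trace, ssd_slots=2))
print(plan_ssd_tier(trace, ssd_slots=2, pinned=["b"]))
```

Running such a plan on a schedule, rather than on every I/O, mirrors the "configurable scheduled task" on the slide.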

Write-Back Cache (WBC)

Helps smooth effects of write bursts

Uses a small amount of SSD capacity

I/O to SSD bypasses WBC

Large I/O bypasses WBC

Complementary

Together, WBC and the SSD tier address data’s short-term and long-term performance needs

Hot Data

Cold Data

What we hear from customers…

I can’t implement a Microsoft Storage Solution because…

“I wanna do HyperConverged”

“Replication on Storage-Level is missing”

“I don’t trust Microsoft doing Storage”

Storage Spaces Direct

Storage Spaces Direct at a glance

• Non-Shared Storage (inbox or local attached)

• Distributed via new Software Storage Bus

• Enables easy scale out

• Leverages all the benefits of SMB3.x / RDMA

Storage Spaces Direct logical View

Scenarios

Hyper-converged

Converged (Disaggregated)

Software Storage Bus Cache

• Cache scoped to local machine

• Read and Write cache (see table below)

• Automatic configuration when enabling S2D

  • Special partition on each caching device

    • Leaves 32GB for pool and virtual disks metadata

  • Round robin binding of SSD to HDD
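Round-robin binding can be pictured as dealing capacity devices out to caching devices in turn. The sketch below assumes simple device-name strings and a hypothetical helper name; it shows the assignment pattern only, not the actual S2D binding code.

```python
from itertools import cycle


def bind_round_robin(cache_devices, capacity_devices):
    """Map each capacity device to a caching device in round-robin order."""
    if not cache_devices:
        raise ValueError("at least one caching device is required")
    return dict(zip(capacity_devices, cycle(cache_devices)))


# Hypothetical node with two SSDs in front of five HDDs.
bindings = bind_round_robin(["ssd0", "ssd1"],
                            ["hdd0", "hdd1", "hdd2", "hdd3", "hdd4"])
for hdd, ssd in bindings.items():
    print(f"{hdd} -> {ssd}")
```

With two caching devices and five capacity devices, the first SSD ends up fronting three HDDs and the second SSD two.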

| Storage Configuration | Caching devices | Capacity devices | Caching behavior |
| --- | --- | --- | --- |
| SATA SSD + SATA HDD | All SATA SSD | All SATA HDD | Read + Write |
| NVMe SSD + SATA HDD | All NVMe SSD | All SATA HDD | Read + Write |
| NVMe SSD + SATA SSD | All NVMe SSD | All SATA SSD | Write only |
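The caching table above can be read as a lookup from the device types present in a node to the resulting cache role and behavior. The sketch below encodes only the three rows from the table; the function and constant names are hypothetical, and any combination not listed on the slide is rejected rather than guessed.

```python
# (caching devices, capacity devices, caching behavior) per storage configuration,
# copied from the three rows of the slide's table.
CACHE_TABLE = {
    frozenset({"SATA SSD", "SATA HDD"}): ("SATA SSD", "SATA HDD", "Read + Write"),
    frozenset({"NVMe SSD", "SATA HDD"}): ("NVMe SSD", "SATA HDD", "Read + Write"),
    frozenset({"NVMe SSD", "SATA SSD"}): ("NVMe SSD", "SATA SSD", "Write only"),
}


def cache_plan(device_types):
    """Return (caching, capacity, behavior) for the device types in a node."""
    key = frozenset(device_types)
    if key not in CACHE_TABLE:
        raise ValueError(f"configuration not covered by the table: {sorted(key)}")
    return CACHE_TABLE[key]


print(cache_plan(["NVMe SSD", "SATA SSD"]))
```

Note the all-flash row: when the capacity devices are themselves SSDs, the table lists the cache as write only.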

Software Storage Bus

• Virtual storage bus spanning all nodes

  • ClusPort: Initiator (virtual HBA)

  • ClusBflt: Target (virtual disk / enclosures)

• SMB3/SMB Direct transport

• Intelligent I/O Management

  • Prioritizing (App vs System)

  • De-randomization of random IO

  • Drives sequential IO pattern on rotational media

[Figure: Software Storage Bus I/O stack on two nodes: Application, Cluster Shared Volumes File System (CSVFS), File System, Virtual Disks, SpacePort, ClusPort, ClusBflt, Physical Devices, with block over SMB between the nodes]

Storage Spaces Direct: Requirements

• 4 up to 16 Storage Nodes

• 10 Gbps RDMA

• Min. 2 SSD / 1 NVMe per Node (Cache / Journal)

• Min. 4 SSD / HDD per Node (capacity)

• Supported HW Model (VMs for LAB)

Storage Spaces Direct Development Partners

Cisco UCS C3160 Rack Server

Dell PowerEdge R730xd

Fujitsu Primergy RX2540 M1

HP Apollo 2000 System

Intel® Server Board S2600WT-Based Systems

Lenovo System x3650 M5

Quanta D51PH

Demo: Storage Spaces Direct

Storage Replica

SR is used for DR preparation

Storage Replica at a glance

Volume based block-level storage replication

synchronous or asynchronous

HW agnostic (any type of source / destination volume)

SMB3 as transport protocol

Leverages RDMA / SMB3 Encryption / Multichannel

I/Os pre-aggregated prior to transfer

Managed via PowerShell, cluadmin, ASR

Storage Replica Layer

Source

Destination

SR: Stretched Cluster

Single Cluster, spanning DCs

Asymmetric Storage

Automatic Failover

Synchronous only

Datacenter A

Datacenter B

Stretched Cluster

SR: Cluster to Cluster

Multiple Clusters

Manual Failover

Synchronous or Asynchronous

Datacenter A

Cluster A

Datacenter B

Cluster B

SR: Server to Server

To separate Servers

Manual Failover

Synchronous or Asynchronous

Datacenter A

Server A

Datacenter B

Server B

Synchronous workflow

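[Figure: synchronous replication, steps 1 to 5, between Applications (local or remote), the Source Server Node (SR) and the Destination Server Node (SR); each node has a Data volume and a Log volume, labelled t on the source and t1 on the destination]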

Asynchronous workflow

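[Figure: asynchronous replication, steps 1 to 6, between Applications (local or remote), the Source Server Node (SR) and the Destination Server Node (SR); each node has a Data volume and a Log volume, labelled t on the source and t1 on the destination]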
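The practical difference between the two workflows is when the application gets its acknowledgement. The slides give only step numbers, so the event names below are an assumption based on how Storage Replica is generally described: synchronous mode acknowledges after the destination log is written, asynchronous mode acknowledges right after the source log is written. The `Node` class and `write` function are hypothetical illustrations, not Storage Replica APIs.

```python
class Node:
    """One replication partner with a write-through log and a data volume."""

    def __init__(self, name):
        self.name = name
        self.log = []    # log volume, written first
        self.data = []   # data volume, updated later from the log

    def flush(self):
        # Assumed meaning of t / t1: logged writes are applied to the data volume later.
        self.data.extend(self.log[len(self.data):])


def write(block, source, destination, mode):
    """Replicate one write and return the ordered list of events."""
    if mode not in ("sync", "async"):
        raise ValueError("mode must be 'sync' or 'async'")
    events = ["app writes data"]
    source.log.append(block)
    events.append("source log written")
    if mode == "async":
        # Asynchronous: acknowledge as soon as the local log is written.
        events.append("app write acknowledged")
    destination.log.append(block)
    events.append("destination log written")
    events.append("destination acknowledges source")
    if mode == "sync":
        # Synchronous: acknowledge only after the remote log is written.
        events.append("app write acknowledged")
    return events


for mode in ("sync", "async"):
    print(mode, "->", write("block-1", Node("A"), Node("B"), mode))
```

In this model a synchronous write cannot be acknowledged before the remote copy exists, which is why synchronous mode is sensitive to the round trip time between sites.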

Storage Replica: Requirements

• Any volume (SAS, SAN, iSCSI, Local)

• <5 ms round trip between sites for synchronous mirror

• RDMA

• Identical size for source / target volume

• SSDs for log disks recommended (min. 8GB size)

• Identical physical disk geometry (phys. sector size)

• Turn on SR write ordering for distributed app data
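Several of these requirements are simple numeric checks, so they lend themselves to a pre-flight validation. The sketch below is a hypothetical helper, not a Storage Replica tool: the `Volume` type, field names, and messages are assumptions, and only the thresholds (under 5 ms, 8 GB, identical size and sector size) come from the list above.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    size_gb: int        # volume size in GB
    sector_bytes: int   # physical sector size in bytes


def check_sr_pair(source, target, log_gb, rtt_ms, synchronous):
    """Return a list of violated requirements (an empty list means OK)."""
    problems = []
    if source.size_gb != target.size_gb:
        problems.append("source and target volume sizes differ")
    if source.sector_bytes != target.sector_bytes:
        problems.append("physical sector sizes differ")
    if log_gb < 8:
        problems.append("log disk smaller than 8 GB")
    if synchronous and rtt_ms >= 5:
        problems.append("round trip not under 5 ms for synchronous mirror")
    return problems


# Hypothetical pair: matching volumes, but too far apart for synchronous mode.
print(check_sr_pair(Volume(500, 4096), Volume(500, 4096),
                    log_gb=16, rtt_ms=12, synchronous=True))
```

The same pair passes when `synchronous=False`, since the round trip requirement in the list applies to the synchronous mirror only.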

Demo: Storage Replica

ReFS (Resilient File System)

ReFS - Data Integrity

Metadata Checksums

• Checksums protect all filesystem metadata

User Data Checksums

• Optional checksums protect file data

Checksum Verification

• Occurs on every read of checksum-protected data

• During periodic background scrubbing

Healing of Detected Corruption

• Healing occurs as soon as corruption is detected

• Healthy version retrieved from Spaces’ alternate versions (i.e. mirrors or parity data), if available

• ReFS uses the healthy version to automatically have Storage Spaces repair the corruption

[Figure: Storage Spaces 3-way mirror with ReFS]

• Checksums verified on reads

• On checksum mismatch, mirrors are consulted

• Good copies used to heal bad mirror
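The verify-then-heal loop on a mirrored space can be sketched in a few lines. This is an illustration under stated assumptions: CRC32 from Python's `zlib` stands in for whatever checksum ReFS actually uses, and the function name and in-memory "mirrors" are hypothetical.

```python
import zlib


def read_with_heal(copies, expected_crc):
    """Read one block from mirrored copies, repairing copies that fail the checksum.

    copies:       list of bytes objects, one per mirror (mutated in place on repair)
    expected_crc: checksum stored in metadata when the block was written
    """
    # Checksums are verified on read; the first copy that matches is the healthy one.
    good = next((c for c in copies if zlib.crc32(c) == expected_crc), None)
    if good is None:
        raise IOError("no healthy copy available")
    # Good copy is used to heal every mirror whose checksum does not match.
    for i, c in enumerate(copies):
        if zlib.crc32(c) != expected_crc:
            copies[i] = good
    return good


block = b"hello refs"
crc = zlib.crc32(block)
mirrors = [b"hellX refs", block, block]   # first mirror is silently corrupted
print(read_with_heal(mirrors, crc))
print(mirrors)
```

After the read, all three mirrors hold the healthy data again, so the caller never sees the corruption.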

ReFS - Resiliency and Availability

Availability

• ReFS designed to stay online and keep your data accessible when all else fails

• Even when corruptions cannot be healed via Storage Spaces’ resiliency (mirror- and parity-generated data versions)

Online Repair

• CHKDSK-like repairs performed without taking the volume offline

• No downtime due to repair operations!

On-Volume Backups of Critical Metadata

• Backups of critical metadata are automatically maintained on the volume

• Online repair process consults backups if checksum-based repair fails

• Provides additional protection for volume-critical metadata, in addition to that provided by mirror and parity spaces

ReFS - Speed and Efficiency

Efficient VM Checkpoints and Backup

VHD(X) checkpoints cleaned up without physical data copies

• Data migrated between parent and child VHD(X) files as a ReFS metadata operation

• Reduction of I/O to disk

• Increased speed

• Reduces impact of checkpoint clean-up to foreground workloads

Hyper-V Checkpoint Merging

Accelerated Fixed VHD(X) Creation

Fixed VHD(X) files zeroed with just a metadata operation

• Impact of zeroing I/O to foreground workloads eliminated

• Decreases VM deployment time

Quick Dynamic VHD(X) Expansion

Dynamic VHD(X) files zeroed with a metadata operation

• Impact of zeroing I/O for expanded regions eliminated

• Reduces latency spike for foreground workloads

Hyper-V VHDX Creation / Extension

Storage QoS

Distributed Storage QoS

Mitigate noisy neighbor issues

Monitor end to end storage performance

Deploy at high density with confidence

Storage Quality of Service (QoS)

Control and monitor storage performance

[Figure: Storage QoS components: Rate Limiter, Policy Manager, and IO Scheduler, with the Scale-Out File Server]

Storage QoS Policy Types

Single Instance

Thank you!