Ostra: Leveraging trust to thwart unwanted communication


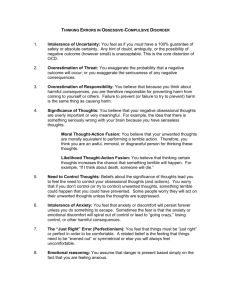

Alan Mislove, Ansley Post, Peter Druschel, Krishna P. Gummadi

Motivation
• Existing social networking sites allow any sender to reach potentially millions of users at near-zero marginal cost.
• Unwanted communication wastes human attention.
• We need a way to thwart unwanted communication.

Existing approaches to thwarting unwanted communication
• Identify unwanted communication automatically on the basis of its content.
• Target the originator by identifying them and holding them accountable.
• Impose an upfront cost on senders for each communication.

Content-based filtering
• Classify communication automatically on the basis of its content.
• Subject to both false positives and false negatives.
• Unwanted communication is classified as wanted.
• Wanted communication is misclassified as unwanted.

Originator-based filtering
• Whitelisting.
• Requires that users have unique identifiers and that content can be authenticated.
• Problem: whitelisting cannot deal with unwanted invitations.

Imposing a cost on the sender
• Deploying a decentralized email system that charges a per-message fee may require a micropayment infrastructure, which some have claimed is impractical.
• Challenge-response systems need human attention to complete the challenge.
• Some automatically generated email messages are wanted.

Content rating
• Helps users identify relevant content and avoid unwanted content.
• Also helps system administrators identify potentially inappropriate content.
• Only applicable to one-to-many communication, and can be manipulated in a system with weak user identities.

Leveraging relationships
• Trust relationships are used to eliminate the need for a trusted certificate authority.
• In Ostra, they are used to ensure that a user with multiple identities cannot send additional unwanted communication unless she also has additional relationships.

Ostra strawman
• Three assumptions
• 1. Each user of the communication system has exactly one unique digital identity.
• 2. A trusted entity observes all user actions and associates them with the identity of the user performing the action.
• 3. Users classify the communication they receive as wanted or unwanted.

System model
• With Ostra, communication consists of three phases.
• 1. Authorization (Ostra checks whether a token can be issued to the sender).
• 2. Transmission (Ostra attaches the token at the sender side and checks it at the receiving side).
• 3. Classification (the recipient classifies the communication, providing feedback to Ostra).
Figure 1

User Credit
• Ostra maintains a per-user credit balance with a range [L, U], where L <= 0 <= U.
• When a token is requested, the sender's L is raised by one and the receiver's U is lowered by one. If these adjustments would push either credit balance outside its range, Ostra refuses to issue the token; otherwise the token is issued.
• When the receiver classifies the communication, an unwanted classification transfers one credit from the sender to the receiver; otherwise the adjustments are undone.

User Credit
• Properties
• 1. Limits the amount of unwanted communication a sender can produce.
• 2. Allows an arbitrary amount of wanted communication.
• Limits the number of tokens that can be issued for a specific recipient before that recipient classifies any of the associated communication.

Credit adjustment
• What if a legitimate user gradually sends a lot of unwanted communication and his credit balance reaches its bound?
• Add a decay rate d, with 0 <= d <= 1. Outstanding credit (positive or negative) decays at rate d per day.
• The maximum rate at which a user can legitimately produce unwanted communication is d*L + S.

Credit adjustment
• Denial-of-service attacks are still possible.
• Introduce a special account C, like a credit bank, that only accepts deposits; withdrawals are not allowed.

Credit adjustment
• Add a timeout T: if a communication has not been classified by the receiver after T, the credit bounds are automatically reset.
• This enables receivers to plausibly deny receipt of communication.
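The user-credit rules from the strawman slides can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the class and method names, the bounds of -3 and 3, and the choice to undo the bound adjustments on every classification before transferring credit are all assumptions made for the example. The overflow account C and the timeout T are left out.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Per-user credit state; the bounds -3 and 3 are illustrative only."""
    lower: float = -3.0    # L <= 0
    upper: float = 3.0     # U >= 0
    balance: float = 0.0   # every user joins with zero credit


class UserCreditOstra:
    """Hypothetical trusted entity that tracks user credit."""

    def __init__(self):
        self.accounts = {}
        self.pending = {}      # token -> (sender, receiver)
        self.next_token = 0

    def account(self, user):
        return self.accounts.setdefault(user, Account())

    def authorize(self, sender, receiver):
        """Issue a token, or return None if a balance would leave its range."""
        s, r = self.account(sender), self.account(receiver)
        # Raising the sender's L and lowering the receiver's U by one must
        # still leave both balances inside their ranges.
        if s.balance < s.lower + 1 or r.balance > r.upper - 1:
            return None
        s.lower += 1
        r.upper -= 1
        token = self.next_token
        self.next_token += 1
        self.pending[token] = (sender, receiver)
        return token

    def classify(self, token, wanted):
        """Apply the recipient's feedback for a delivered communication."""
        sender, receiver = self.pending.pop(token)
        s, r = self.account(sender), self.account(receiver)
        # Assumption: the bound adjustments are undone in both cases.
        s.lower -= 1
        r.upper += 1
        if not wanted:
            # One credit moves from the sender to the receiver.
            s.balance -= 1
            r.balance += 1

    def decay(self, d):
        """Shrink all outstanding credit by a fraction d (0 <= d <= 1)."""
        for acct in self.accounts.values():
            acct.balance *= (1 - d)
```

With these illustrative bounds, a sender whose first three messages are all classified as unwanted is refused a fourth token, while wanted communication leaves balances unchanged. Calling `decay` with a positive `d` gradually restores the ability to send.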
Properties
• Ostra's credit balances observe the following invariant.
• "At all times, the sum of all credit balances is 0."
• This holds because i) users have an initial zero balance when joining the system, ii) all operations transfer credit among users, and iii) credit decay affects positive and negative credit at the same rate.
Table 1

Multi-party communication
• A moderator receives and classifies the communication on behalf of all members of the group.
• Only the moderator's attention is wasted by unwanted communication, and the cost is the same as in the two-party case.
• Example: YouTube's "flag as inappropriate" mechanism.

Ostra Design
• Strong user identities are not practical in many applications (they require strong background checks).
• The Ostra design needs to be refined so that it does not require strong user identities.

Trust networks
• There is a non-trivial cost for initiating and maintaining links in the network.
• The network must be connected (a path exists between any two user identities).
• Ostra assumes the system is a trust network and that it has a complete view of the network.

Link Credit
• Use link credit instead of user credit.
• Each link has a link credit balance B, with initial value 0, and a range [L, U], where L <= 0 <= U and L <= B <= U.
Figure 2

Communication among friends
Figure 3

Communication among non-friends
Figure 4

Generalization of Ostra strawman
Figure 5

Multiple identities
Figure 6

Malicious attack
• Targeting users: the victim can forgive some of the debt on one of her links, or transfer credit to the overflow account C.
• Targeting links: the structure of social networks, which have a dense core, is unlikely to be affected by this kind of attack on a large scale.

Discussion
• Joining Ostra: a new user must be introduced by an existing Ostra user.
• Content classification: feedback from the recipient is a necessary small cost to pay.
• Parameter setting: L, U, and d should be chosen such that most legitimate users are not affected by the rate limit, while the amount of unwanted communication is still kept very low.

Discussion
• Compromised user accounts: these are easily detected, and even when an account is compromised, its communication is still subject to the same limits that apply to any individual user.

Evaluation
• Experimental trust network: YouTube (a large, measured subset with 446,181 users and 1,728,938 symmetric links).
• Experimental traffic workload: email data containing 150 users and covering 13,978 emails.
Figure 7

Evaluation
• Setting parameters: two experiments with different assumptions about the average delay between the arrival of a message and its classification.
• Table 3

Evaluation
• Expected performance.
• The maximum rate is d*L*D + S, where D is the degree of the user.
• As L, d, or the proportion of malicious users in the network increases, we expect the overall rate of unwanted messages to increase.

Evaluation
• Figure 8

Evaluation
• Figure 9

Evaluation
• Figure 10

Evaluation
• Figure 11

Decentralizing Ostra
• Each participating user runs an Ostra software agent on her own computer. The agent stores the user's key material and maintains secure network connections to the Ostra agents of the user's trusted friends.
• The two Ostra agents adjacent to a trust link each store a copy of the link's balance and bounds.

Routing
• To find routes within the local neighborhood of a user, use an efficient Bloom-filter-based mechanism.
• To find longer paths, use landmark routing to route to the destination's neighborhood, and then use Bloom filters to reach the destination.

Decentralized credit update
• Figure 12
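As a rough illustration of how credit might be updated link by link, the Python sketch below keeps a balance and bounds per trust link and reserves credit on every link of a path before a message is sent. The slides do not spell out this procedure, so the class names, the bounds of -3 and 3, the caller-supplied path, and the way reservations are undone are all assumptions. In a decentralized deployment each `Link` object would correspond to the copy of the balance held by the two adjacent agents.

```python
from itertools import count


class Link:
    """Credit state of one trust link, seen from endpoint `a` (illustrative)."""

    def __init__(self, a, b, lower=-3, upper=3):
        self.a, self.b = a, b
        self.balance, self.lower, self.upper = 0, lower, upper

    def can_reserve(self, sender):
        # The sending side may lose one credit on this link.
        if sender == self.a:
            return self.balance - 1 >= self.lower
        return self.balance + 1 <= self.upper

    def reserve(self, sender, amount=1):
        # Tighten the bound on the side that may lose credit.
        if sender == self.a:
            self.lower += amount
        else:
            self.upper -= amount

    def transfer(self, sender):
        # Move one credit across the link, away from the sending side.
        self.balance += -1 if sender == self.a else 1


class TrustNetwork:
    """Hypothetical network of trust links with per-link credit."""

    def __init__(self):
        self.links = {}
        self.pending = {}
        self.tokens = count()

    def befriend(self, x, y):
        self.links[frozenset((x, y))] = Link(x, y)

    def _hops(self, path):
        # Pair each link on the path with the endpoint that sends across it.
        return [(self.links[frozenset((x, y))], x)
                for x, y in zip(path, path[1:])]

    def authorize(self, path):
        """Reserve one credit on every link of the path, or return None."""
        hops = self._hops(path)
        if not all(link.can_reserve(frm) for link, frm in hops):
            return None
        for link, frm in hops:
            link.reserve(frm)
        token = next(self.tokens)
        self.pending[token] = hops
        return token

    def classify(self, token, wanted):
        """Undo the reservations; on 'unwanted', shift credit on each link."""
        for link, frm in self.pending.pop(token):
            link.reserve(frm, amount=-1)
            if not wanted:
                link.transfer(frm)
```

In this sketch, a sender with a single trust link is capped by that link's bounds no matter how many extra identities sit behind it, which matches the point made on the "Leveraging relationships" slide.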