Business Statistics: A Decision


Statistics

Please Stand By….

Chap 4-1

Chapter 4: Probability and Distributions

Randomness

General Probability

Probability Models

Random Variables

Moments of Random Variables

Chap 4-2

Randomness

The language of probability

Thinking about randomness

The uses of probability

Chap 4-3

Randomness

Chap 4-4

Randomness

Chap 4-5

Randomness

Chap 4-6

Randomness

Technical Analysis 1 | More Examples | Another (Tipping Pts)

Chap 4-7

Chapter Goals

After completing this chapter, you should be

able to:

Explain three approaches to assessing

probabilities

Apply common rules of probability

Use Bayes’ Theorem for conditional probabilities

Distinguish between discrete and continuous

probability distributions

Compute the expected value and standard

deviation for a probability distribution

Chap 4-8

Important Terms

Probability – the chance that an uncertain event

will occur (always between 0 and 1)

Experiment – a process of obtaining outcomes

for uncertain events

Elementary Event – the most basic outcome

possible from a simple experiment

Randomness –

Does not mean haphazard

Description of the kind of order that emerges only in

the long run

Chap 4-9

Important Terms (CONT’D)

Sample Space – the collection of all possible

elementary outcomes

Probability Distribution Function

Maps events to intervals on the real line

Discrete probability mass

Continuous probability density

Chap 4-10

Sample Space

The Sample Space is the collection of all

possible outcomes (based on a probabilistic

experiment)

e.g., All 6 faces of a die:

e.g., All 52 cards of a bridge deck:

Chap 4-11

Events

Elementary event – An outcome from a sample

space with one characteristic

Example: A red card from a deck of cards

Event – May involve two or more outcomes

simultaneously

Example: An ace that is also red from a deck of

cards

Chap 4-12

Elementary Events

An automobile consultant records fuel type and

vehicle type for a sample of vehicles

2 Fuel types: Gasoline, Diesel

3 Vehicle types: Truck, Car, SUV

6 possible elementary events:

e1 = Gasoline, Truck

e2 = Gasoline, Car

e3 = Gasoline, SUV

e4 = Diesel, Truck

e5 = Diesel, Car

e6 = Diesel, SUV

[Tree diagram: each fuel type branches into the vehicle types, ending in e1 through e6]
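The six elementary events above can be enumerated mechanically. The following is a minimal sketch, assuming Python and using illustrative variable names that are not part of the original slides:

```python
from itertools import product

fuel_types = ["Gasoline", "Diesel"]
vehicle_types = ["Truck", "Car", "SUV"]

# Each elementary event pairs one fuel type with one vehicle type.
sample_space = list(product(fuel_types, vehicle_types))

for i, (fuel, vehicle) in enumerate(sample_space, start=1):
    print(f"e{i}: {fuel}, {vehicle}")
```

With 2 fuel types and 3 vehicle types, the list has 2 x 3 = 6 entries, in the same order as e1 through e6.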

Chap 4-13

Independent vs. Dependent Events

Independent Events

E1 = heads on one flip of fair coin

E2 = heads on second flip of same coin

Result of second flip does not depend on the result of

the first flip.

Dependent Events

E1 = rain forecasted on the news

E2 = take umbrella to work

Probability of the second event is affected by the

occurrence of the first event

Chap 4-14

Probability Concepts

Mutually Exclusive Events

If E1 occurs, then E2 cannot occur

E1 and E2 have no common elements

E1 = Black Cards

E2 = Red Cards

A card cannot be black and red at the same time.

Chap 4-15

Coming up with Probability

Empirically

From the data!

Based on observation, not theory

Probability describes what happens in very

many trials.

We must actually observe many trials to pin

down a probability

Based on belief (Bayesian Technique)

Chap 4-16

Assigning Probability

Classical Probability Assessment

$$P(E_i) = \frac{\text{Number of ways } E_i \text{ can occur}}{\text{Total number of elementary events}}$$

Relative Frequency of Occurrence

$$\text{Relative Freq. of } E_i = \frac{\text{Number of times } E_i \text{ occurs}}{N}$$

Subjective Probability Assessment

An opinion or judgment by a decision maker about

the likelihood of an event
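To contrast the first two assessment approaches, here is a small sketch that computes the classical probability of rolling a 4 on a fair die and compares it with a relative frequency from simulated rolls. The function names, the seed, and the number of trials are illustrative assumptions, not part of the slides.

```python
import random
from fractions import Fraction

def classical_probability(ways, total):
    # Number of ways the event can occur / total number of elementary events.
    return Fraction(ways, total)

def relative_frequency(event, trials, rng):
    # Number of times the event occurs / N, over N simulated die rolls.
    hits = sum(1 for _ in range(trials) if event(rng.randint(1, 6)))
    return hits / trials

rng = random.Random(0)  # fixed seed so the run is repeatable
p_classical = classical_probability(1, 6)
p_observed = relative_frequency(lambda face: face == 4, 60_000, rng)

print(float(p_classical), p_observed)
```

The observed frequency should settle near 1/6 as the number of trials grows, which is the sense in which probability describes what happens in very many trials.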

Chap 4-17

Calculating Probability

Counting Outcomes

$$\frac{\text{Number of ways } E_i \text{ can occur}}{\text{Total number of elementary events}}$$

Observing Outcomes in Trials

Chap 4-18

Counting

Chap 4-19

Counting

a b c d e ….

1. ___ ___ ___ ___

2. N take n

3. N take k: order not important, so fewer than permutations
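As a rough illustration of the counting ideas on this slide, the sketch below assumes that "N take n" refers to ordered selections (permutations) and "N take k" to unordered selections (combinations). That reading, and the choice of 5 items taken 3 at a time, are assumptions made for the example.

```python
import math

n_items = 5   # e.g. the items a, b, c, d, e
k = 3         # how many are selected

# Ordered selections without repetition: N! / (N - k)!
ordered = math.perm(n_items, k)

# Unordered selections: N! / (k! (N - k)!)
unordered = math.comb(n_items, k)

print(ordered, unordered)  # 60 10
```

Each unordered selection of 3 items corresponds to 3! = 6 orderings, which is why the combination count is smaller than the permutation count.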

Chap 4-20

Counting

Chap 4-21

Rules of Probability

S is sample space

Pr(S) = 1

Events measured in numbers result in a

Probability Distribution

Chap 4-22

Rules of Probability

Rules for Possible Values and Sum

Individual Values: 0 ≤ P(ei) ≤ 1 for any event ei

Sum of All Values:

$$\sum_{i=1}^{k} P(e_i) = 1$$

where:

k = Number of elementary events in the sample space

ei = ith elementary event

Chap 4-23

Addition Rule for Elementary Events

The probability of an event Ei is equal to the

sum of the probabilities of the elementary

events forming Ei.

That is, if:

Ei = {e1, e2, e3}

then:

P(Ei) = P(e1) + P(e2) + P(e3)

Chap 4-24

Complement Rule

The complement of an event E is the collection of

all possible elementary events not contained in

event E. The complement of event E is

represented by $\bar{E}$.

Complement Rule:

$$P(\bar{E}) = 1 - P(E)$$

Or,

$$P(E) + P(\bar{E}) = 1$$

Chap 4-25

Addition Rule for Two Events

Addition Rule:

P(E1 or E2) = P(E1) + P(E2) - P(E1 and E2)

[Venn diagram: overlapping regions E1 and E2]

Don’t count common elements twice!

Chap 4-26

Addition Rule Example

P(Red or Ace) = P(Red) +P(Ace) - P(Red and Ace)

= 26/52 + 4/52 - 2/52 = 28/52

| Type | Red | Black | Total |
|---|---|---|---|
| Ace | 2 | 2 | 4 |
| Non-Ace | 24 | 24 | 48 |
| Total | 26 | 26 | 52 |

Don’t count the two red aces twice!
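The card example can be checked two ways: by applying the addition rule and by counting cards directly. This is a minimal sketch; the deck encoding and variable names are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

ranks = ["A"] + [str(n) for n in range(2, 11)] + ["J", "Q", "K"]
suits = {"Hearts": "Red", "Diamonds": "Red", "Clubs": "Black", "Spades": "Black"}
deck = list(product(ranks, suits))  # 52 (rank, suit) pairs

# Addition rule: P(Red or Ace) = P(Red) + P(Ace) - P(Red and Ace)
p_red = Fraction(26, 52)
p_ace = Fraction(4, 52)
p_red_and_ace = Fraction(2, 52)
p_rule = p_red + p_ace - p_red_and_ace

# Direct count of cards that are red, an ace, or both
favorable = sum(1 for rank, suit in deck if suits[suit] == "Red" or rank == "A")
p_count = Fraction(favorable, len(deck))

print(p_rule, p_count)  # 7/13 7/13
```

Both routes give 28/52, which reduces to 7/13.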

Chap 4-27

Addition Rule for

Mutually Exclusive Events

If E1 and E2 are mutually exclusive, then

P(E1 and E2) = 0

So

P(E1 or E2) = P(E1) + P(E2) - P(E1 and E2)

= P(E1) + P(E2)

Chap 4-28

Conditional Probability

Conditional probability for any

two events E1 , E2:

$$P(E_1 \mid E_2) = \frac{P(E_1 \text{ and } E_2)}{P(E_2)}$$

where $P(E_2) > 0$

Chap 4-29

Conditional Probability Example

Of the cars on a used car lot, 70% have air

conditioning (AC) and 40% have a CD player

(CD). 20% of the cars have both.

What is the probability that a car has a CD

player, given that it has AC ?

i.e., we want to find P(CD | AC)

Chap 4-30

Conditional Probability Example

(continued)

Of the cars on a used car lot, 70% have air conditioning

(AC) and 40% have a CD player (CD).

20% of the cars have both.

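|  | CD | No CD | Total |
|---|---|---|---|
| AC | .2 |  | .7 |
| No AC |  |  |  |
| Total | .4 |  | 1.0 |

$$P(CD \mid AC) = \frac{P(CD \text{ and } AC)}{P(AC)} = \frac{.2}{.7} = .2857$$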

Chap 4-31

Conditional Probability Example

(continued)

Given AC, we only consider the top row (70% of the cars). The cars with both AC and

a CD player make up 20% of all cars, so among cars with AC the share is .2/.7, about 28.57%.

|  | CD | No CD | Total |
|---|---|---|---|
| AC | .2 | .5 | .7 |
| No AC | .2 | .1 | .3 |
| Total | .4 | .6 | 1.0 |

$$P(CD \mid AC) = \frac{P(CD \text{ and } AC)}{P(AC)} = \frac{.2}{.7} = .2857$$
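The same calculation can be sketched from the joint probability table. The dictionary layout and names below are illustrative assumptions; the probabilities are those of the used car example.

```python
# Joint probabilities for (air conditioning, CD player)
joint = {
    ("AC", "CD"): 0.2,
    ("AC", "No CD"): 0.5,
    ("No AC", "CD"): 0.2,
    ("No AC", "No CD"): 0.1,
}

# Marginal probability of AC: sum over the AC row
p_ac = sum(p for (ac, _), p in joint.items() if ac == "AC")

# Conditional probability: P(CD | AC) = P(CD and AC) / P(AC)
p_cd_given_ac = joint[("AC", "CD")] / p_ac

print(round(p_ac, 2), round(p_cd_given_ac, 4))  # 0.7 0.2857
```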

Chap 4-32

For Independent Events:

Conditional probability for

independent events E1 , E2:

$P(E_1 \mid E_2) = P(E_1)$ where $P(E_2) > 0$

$P(E_2 \mid E_1) = P(E_2)$ where $P(E_1) > 0$

Chap 4-33

Multiplication Rules

Multiplication rule for two events E1 and E2:

$$P(E_1 \text{ and } E_2) = P(E_1)\, P(E_2 \mid E_1)$$

Note: If E1 and E2 are independent, then $P(E_2 \mid E_1) = P(E_2)$

and the multiplication rule simplifies to

$$P(E_1 \text{ and } E_2) = P(E_1)\, P(E_2)$$

Chap 4-34

Tree Diagram Example

Gasoline: P(E1) = 0.8

P(E1 and E3) = 0.8 x 0.2 = 0.16

Car: P(E4|E1) = 0.5, so P(E1 and E4) = 0.8 x 0.5 = 0.40

P(E1 and E5) = 0.8 x 0.3 = 0.24

Diesel: P(E2) = 0.2

P(E2 and E3) = 0.2 x 0.6 = 0.12

Car: P(E4|E2) = 0.1, so P(E2 and E4) = 0.2 x 0.1 = 0.02

P(E2 and E5) = 0.2 x 0.3 = 0.06
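The tree diagram applies the multiplication rule along each branch. The sketch below rebuilds the six joint probabilities. It assumes that E3 and E5 label the two non-car vehicle branches and that the final Diesel branch is the event "E2 and E5"; both readings are inferred from the products shown, not stated on the slide.

```python
# First-level branches: fuel type
p_fuel = {"E1": 0.8, "E2": 0.2}  # E1 = Gasoline, E2 = Diesel

# Second-level branches: P(vehicle event | fuel event)
p_vehicle_given_fuel = {
    "E1": {"E3": 0.2, "E4": 0.5, "E5": 0.3},
    "E2": {"E3": 0.6, "E4": 0.1, "E5": 0.3},
}

# Multiplication rule: P(fuel and vehicle) = P(fuel) * P(vehicle | fuel)
joint = {
    (fuel, vehicle): p_fuel[fuel] * p_cond
    for fuel, branches in p_vehicle_given_fuel.items()
    for vehicle, p_cond in branches.items()
}

for (fuel, vehicle), p in joint.items():
    print(f"P({fuel} and {vehicle}) = {p:.2f}")
```

The six branch probabilities sum to 1, which is a quick check that the tree covers the whole sample space.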

Chap 4-35

Get Ready….

More Probability

Examples

Random Variables

Probability Distributions

Chap 4-36

Introduction to Probability

Distributions

Random Variable – “X”

Is a function from the sample space to

another space, usually Real line

Represents a possible numerical value from

a random event

Each r.v. has a Distribution Function – FX(x),

fX(x) based on that in the sample space

Assigns probability to the (numerical)

outcomes (discrete values or intervals)

Chap 4-37

Random Variables

Not Easy to Describe

Chap 4-38

Random Variables

Chap 4-39

Random Variables

Not Easy to Describe

Chap 4-40

Introduction to Probability

Distributions

Random Variable

Represents a possible numerical value from

a random event

Random

Variables

Discrete

Random Variable

Continuous

Random Variable

Chap 4-41

Discrete Probability Distribution

A list of all possible [ xi , P(xi) ] pairs

xi = Value of Random Variable (Outcome)

P(xi) = Probability Associated with Value

xi’s are mutually exclusive

(no overlap)

xi’s are collectively exhaustive

(nothing left out)

0 ≤ P(xi) ≤ 1 for each xi

Σ P(xi) = 1

Chap 4-42

Discrete Random Variables

Can only assume a countable number of values

Examples:

Roll a die twice

Let x be the number of times 4 comes up

(then x could be 0, 1, or 2 times)

Toss a coin 5 times.

Let x be the number of heads

(then x = 0, 1, 2, 3, 4, or 5)

Chap 4-43

Discrete Probability Distribution

Experiment: Toss 2 Coins.

4 possible outcomes: TT, TH, HT, HH

Let x = # heads.

Probability Distribution

| x Value | Probability |
|---|---|
| 0 | 1/4 = .25 |
| 1 | 2/4 = .50 |
| 2 | 1/4 = .25 |

[Bar chart: Probability versus x, with bars of height .25, .50, and .25 at x = 0, 1, 2]
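The two-coin distribution can be derived by listing the four equally likely outcomes and counting heads. This is a small sketch with illustrative names, assuming fair coins:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All equally likely outcomes of tossing 2 coins
outcomes = list(product("HT", repeat=2))

# x = number of heads in each outcome
counts = Counter(outcome.count("H") for outcome in outcomes)

# Classical probability: favorable outcomes / total outcomes
distribution = {x: Fraction(c, len(outcomes)) for x, c in sorted(counts.items())}

for x, p in distribution.items():
    print(x, p, float(p))
```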

Chap 4-44


The Distribution Function

Assigns Probability to Outcomes

F, f ≥ 0; F is right-continuous

F(x) → 0 as x → −∞ and F(x) → 1 as x → +∞

P(X ≤ a) = FX(a)

Discrete Random Variable

Continuous Random Variable

Chap 4-45

Discrete Random Variable

Summary Measures - Moments

Expected Value of a discrete distribution

(Weighted Average)

E(x) = Σ xi P(xi)

Example: Toss 2 coins,

x = # of heads,

compute expected value of x:

| x | P(x) |
|---|---|
| 0 | .25 |
| 1 | .50 |
| 2 | .25 |

E(x) = (0 x .25) + (1 x .50) + (2 x .25)

= 1.0

Chap 4-46

Discrete Random Variable

Summary Measures

(continued)

Standard Deviation of a discrete distribution

$$\sigma_x = \sqrt{\sum \{x - E(x)\}^2 P(x)}$$

where:

E(x) = Expected value of the random variable

x = Values of the random variable

P(x) = Probability of the random variable having

the value of x

Chap 4-47

Discrete Random Variable

Summary Measures

(continued)

Example: Toss 2 coins, x = # heads,

compute standard deviation (recall E(x) = 1)

$$\sigma_x = \sqrt{\sum \{x - E(x)\}^2 P(x)}$$

$$\sigma_x = \sqrt{(0-1)^2(.25) + (1-1)^2(.50) + (2-1)^2(.25)} = \sqrt{.50} = .707$$

Possible number of heads

= 0, 1, or 2
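Both summary measures for the two-coin example can be computed directly from the distribution. The sketch below uses illustrative names and follows the weighted-average definitions given on these slides:

```python
import math

# Distribution of x = number of heads in 2 coin tosses
distribution = {0: 0.25, 1: 0.50, 2: 0.25}

# Expected value: sum of x * P(x)
expected = sum(x * p for x, p in distribution.items())

# Variance: sum of (x - E(x))^2 * P(x); standard deviation is its square root
variance = sum((x - expected) ** 2 * p for x, p in distribution.items())
std_dev = math.sqrt(variance)

print(expected, variance, round(std_dev, 3))  # 1.0 0.5 0.707
```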

Chap 4-48

Two Discrete Random Variables

Expected value of the sum of two discrete

random variables:

E(x + y) = E(x) + E(y)

= Σ x P(x) + Σ y P(y)

(The expected value of the sum of two random

variables is the sum of the two expected

values)

Chap 4-49

Sums of Random Variables

Usually we discuss sums of INDEPENDENT random

variables, Xi i.i.d.

Only sometimes does the sum ΣXi have a density of the same form as f

Due to linearity of the expectation operator,

E(ΣXi) = ΣE(Xi), and because the Xi are independent,

Var(ΣXi) = Σ Var(Xi)

CLT: Let Sn = ΣXi; then for large n, (Sn − E(Sn)) is approximately N(0, Var(Sn))
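The additivity of means and variances can be verified exactly for a small case. The sketch below uses two independent fair dice, an example chosen here for illustration and not taken from the slides, and builds the distribution of their sum.

```python
from fractions import Fraction
from itertools import product

die = {face: Fraction(1, 6) for face in range(1, 7)}

def mean(dist):
    return sum(x * p for x, p in dist.items())

def variance(dist):
    m = mean(dist)
    return sum((x - m) ** 2 * p for x, p in dist.items())

# Distribution of the sum of two independent dice:
# independence lets us multiply the marginal probabilities.
sum_dist = {}
for (a, pa), (b, pb) in product(die.items(), repeat=2):
    sum_dist[a + b] = sum_dist.get(a + b, 0) + pa * pb

print(mean(sum_dist), variance(sum_dist))  # 7 35/6
```

The mean of the sum equals the sum of the means regardless of dependence; the variance result relies on the two dice being independent.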

Chap 4-50

Covariance

Covariance between two discrete random

variables:

σxy = Σ [xi – E(x)][yj – E(y)] P(xi, yj)

where:

xi = possible values of the x discrete random variable

yj = possible values of the y discrete random variable

P(xi ,yj) = joint probability of the values of xi and yj occurring

Chap 4-51

Interpreting Covariance

Covariance between two discrete random

variables:

σxy > 0

x and y tend to move in the same direction

σxy < 0

x and y tend to move in opposite directions

σxy = 0

x and y do not move closely together

Chap 4-52

Correlation Coefficient

The Correlation Coefficient shows the

strength of the linear association between

two variables

$$\rho = \frac{\sigma_{xy}}{\sigma_x \sigma_y}$$

where:

ρ = correlation coefficient (“rho”)

σxy = covariance between x and y

σx = standard deviation of variable x

σy = standard deviation of variable y

Chap 4-53

Interpreting the

Correlation Coefficient

The Correlation Coefficient always falls

between -1 and +1

ρ = 0

x and y are not linearly related.

The farther ρ is from zero, the stronger the linear

relationship:

ρ = +1

x and y have a perfect positive linear relationship

ρ = -1

x and y have a perfect negative linear relationship
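To make the covariance and correlation formulas concrete, here is a sketch that evaluates them for a small joint distribution. The joint probabilities are hypothetical values invented for this example.

```python
import math

# Hypothetical joint distribution P(x, y) for two discrete random variables
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}

# Expected values from the joint distribution
e_x = sum(x * p for (x, _), p in joint.items())
e_y = sum(y * p for (_, y), p in joint.items())

# Covariance: sum of [x - E(x)][y - E(y)] P(x, y)
cov_xy = sum((x - e_x) * (y - e_y) * p for (x, y), p in joint.items())

# Standard deviations of x and y
sd_x = math.sqrt(sum((x - e_x) ** 2 * p for (x, _), p in joint.items()))
sd_y = math.sqrt(sum((y - e_y) ** 2 * p for (_, y), p in joint.items()))

# Correlation coefficient: covariance scaled by both standard deviations
rho = cov_xy / (sd_x * sd_y)

print(round(cov_xy, 4), round(rho, 4))  # 0.15 0.6
```

Here the covariance is positive, so x and y tend to move in the same direction, and ρ = 0.6 indicates a moderately strong positive linear association.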

Chap 4-54

Useful Discrete Distributions

Discrete Uniform

Binary – Success/Fail (Bernoulli)

Binomial

Poisson

Empirical

Piano Keys

Other “stuff that happens” in life

Chap 4-55

Useful Continuous Distributions

Finite Support

Uniform fX(x)=c

Beta

Infinite Support

Gaussian (Normal) N(μ, σ)

Log-normal

Gamma

Exponential

Chap 4-56

Section Summary

Described approaches to assessing probabilities

Developed common rules of probability

Distinguished between discrete and continuous

probability distributions

Examined discrete and continuous probability

distributions and their moments (summary

measures)

Chap 4-57

Probability Distributions

Discrete Probability Distributions: Binomial, Poisson, etc.

Continuous Probability Distributions: Normal, Uniform, etc.

Chap 4-58

Some Discrete Distributions

Chap 4-59

Binomial Distribution Formula

$$P(x) = \frac{n!}{x!\,(n-x)!}\, p^x q^{n-x}$$

P(x) = probability of x successes in n trials,

with probability of success p on each trial

x = number of ‘successes’ in sample,

(x = 0, 1, 2, ..., n)

p = probability of “success” per trial

q = probability of “failure” = (1 – p)

n = number of trials (sample size)

Example: Flip a coin four

times, let x = # heads:

n=4

p = 0.5

q = (1 - .5) = .5

x = 0, 1, 2, 3, 4
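The coin-flip example can be worked through with a direct implementation of the formula. This is a minimal sketch; `binomial_pmf` is an illustrative helper name, not a library function.

```python
import math

def binomial_pmf(x, n, p):
    # n! / (x! (n - x)!) * p^x * q^(n - x), with q = 1 - p
    q = 1 - p
    return math.comb(n, x) * p**x * q ** (n - x)

n, p = 4, 0.5
distribution = [binomial_pmf(x, n, p) for x in range(n + 1)]

for x, prob in enumerate(distribution):
    print(x, prob)
```

For four fair coin flips, the probabilities are 1/16, 4/16, 6/16, 4/16, and 1/16 for x = 0 through 4.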

Chap 4-60

Binomial Characteristics

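Mean: $\mu = np$

Standard deviation: $\sigma = \sqrt{npq}$

Examples

n = 5, p = 0.1: $\mu = np = (5)(.1) = 0.5$ and $\sigma = \sqrt{npq} = \sqrt{(5)(.1)(1 - .1)} = 0.6708$

n = 5, p = 0.5: $\mu = np = (5)(.5) = 2.5$ and $\sigma = \sqrt{npq} = \sqrt{(5)(.5)(1 - .5)} = 1.118$

[Two bar charts of P(X) versus X = 0, 1, ..., 5: one for n = 5, p = 0.1 and one for n = 5, p = 0.5]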

Chap 4-61

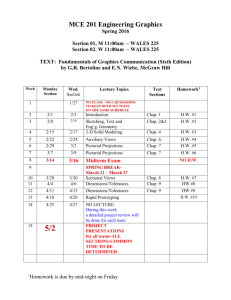

Using Binomial Tables

n = 10

| x | p=.15 | p=.20 | p=.25 | p=.30 | p=.35 | p=.40 | p=.45 | p=.50 | |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.1969 | 0.1074 | 0.0563 | 0.0282 | 0.0135 | 0.0060 | 0.0025 | 0.0010 | 10 |
| 1 | 0.3474 | 0.2684 | 0.1877 | 0.1211 | 0.0725 | 0.0403 | 0.0207 | 0.0098 | 9 |
| 2 | 0.2759 | 0.3020 | 0.2816 | 0.2335 | 0.1757 | 0.1209 | 0.0763 | 0.0439 | 8 |
| 3 | 0.1298 | 0.2013 | 0.2503 | 0.2668 | 0.2522 | 0.2150 | 0.1665 | 0.1172 | 7 |
| 4 | 0.0401 | 0.0881 | 0.1460 | 0.2001 | 0.2377 | 0.2508 | 0.2384 | 0.2051 | 6 |
| 5 | 0.0085 | 0.0264 | 0.0584 | 0.1029 | 0.1536 | 0.2007 | 0.2340 | 0.2461 | 5 |
| 6 | 0.0012 | 0.0055 | 0.0162 | 0.0368 | 0.0689 | 0.1115 | 0.1596 | 0.2051 | 4 |
| 7 | 0.0001 | 0.0008 | 0.0031 | 0.0090 | 0.0212 | 0.0425 | 0.0746 | 0.1172 | 3 |
| 8 | 0.0000 | 0.0001 | 0.0004 | 0.0014 | 0.0043 | 0.0106 | 0.0229 | 0.0439 | 2 |
| 9 | 0.0000 | 0.0000 | 0.0000 | 0.0001 | 0.0005 | 0.0016 | 0.0042 | 0.0098 | 1 |
| 10 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0001 | 0.0003 | 0.0010 | 0 |
| | p=.85 | p=.80 | p=.75 | p=.70 | p=.65 | p=.60 | p=.55 | p=.50 | x |

Examples:

n = 10, p = .35, x = 3:

P(x = 3|n =10, p = .35) = .2522

n = 10, p = .75, x = 2:

P(x = 2|n =10, p = .75) = .0004
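The two table lookups above can be reproduced from the binomial formula. The sketch below also shows why the table can be read from the bottom and right for p > .50: the probability of x successes with success probability p equals the probability of n − x successes with success probability 1 − p. The helper name `binomial_pmf` is illustrative.

```python
import math

def binomial_pmf(x, n, p):
    # Probability of exactly x successes in n independent trials
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

# Lookup 1: read from the top and left of the table
p_first = binomial_pmf(3, 10, 0.35)

# Lookup 2: read from the bottom and right of the table
p_second = binomial_pmf(2, 10, 0.75)

# Symmetry behind the two-sided table: P(x | n, p) = P(n - x | n, 1 - p)
p_mirror = binomial_pmf(8, 10, 0.25)

print(round(p_first, 4), round(p_second, 4), round(p_mirror, 4))
```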

Chap 4-62

The Poisson Distribution

Characteristics of the Poisson Distribution:

The outcomes of interest are rare relative to the

possible outcomes

The average number of outcomes of interest per time

or space interval is λ

The number of outcomes of interest is random, and

the occurrence of one outcome does not influence the

chances of another outcome of interest

The probability that an outcome of interest occurs

in a given segment is the same for all segments

Chap 4-63

Poisson Distribution Formula

$$P(x) = \frac{(\lambda t)^x e^{-\lambda t}}{x!}$$

where:

t = size of the segment of interest

x = number of successes in segment of interest

λ = expected number of successes in a segment of unit size

e = base of the natural logarithm system (2.71828...)

Chap 4-64

Poisson Distribution Shape

The shape of the Poisson Distribution

depends on the parameters λ and t:

[Two bar charts of P(x) versus x: one for λt = 0.50 (x = 0 to 7) and one for λt = 3.0 (x = 0 to 10)]

Chap 4-65

Poisson Distribution

Characteristics

Mean

$$\mu = \lambda t$$

Variance and Standard Deviation

$$\sigma^2 = \lambda t$$

$$\sigma = \sqrt{\lambda t}$$

where

λ = number of successes in a segment of unit size

t = the size of the segment of interest

http://www.math.csusb.edu/faculty/stanton/m262/poisson_distribution/Poisson_old.html

Chap 4-66

Graph of Poisson Probabilities

Graphically:

λ = .05 and t = 100

| X | P(x) for λt = 0.50 |
|---|---|
| 0 | 0.6065 |
| 1 | 0.3033 |
| 2 | 0.0758 |
| 3 | 0.0126 |
| 4 | 0.0016 |
| 5 | 0.0002 |
| 6 | 0.0000 |
| 7 | 0.0000 |

[Bar chart of P(x) versus x for x = 0 to 7]

P(x = 2) = .0758
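The tabulated values can be reproduced from the Poisson formula, taking λt = 0.50 as in the table. This is a minimal sketch; `poisson_pmf` is an illustrative helper name.

```python
import math

def poisson_pmf(x, lam_t):
    # (lambda*t)^x * e^(-lambda*t) / x!
    return lam_t**x * math.exp(-lam_t) / math.factorial(x)

lam_t = 0.50
table = [round(poisson_pmf(x, lam_t), 4) for x in range(8)]

for x, prob in enumerate(table):
    print(x, f"{prob:.4f}")
```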

Chap 4-67

Some Continuous Distributions

Chap 4-68

The Uniform Distribution

(continued)

The Continuous Uniform Distribution:

$$f(x) = \begin{cases} \dfrac{1}{b - a} & \text{if } a \le x \le b \\ 0 & \text{otherwise} \end{cases}$$

where

f(x) = value of the density function at any x value

a = lower limit of the interval

b = upper limit of the interval

Chap 4-69

Uniform Distribution

Example: Uniform Probability Distribution

Over the range 2 ≤ x ≤ 6:

$f(x) = \frac{1}{6 - 2} = .25$ for 2 ≤ x ≤ 6

[Graph of f(x): a horizontal line at height .25 from x = 2 to x = 6]
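For a uniform density, the probability of an interval is its width times the constant height. The sketch below uses the 2 ≤ x ≤ 6 example; the sub-interval from 3 to 5 and the function names are assumptions chosen for illustration.

```python
def uniform_pdf(x, a, b):
    # Density is 1 / (b - a) inside [a, b] and 0 elsewhere
    return 1 / (b - a) if a <= x <= b else 0.0

def uniform_prob(low, high, a, b):
    # P(low <= x <= high): overlap of [low, high] with [a, b], times the height
    low, high = max(low, a), min(high, b)
    return max(high - low, 0) / (b - a)

a, b = 2, 6
height = uniform_pdf(4, a, b)
p_3_to_5 = uniform_prob(3, 5, a, b)

print(height, p_3_to_5)  # 0.25 0.5
```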

Chap 4-70

Normal (Gaussian) Distribution

Chap 4-71

$$f_X(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}$$

By varying the parameters μ and σ, we

obtain different normal distributions

μ ± 1σ encloses about 68% of x’s; μ ± 2σ

covers about 95% of x’s; μ ± 3σ covers

about 99.7% of x’s

A value three or more standard deviations

away from the mean is highly unlikely, given

that particular mean and standard deviation
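The 68%, 95%, and 99.7% figures can be checked numerically. The sketch below uses the standard relationship between the normal distribution and the error function, P(|Z| < k) = erf(k / √2); the helper name is illustrative.

```python
import math

def within_k_sigma(k):
    # Probability that a normal value lies within k standard deviations of the mean
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(f"mu ± {k} sigma: {within_k_sigma(k):.4f}")
```

The results do not depend on μ or σ, which is why the same percentages apply to every normal distribution.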

Chap 4-72

Probability as

Area Under the Curve

The total area under the curve is 1.0, and the curve is

symmetric, so half is above the mean, half is below

P(−∞ < x < μ) = 0.5

P(μ < x < ∞) = 0.5

P(−∞ < x < ∞) = 1.0

[Graph of f(x) versus x: a bell curve centered at μ with area 0.5 on each side]

Chap 4-73

The Standard Normal Distribution

Also known as the “z” distribution

z = (x − μ)/σ

Mean is by definition 0

Standard Deviation is by definition 1

[Graph of f(z) versus z: a bell curve centered at 0 with standard deviation 1]

Values above the mean have positive z-values,

values below the mean have negative z-values

Chap 4-74

Comparing x and z units

μ = 100, σ = 50

x = 100 corresponds to z = 0

x = 250 corresponds to z = 3.0

Note that the distribution is the same, only the

scale has changed. We can express the problem in

original units (x) or in standardized units (z)

Chap 4-75

Finding Normal Probabilities

(continued)

Suppose x is normal with mean 8.0

and standard deviation 5.0.

Now Find P(x < 8.6)

P(x < 8.6)

= P(z < 0.12)

= P(z < 0) + P(0 < z < 0.12)

= .5000 + .0478 = .5478

[Graph of the standard normal curve with the area to the left of z = 0.12 shaded]
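The table-based answer can be cross-checked by standardizing x and evaluating the normal cumulative distribution with the error function. This is a sketch with an illustrative helper name, not the method used on the slides, which rely on a standard normal table.

```python
import math

def normal_cdf(x, mu, sigma):
    # Standardize, then use Phi(z) = 0.5 * (1 + erf(z / sqrt(2)))
    z = (x - mu) / sigma
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

mu, sigma = 8.0, 5.0
z = (8.6 - mu) / sigma
p = normal_cdf(8.6, mu, sigma)

print(round(z, 2), round(p, 4))  # 0.12 0.5478
```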

Chap 4-76

Using Standard Normal Tables

Chap 4-77

Section Summary

Reviewed discrete distributions

binomial, Poisson, etc.

Reviewed some continuous distributions

normal, uniform, exponential

Found probabilities using formulas and tables

Recognized when to apply different distributions

Applied distributions to decision problems

Chap 4-78