ppt format

advertisement

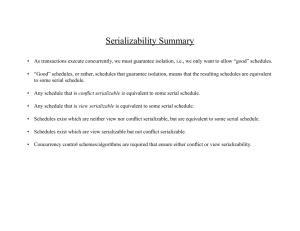

Quick Review of Apr 29 material • Transformation of Relational Expressions – Equivalence Rules • Transactions – ACID properties (Atomic, Consistent, Isolated, Durable) – Transaction States (active, partially committed, failed, committed, aborted) – Concurrent Execution and Serializability Serial and Interleaved Schedules Serial schedule Interleaved schedule Another Interleaved Schedule The previous serial and interleaved schedules ensured database consistency (A+B before execution = A+B after execution) The interleaved schedule on the right is only slightly different, but does not ensure a consistent result. – assume A=1000 and B=2000 before (sum = 3000) – after execution, A=950 and B=2150 (sum = 3100) Inconsistent Transaction Schedules • So what caused the problem? What makes one concurrent schedule consistent, and another one inconsistent? – Operations on data within a transaction are not relevant, as they are run on copies of the data residing in local buffers of the transaction – For scheduling purposes, the only significant operations are read and write • Given two transactions, Ti and Tj, both attempting to access data item Q: – – – – if Ti and Tj are both executing read(Q) statements, order does not matter if Ti is doing write(Q), and Tj read(Q), then order does matter same if Tj is writing and Ti reading if both Ti and Tj are executing write(Q), then order might matter if there are any subsequent operations in Ti or Tj accessing Q Transaction Conflicts • Two operations on the same data item by different transactions are said to be in conflict if at least one of the operations is a write • If two consecutive operations of different transactions in a schedule S are not in conflict, then we can swap the two to produce another schedule S’ that is conflict equivalent with S • A schedule S is serializable if it is conflict equivalent (after some series of swaps) to a serial schedule. Transaction Conflict Example Example: read/write(B) in T0 do not conflict with read/write(A) in T1 T0 read(A) write(A) T1 read(A) read(B) write(A) write(B) read(B) write(B) T0 read(A) write(A) read(B) write(B) T1 read(A) write(A) read(B) write(B) Serializability Testing (15.9) and Precedence Graphs • So we need a simple method to test a schedule S and discover whether it is serializable. • Simple method involves constructing a directed graph called a Precedence Graph from S • Construct a precedence graph as follows: – a vertex labelled Ti for every transaction in S – an edge from Ti to Tj if any of these three conditions holds: • Ti executes write(Q) before Tj executes read(Q) • Ti executes read(Q) before Tj executes write(Q) • Ti executes write(Q) before Tj executes write(Q) – if the graph has a cycle, S is not serializable Precedence Graph Example 1 • Compute a precedence graph for schedule B (right) • three vertices (T1, T2, T3) • edge from Ti to Tj if – Ti writes Q before Tj reads Q – Ti reads Q before Tj writes Q – Ti writes Q before Tj writes Q Precedence Graph Example 1 • Compute a precedence graph for schedule B (right) • three vertices (T1, T2, T3) • edge from Ti to Tj if – Ti writes Q before Tj reads Q – Ti reads Q before Tj writes Q – Ti writes Q before Tj writes Q Precedence Graph Example 2 • Slightly more complicated example • Compute a precedence graph for schedule A (right) • three vertices (T1, T2, T3) • edge from Ti to Tj if – Ti writes Q before Tj reads Q – Ti reads Q before Tj writes Q – Ti writes Q before Tj writes Q Precedence Graph Example 2 • Slightly more complicated example • Compute a precedence graph for schedule A (right) Concurrency Control • So now we can recognize when a schedule is serializable. In practice, it is often difficult and inefficient to determine a schedule in advance, much less examine it for serializability. • Lock-based protocols are a common system used to prevent transaction conflicts on the fly (i.e., without knowing what operations are coming later) • Basic concept is simple: to prevent transaction conflict (two transactions working on the same data item with at least one of them writing), we implement a lock system -- a transaction may only access an item if it holds the lock on that item. Lock-based Protocols • We recognize two modes of locks: – shared: if Ti has a shared-mode (“S”) lock on data item Q then Ti may read, but not write, Q. – exclusive: if Ti has an exclusive-mode (“X”) lock on Q then Ti can both read and write Q. • Transactions be granted a lock before accessing data – A concurrency-control manager handles granting of locks – Multiple S locks are permitted on a single data item, but only one X lock – this allows multiple reads (which don’t create serializability conflicts) but prevents any R/W, W/R, or W/W interactions (which create conflicts) Lock-based Protocols • Transactions must request a lock before accessing a data item; they may release the lock at any time when they no longer need access • If the concurrency-control manager does not grant a requested lock, the transaction must wait until the data item becomes available later on. • Unfortunately, this can lead to a situation called a deadlock – suppose T1 holds a lock on item R and requests a lock on Q, but transaction T2 holds an exclusive lock on Q. So T1 waits. Then T2 gets to where it requests a lock on R (still held by waiting T1). Now both transactions are waiting for each other. Deadlock. Deadlocks • To detect a deadlock situation we use a wait-for graph – one node for each transaction – directed edge Ti --> Tj if Ti is waiting for a resource locked by Tj • a cycle in the wait-for graph implies a deadlock. – The system checks periodically for deadlocks – If a deadlock exists, one of the nodes in the cycle must be aborted • 95% of deadlocks are between two transactions • deadlocks are a necessary evil – preferable to allowing the database to become inconsistent – deadlocks can be rolled back; inconsistent data is much worse Two-phase Locking Protocol • A locking protocol is a set of rules for placing and releasing locks – a protocol restricts the number of possible schedules – lock-based protocols are pessimistic • Two-phase locking is (by far) the most common protocol – growing phase: a transaction may only obtain locks (never release any of its locks) – shrinking phase: a transaction may only release locks (never obtain any new locks) Two-phase with Lock Conversion • Two-phase with lock conversion: – S can be upgraded to X during the growing phase – X can be downgraded to S during the shrinking phase (this only works if the transaction has already written any changed data value with an X lock, of course) – The idea here is that during the growing phase, instead of holding on X on an item that it doesn’t need to write yet, to hold an S lock on it instead (allowing other transactions to read the old value for longer) until the point where modifications to the old value begin. – Similarly in the shrink phase, once a transaction downgrades an X lock, other transactions can begin reading the new value earlier. Variants on Two-phase Locking • Strict two-phase locking – additionally requires that all X locks are held until commit time – prevents any other transactions from seeing uncommitted data – viewing uncommitted data can lead to cascading rollbacks • Rigorous two-phase locking – requires that ALL locks are held until commit time