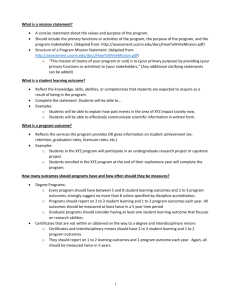

Methods for Assessing Student Learning Outcomes

Beth Wuest

Interim Director, Academic Development and Assessment

Lisa Garza

Director, University Planning and Assessment

February 15, 2006

Workshop Goals

To become:

more aware of the importance of methods of assessment in relation to student learning outcomes and program improvement

more knowledgeable about direct and indirect assessment methods

more competent at developing methods for assessing student learning outcomes

more knowledgeable about using and adapting assessment methods that are currently in practice

more adept at reviewing methods for assessing effectiveness and efficiency

Overview

For evidence of success and program improvement

All programs are requested to have 5-8 learning outcomes with two assessment methods for each outcome by March 31, 2006

An assessment report of these outcomes will be due toward the end of the 2006-2007 academic year

Linkages to Other University Assessment

Academic Program Review

College and University strategic planning

Program and University accreditations

Definitions

Outcomes

Desired results expressed in general terms

Methods

Tools or instruments used to gauge progress toward achieving outcomes

Measures

Intended performance targets expressed in specific terms

Focus

At present we are focusing only on outcomes and methods. Although measures should be considered when developing these, they will not be specifically addressed until the first assessment cycle (2006-2007).

Student Learning Outcomes

Describe specific behaviors that a student of your program should demonstrate after completing the program

Focus on the intended abilities, knowledge, values, and attitudes of the student after completion of the program

Key questions to consider

What is expected from a graduate of the program?

What is expected as the student progresses through the program?

What does the student know? (cognitive)

What can the student do? (psychomotor)

What does the student care about? (affective)

Why are Student Learning Outcomes So Important?

basis for program improvement

instruction, course design, curricular design

communicate instructional intent

increase awareness of learning (for students)

common language

advising materials

promotional materials

support accreditation

Methods of Assessing Learning Outcomes

should provide an objective means of supporting the outcomes, quality, efficiency or productivity of programs, operations, activities or services

should indicate how you will assess each of your outcomes

should indicate when you will assess each outcome

provide at least two ways to assess each outcome
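The counting rules stated in this workshop (5-8 learning outcomes per program, at least two assessment methods per outcome) can be checked mechanically. The following is a minimal sketch, not an official tool: the `Outcome` class, the `check_plan` function, and the sample outcome statements are all hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Outcome:
    """One student learning outcome and the methods planned to assess it."""
    statement: str
    methods: List[str] = field(default_factory=list)


def check_plan(outcomes, min_outcomes=5, max_outcomes=8, min_methods=2):
    """Return a list of problems found; an empty list means the plan passes."""
    problems = []
    # Rule 1: the program should list between 5 and 8 outcomes.
    if not min_outcomes <= len(outcomes) <= max_outcomes:
        problems.append(
            f"expected {min_outcomes}-{max_outcomes} outcomes, found {len(outcomes)}"
        )
    # Rule 2: every outcome needs at least two assessment methods.
    for outcome in outcomes:
        if len(outcome.methods) < min_methods:
            problems.append(
                f"'{outcome.statement}' has {len(outcome.methods)} method(s), "
                f"needs at least {min_methods}"
            )
    return problems


# Hypothetical draft plan with too few outcomes and one under-assessed outcome.
plan = [
    Outcome(
        "Demonstrate competence in conducting research",
        ["capstone research design scored with a rubric", "graduating senior survey"],
    ),
    Outcome("Communicate effectively in writing", ["senior portfolio review"]),
]
problems = check_plan(plan)
for problem in problems:
    print(problem)
```

Returning a list of problems rather than a single pass/fail flag lets a program coordinator see every gap in the draft at once.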

Categories of Assessment Methods

student learning

direct assessments evaluate the competence of students

exam scores, rated portfolios

indirect assessments evaluate the perceived learning

student perception, employer perception

program or unit processes

direct assessments evaluate actual performance

customer satisfaction, error rates, time, cost, efficiency, productivity

indirect assessments evaluate the perceived performance

perceived timeliness, perceived capability

curriculum

methods used to check alignment of curriculum with outcomes

curriculum mapping

Examples of Direct Methods

Samples of individual student work

Pre-test and post-test evaluations

Standardized tests

Performance on licensure exams

Blind scored essay tests

Internal or external juried review of student work

Case study/problems

Capstone papers, projects or presentations

Project or course embedded assessment

Documented observation and analysis of student behavior/performance

Externally reviewed internship or practicum

Collections of work (portfolios) of individual students

Activity logs

Performances

Interviews (including videotaped)

Examples of Indirect Methods

Questionnaires and Surveys

Students

Graduating Seniors

Alumni

Employers

Syllabi and curriculum analysis

Transcript analysis

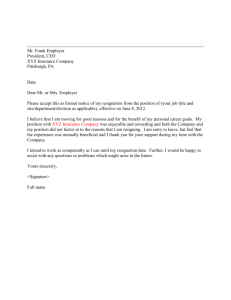

Describing Assessment Methods

What are you going to use?

presentation, assignment, test, survey, observation, performance rating

Of and/or by whom?

student, mentor, focus group, alumni

Context (e.g., where or when)?

point-of-service, capstone, throughout the year, end of program

For what purpose?

desired learning outcome

example: Test the students at the end of the program for their level of knowledge in XYZ
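The four questions above (what, of and/or by whom, context, purpose) work as a fill-in template. As a rough sketch, assuming a hypothetical `MethodDescription` class that is not part of the workshop materials, a description can be assembled and checked for missing parts like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodDescription:
    what: str      # tool or instrument, e.g. "test"
    who: str       # of and/or by whom, e.g. "students"
    context: str   # where or when, e.g. "at the end of the program"
    purpose: str   # the desired learning outcome being assessed

    def missing_parts(self):
        """Return the names of any components that were left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def sentence(self):
        """Combine the four components into a one-sentence method description."""
        return f"Use a {self.what} of {self.who} {self.context} to assess {self.purpose}."


# Mirrors the slide's example about testing students at the end of the program.
method = MethodDescription(
    what="test",
    who="students",
    context="at the end of the program",
    purpose="their level of knowledge in XYZ",
)
print(method.sentence())

# A draft that forgets to say when the assessment happens.
draft = MethodDescription(what="survey", who="alumni", context="", purpose="career preparedness")
print(draft.missing_parts())
```

The sentence wording is only one possible phrasing; the point is that a method description is incomplete until all four components are filled in.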

Creating Assessment Methods

What

Presentation, Assignment, Portfolio, Test or exam, Project, Performance, Survey, Direct measurement, Transcripts

Who

Student, Alumni, Customer, Instructor, Mentor, Focus group, Process, Employer

Where/When

Point-of-service, Capstone, Throughout the year, End of year, End of program, In course, On the job

Outcomes

Learning, Quality, Timeliness, Skills, Satisfaction, Preparation, Efficiency

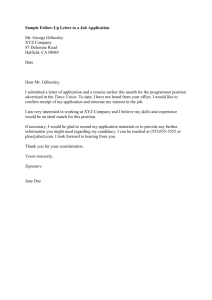

Locally Developed Surveys

institutional level

alumni survey

academic advising survey

image survey

student satisfaction survey

program or department level

advisory board surveys

employer surveys

customer surveys

program-specific surveys

graduating senior survey

Joe Meyer, Director, Institutional Research

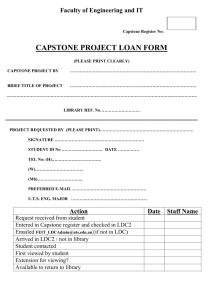

Curriculum or Course-based

performance-based

capstone courses

capstone projects

case studies

classroom assessment

course-embedded assignments

course-embedded exam questions

portfolios

reflective essays

Types of Examinations or Tests

standardized exams

national test

state test

juried competitions

recitals

shows or exhibitions

locally developed exams

pre-post tests

course-embedded exam questions

comprehensive exam

qualifying exam

Assessment Matrix Can be Useful to Link the “Where” with the “Outcomes”

Columns: Learning Outcome, Course 1234, Course 2345, Course 3456, Capstone

Rows: Application of theory, Skills and knowledge, Communication skills

Cell values: Introduced, Emphasized, Used, Assessed
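An assessment matrix of this kind can be kept as a simple mapping from each learning outcome to the courses that address it. The sketch below uses the course and outcome names from the slide, but the placement of the levels in the cells is illustrative only and should not be read as the slide's actual values; the helper functions `where_assessed` and `unassessed_outcomes` are hypothetical names.

```python
COURSES = ["Course 1234", "Course 2345", "Course 3456", "Capstone"]

# Illustrative cell placements only; they are assumptions, not the slide's data.
matrix = {
    "Application of theory": {
        "Course 1234": "Introduced",
        "Course 2345": "Emphasized",
        "Course 3456": "Used",
        "Capstone": "Assessed",
    },
    "Skills and knowledge": {
        "Course 1234": "Introduced",
        "Course 3456": "Used",
        "Capstone": "Assessed",
    },
    "Communication skills": {
        "Course 2345": "Introduced",
        "Course 3456": "Emphasized",
    },
}


def where_assessed(matrix, outcome):
    """List the courses, in curriculum order, where an outcome is assessed."""
    row = matrix.get(outcome, {})
    return [course for course in COURSES if row.get(course) == "Assessed"]


def unassessed_outcomes(matrix):
    """List outcomes that are taught somewhere but assessed nowhere."""
    return [outcome for outcome in matrix if not where_assessed(matrix, outcome)]


print(where_assessed(matrix, "Application of theory"))
print(unassessed_outcomes(matrix))
```

Scanning for outcomes that are never marked "Assessed" is one quick way to spot where an additional assessment method is still needed.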

Hints on Selecting Methods

match assessment method with learning outcome

Students completing BS in xyz will demonstrate competence in abc principles comparable to graduates of other similar national programs

Student will be tested with a locally developed exam administered at the end of the program

Students’ scores on the xyz principles on the xyz national examination administered twice a year will be examined

the assessment results should be usable

Students completing BS in xyz will demonstrate competence in conducting research

Seniors at the end of their capstone course develop a research design to address the intended research question posed in a case study. A rubric will be designed to assess the effectiveness of their ability to construct a research design.

Graduating seniors will complete a senior research project. Completion of the project will be recorded.

Not Useful

Hints on Selecting Methods

results should be easily interpreted and unambiguous

data should not be difficult to collect or access

information should be directly controllable by the unit or program

identify multiple methods for assessing each outcome

direct and indirect methods

qualitative and quantitative

passive or active methods

within different courses

conducted by different groups

identify subcomponents where other methods may be used that allow deeper analysis

Hints on Selecting Methods

use methods that can assess both the strengths and weaknesses of your program

capstone or senior projects are ideal for student learning outcomes assessment

when using surveys, target all stakeholders

build on existing data collection

accreditation criteria

program review

exercise

Selecting the “Best” Assessment Methods

relationship to assessment: provides you with the information you need

reliability: yields consistent responses over time

validity: appropriate for what you want to measure

timeliness and cost: preparation, response, and analysis time; opportunity and tangible costs

motivation: provides value to the student; respondents are motivated to participate

other

results easy to understand and interpret

changes in results can be attributed to changes in the program

After Identifying the Potential List of Assessment Methods You Need to…

select the “best” ones

try to identify at least two methods for assessing each outcome

consider possible performance targets for the future

balance between stretch targets versus achievable targets

Examples of methods

survey (using the Graduating Senior Survey) the students at the end of the program as to their intention to continue their education in a graduate program

(indirect method)

students will rate their likelihood of attending a graduate program on a survey (using the Graduating Senior Survey) that they will complete at the end of the program

xyz graduates’ admission rate to xyz graduate program in the State of Texas will be reviewed

After Identifying the Potential List of Assessment Methods You Need to…

develop assessment instruments

surveys

exams

assignments

scoring rubrics

portfolios

ideally you want them to be reliable, valid, and cheap

approaches

use external sources

seek help from internal sources (e.g., Academic Development and Assessment Office)

do it yourself

the instrument may need to be modified based on assessment results

Challenges and Pitfalls

one size does not fit all — some methods work well for one program but not others

do not try to do the perfect assessment all at once — take a continuous improvement approach

allow for ongoing feedback

match the assessment method to the outcome and not vice-versa

Example

Outcome 1: Graduates will be satisfied that their undergraduate degree has prepared them to succeed in their professional career

xyz graduates will be surveyed in the annual alumni survey on their preparedness to succeed in their career

95% of the xyz graduates surveyed in the annual alumni survey report that the xyz program enabled them to be “very prepared” or “extremely prepared” to succeed in their career (next phase)

on-site internship supervisors each semester will rate interns from the xyz program on their skills necessary to succeed in the workplace

90% of on-site internship supervisors each semester rate interns from the xyz program as having the skills necessary to succeed in their career (next phase)

students in their capstone course will be administered a locally developed, standardized exam regarding career preparedness

95% of students in their capstone course are able to successfully answer 90% of the questions regarding career preparedness on a locally developed, standardized exam (next phase)

scores of graduates who have taken the state licensure exam in xyz within one year of graduating from the xyz program will be evaluated

85% of the graduates are able to pass the state licensure exam in xyz within one year of graduating from the xyz program (next phase)

senior portfolios will be examined annually using a locally devised rubric to show evidence of preparedness for success in related professional careers on three key measures: communication, leadership, and ethics

90% of senior portfolios examined annually using a locally devised rubric will show evidence of preparedness for success in related professional careers on three key measures: communication, leadership, and ethics (next phase)

students will be observed performing basic technical lab skills necessary for successful employment in a senior laboratory course

90% of students will be able to perform basic technical lab skills necessary for successful employment in a senior laboratory course (next phase)
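Each "next phase" item above pairs a method with a percentage target. Checking such a target comes down to computing the share of respondents who meet a criterion and comparing it with the target. The sketch below is one possible way to do that; the function names and the four survey responses are made up for illustration and are not real survey data.

```python
def share_meeting(values, criterion):
    """Return the fraction of values for which criterion(value) is true."""
    values = list(values)
    if not values:
        raise ValueError("no responses to evaluate")
    return sum(1 for value in values if criterion(value)) / len(values)


def target_met(values, criterion, target):
    """Return True when the share meeting the criterion reaches the target."""
    return share_meeting(values, criterion) >= target


# Hypothetical alumni survey responses (not real data).
responses = [
    "extremely prepared",
    "very prepared",
    "somewhat prepared",
    "very prepared",
]
prepared = {"very prepared", "extremely prepared"}


def is_prepared(response):
    return response in prepared


share = share_meeting(responses, is_prepared)
met = target_met(responses, is_prepared, target=0.95)
print(f"{share:.0%} report being very or extremely prepared; 95% target met: {met}")
```

The same two functions cover the other targets in the example, such as supervisor ratings or licensure pass rates, by swapping in a different criterion and target.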

Re-Cap of Process

Step 1: Define program mission

Step 2: Define program goals

Step 3: Define student learning outcomes

Step 4: Inventory existing and needed assessment methods

Step 5: Identify assessment methods for each learning outcome
