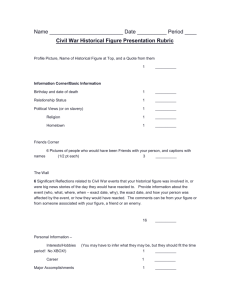

Caption quality: International approaches to standards and
