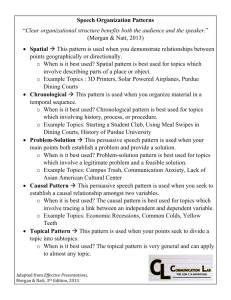

ppt slides

advertisement

An Introduction to Causal Modeling and

Discovery Using Graphical Models

Greg Cooper

University of Pittsburgh

Overview

Introduction

Representation

Inference

Learning

Evaluation

What Is Causality?

Much consideration in philosophy

I will treat it as a primitive

Roughly, if we manipulate something and

something else changes, then the former causally

influences the latter.

Why is Causation Important?

Causal issues arise in most fields including

medicine, business, law, economics, and the

sciences

An intelligent agent is continually considering

what to do next in order to change the world

(including the agent’s own mind). That is a

causal question.

Representing Causation Using

Causal Bayesian Networks

A causal Bayesian network (CBN) represents

some entity (e.g., a patient) that we want to

model causally

Features of the entity are represented by

variables/nodes in the CBN

Direct causation is represented by arcs

An Example

of a Causal Bayesian Network

Structure

History of Smoking (HS)

Chronic Bronchitis (CB)

Lung Cancer (LC)

Fatigue (F)

Weight Loss (WL)

An Example of the Accompanying

Causal Bayesian Network

Parameters

P(HS = no) = 0.80

P(HS = yes) = 0.20

P(CB = absent | HS = no) = 0.95

P(CB = absent | HS = yes) = 0.75

P(CB = present | HS = no) = 0.05

P(CB = present | HS = yes) = 0.25

P(LC = absent | HS = no) = 0.99995

P(LC = absent | HS = yes) = 0.997

P(LC = present | HS = no) = 0.00005

P(LC = present | HS = yes) = 0.003

Causal Markov Condition

A node is independent of its non-effects given just its

direct causes.

This is the key representational property of

causal Bayesian networks.

Special case: A node is independent of its

distant causes given just its direct causes.

General notion: Causality is local

Causal Modeling Framework

An underlying process generates entities that

share the same causal network structure. The

entities may have different parameters

(probabilities).

Each entity independently samples the joint

distribution defined by its CBN to generate

values (data) for each variable in the CBN model

Entity Generator

HS1

HS2

HS3

LC1

LC2

LC3

WL1

WL2

WL3

(no, absent, absent)

(yes, present, present) (yes, absent, absent)

(no, absent, absent)

(yes, absent, absent)

existing

entities

entity

feature

values

samples

Discovering the Average Causal

Bayesian Network

HSavg

LCavg

WLavg

Some Key Types of Causal Relationships

Direct

Causation

HS

Indirect

Causation

Confounding

HS

F

HS

CB

LC

Sampling bias

WL

LC

Sampled = true

WL

Inference Using a Single CBN

When Given Evidence

in the Form of Observations

P(F | CB = present, WL = present, CBN1)

History of Smoking (HS)

Chronic Bronchitis (CB)

Lung Cancer (LC)

Fatigue (F)

Weight Loss (WL)

Inference

The Markov Condition implies the following

equation:

n

P( X 1 , X 2 ,..., X n )

P( X i | DirectCauses( X i ))

i 1

The above equation specifies the full joint

probability distribution over the model variables.

From the joint distribution we can derive any

conditional probability of interest.

Inference Algorithms

In the worst case, the brute force algorithm is

exponential time in the number of variables in the

model

Numerous exact inference algorithms have been

developed that exploit independences among the

variables in the causal Bayesian network.

However, in the worst case, these algorithms are

exponential time.

Inference in causal Bayesian networks is NP-hard

(Cooper, AIJ, 1990).

Inference Using a Single CBN

When Given Evidence

in the Form of Manipulations

P(F | MCB = present, CBN1)

• Let MCB be a new variable that can have the same

values as CB (present, absent) plus the value observe.

• Add an arc from MCB to CB.

• Define the probability distribution of CB given its

parents.

Inference Using a Single CBN

When Given Evidence

in the Form of Manipulations

P(F | MCB = present, CBN1)

MCB

History of Smoking (HS)

Chronic Bronchitis (CB)

Lung Cancer (LC)

Fatigue (F)

Weight Loss (WL)

A Deterministic Manipulation

P(F | MCB = present), CBN1)

MCB

History of Smoking (HS)

Chronic Bronchitis (CB)

Lung Cancer (LC)

Fatigue (F)

Weight Loss (WL)

Inference Using a Single CBN

When Given Evidence

in the Form of Observations and Manipulations

P(F | MCB = present, WL = present, CBN1)

MCB

History of Smoking (HS)

Chronic Bronchitis (CB)

Lung Cancer (LC)

Fatigue (F)

Weight Loss (WL)

Inference Using Multiple CBNs:

Model Averaging

P( X | manipulate(Y' ), observe( Z' ))

P( X | manipulate(Y' ), Z' , CBN i ) P(CBN i | data, K )

i

Some Key Reasons

for Learning CBNs

Scientific discovery among measured variables

Example of general: What are the causal

relationships among HS, LC, CB, F, and WL?

Example of focused: What are the causes of LC

from among HS, CB, F, and WL?

Scientific discovery of hidden processes

Prediction

Example: The effect of not smoking on contracting

lung cancer

Major Methods for Learning CBNs

from Data

Constraint-based methods

Uses tests of independence to find patterns of

relationships among variables that support causal

relationships

Relatively efficient in discovery of causal models

with hidden variables

See talk by Frederick Eberhardt this morning

Score-based methods Bayesian scoring

Allows informative prior probabilities of causal structure

and parameters

Non-Bayesian scoring

Does not allow informative prior probabilities

Learning CBNs from Observational Data:

A Bayesian Formulation

P( X Y | D, K )

P(S i | D, K )

i: Si containsX Y

where D is observational data,

Si is the structure of CBNi,

and K is background knowledge and belief.

Learning CBNs from Observational Data

When There Are No Hidden Variables

P( S i | D, K )

P( S i | K ) P( D | S i , i , K ) P( i | S i , K ) d i

P(S j | K ) P(D | S j , j , K ) P( j | S j , K ) d j

j

where i are the parameters associated with Si and the

sum is over all CBNs for which P(Sj | K) > 0.

The BD Marginal Likelihood

The previous integral has the following closed

form solution, when we assume Dirichlet priors

(ijk and ij), multinomial likelihoods (Nijk and

Nij denote counts), parameter independence, and

parameter modularity:

( ijk N ijk )

( N ) ( )

i 1 j 1

ij

ij k 1

ijk

n

qi

( ij )

ri

Searching for Network Structures

Greedy search often used

Hybrid methods have been explored that constraints

and scoring

Some algorithms guarantee locating the generating

model in the large sample limit (assuming Markov and

Faithfulness conditions), as for example the GES

algorithm (Chickering, JMLR, 2002)

The ability to approximate the generating network is

often quite good

An excellent discussion and evaluation of several stateof-the-art methods, including a relatively new method

(Max-Min Hill Climbing) is at:

Tsamardinos, Brown, Aliferis, Machine Learning, 2006.

The Complexity of Search

Given a complete dataset and no hidden

variables, locating the Bayesian network

structure that has the highest posterior

probability is NP-hard (Chickering, AIS, 1996;

Chickering, et al, JMLR, 2004).

We Can Learn More from Observational and

Experimental Data Together

than from Either One Alone

H

C

E

We cannot learn the above causal structure

from observational or experimental data

alone. We need both.

Learning CBNs from Observational Data

When There Are Hidden Variables

P( S i | K )

P ( S i | D, K )

P(D | S i , i , K ) P( i | S i , K ) d i

Hi

P(S j | K ) P( D | S j , j , K ) P( j | S j , K ) d j

j

Hj

where Hi (Hj) are the hidden variables in Si (Sj) and the

sum in the numerator (denominator) is taken over all

values of Hi (Hj).

Learning CBNs from Observational and

Experimental Data:

A Bayesian Formulation

• For each model variable Xi that is experimentally manipulated in at least one

case, introduce a potential parent MXi of Xi .

• Xi can have parents as well from among the other {X1, ..., Xi-1, Xi+1, ..., Xn}

domain variables in the model.

• Priors on the distribution of Xi will include conditioning on MXi,when it is a

parent of Xi, as well as conditioning on the other parents of Xi.

• Define MXi to have the same values vi1, vi2, ... , viq as Xi, plus a value o (for

observe).

o When MXi has value vij in a given case, this represents that the

experimenter intended to manipulate Xi to have value vij in the case.

o When MXi has value observe in a given case, this represents that no

attempt was made by the experimenter to manipulate Xi, but

rather, Xi was merely observed to have the value recorded for it.

• With the above variable additions in place, use the previous Bayesian

methods for causal modeling from observational data.

An Example Database Containing

Observations and Manipulations

HS

MCB

CB LC

F

WL

T

obs

T

F

T

T

F

F

F

T

T

F

F

F

T

T

F

F

F

T

F

T

obs

F

Faithfulness Condition

Faithfulness Condition

Any independence among variables in the data

generating distribution follows from the Markov

Condition applied to the data generating causal

structure.

A simple counter example:

H

C

E

Challenges of Bayesian Learning

of Causal Networks

Major challenges

Large search spaces

Hidden variables

Feedback

Assessing parameter and structure priors

Modeling complicated distributions

The remainder of this talk will summarize several

methods for dealing with hidden variables, which is

arguably the biggest major challenge today

These examples provide only a small sample of previous

research

Learning Belief Networks in the Presence of

Missing Values and Hidden Variables

(N. Friedman, ICML, 1997)

Assumes a fixed set of measured and hidden variables

Uses Expectation Maximization (EM) to “fill in” the

values of the hidden variable

Uses BIC to score causal network structures with the

filled-in data. Greedily finds best structure and then

returns to the EM step using this new structure.

Some subsequent work

Use patterns of induced relationships among the measured

variables to suggest where to introduce hidden variables

(Elidan, et al., NIPS, 2000)

Determining the cardinality of the hidden variables

introduced (Elidan & Friedman, UAI, 2001)

A Non-Parametric Bayesian Methods for

Inferring Hidden Causes

(Wood, et al., UAI, 2006)

Learns hidden causes of measured variables

hidden variables

measured variables

Assumes binary variables and noisy-OR interactions

Uses MCMC to sample the hidden structures

Allows in principle an infinite number of hidden

variables

In practice, the number of optimal hidden variables is

constrained by the measured data

Bayesian Learning of Measurement and

Structural Model

(Silva & Scheines, ICML, 2006)

Learns the following type of models

hidden variables

measured variables

Assumes continuous variables, mixture of

Gaussian distributions, and linear interactions

Mixed Ancestral Graphs*

A MAG(G) is a graphical object that contains only the observed

variables, causal arcs, and a new relationship for representing

hidden confounding.

There exist methods for scoring linear MAGS (Richardson &

Spirtes Ancestral Graph Markov Models, Annals of Statistics,

2002)

SES

SES

SEX

PE

L1

CP

SEX

PE

L2

IQ

Latent Variable DAG

IQ

Corresponding MAG

* This slide was adapted from a slide provided by Peter Spirtes.

CP

A Theoretical Study of Y Structures

for Causal Discovery

(Mani, Spirtes, Cooper, UAI, 2006)

Learn a Bayesian network structure on the measured

variables

Identify patterns in the structure that suggest causal

relationships

A

B

C

D

E

F

The “Y” structure shown in green supports that D is an

unconfounded cause of F.

Causal Discovery

Using Subsets of Variables

Search for an estimate M of the Markov blanket

of a variable X (e.g., Aliferis, et al., AMIA, 2002)

X is independent of other variables in the generating

causal network model, conditioned on the variables

in X’s Markov blanket

Within M search for patterns among the

variables that suggest a causal relationship to X

(e.g., Mani, doctoral dissertation, Un. of

Pittsburgh, 2006)

Causal Identifiability

Generally depends upon

Markov Condition

Faithfulness Condition

Informative structural relationships among the

measured variables

Example of the “Y structure”:

A

B

C

E

Evaluation of Causal Discovery

In evaluating a classifier, the correct answer in any

instance is just the value of some variable of interest,

which typically is explicitly in the data set. This make

evaluation relatively straightforward.

In evaluating the output of a causal discovery

algorithm, the answer is not in the dataset. In general

we need some outside knowledge to confirm that the

causal output is correct. This makes evaluation

relatively difficult. Thus, causal discovery algorithms

have not been thoroughly evaluated.

Methods for Evaluating Causal

Discovery Algorithms

Simulated data

Real data with expert judgments of causation

Real data with previously validated causal

relationships

Real data with follow up experiments

An Example of an Evaluation Using

Simulated Data

(Mani, poster here)

Generated 20,000 observational data samples from each

of five CBNs that were manually constructed

Applied the BLCD algorithm, which considers many 4variable subsets of all the variables and applies Bayesian

scoring. It is based on the causal properties of “Y”

structures.

Results

Precision: 83%

Recall: 27%

An Example of an Evaluation Using

Previously Validated Causal Relationships

(Yoo, et al., PSB, 2002)

ILVS is a Bayesian method that considers pairwise relationships

among a set of variables

It works best when given both observational and experimental

data

ILVS was applied to a previously collected DNA microarray

dataset on 9 genes that control galactose metabolism in yeast

(Ideker, et al., Science, 2001) The causal relationships among the

genes have been extensively studied and reported in the

literature.

ILVS predicted 12 of 27 known causal relationships among the

genes (44% recall) and of those 12 eight were correct (67%

precision)

Yoo has explored numerous extensions to ILVS

An Example of an Evaluation Using Real

Data with Follow Up Experiments

(Sachs, et al., Science, 2005)

Experimentally manipulated human immune system

cells

Used flow cytometry to measure the effects on 11

proteins and phospholipids on a large number of

individual cells

Used a Bayesian method for causally learning from

observational and experimental data

Derived 17 causal relationships with high probability

15 highly supported by the literature (precision = 15/17 =

88%)

The other two were confirmed experimentally by the

authors (precision = 17/17 = 100%)

Three causal relationships were missed (“recall” = 17 /20 =

85%)

A Possible Approach to Combining

Causal Discovery and Feature Selection

1.

2.

3.

4.

5.

6.

Use prior knowledge and statistical associations to develop

overlapping groups of features (variables)

Derive causal probabilistic relationships within groups

Have the causal groups constrain each other

Determine additional groups of features that might constrain

causal relationships further

Either go to step 2 or step 6

Model average within and across groups to derive approximate

model-averaged causal relationships

David Danks 2002. Learning the Causal Structure of Overlapping Variable

Sets. In S. Lange, K. Satoh, & C.H. Smith, eds. Discovery Science: Proceedings of

the 5th International Conference. Berlin: Springer-Verlag. pp. 178-191.

Some Suggestions

for Further Information

Books

Conferences

Glymour, Cooper (eds), Computation, Causation, and Discovery

(MIT Press, 1999)

Pearl, Causality: Models, Reasoning, and Inference (Cambridge

University Press, 2000)

Spirtes, Glymour, Scheines, Causation, Prediction, and Search

(MIT Press, 2001)

Neapolitan, Learning Bayesian Networks (Prentice Hall, 2003)

UAI, ICML, NIPS, AAAI, IJCAI

Journals

JMLR, Machine Learning

Acknowledgement

Thanks to Peter Spirtes for his comments on an

outline of this talk