search space - UEF-Wiki


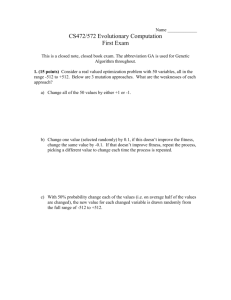

Seminar on Computational Intelligence

Timetable: 12.1., 13.1., 19.1., 20.1., 26.1., 27.1., 2.2., 3.2., …
• UBI-Health etc. (Iiro Jantunen)
• Introduction (Pekka Toivanen)
• Positioning (Mikko Asikainen)
• Self-Organization (Pekka Toivanen)
• Genetic algorithms (Pekka Toivanen)
• No seminar (delayed to the future)
• Seminar presentations
• Seminar presentations

Seminar on Computational Intelligence: Presentation topics

Health
1. Automatic context (behavior) analysis of elderly people
2. Technology for self-made measurements of health parameters
3. Ubiquitous health, e-health and e-Medical centre
4. Ubiquitous health architecture
5. Brain imaging and analysis in multiple sclerosis
6. Brain imaging and analysis in dementia

Swarm Intelligence
1. Swarm Intelligence in computer games
2. Basic ideas of swarm intelligence and applications

Positioning
1. Positioning of animals indoors
2. Positioning of animals outdoors
3. Positioning of humans indoors

Genetic Algorithms: A Tutorial

“Genetic Algorithms are good at taking large, potentially huge search spaces and navigating them, looking for optimal combinations of things, solutions you might not otherwise find in a lifetime.” - Salvatore Mangano, Computer Design, May 1995

Classes of Search Techniques
Search techniques
• Calculus-based techniques
  – Direct methods: Fibonacci
  – Indirect methods: Newton
• Guided random search techniques
  – Evolutionary algorithms
    » Evolutionary strategies
    » Genetic algorithms
      · Parallel: centralized, distributed
      · Sequential: steady-state, generational
  – Simulated annealing
• Enumerative techniques
  – Dynamic programming

Genetic Algorithms - History
• Pioneered by John Holland in the 1970s
• Got popular in the late 1980s
• Based on ideas from Darwinian evolution
• Can be used to solve a variety of problems that are not easy to solve using other techniques

Evolution in the real world
• Each cell of a living thing contains chromosomes: strings of DNA
• Each chromosome contains a set of genes: blocks of DNA
• Each gene determines some aspect of the organism (like eye colour)
• A collection of genes is sometimes called a genotype
• A collection of aspects (like eye colour) is sometimes called a phenotype
• Reproduction involves recombination of genes from parents and then small amounts of mutation (errors) in copying
• The fitness of an organism is how much it can reproduce before it dies
• Evolution is based on “survival of the fittest”

Start with a Dream…
• Suppose you have a problem
• You don’t know how to solve it
• What can you do?
• Can you use a computer to somehow find a solution for you?
• This would be nice! Can it be done?

A dumb solution
A “blind generate and test” algorithm:
Repeat
    Generate a random possible solution
    Test the solution and see how good it is
Until solution is good enough

Can we use this dumb idea?
• Sometimes, yes:
  – if there are only a few possible solutions
  – and you have enough time
  – then such a method could be used
• For most problems, no:
  – there are many possible solutions
  – with no time to try them all
  – so this method cannot be used

A “less-dumb” idea (GA)
Generate a set of random solutions
Repeat
    Test each solution in the set (rank them)
    Remove some bad solutions from the set
    Duplicate some good solutions
    Make small changes to some of them
Until best solution is good enough

How do you encode a solution?
• Obviously this depends on the problem!
• GAs often encode solutions as fixed-length “bitstrings” (e.g. 101110, 111111, 000101)
• Each bit represents some aspect of the proposed solution to the problem
• For GAs to work, we need to be able to “test” any string and get a “score” indicating how “good” that solution is

Silly Example - Drilling for Oil
• Imagine you had to drill for oil somewhere along a single 1 km desert road
• Problem: choose the best place on the road, the one that produces the most oil per day
• We could represent each solution as a position on the road
• Say, a whole number in the range [0..1000]

Where to drill for oil?
[Figure: the road, marked 0, 500 and 1000, with two candidate drilling positions: Solution1 = 300 and Solution2 = 900.]

Digging for Oil
• The set of all possible solutions [0..1000] is called the search space or state space
• In this case it’s just one number, but it could be many numbers or symbols
• GAs often code numbers in binary, producing a bitstring that represents a solution
• In our example we choose 10 bits, which is enough to represent 0..1000 (10 bits cover 0..1023)

Convert to binary string (bit place values 512, 256, 128, 64, 32, 16, 8, 4, 2, 1):
• 900 = 1110000100 (512 + 256 + 128 + 4)
• 300 = 0100101100 (256 + 32 + 8 + 4)
• 1023 = 1111111111 (the largest 10-bit value)
In GAs these encoded strings are sometimes called “genotypes” or “chromosomes”, and the individual bits are sometimes called “genes”.

Drilling for Oil
[Figure: oil output (OIL) plotted against location along the road, 0 to 1000, with Solution1 = 300 (0100101100) and Solution2 = 900 (1110000100) marked.]

Summary
We have seen how to:
• represent possible solutions as a number
• encode a number into a binary string
• generate a score for each number given a function of “how good” each solution is; this is often called a fitness function
• Our silly oil example is really optimisation over a function f(x), where we adapt the parameter x

Search Space
• For a simple function f(x) the search space is one-dimensional
• But by encoding several values into the chromosome, many dimensions can be searched, e.g.
two dimensions, f(x, y)
• The search space can be visualised as a surface or fitness landscape in which fitness dictates height
• Each possible genotype is a point in the space
• A GA tries to move the points to better places (higher fitness) in the space

[Figure: Fitness landscapes]

Search Space
• Obviously, the nature of the search space dictates how a GA will perform
• A completely random space would be bad for a GA
• GAs can also get stuck in local maxima if the search space contains many of them
• Generally, spaces in which small improvements get closer to the global optimum are good

Back to the (GA) Algorithm
Generate a set of random solutions
Repeat
    Test each solution in the set (rank them)
    Remove some bad solutions from the set
    Duplicate some good solutions
    Make small changes to some of them
Until best solution is good enough

Adding Sex - Crossover
• Although it may work for simple search spaces, our algorithm is still very simple
• It relies on random mutation to find a good solution
• It has been found that introducing “sex” into the algorithm gives better results
• This is done by selecting two parents during reproduction and combining their genes to produce offspring

Adding Sex - Crossover
• Two high-scoring “parent” bitstrings (chromosomes) are selected and, with some probability (the crossover rate), combined
• This produces two new offspring (bitstrings)
• Each offspring may then be changed randomly (mutation)

Selecting Parents
• Many schemes are possible, so long as better-scoring chromosomes are more likely to be selected
• Score is often termed the fitness
• “Roulette Wheel” selection can be used:
  – Add up the fitnesses of all chromosomes
  – Generate a random number R in that range
  – Select the first chromosome in the population for which the running total of fitnesses (its own plus all previous ones) is at least R

SGA operators: Selection
• Main idea: better individuals get a higher chance
  – Chances proportional to fitness
  – Implementation: roulette wheel technique
    » Assign to each individual a part of the roulette wheel
    » Spin the wheel n times to select n individuals
[Figure: a roulette wheel for three individuals with fitness(A) = 3, fitness(B) = 1 and fitness(C) = 2; A gets 3/6 = 50% of the wheel, B gets 1/6 = 17% and C gets 2/6 = 33%.]

Example population
No.  Chromosome  Fitness
1    1010011010  1
2    1111100001  2
3    1011001100  3
4    1010000000  1
5    0000010000  3
6    1001011111  5
7    0101010101  1
8    1011100111  2

Roulette Wheel Selection
[Figure: the wheel divided into slots for chromosomes 1 to 8 in proportion to their fitness; the fitnesses total 18.]
The running fitness totals are 1, 3, 6, 7, 10, 15, 16, 18, so:
• Rnd[0..18] = 7 selects Chromosome4 (Parent1)
• Rnd[0..18] = 12 selects Chromosome6 (Parent2)

Crossover - Recombination
Parents: Parent1 1010000000, Parent2 1001011111
Offspring: Offspring1 1011011111, Offspring2 1010000000
Crossover is single-point, at a random point. With some high probability (the crossover rate), apply crossover to the parents (typical values are 0.8 to 0.95).

SGA operators: 1-point crossover
• Choose a random point on the two parents
• Split the parents at this crossover point
• Create children by exchanging tails
• Pc is typically in the range (0.6, 0.9)

n-point crossover
• Choose n random crossover points
• Split along those points
• Glue the parts, alternating between parents
• Generalisation of 1-point crossover (still some positional bias)

Uniform crossover
• Assign 'heads' to one parent, 'tails' to the other
• Flip a coin for each gene of the first child
• Make an inverse copy of the gene for the second child
• Inheritance is independent of position

Mutation
Original offspring → mutated offspring:
• Offspring1: 1011011111 → 1011001111
• Offspring2: 1010000000 → 1000000000
With some small probability (the mutation rate), flip each bit in the offspring (typical values are between 0.1 and 0.001).

The GA Cycle of Reproduction
[Figure: parents are taken from the population for reproduction, producing children; modification turns them into modified children; evaluation produces evaluated children, which join the population; deleted members are discarded.]

Back to the (GA) Algorithm
Generate a population of random chromosomes
Repeat (each generation)
    Calculate fitness of each chromosome
    Repeat
        Use roulette selection to select pairs of parents
        Generate offspring with crossover and mutation
    Until a new population has been produced
Until best solution is good enough

Many Variants of GA
• Different kinds of selection (not roulette)
  – Tournament
  – Elitism, etc.
• Different recombination
  – Multi-point crossover
  – 3-way crossover, etc.
• Different kinds of encoding other than bitstring
  – Integer values
  – Ordered set of symbols
• Different kinds of mutation

Many parameters to set
• Any GA implementation needs to decide on a number of parameters: population size (N), mutation rate (m), crossover rate (c)
• Often these have to be “tuned” based on the results obtained; there is no general theory to deduce good values
• Typical values might be: N = 50, m = 0.05, c = 0.9

Why does crossover work?
• A lot of theory about this and some controversy
• Holland introduced “Schema” theory
• The idea is that crossover preserves “good bits” from different parents, combining them to produce better solutions
• A good encoding scheme would therefore try to preserve “good bits” during crossover and mutation

Genetic Programming
• When the chromosome encodes an entire program or function itself, this is called genetic programming (GP)
• To make this work, the encoding is often done as a tree representation
• Crossover entails swapping subtrees between parents

Genetic Programming
It is possible to evolve whole programs like this, but only small ones. Large programs with complex functions present big problems.

Implicit fitness functions
• Most GAs use an explicit and static fitness function (as in our “oil” example)
• Some GAs (such as in Artificial Life or Evolutionary Robotics) use dynamic and implicit fitness functions, like “how many obstacles did I avoid”
• In these latter examples, other chromosomes (robots) affect the fitness function

Problem
• In the Travelling Salesman Problem (TSP), a salesman has to find the shortest journey that visits a set of cities
• Assume we know the distance between each pair of cities
• This is known to be a hard problem to solve because the number of possible routes is N!
where N is the number of cities
• There is no simple algorithm that gives the best answer quickly

Problem
• Design a chromosome encoding, a mutation operation and a crossover function for the Travelling Salesman Problem (TSP)
• Assume the number of cities N = 10
• After all operations, the produced chromosomes should always represent valid possible journeys (visit each city only once)
• There is no single answer to this; many different schemes have been used previously

A Simple Example
“The Gene is by far the most sophisticated program around.” - Bill Gates, Business Week, June 27, 1994

A Simple Example
The Traveling Salesman Problem: find a tour of a given set of cities so that
– each city is visited only once
– the total distance traveled is minimized

Representation
The representation is an ordered list of city numbers; a GA using it is known as an order-based GA.
1) London  2) Venice  3) Dunedin  4) Singapore  5) Beijing  6) Phoenix  7) Tokyo  8) Victoria
CityList1 (3 5 7 2 1 6 4 8)
CityList2 (2 5 7 6 8 1 3 4)

Crossover
Crossover combines inversion and recombination:
Parent1 (3 5 | 7 2 1 6 | 4 8)
Parent2 (2 5 7 6 8 1 3 4)
Child   (5 8 | 7 2 1 6 | 3 4)
The segment between the two cut points (7 2 1 6) is copied from Parent1, and the remaining positions are filled with the other cities in the order they appear in Parent2 (5, 8, 3, 4). This operator is called the Order1 crossover.
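The slides give no code, so what follows is only a minimal Python sketch of the two order-based operators for the TSP: Order1 crossover and the two-city swap that the slides use as mutation. The function names (`order1_crossover`, `swap_mutation`, `is_valid_tour`) and the 0-based cut points are hypothetical choices made for this illustration. The fill step shown here (left to right, in Parent2's order) is one common way of describing Order1. Another description starts filling after the second cut point and wraps around, and on the slide's example both give the same child. Because both operators only rearrange cities, every result is still a valid journey, which is one possible answer to the TSP exercise above.

```python
import random


def order1_crossover(parent1, parent2, cut1, cut2):
    """Order1 crossover for permutation-encoded tours (hypothetical helper).

    The segment parent1[cut1:cut2] is copied into the child at the same
    positions; the remaining positions are then filled left to right with
    the cities of parent2, in parent2's order, skipping cities already copied.
    """
    size = len(parent1)
    child = [None] * size
    child[cut1:cut2] = parent1[cut1:cut2]
    copied = set(parent1[cut1:cut2])
    remaining = [city for city in parent2 if city not in copied]
    free_positions = [i for i in range(size) if child[i] is None]
    for pos, city in zip(free_positions, remaining):
        child[pos] = city
    return child


def swap_mutation(tour, i, j):
    """Return a copy of the tour with the cities at positions i and j swapped."""
    mutated = list(tour)
    mutated[i], mutated[j] = mutated[j], mutated[i]
    return mutated


def is_valid_tour(tour, cities):
    """A tour is valid if it visits every city exactly once."""
    return sorted(tour) == sorted(cities)


if __name__ == "__main__":
    # The slide example; 0-based cut points 2 and 6 surround the segment 7 2 1 6.
    parent1 = [3, 5, 7, 2, 1, 6, 4, 8]
    parent2 = [2, 5, 7, 6, 8, 1, 3, 4]
    child = order1_crossover(parent1, parent2, 2, 6)
    print(child)                       # [5, 8, 7, 2, 1, 6, 3, 4]
    print(swap_mutation(child, 2, 5))  # [5, 8, 6, 2, 1, 7, 3, 4]

    # Random operators on N = 10 cities, as in the exercise: each result
    # is still a permutation of the cities, i.e. a valid journey.
    rng = random.Random(42)
    cities = list(range(1, 11))
    a = rng.sample(cities, len(cities))  # random tour
    b = rng.sample(cities, len(cities))  # another random tour
    cut1, cut2 = sorted(rng.sample(range(len(cities) + 1), 2))
    offspring = order1_crossover(a, b, cut1, cut2)
    i, j = rng.sample(range(len(cities)), 2)
    offspring = swap_mutation(offspring, i, j)
    print(is_valid_tour(offspring, cities))  # True
```

With cut points 2 and 6, the first two calls reproduce the slide's child and its mutated form. The random part only checks that the operators preserve a valid permutation; it says nothing about tour quality.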
Mutation
Mutation involves reordering of the list; here the two marked cities (7 and 6) swap places:
Before: (5 8 7 2 1 6 3 4)
After:  (5 8 6 2 1 7 3 4)

TSP Example: 30 Cities
[Figure: 30 cities plotted in the x-y plane, x from 0 to 100, y from 0 to 120.]
[Figure: Solution i, TSP30 (Performance = 941): a tour of distance 941.]
[Figure: Solution j, TSP30 (Performance = 800): a tour of distance 800.]
[Figure: Solution k, TSP30 (Performance = 652): a tour of distance 652.]
[Figure: Best Solution, TSP30 Solution (Performance = 420): a tour of distance 420.]

Using genetic algorithms (GAs) to design both composite materials and aerodynamic shapes for race cars and regular means of transportation (including aviation) can return combinations of the best materials and the best engineering to provide faster, lighter, more fuel-efficient and safer vehicles for all the things we use vehicles for. Rather than spending years in laboratories working with polymers, wind tunnels and balsa-wood shapes, much of this work can be done more quickly and efficiently by computer modeling, using GA searches to return a range of options that human designers can then put together however they please.

Getting the most out of a range of materials to optimize the structural and operational design of buildings, factories, machines, etc. is a rapidly expanding application of GAs. GAs are being created for uses such as optimizing the design of heat exchangers, robot gripping arms, satellite booms, building trusses, flywheels, turbines, and just about any other computer-assisted engineering design application.
There is work on combining GAs that optimize particular aspects of an engineering problem so that they work together, and some of these can not only solve design problems but also project them forward, analyzing weaknesses and possible points of failure so that these can be avoided.

Robotics involves human designers and engineers trying out all sorts of things in order to create useful machines that can do work for humans. Each robot's design depends on the job or jobs it is intended to do, so there are many different designs out there. GAs can be programmed to search for a range of optimal designs and components for each specific use, or to return results for entirely new types of robots that can perform multiple tasks and have more general application. GA-designed robotics just might get us those nifty multi-purpose, learning robots we've been expecting any year now since we watched the Jetsons as kids, robots that will cook our meals, do our laundry and even clean the bathroom for us.

Evolvable hardware (EH) is a new field concerned with using evolutionary algorithms (EAs) to create specialized electronics without manual engineering. It brings together reconfigurable hardware, artificial intelligence, fault tolerance and autonomous systems. Evolvable hardware refers to hardware that can change its architecture and behavior dynamically and autonomously by interacting with its environment.

In its most fundamental form, an evolutionary algorithm manipulates a population of individuals, where each individual describes how to construct a candidate circuit. Each circuit is assigned a fitness, which indicates how well the candidate circuit satisfies the design specification. The evolutionary algorithm uses stochastic operators to evolve new circuit configurations from existing ones. Done properly, over time the evolutionary algorithm will evolve a circuit configuration that exhibits the desired behavior.
Each candidate circuit can either be simulated or physically implemented in a reconfigurable device. Typical reconfigurable devices are field-programmable gate arrays (for digital designs) or field-programmable analog arrays (for analog designs). At a lower level of abstraction are field-programmable transistor arrays, which can implement either digital or analog designs.

In many cases, conventional design methods (formulas, etc.) can be used to design a circuit. In other cases, however, the design specification doesn't provide enough information to permit conventional design methods; for example, the specification may only state the desired behavior of the target hardware.

The fitness of an evolved circuit is a measure of how well the circuit matches the design specification. Fitness in evolvable hardware problems is determined by one of two methods:
• extrinsic evolution: all circuits are simulated to see how they perform
• intrinsic evolution: physical tests are run on actual hardware
In extrinsic evolution, only the best solution in the final population of the evolutionary algorithm is physically implemented, whereas with intrinsic evolution every individual in every generation of the EA's population is physically realized and tested.

Evolvable hardware problems fall into two categories: original design and adaptive systems. Original design uses evolutionary algorithms to design a system that meets a predefined specification. Adaptive systems reconfigure an existing design to counteract faults or a changed operational environment.

Do you find yourself frustrated by slow LAN performance, inconsistent internet access, a fax machine that only sends faxes sometimes, or the 'ghost' phone calls your land line gets every month? GAs are being developed that will allow dynamic and anticipatory routing of circuits in telecommunications networks. These could take notice of your system's instability and anticipate your re-routing needs.
With more than one GA circuit search running at a time, your interpersonal communications problems may soon really be all in your head rather than in your telecommunications system. Other GAs are being developed to optimize the placement and routing of cell towers for best coverage and ease of switching, so your cell phone and BlackBerry will be thankful for GAs too.

New applications of GAs to the Traveling Salesman Problem (TSP) can be used to plan the most efficient routes and schedules for travel planners, traffic routers and even shipping companies: the shortest routes for traveling, the timing to avoid traffic tie-ups and rush hours, and the most efficient use of transport for shipping, even including pickup loads and deliveries along the way. The program can model all this in the background while the human agents do other things, improving productivity as well! Chances are increasing steadily that when you get that trip-plan packet from the travel agency, a GA contributed more to it than the agent did.

On the security front, GAs can be used both to create encryption for sensitive data and to break those codes. Encrypting data, protecting copyrights and breaking competitors' codes have been important in the computer world ever since there have been computers, so the competition is intense. Every time someone adds more complexity to their encryption algorithms, someone else comes up with a GA that can break the code. It is hoped that one day soon we will have quantum computers that will be able to generate completely indecipherable codes. Of course, by then the 'other guys' will have quantum computers too, so it's a sure bet the spy vs. spy games will go on indefinitely.

The de novo design of new chemical molecules is a burgeoning field of applied chemistry in both industry and medicine.
GAs are used to aid in understanding protein folding, to analyze the effects of substitutions on protein function, and to predict the binding affinities of designed proteins developed by the pharmaceutical industry for treating particular diseases. The same sort of GA optimization and analysis is used for designing industrial chemicals for particular uses, and in both cases GAs can also be useful for predicting possible adverse consequences. This application has had, and will continue to have, a great impact on the costs of developing new chemicals and drugs.

The development of microarray technology for taking 'snapshots' of the genes being expressed in a cell or group of cells has been a boon to medical research. GAs have been and are being developed to make the analysis of gene expression profiles quicker and easier. This helps to classify which genes play a part in various diseases, and can further help to identify genetic causes for the development of diseases. Being able to do this work quickly and efficiently will allow researchers to focus on individual patients' unique genetic and gene expression profiles, enabling the hoped-for "personalized medicine" we've been hearing about for several years.

In the current unprecedented world economic meltdown, one might legitimately wonder whether some of those Wall Street gamblers used GA-assisted computer modeling of finance and investment strategies to funnel the world's accumulated wealth into what can best be described as dot-dollar black holes. But then again, maybe they were simply all using the same prototype, which hadn't yet been debugged. It is possible that a newer generation of GA-assisted financial forecasting would have avoided the black holes and returned something other than bad debts the taxpayers get to repay.
Who knows?

Those who spend some of their time playing computer Sims games (creating their own civilizations and evolving them) will often find themselves playing against sophisticated artificial-intelligence GAs instead of against other human players online. These GAs have been programmed to incorporate the most successful strategies from previous games (the programs 'learn'), and their design usually incorporates data derived from game theory. Game theory is useful in many GA applications for seeking solutions to whatever problems they are applied to, even when the application really is a game.

When to Use a GA
• Alternate solutions are too slow or overly complicated
• Need an exploratory tool to examine new approaches
• Problem is similar to one that has already been successfully solved by using a GA
• Want to hybridize with an existing solution
• Benefits of the GA technology meet key problem requirements

Some GA Application Types
Domain: application types
• Control: gas pipeline, pole balancing, missile evasion, pursuit
• Design: semiconductor layout, aircraft design, keyboard configuration, communication networks
• Scheduling: manufacturing, facility scheduling, resource allocation
• Robotics: trajectory planning
• Machine Learning: designing neural networks, improving classification algorithms, classifier systems
• Signal Processing: filter design
• Game Playing: poker, checkers, prisoner’s dilemma
• Combinatorial Optimization: set covering, travelling salesman, routing, bin packing, graph colouring and partitioning

Conclusions
Question: ‘If GAs are so smart, why ain’t they rich?’
Answer: ‘Genetic algorithms are rich - rich in application across a large and growing number of disciplines.’
- David E. Goldberg, Genetic Algorithms in Search, Optimization and Machine Learning