Infrastructure Management Novartis

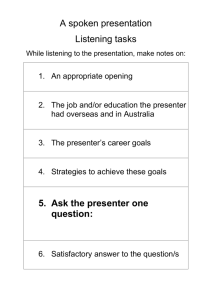

Grids and NIBR
Steve Litster PhD., Manager of Advanced Computing Group
OGF22, Feb. 26th 2008

Agenda
• Introduction to NIBR
• Brief History of Grids at NIBR
• Overview of Cambridge Campus grid
• Storage woes and Virtualization solution

2 | Presentation Title | Presenter Name | Date | Subject | Business Use Only

NIBR Organization
• Novartis Institutes for BioMedical Research (NIBR) is Novartis’ global pharmaceutical research organization.
• Informed by clinical insights from our translational medicine team, we use modern science and technology to discover new medicines for patients worldwide.
• Approximately 5000 people worldwide

NIBR Locations
VIENNA, CAMBRIDGE, HORSHAM, TSUKUBA, BASEL, EMERYVILLE, SHANGHAI, E. HANOVER

Brief History of Grids at NIBR
2001-2004 Infrastructure
• PC Grid for cycle “scavenging”
- 2700 desktop CPUs. Applications primarily Molecular Docking: GOLD and DOCK
• SMP Systems: multiple, departmentally segregated
- No queuing, multiple interactive sessions
• Linux Clusters (3 in US, 2 in EU): departmentally segregated
- Multiple job schedulers: PBS, SGE, “home-grown”
• Storage
- Multiple NAS systems and SMP systems serving NFS based file systems
- Storage growth: 100GB/Month

Brief History of Grids at NIBR cont.
2004-2006
• PC Grid “Winding” Down
• Reasons:
- Application on-boarding very specialized
• SMP Systems: multiple, departmentally segregated systems
• Linux Clusters (420 CPU in US, 200 CPU in EU)
• Reasons:
- Departmental consolidation (still segregation through “queues”)
- Standardized OS and Queuing systems
• Storage
- Consolidation to centralized NAS and SAN solution
- Growth Rate 400GB/Month

Brief History of Grids at NIBR cont.
2006-Present
• Social Grids: Community Forums, Sharepoint, Media Wiki (user driven)
• PC Grid: 1200 CPUs (EU). Changing requirements.
• SMP Systems
- Multiple 8+ CPU systems, shared resource, part of Compute Grid
• Linux Clusters: 4 Globally Available (Approx 800 CPU)
- Incorporation of Linux workstations, Virtual Systems and Web Portals
- Workload changing from Docking/Bioinformatics to Statistical and Image Analysis
• Storage (Top Priority)
- Virtualization
- Growth Rate 2TB/Month (expecting 4-5TB/Month)

Cambridge Site “Campus Grid”

Cambridge Infrastructure

Cambridge Campus Grid cont.
Current Capabilities
• Unified home, data and application directories
• All file systems are accessible via NFS and CIFS (multi-protocol)
• NIS and AD “UIDS/GIDS” synchronized
• Users can submit jobs to the compute grid from Linux workstations or portal based applications, e.g. Inquiry integrated with SGE.
• Extremely scalable NAS solution and virtualized file systems

Storage Infrastructure cont.
• Storage (Top Priority)
- Virtualization
- Growth Rate 2TB/Month (expecting 4-5TB/Month)

[Chart: Cambridge Storage Growth. Series: SAN, NAS. Y axis: TB Used (0 to 100). X axis: Date (Jan-05 to Jan-08). Annotated values: 7 TB, 13 TB, 40 TB.]

How did we get there technically?
Original Design: A single NAS File System
• 2004 3TB (no problems, life was good)
• 2005 7TB (problems begin)
- Backing up file systems >4TB problematic
- Restoring data from a 4TB file system is even more of a problem
• Must have a “like device”; a 2TB restore takes 17hrs
- Time to think about NAS Virtualization.
• 2006 13 TB (major problems begin)
- Technically past the limit supported by storage vendor
• Journalled file systems do need fsck'ing sometimes

NAS Virtualization Requirements:
• Multi-protocol file systems (NFS and CIFS)
• No “stubbing” of files
• No downtime due to storage expansion/migration
• Throughput must be as good as existing solution
• Flexible data management policies
• Must back up within 24hrs

NAS Virtualization Solution

NAS Virtualization Solution cont.
Pros
• Huge reduction in backup resources (90->30 LTO3 tapes/week)
• Less wear and tear on backup infrastructure (and operator)
• Cost savings: Tier 4 = 3x, Tier 3 = x
• Storage Lifecycle: NSX example
• Much less downtime (99.96% uptime)
Cons
• Can be more difficult to restore data
• Single point of failure (Acopia meta data)
• Not built for high throughput (requirements TBD)

Storage Virtualization
Inbox: Data repository for “wet lab” data
• 40TB of Unstructured data
• 15 Million directories
• 150 Million files
• 98% of data not accessed 3 months after creation
Growth due to HCS, X-Ray Crystallographic, MRI, NMR and Mass Spectrometry data
• Expecting 4-5TB/Month

Lessons Learned
Can we avoid having to create a technical solution?
• Discuss data management policy before lab instruments are deployed
• Discuss standardizing image formats
• Start taking advantage of meta data
In the meantime:
• Monitor and manage storage growth and backups closely
• Scale backup infrastructure with storage
• Scale Power and Cooling: new storage systems require >7KW/Rack.

NIBR Grid Future (highly dependent on open standards)
• Cambridge model deployed Globally
• Tighter integration of the GRIDs (storage, compute, social) with:
- workflow tools: Pipeline Pilot, Knime, …..
- home grown and ISV applications
- data repositories: start accessing information, not just data
- Portals/SOA
- Authentication and Authorization

Acknowledgements
Bob Cohen, John Ehrig, OGF
Novartis ACS Team:
• Jason Calvert, Bob Coates, Mike Derby, James Dubreuil, Chris Harwell, Delvin Leacock, Bob Lopes, Mike Steeves, Mike Gannon
Novartis Management:
• Gerold Furler, Ted Wilson, Pascal Afflard and Remy Evard (CIO)

Additional Slides: NIBR Network
Note: E. Hanover is the North American hub, Basel is the European hub.
All Data Center sites on the Global Novartis Internal Network have network connectivity capabilities to all other Data Center sites, dependent on locally configured network routing devices and the presence of firewalls in the path.
• Basel: Redundant OC3 links to BT OneNet cloud
• Cambridge: Redundant dual OC12 circuits to E. Hanover. Cambridge does not have a OneNet connection at this time. NITAS Cambridge also operates a network DMZ with 100Mbps/1Gbps internet circuit.
• E. Hanover: Redundant OC12s to USCA; OC3 to Basel; T3 into BT OneNet MPLS cloud with Virtual Circuits defined to Tsukuba and Vienna
• Emeryville: 20 Mbps OneNet MPLS connection
• Horsham: Redundant E3 circuits into BT OneNet cloud with Virtual Circuits to E. Hanover & Basel
• Shanghai: 10Mbps BT OneNet cloud connection, backup WAN to Pharma Beijing
• Tsukuba: Via Tokyo, redundant T3 links into BT OneNet cloud with Virtual Circuits defined to E. Hanover & Vienna
• Vienna: Redundant dual E3 circuits into the BT OneNet MPLS cloud with Virtual Circuits to E. Hanover, Tsukuba & Horsham
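
To put the link types listed above in context with the storage figures quoted earlier in the deck, the following is a minimal sketch (not part of the original presentation) that estimates the ideal time to move 2 TB, one month of growth at the stated 2TB/Month rate, over a single circuit of each type. The line rates are the standard nominal values for each circuit class. The function name `transfer_hours`, the table `NOMINAL_MBPS`, the use of decimal terabytes, and the assumption of a single fully utilised link with no protocol overhead are all illustrative choices, so real transfers would take longer.

```python
# Nominal line rates in megabits per second for the circuit types named in
# the network slide. These are standard nominal rates, not measured values.
NOMINAL_MBPS = {
    "OC12": 622.08,
    "OC3": 155.52,
    "T3": 44.736,
    "E3": 34.368,
    "20 Mbps MPLS": 20.0,
    "10 Mbps MPLS": 10.0,
}


def transfer_hours(num_bytes: float, link_mbps: float) -> float:
    """Ideal hours to move num_bytes over one link running at full line rate."""
    bits = num_bytes * 8
    seconds = bits / (link_mbps * 1_000_000)
    return seconds / 3600


if __name__ == "__main__":
    # Assumption: 2 TB/month growth, taken as decimal terabytes (2e12 bytes).
    monthly_growth_bytes = 2e12
    for name, mbps in NOMINAL_MBPS.items():
        hours = transfer_hours(monthly_growth_bytes, mbps)
        print(f"{name:>13}: {hours:7.1f} h")
```

Under these assumptions, 2 TB needs roughly 7 hours on one OC12 but more than 18 days on a 10 Mbps connection, which illustrates why a globally deployed storage model depends heavily on which sites are linked at which speeds.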