Berkeley NEST Wireless OEP

Programmable Packets in the

Emerging Extreme Internet

David Culler

UC Berkeley

Intel Research @ Berkeley

The emerging internet of 2012

• won’t be dominated by independent, point-to-point transport between ‘desktops’

• 99.9% of the network nodes will be the billions of devices deeply embedded in the physical world

– they are the majority today, but not connected to each other or the web.... This will change.

– they will generate a phenomenal amount of data

• Broad-coverage services spread over substantial portions of the web serve millions at once

– CDNs and P2Ps the tip of the iceberg

• These ‘extreme’ network environments may present a much greater need for programmability

– may also be more conducive to generality

– very different attack models and response

8/7/2002 Programmable Packets

Outline

• Motivation

• Deeply embedded networks of tiny devices

• Planetary-scale Services

• Discussion


Deeply Embedded Networks

• # nodes >> # people

• sensor/actuator data stream

• unattended

• inaccessible

• prolonged deployment

• energy constrained

• operate in aggregate

• in-network processing necessary

• what they do changes over time

=> must be programmed over the network


Berkeley Wireless Sensor ‘Motes’

Mote Type            WeC         Rene        Rene2       Dot         Mica
Date                 Sep-99      Oct-00      Jun-01      Aug-01      Feb-02
Microcontroller (4MHz)
  Type               AT90LS8535  AT90LS8535  ATMega163   ATMega163   ATMega103/128
  Prog. Mem. (KB)    8           8           16          16          128
  RAM (KB)           0.5         0.5         1           1           4
Communication
  Radio              RFM TR1000  RFM TR1000  RFM TR1000  RFM TR1000  RFM TR1000
  Rate (Kbps)        10          10          10          10          10/40
  Modulation Type    OOK         OOK         OOK         OOK         OOK/ASK

TinyOS Application Graph

[Figure: TinyOS component graph, from software (SW) down to hardware (HW). Components shown: Route map, router, sensor appln; Active Messages; Radio Packet, Serial Packet; Radio byte, UART; Temp, photo; RFM, ADC, clocks]

• Example: self-organized ad hoc, multi-hop routing of photo sensor readings

– 3450 B code

– 226 B data

• Graph of cooperating state machines on shared stack

It is a noisy world after all...

• Get to rethink each of the layers in a new context

– coding, framing

– mac

– routing

– transport

– rate control

– discovery

– multicast

– aggregation

– naming

– security

– ...

• Resource constrained, power aware, highly variable, ...

• Every node is also a router

• No entrenched ‘dusty packets’

[Figure: probability of reception from center node vs. transmit strength]

Example “epidemic” tree formation
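The slide's figure is not recoverable here, so the following is only a minimal sketch of one common way an "epidemic" tree can form, assuming an idealized lossless network: the root floods a beacon, and each node adopts the first sender it hears as its parent before rebroadcasting. The function name `form_tree` and the adjacency-dict representation are illustrative assumptions, not taken from the talk.

```python
from collections import deque


def form_tree(neighbors, root):
    """Flood a beacon from `root`; each node adopts the first sender heard.

    `neighbors` maps a node id to the list of nodes within radio range.
    Returns a dict mapping every reached node to its parent (root -> None).
    Assumes lossless, symmetric links, which real motes do not have.
    """
    parent = {root: None}
    frontier = deque([root])
    while frontier:
        sender = frontier.popleft()
        for node in neighbors[sender]:
            if node not in parent:       # first beacon heard wins
                parent[node] = sender
                frontier.append(node)    # node rebroadcasts in turn
    return parent


# Hypothetical 4-node topology: 0 hears 1 and 2; 3 hears 1 and 2.
topology = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
tree = form_tree(topology, root=0)
```

On a real deployment, lossy and asymmetric links mean the resulting tree depends on which beacons happen to get through, which is the point of the "noisy world" slide.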


Tiny Virtual Machines?

• TinyOS components graph supports a class of applications.

• Application flexibility / extensibility needed

– Re-tasking deployed networks

– Adjusting parameters

• Binary program uploading takes ~2 minutes

– significant energy cost, vulnerable transition

• Tiny virtual machine adds layer of interpretation for specific coordination

– Primitives for sensing and communication

– Small capsules (24 bytes)

– Propagate themselves through network

Maté Overview

• TinyOS component

• 7286 bytes code, 603 bytes RAM

• Stack-based bytecode interpreter

• Three concurrent execution contexts

• Code broken into capsules of 24 instructions

• Single instruction message send

• Self-forwarding code for rapid programming

• Message receive and send contexts


Maté Network VM Architecture

• 3 execution contexts

• dual stack, 1-byte inst.

• Send/Rcv/Clock + sub capsules

• Hold up to 24 instructions

• Fit in a single TinyOS AM packet

– installation is atomic

– no buffering

• Context-specific inst: send, receive, clock

• Shared: subroutines 0-3

• Version information

[Figure: Maté context, showing the PC, operand stack, and return stack, with event capsules, subroutine capsules 0 to 3, and heap access via gets/sets]

Code Snippet: cnt_to_leds

    gets        # Push heap variable on stack
    pushc 1     # Push 1 on stack
    add         # Pop twice, add, push result
    copy        # Copy top of stack
    sets        # Pop, set heap
    pushc 7     # Push 0x0007 onto stack
    and         # Take bottom 3 bits of value
    putled      # Pop, set LEDs to bit pattern
    halt        #
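To make the stack behavior of cnt_to_leds concrete, here is a small Python model of the eight opcodes the snippet uses. It is a sketch under assumptions, not Maté's implementation: the `(opcode, operand)` tuple encoding, the single integer heap variable, and the name `run_capsule` are all invented for illustration, and the real VM's word sizes and typed operands are ignored.

```python
def run_capsule(program, heap=0):
    """Interpret a list of (opcode, operand) pairs; return (heap, leds)."""
    stack = []
    leds = None
    for op, arg in program:
        if op == "gets":            # push heap variable
            stack.append(heap)
        elif op == "pushc":         # push constant
            stack.append(arg)
        elif op == "add":           # pop twice, add, push result
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "copy":          # duplicate top of stack
            stack.append(stack[-1])
        elif op == "sets":          # pop into heap variable
            heap = stack.pop()
        elif op == "and":           # pop twice, bitwise AND, push result
            b, a = stack.pop(), stack.pop()
            stack.append(a & b)
        elif op == "putled":        # pop, record LED bit pattern
            leds = stack.pop()
        elif op == "halt":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return heap, leds


CNT_TO_LEDS = [
    ("gets", None), ("pushc", 1), ("add", None), ("copy", None),
    ("sets", None), ("pushc", 7), ("and", None), ("putled", None),
    ("halt", None),
]

# Run the capsule nine times, as if triggered by nine clock events.
heap = 0
patterns = []
for _ in range(9):
    heap, leds = run_capsule(CNT_TO_LEDS, heap)
    patterns.append(leds)
```

Each run increments the counter in the heap and shows its low three bits, so the LED pattern wraps from 7 back to 0 on the eighth run.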


Sending a Message

    pushc 1     # Light is sensor 1
    sense       # Push light reading on stack
    pushm       # Push message buffer on stack
    clear       # Clear message buffer
    add         # Append reading to buffer
    send        # Send message using built-in
    halt        # ad-hoc routing system


Viral Code

• Every capsule has version information

• Maté installs newer capsules it hears on network

• Motes can forward their capsules (local broadcast)

– forw

– forwo
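The version rule above can be sketched as follows. This is an illustrative model only: the `Capsule` and `Mote` classes, the `kind` field, and the round-based `broadcast_round` helper are assumptions made for the example, and version-number wraparound and packet loss are ignored.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Capsule:
    kind: str       # which slot the capsule fills, e.g. "clock" (assumed)
    version: int
    code: tuple


@dataclass
class Mote:
    installed: dict = field(default_factory=dict)

    def hear(self, capsule):
        """Install `capsule` only if it is newer than what is held."""
        current = self.installed.get(capsule.kind)
        if current is None or capsule.version > current.version:
            self.installed[capsule.kind] = capsule
            return True
        return False


def broadcast_round(motes, neighbors):
    """Every mote forwards its capsules once; return number of installs."""
    snapshot = [list(m.installed.values()) for m in motes]
    changed = 0
    for i, capsules in enumerate(snapshot):
        for j in neighbors[i]:
            for capsule in capsules:
                changed += motes[j].hear(capsule)
    return changed


# Four motes in a line, all running version 1; mote 0 then gets version 2.
old = Capsule("clock", 1, ("halt",))
new = Capsule("clock", 2, ("forw", "halt"))
motes = [Mote() for _ in range(4)]
for m in motes:
    m.hear(old)
motes[0].hear(new)
line = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

rounds = 0
while broadcast_round(motes, line) > 0:
    rounds += 1
```

In this toy line topology the new capsule advances one hop per round, so it takes three rounds to reach the far end.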


Forwarding: cnt_to_leds

    gets        # Push heap variable on stack
    pushc 1     # Push 1 on stack
    add         # Pop twice, add, push result
    copy        # Copy top of stack
    sets        # Pop, set heap
    pushc 7     # Push 0x0007 onto stack
    and         # Take bottom 3 bits of value
    putled      # Pop, set LEDs to bit pattern
    forw        # Forward capsule
    halt        #


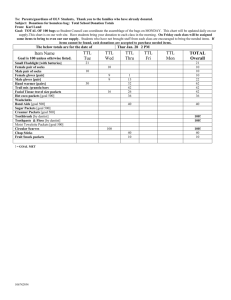

Code Propagation

• 42 motes: 3x14 grid

• 3 hop network

– largest cell 30 motes

– smallest cell 15 motes

[Figure: Network Programming Rate, plotted from 0% to 100% against Time (seconds), 0 to 240]


Why Tiny Programmable Packets?

• All programming must be remote

– rare opportunities to get to GDI, can’t mess with the birds, minimize disturbance

– too many devices to program by hand

• Network programming of entire code image

– essential, but often overkill

– takes about 2 minutes of active radio time

– window of vulnerability

• Packet programs propagate very cheaply

– if a change will run for less than 6 days, less energy to interpret it

• ~10,000 instructions per second

• Task operations are 1/3 of Maté overhead

• 33:1 to 1.03:1 overhead on TinyOS operations
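The "less than 6 days" claim is a break-even argument: a capsule is cheap to install but costs extra energy every second it is interpreted, while a full binary upload is expensive once and cheap to run. A minimal sketch of that arithmetic follows. The function name, parameter names, and the numbers in the usage lines are placeholders chosen for the example; they are not measurements from the talk.

```python
def break_even_seconds(upload_cost, capsule_cost, native_power, interpreted_power):
    """Run time below which interpreting a capsule uses less total energy.

    upload_cost / capsule_cost: one-time energy to install each form.
    native_power / interpreted_power: energy per second while running.
    All four values are hypothetical inputs in consistent units.
    """
    extra_power = interpreted_power - native_power
    if extra_power <= 0:
        return float("inf")      # interpretation never loses
    return max(0.0, (upload_cost - capsule_cost) / extra_power)


# Placeholder numbers only, picked so the arithmetic is easy to follow.
t = break_even_seconds(upload_cost=10.0, capsule_cost=0.5,
                       native_power=0.25, interpreted_power=0.75)
```

With these placeholder inputs the saving at install time is 9.5 units and the running penalty is 0.5 units per second, so the capsule wins for any task shorter than 19 seconds; the talk's figure of about 6 days comes from the measured costs on the motes.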


www.tinyos.org

Thoughts on the “Many Tiny”

• Deeply embedded networks of small devices are coming

– utilize spatial diversity as well as coding and retransmission

– severely constrained resources

– self-organization is essential

– deal with noise and uncertainty - routinely

• “Programming the network?” is not a question.

– it is necessary

– epidemic algorithms common

– distributed algorithms (time synch, leader elec, ...)

– reactive by design

– ‘learning’ framework is natural (ex. MPR routing)

• Nodes interact directly with physical world

– what they do will matter

– potential to observe the effects of actions

• Models of security & privacy TBD!

– very different attack models

• Deja vu opportunity


The Other Extreme

- Planetary Scale Services

• www.planet-lab.org


Motivation

• A new class of services & applications is emerging that spread over a sizable fraction of the web

– CDNs as the first examples

– Peer-to-peer, ...

• Architectural components are beginning to emerge

– Distributed hash tables to provide scalable translation

– Distributed storage, caching, instrumentation, mapping, events ...

• The next internet will be created as an overlay on the current one

– as did the last one

– it will be defined by its services, not its transport

» translation, storage, caching, event notification, management

• There will soon be a vehicle to try out the next n great ideas in this area
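As one illustration of the "distributed hash tables to provide scalable translation" component mentioned above, here is a minimal consistent-hashing ring, the idea underlying several DHT designs. It is a single-process sketch: the `Ring` class, the node names, and the use of SHA-1 truncated to 64 bits are assumptions for the example, and real DHTs add routing, replication, and churn handling.

```python
import bisect
import hashlib


def _hash(key):
    """Map a string to a 64-bit point on the ring."""
    return int.from_bytes(hashlib.sha1(key.encode()).digest()[:8], "big")


class Ring:
    def __init__(self, nodes):
        points = sorted((_hash(n), n) for n in nodes)
        self._hashes = [h for h, _ in points]
        self._nodes = [n for _, n in points]

    def lookup(self, key):
        """Return the first node clockwise from the key's point."""
        i = bisect.bisect_right(self._hashes, _hash(key)) % len(self._nodes)
        return self._nodes[i]


keys = [f"key{i}" for i in range(50)]
ring = Ring(["n1", "n2", "n3"])
before = {k: ring.lookup(k) for k in keys}

# Remove one node: only keys that it owned should move.
smaller = Ring(["n1", "n2"])
after = {k: smaller.lookup(k) for k in keys}
```

The property worth noticing is that removing a node remaps only the keys that node owned, which is what lets the translation layer scale as nodes come and go.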


Confluence of Technologies

• Cluster-based scalable distribution, remote execution, management, monitoring tools

– UCB Millennium, OSCAR, ..., Utah Emulab, ModelNet...

• CDNs and P2Ps

– Gnutella, Kazaa, ..., Pastry, Chord, CAN, Tapestry

• Proxies routine

• Virtual machines & Sandboxing

– VMWare, Janos, Denali,... web-host slices (EnSim)

• Overlay networks becoming ubiquitous

– XBONE, RON, Detour... Akamai, Digital Island, ....

• Service Composition Frameworks

– yahoo, ninja, .net, websphere, Eliza

• Established internet ‘crossroads’ – colos

• Web Services / Utility Computing

• Grid authentication infrastructure

• Packet processing

– Anets, .... layer 7 switches, NATs, firewalls


The Time is NOW

Guidelines (1)

• Thousand viewpoints on “the cloud” is what matters

– not the thousand servers

– not the routers, per se

– not the pipes, per se


Guidelines (2)

• and you must have the vantage points of the crossroads

– primarily co-location centers


Guidelines (3)

• Each service needs an overlay covering many points

– logically isolated

• Many concurrent services and applications

– must be able to slice nodes => VM per service

– service has a slice across large subset

• Must be able to run each service / app over long period to build meaningful workload

– traffic capture/generator must be part of facility

• Consensus on “a node” more important than “which node”

Guidelines (4)

Management, Management, Management

• Test-lab as a whole must be up a lot

– global remote administration and management

» mission control

– redundancy within

• Each service will require its own remote management capability

• Testlab nodes cannot “bring down” their site

– generally not on main forwarding path

– proxy path

– must be able to extend overlay out to user nodes?

• Relationship to firewalls and proxies is key


Guidelines (5)

• Storage has to be a part of it

– edge nodes have significant capacity

• Needs a basic well-managed capability

– but growing to the seti@home model should be considered at some stage

– may be essential for some services


Initial Researchers (Mar 02)

Washington: Tom Anderson, Steven Gribble, David Wetherall

MIT: Frans Kaashoek, Hari Balakrishnan, Robert Morris, David Anderson

Berkeley: Ion Stoica, Joe Hellerstein, Eric Brewer, John Kubi

Intel Research: David Culler, Timothy Roscoe, Sylvia Ratnasamy, Gaetano Borriello, Satya, Milan Milenkovic

Duke: Amin Vahdat, Jeff Chase

Princeton: Larry Peterson, Randy Wang, Vivek Pai

Rice: Peter Druschel

Utah: Jay Lepreau

CMU: Srini Seshan, Hui Zhang

UCSD: Stefan Savage

Columbia: Andrew Campbell

ICIR: Scott Shenker, Mark Handley, Eddie Kohler

see http://www.cs.berkeley.edu/~culler/planetlab

Initial Planet-Lab Candidate Sites

[Map: candidate sites]

UBC, UCSD, UW, Chicago, Intel Seattle, Intel OR, Intel Berkeley, UCB, Utah, ICIR, UCSB, St. Louis, Washu, UCLA, WI, Intel, UPenn, Harvard, CMU, KY, UT, Rice, Cornell, Princeton, Duke, GIT, MIT, Columbia, ISI

Uppsala, Copenhagen, Cambridge, Amsterdam, Karlsruhe, Barcelona, Beijing, Tokyo, Melbourne

Approach: Service-Centric Virtualization

• Virtual Machine Technology has re-emerged for hosting complete desktop environments on non-native OSes and potentially on machine monitors.

– ex. VMWare, ...

• Sandboxing has emerged to emulate multiple virtual machines per server with limited /bin, (no /dev)

– ex. ENSim web hosting

• Network Services require fundamentally simpler virtual machines, can be made far more scalable (VMs per PM), focused on service requirements

– ex. Jail, Denali, scalable and fast, but no full legacy OS

– access to overlays (controlled access to raw sockets)

– allocation & isolation

» proportional scheduling across resource container - CPU, net, disk

– foundation of security model

– fast packet/flow processing puts specific design pressures

• Instrumentation and management are additional virtualized ‘slices’

– distributed workload generation, data collection
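The "proportional scheduling across resource container" bullet can be illustrated with stride scheduling, one well-known proportional-share technique. Nothing in the talk says this is the algorithm PlanetLab would use; the function name, the share values, and the scaling constant below are assumptions for the sketch.

```python
import heapq


def stride_schedule(shares, quanta):
    """Return the order in which slices receive `quanta` time slots.

    `shares` maps a slice name to a positive integer share. A slice with
    share s advances its pass value by a stride of BIG // s each time it
    runs, and the slice with the lowest pass value runs next.
    """
    big = 1_000_000
    # Heap entries are (pass value, name, stride); names break ties.
    heap = [(big // s, name, big // s) for name, s in sorted(shares.items())]
    heapq.heapify(heap)
    order = []
    for _ in range(quanta):
        pass_value, name, stride = heapq.heappop(heap)
        order.append(name)
        heapq.heappush(heap, (pass_value + stride, name, stride))
    return order


# Hypothetical slices: "a" holds three shares of the CPU, "b" holds one.
order = stride_schedule({"a": 3, "b": 1}, quanta=8)
```

Over eight quanta slice "a" runs six times and "b" twice, matching the 3:1 share ratio; the same bookkeeping could in principle be applied per resource (CPU, net, disk).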

Hard problems/challenges

• “Sliceability” – multiple experimental services deployed over many nodes

– Distributed Virtualization

– Isolation & Resource Containment

– Proportional Scheduling

– Scalability

• Security & Integrity - remotely accessed and fully exposed

– Authentication / Key Infrastructure proven, if only systems were bug free

– Build secure scalable platform for distributed services

» Narrow API vs. Tiny Machine Monitor

• Management

– Resource Discovery, Provisioning, Overlay->IP

– Create management services (not people) and environment for innovation in management

» Deal with many as if one

• Building Blocks and Primitives

– Ubiquitous overlays

• Instrumentation

Programmable Packets w/i a Slice

• A service spread over the globe needs to be extensible through methods more lightweight than ‘reload all the code’

– not unlike the ‘new router firmware’ problem

• Smart Packets interpreted in the context of the containing service-slice, rather than generic core-router.

• Routing is overlay routing, so not limited by Cisco design cycle

• ‘Global view’ gives the service many advantages

– not just localization / caching

– adaptive or multipath routing in the overlay

– multi-lateration in the network space

» consider a global spam filter

• Reactive loops within a service are natural

– service-driven load balancing, overlay management, SEDA-style processing


Discussion

[Figure: three regimes: Wide-Area Broad-Coverage Services, Traditional pt-pt Internet, Deeply-Embedded Networks]

Security: restricted API -> Simple Machine Monitor

• Authentication & Crypto works… if underlying SW has no holes

[Figure: very simple system at the base; push complexity up into a place where it can be managed (virtualized services)]

• Classic ‘security sandbox’ limits the API and inspects each request

• Ultimately can only make very tiny machine monitor truly secure

• SILK effort (Princeton) captures most valuable part of ANets nodeOS in Linux kernel modules

– controlled access to raw sockets, forwarding, proportional alloc

• Key question is how limited can be the API

– ultimately should self-virtualize

» deploy the next planetlab within the current one

– progressively constrain it, introducing compatibility box

– minimal box defines capability of thinix

• Host f1 planetSILK within f2 thinix VM