Child Protection Rapid Assessment

A Short Guide

© Global Protection Cluster, Child Protection Working Group, January 2011

All rights reserved….

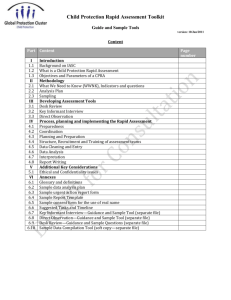

Child Protection Rapid Assessment Toolkit – GUIDE - Pilot/Field-testing Version 0.1

Glossary of Acronyms

CP: Child Protection

CPRA: Child Protection Rapid Assessment

CPWG: Child Protection Working Group

CPRAWG: Child Protection Rapid Assessment Working Group

DO: Direct Observation

DR: Desk Review

GBV: Gender Based Violence

IA: Inter Agency

IASC: Inter Agency Standing Committee

IDP: Internally Displaced Persons

IM: Information Management

KI: Key Informant

KII: Key Informant Interview

NATF: Needs Assessment Task Force

RA: Rapid Assessment

SGBV: Sexual and Gender Based Violence

SV: Sexual Violence

WWNK: What We Need to Know

Assessment guide legend

A step or sub-step in organizing and implementing a Child Protection Rapid Assessment

Where to find Annexes and Sample Tools

Tasks

Don’t forget!


Introduction

[note from the CPWG]

Background on Humanitarian Assessments

Following any larger-scale rapid-onset emergency, Child Protection assessment will usually be conducted in the context of coordinated assessments organized through the humanitarian cluster system. The Inter Agency Standing Committee (IASC) Needs Assessment Task Force (NATF) suggests a framework that identifies three phases in the emergency assessment process. These phases are generally applicable to all emergencies, whether large- or smaller-scale.1 They are:

Phase I – Preliminary Scenario Definition. This phase should happen within 72 hours of the onset of the emergency and does not include sector-specific questions;

Phase II – Multi-Cluster/Sector joint assessment. This phase should take place within the first two weeks of the onset and will look into top-priority sector issues;

Phase III – Cluster/Sector-specific Assessments. This phase addresses more detailed and in-depth sector-specific questions and will take place during the third and fourth weeks following the onset of an emergency.
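As a rough planning aid, the three phases can be expressed as a lookup on the number of days since onset. The sketch below is illustrative only: the function name `natf_phase` and the exact day boundaries (3, 14 and 28 days) are assumptions read from the wording above, not part of the NATF framework itself.

```python
from typing import Optional


def natf_phase(days_since_onset: float) -> Optional[str]:
    """Return the assessment phase that a given day falls into.

    Boundaries are assumptions taken from the text:
    72 hours -> 3 days, first two weeks -> 14 days,
    third and fourth weeks -> up to day 28.
    """
    if days_since_onset < 0:
        raise ValueError("days_since_onset must not be negative")
    if days_since_onset <= 3:
        return "Phase I"
    if days_since_onset <= 14:
        return "Phase II"
    if days_since_onset <= 28:
        return "Phase III"
    # Beyond week four the rapid assessment framework no longer applies.
    return None


if __name__ == "__main__":
    for day in (1, 10, 20, 40):
        print(day, natf_phase(day))
```

In practice the phases overlap and shift with context, so a result from this lookup should be read as a default expectation rather than a rule.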

What is a Child Protection Rapid Assessment?

A Child Protection Rapid Assessment (CPRA) is an inter-agency, cluster-specific rapid assessment,

designed and conducted by CPWG members in the aftermath of a rapid-onset emergency. It is meant to

provide a snapshot of urgent child protection related needs among the affected population within the

immediate post-emergency context, as well as act as a stepping-stone for a more comprehensive

process of assessing the impacts of the emergency. This rapid assessment should not be confused with

nor take the place of more comprehensive assessments or monitoring mechanisms.

1 The IASC NATF was established by the Inter-Agency Standing Committee in 2009 to improve coordinated assessment processes in humanitarian disasters.


Objectives of a CPRA

A Child Protection Rapid Assessment (CPRA) provides a starting point for defining Child Protection needs

and existing supports in the immediate aftermath of a rapid-onset emergency.

Through the CPRA, we strive to determine:

SCALE of needs and protection risks;

PRIORITIES for required response – geographic and programmatic areas of priority, from which

funding priorities can be agreed;

HOW such response should be configured – including what existing capacities the response can

build on;

Depending on the context, CPRA may also be useful for other purposes, such as:

Creating an evidence-base for advocacy with stakeholders (armed groups, government etc);

Providing some knowledge of where the main information gaps are.

When should the CPRA happen?

The inter-agency CPRA typically takes about 3-4 weeks to complete, and thus usually falls within phase

III of the NATF rapid assessment framework. The CPRA may be one component of a coordinated

Protection Rapid Assessment. However, CPRA can very well be a stand-alone process, and take place in

the absence of any other humanitarian assessments. In contexts where preparedness measures have

reduced the post-emergency preparation time, CPRA can also happen at earlier stages after the onset.

The limited duration of the CPRA ensures that priority sector-specific information becomes available

rapidly to inform preliminary response. Following this rapid assessment phase, a more comprehensive

and in-depth child protection assessment should be planned. The existing Inter-Agency Child Protection

Assessment Toolkit is the primary resource developed to assist this more comprehensive child

protection assessment process.

How should the CPRA happen?

This guide will take you through the main steps in the process of planning and implementing a Child Protection Rapid Assessment in the aftermath of a rapid-onset emergency. The Action Plan and Timeline table on page 1 of this guide provides an overview of the entire process. Ethical and confidentiality issues, briefly presented on page 19 of this guide, should be treated as a central part of any assessment effort that deals with human subjects, including the CPRA.

While significant efforts have been dedicated to ensuring that the guide is as comprehensive as possible, it is impossible to create an assessment tool that can fit any and every post-emergency context. Therefore, while using this tool, be mindful of the possible need for adjustments and additions based on your local context.


Planning and Implementing a Child Protection Rapid Assessment (Action Plan and Timeline)

Topic/Phase (Guide page #)

1. Coordination and Planning (pages 2 to 3)

2. Preparing for the Assessment (pages 4 to 7)

3. Reviewing & Adapting the Data Collection & Analysis tools (pages 7 to 10)

4. Structure, Recruitment and Training of Assessment Teams (pages 10 to 12)

5. Data Collection and Management (pages 12 to 13)

6. Data analysis, Interpretation and Report Writing (pages 14 to 18)

Suggested Tasks

Form assessment coordination body

Agree on roles and responsibilities

Agree on lead agency

Develop work-plan, including who will lead each activity

Determine the initial geographic sample (scenarios)

Determine logistical and HR needs

Cost the operation and raise/flag funds

Analyze the risk and develop contingencies

Refine and adapt What We Need to Knows

Conduct Desk Review

Develop sample frame

Define urgent action procedure

Contact key resources

Adapt modules

Recruit assessors and supervisors

Train assessors and supervisors

Deploy teams to the field

Supervise fieldwork

Provide regular technical and logistical support to teams

Collect field reports, clean and enter the data

Analyse and interpret the data

Write reports and disseminate

Suggested Timeline: Week 1 to Week 5 (days 1 to 7 in each week)


WEEK 1 - Coordination and Planning

The success of a joint Child Protection Rapid Assessment is dependent on a collective effort on the part of

CP actors, including the government when appropriate. A single organization is unlikely to have the full

resources and expertise necessary for this process - a collaborative coordinated approach can help to

ensure the availability of pooled resources. The involvement of a broad set of child protection actors will

also enrich and strengthen the quality and legitimacy of the end result - including diverse experiences and

points of view can ensure wider buy-in and ownership over the process and results.

Coordinate and Link up with other Multi-sectoral Assessment Processes

Following any rapid-onset emergency, child protection actors are responsible for ensuring that their

responses to the emergency are well coordinated. Whatever coordination mechanism for Child Protection

is in place should also be used as the initial forum to discuss and coordinate assessment activities. The first

step in ensuring a coordinated assessment process would be to look into other existing assessment

processes. Deciding who should lead the coordination effort on needs assessments depends on existing

coordination mechanisms, actors’ capacity and expertise and the type and nature of the emergency.

Ideally, a lead agency should be selected during the preparedness phase to avoid delays.

In some situations, especially larger-scale rapid-onset disasters, a coordinated multi-cluster assessment will

be undertaken in the first two weeks of the emergency. It is important that CPWG members link and

coordinate with NATF or any other existing needs assessment process to:

ensure that child protection considerations are integrated into multi-cluster/sector assessment;

obtain the data and results from previous assessments to use as secondary data; and

avoid duplication and unnecessary overlap of assessment activities and explore possibility of piggybacking Child Protection into other planned sector-specific rapid assessments.

See Part I – Introduction for a brief overview of the stages of the multi-sectoral assessment process

Information from a multi-cluster joint assessment (Phase II) can usefully inform a child protection rapid assessment (Phase III). If no multi-cluster joint assessment has been completed, however, the child protection group needs to judge whether to proceed with a child protection assessment or await completion of a multi-cluster joint assessment.


Form a Child Protection Rapid Assessment Working Group (CPRAWG):

Within the Child Protection sub-Cluster/Coordination mechanism, a working group should be formed to

manage the rapid assessment process, including technical oversight and administrative support. It is

important to ensure that in addition to child protection expertise, there is some minimal level of IM

technical capacity within this working group to support assessment work2.

The initial task check-list of the Working Group may include:

Develop a time-bound work-plan that clearly assigns responsibility to different members;

Decide on the overall geographic scope of the assessment;

Determine logistical and human resource needs;

Cost the operation and identify funds and in-kind contributions;

Analyse risks and develop contingency plans;

Form a smaller technical group to develop the sampling, adapt the tool, and undertake the analysis

and interpretation of the results;

Agree on how the data collection process will be supervised and supported.

In addition to technical and administrative support provided to assessment teams, other tasks of the CPRA

working group may include:

Outreach to mobilize the participation of key CP actors;

Contingency planning, including monitoring of the humanitarian situation and revising plans as

necessary;

Time management of the assessment process;

Linking and coordinating with other assessment processes;

Ensuring logistic arrangements and support;

Developing a plan to share results with relevant actors.3

Financial and human resources, together with logistical needs, are often a determining factor in the scope of the assessment. Coming up with a rough calculation of resource needs early in the process will ensure more realistic planning and smoother implementation.

2 If there is no IM capacity within the CPWG, seek IM support from OCHA or from other clusters or agencies who may be conducting similar activities. Also explore the possibility of using the same sampling methodology being used for other assessments.

3 Anecdotal evidence suggests one of the main impediments to timely distribution and use of assessment information is disagreement on how to share the results with others. It is preferable to agree on the parameters of results sharing at the outset. It is also suggested that any formal sign-off process be complemented by the release of preliminary results or a ‘briefing note’.

WEEK 2 - Preparing for the Assessment

Review and refine What We Need to Know (WWNK)

The What We Need to Knows (WWNKs) represent the key pieces of priority information on risks and on the situation of children which we require to inform our immediate programming priorities. Deciding on WWNKs is the foundation of any CPRA.

The list of WWNKs below was developed based on a broad consultation with global and field-level CPWG members. The CPRA working group should refine and adapt this list to the local context. The process of adapting the assessment tools should be based on this refined list of WWNKs.

Child Protection WWNKs in the immediate onset/rapid assessment phase:

1. Patterns of separation;

2. Types of care arrangements for separated children;

3. Capacities in community to respond to child separation;

4. Patterns and levels of institutionalization of children;

5. Laws and policies on adoption (in and out of country);

6. Nature and extent of any hazards for children in the environment (e.g. open pit latrines, dangling electrical wires, landmines or other explosives in the vicinity of the residence, small arms etc) and risk to their safety as a result;

7. Types and levels of violence towards children in the community;

8. Causes and level of risk of death and/or injury to children;

9. Existence of active participation of children in acts of violence;

10. Past and current trends in involvement/association of children in armed forces and groups;

11. Specific risks of sexual violence for children;

12. Risks of other forms of GBV for children;

13. Common community practices in response to sexual violence against children;

14. Availability of essential sexual violence response services for children (especially health and psychosocial services);

15. Existing patterns and scale of child labour; likely new risks as a result of the emergency;

16. Sources of stress for children and their caregivers;

17. Children’s and parents’ coping mechanisms;

18. Capacities for provision of people/resources at community level to provide support for children.

Conduct the Desk Review

Undertaking a Desk Review (DR) is a key and necessary component of the CP Rapid Assessment. Ideally, the DR should take place in the preparedness phase and before the final adaptation of the various assessment tools, because the DR will provide valuable information to inform the formulation of questions and answer options in the information collection tools. Remember that during the DR, secondary sources such as the National Health Information System will need to be used. Data acquired through such sources are called secondary data.4

See Annex 3 for the Sample Desk Review & Guide

Decide on a Sampling Methodology and Sample Frame

Do not sacrifice quality for quantity. When resources are scarce, do less, but do it well.

Conducting an assessment in fewer sites but with higher quality is likely to result in more reliable

information than visiting more sites with under-prepared, or otherwise less-skilled, assessors.

Sampling methodology:

Any analysis of a situation that is based on information collected from only a sample of the population will inevitably have inaccuracies. It will provide an estimate of the situation. The level of inaccuracy relates to the kind of sampling used. Given the resource and logistical constraints in a post-emergency setting, as well as the time-bound nature of rapid assessments, purposive sampling is usually used.

Purposive sampling is a sampling methodology where groups of people or communities are purposefully selected based on a set of defined criteria (more on how to develop such criteria below). Through this purposeful selection, we are striving to achieve a relatively complete picture of the situation across the whole affected area, without using a random sampling approach (which is costly and time-consuming).5

Despite inaccuracies, purposive sampling does give us a measure and sense of the scale and priorities that

is approximate enough to enable initial rapid prioritization and planning. It can also provide preliminary

insight into how the emergency has impacted differently on the different categories of affected groups

chosen for the sample.

Another benefit of purposive sampling is that the site selection can be adjusted during the assessment process if needed. For example, if during the data collection it becomes clear that certain important areas that we initially thought inaccessible are now accessible by road, they can be added (and vice versa). It is important to note that the field report should document and justify the selection or exclusion of sites and populations for the assessment.

Unit of measurement

“Unit of measurement” is the level at which something is measured; for instance, an individual, a class, a

school, a country, etc. For the purposes of a CPRA, the unit of measurement is at a community (rather than

individual or household) level.

4 Secondary data is a type of data that is derived from a source other than the primary source. For example, if we use the data collected routinely by government ministries on the situation of children in a given region, we are using secondary data. For more guidance on technical issues regarding data and information management, please see the CPWG Technical Guide to Child Protection Assessment.

5 For more guidance on technical issues regarding sampling methodology, please see the CPWG Technical Guide to Child Protection Assessment. (to be developed)

A site should be a distinct community with a formal, legal, customary or pragmatic boundary allowing an

estimate of its population. The exact definition of what constitutes a ‘site’ needs to be determined at the

local level. Determining the best definition of a site (unit of measurement) depends heavily on the

geographical spread of the emergency, populations affected, results of the previous assessments (if any),

and available resources. The main parameters of selecting a site are as follows:

in a non-camp setting, the smallest administrative unit such as a village or a population grouping

can be taken as a distinct site. In camp settings, each camp can be taken as a site;

if populations with distinct characteristics (such as language, ethnicity, place of origin, status, etc) live together in one site, and you believe that these characteristics are likely to have an impact on how each group is affected by the emergency, such locations should be divided into multiple sites along the lines of those distinct characteristics regardless of their size.6

If the population distribution is not straightforward or the process of trying to identify sites is a challenge,

seek help from an information management (IM) expert. This will ensure that the collected data will be “good

enough” for your purposes.

Sample frame:

A Sample (or sampling) frame is a list of all the sites that have been selected to be part of an assessment.

This sampling frame needs to be developed after defining the criteria for a ‘site’.

To select the sites, the various characteristics of the sites and populations impacted by the emergency need

to be taken into consideration. These characteristics may include things such as: camp versus non-camp

settings; directly versus indirectly affected areas; displaced versus non-displaced affected populations;

origins of the displaced populations; IDPs within a host community versus on their own; mountainous

versus coastal areas, etc.

Two main steps in developing a sampling frame are the following:

Step 1 – We recommend that a list of distinct scenarios be developed. These scenarios should be based on and represent the various known characteristics of the affected population and areas.

For example: Imagine a situation where a cyclone has affected two regions in country X. You know that:

a) Region X1 is highly affected while region X2 has been slightly affected;

b) Information from an initial rapid assessment by NATF reveals that in area X1A (sub-region of X1) the

population has been displaced while in area X1B (sub-region of X1) the population stayed in their

villages;

c) Two distinct ethnic groups live in areas X2A and X2B (sub-regions of X2).

Based on this information, we are faced with four distinct scenarios: X1A, X1B, X2A, and X2B.

Step 2 – The second step is to select a number of sites per scenario. We recommend a standard of at least three sites per scenario. Selection of sites will be based mainly on geographic accessibility and other logistical considerations.

In the example used above, our sample frame will include at least three sites in each of the four sub-regions. This means that we will be assessing at least 12 sites.

6 The idea behind this parameter is to make sure that the KIs interviewed can credibly speak to the experience of the population they are representing.

When facing limited time or resources to cover all the scenarios, consider prioritizing:

Severely affected areas - prioritize sites where secondary sources of information or experience

indicate the humanitarian situation is the most serious;

Accessible areas - where overall needs are urgent, widespread and unmet, it’s justifiable to focus

on accessible areas or affected population;

Where there are the most gaps in existing knowledge - cover geographic locations or groups on

which little information is available.

When time and resources are available, consider the following:

assess more sites in areas suspected to have been affected more;

assess non-affected sites that are comparable in characteristics to the identified scenarios in

affected areas. This will allow for a comparison of affected and non-affected sites (that are similar

in other characteristics) that can provide a sense of how things have changed as a result of the

emergency.
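The two-step construction of a sample frame described in this section (list the scenarios, then pick at least three sites per scenario) can be sketched in a few lines. This is only an illustration under stated assumptions: the function `build_sample_frame`, the site names, and the idea that candidate sites are already listed in order of accessibility are all hypothetical and not part of the toolkit.

```python
MIN_SITES_PER_SCENARIO = 3  # standard recommended in the guide


def build_sample_frame(candidate_sites, min_sites=MIN_SITES_PER_SCENARIO):
    """Pick the first `min_sites` candidate sites for every scenario.

    candidate_sites maps a scenario label to a list of site names,
    assumed to be ordered from most to least accessible.
    Returns the frame and the scenarios that have too few candidates.
    """
    frame = {}
    short_scenarios = []
    for scenario, sites in candidate_sites.items():
        frame[scenario] = list(sites[:min_sites])
        if len(sites) < min_sites:
            short_scenarios.append(scenario)
    return frame, short_scenarios


# Cyclone example from the text: four scenarios in country X.
# Site names are invented placeholders.
candidates = {
    "X1A": ["site_a1", "site_a2", "site_a3", "site_a4"],
    "X1B": ["site_b1", "site_b2", "site_b3"],
    "X2A": ["site_c1", "site_c2", "site_c3"],
    "X2B": ["site_d1", "site_d2", "site_d3", "site_d4"],
}

frame, short = build_sample_frame(candidates)
total_sites = sum(len(sites) for sites in frame.values())
print(total_sites, short)
```

A scenario returned in the second value has fewer candidate sites than the recommended minimum, which is a prompt to revisit the site definition or to document the gap in the field report.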

WEEK 3 - Reviewing and Adapting the Data Collection and Analysis Tools

Issues such as type and scale of emergency; language diversity; ethnicity; tribal and religious affiliations; pre-existing child protection concerns; access and security limitations; WWNKs; local capacity; and many more contextual considerations will inform the development of appropriate tools for a CPRA. The three recommended modules for a CPRA are:

Desk Review

Key Informant Interview

Direct Observation

Focus Group Discussions are generally not encouraged in this phase, because of the levels of staff expertise they require and the time required to analyse the information they produce. Where staff trained in appropriate methodologies are available, Focus Group Discussions may be used as a complementary source of data, but their use should be guided by strict adherence to guidance on this form of approach.7

7 For more guidance on FGDs, refer to the Inter-Agency Child Protection Assessment Toolkit and/or Rapid Appraisal in Humanitarian Emergencies using PRM, available at: http://resources.cpclearningnetwork.org/

Sample Assessment tools are provided in annexes. These sample tools should be used as a base model for the adaptation and development of context-specific tools. Each of the sample tools in the annexes also includes further guidance on tool-specific adaptation.

General Considerations in Adapting and Refining CPRA Tools:

You may want to further limit possible answers to a question by making them time bound. For example, question 1.2 in the KI sample tool asks the country team to define a limited “recall period” when inquiring about an event over the past X days. Narrowing the question down in this manner sometimes helps a respondent provide a more accurate answer, while also making analysis more meaningful;

Whether or not a country edition of the tools has been produced during the preparedness phase

(before the onset), the tools should always be reviewed in advance of fieldwork to ensure

appropriateness as well as thoroughness. We suggest at a minimum doing a short simulation of the

tools with CPWG members familiar with the local context;

Changes to the tools after the deployment of teams are discouraged. However, if the supervisors determine that changes are critical to the success of the assessment, changes should be kept to a minimum and need to be immediately communicated to all concerned teams through a centralized coordination mechanism. When communication is poor (e.g. reliance on transmission devices that operate sporadically), when the capacity of teams to understand and implement the change(s) is compromised, or when there is a likelihood that the changes will be inappropriately or unevenly applied, the introduction of changes may render the information gathered unusable.

Revise the Assessment Questions (as and if necessary)8

For the rapid assessment phase, to facilitate timely data compilation and analysis, close-ended questions

should be used as much as possible. Open-ended questions should only be used when answers to

questions are hard to predict or when the answer options cannot be pre-determined. To make open-ended

questions easier to analyze, however, we need to close them by identifying a set of predicted answer

options. For this purpose, two methods are used in the sample tools:

1. Multiple-choice questions: for which we can identify an exhaustive and mutually exclusive pool of

answers. This requires sufficient knowledge of the local context to ensure that selected choices adequately

cover all possible answers. Such questions should provide an ‘other’ answer option that can capture

important issues or help identify additional answer options for multiple-choice questions. Multiple-choice

questions are easiest to analyze.

2. Coded-category questions: in this method categories of answers will be developed for each question. This

coded-category approach is useful for questions for which it is harder to come up with concrete answer

options. The assessor will then be tasked to decide to which category the answer of the respondent

belongs.

As far as possible, the type of questions recommended in the Sample KII tool should be maintained, while

answer options should be modified as necessary.
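To see why closed answer options speed up analysis, consider how site-level answers to one multiple-choice question might be tallied. The sketch below is an assumption-laden illustration: the function `tally_answers` and the answer options shown are hypothetical and are not taken from the Sample KII tool.

```python
def tally_answers(site_answers, options):
    """Count how many sites chose each predefined answer option.

    Answers that match no predefined option are counted under 'other'
    and returned separately, so that they can suggest new answer
    options when the tool is next adapted.
    """
    counts = {option: 0 for option in options}
    counts["other"] = 0
    unlisted = []
    for answer in site_answers:
        if answer in counts and answer != "other":
            counts[answer] += 1
        else:
            counts["other"] += 1
            unlisted.append(answer)
    return counts, unlisted


# Hypothetical answer options for a question on causes of separation.
options = ["lost during displacement", "caregiver died", "sent away for safety"]
answers = [
    "lost during displacement",
    "caregiver died",
    "lost during displacement",
    "recruited by employer",  # not among the predefined options
]

counts, unlisted = tally_answers(answers, options)
print(counts)
print(unlisted)
```

Coded-category questions can be tallied the same way once the assessor has assigned each response to a category. Free-text answers would first need that coding step.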

8 Please see the CPWG Technical Guide to Child Protection Assessments for additional guidance on assessment questions. (to be developed)

Adapt the Key Informant Interview (KII)

See Annex 1 for the Sample Key Informant Interview Tool

A Key Informant (KI) is anyone who can provide information or opinions on a specific subject (or group of issues) based on her/his experience and knowledge of the community we are trying to assess. KIs should be identified based on their roles in the community and on whether we are confident they can provide a good-enough representation of the views or situation of children in the community/population in question. Key informants do not necessarily have to be people in positions of authority.

In choosing the key informants, consider whether:

There is reason to believe that they have significant knowledge of the situation of the population of

interest;

They will be able to understand the questions;

Their personal experience is representative of the community, and if not whether this will affect

their answers. (e.g. having a higher level of education than other community members may not

meaningfully affect answers regarding experience or impact of the disaster, but being a member of

a dominant group might);

They may have an ‘agenda’ that shapes how they answer questions. While everyone might have a personal agenda, such biases should be taken into consideration in the selection and analysis.

The number of key informants to be interviewed in each site depends on the number of sites in your sample frame; resources and time; and the homogeneity9 of each site. A minimum of three key informant interviews is recommended for each site. In a site that is exceptionally large, additional KIs should be identified. In addition:

at least two of the KIs should be working directly with children in some capacity on a day to day

basis. E.g. teacher, community care taker, etc.;

gender balance should be considered. In other words both genders should be represented in the KIIs conducted in each site;

at least one of the KIs should hold some overall responsibilities for the population. (e.g. a local

chief, camp manager, religious leader, etc.)
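The selection criteria listed above lend themselves to a simple check before a team leaves a site. The sketch below is one possible way to encode them. The `KeyInformant` record, its field names and the function `check_ki_selection` are assumptions made for illustration, not part of the sample tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KeyInformant:
    role: str                         # e.g. "teacher", "camp manager"
    gender: str                       # "female" or "male"
    works_with_children: bool         # day-to-day work with children
    has_overall_responsibility: bool  # e.g. local chief, camp manager


def check_ki_selection(kis: List[KeyInformant]) -> List[str]:
    """Return the recommended criteria that a site's KI selection misses."""
    problems = []
    if len(kis) < 3:
        problems.append("fewer than three key informants")
    if sum(ki.works_with_children for ki in kis) < 2:
        problems.append("fewer than two KIs working directly with children")
    if not {"female", "male"} <= {ki.gender for ki in kis}:
        problems.append("both genders are not represented")
    if not any(ki.has_overall_responsibility for ki in kis):
        problems.append("no KI with overall responsibility for the population")
    return problems


site_kis = [
    KeyInformant("teacher", "female", True, False),
    KeyInformant("community caretaker", "male", True, False),
    KeyInformant("camp manager", "male", False, True),
]
print(check_ki_selection(site_kis))
```

An empty list means the selection meets the recommendations in this section. It says nothing about the softer considerations, such as whether an informant has an agenda, which still need the supervisor's judgment.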

It is recommended that you conduct these KIIs as separate interviews. Individual interviews are easier to

handle and may introduce less bias, as peer pressure and/or fear of disagreement with other members of

the community are less of an issue. If time and resources do not allow for separate interviews, you may opt

for an interview with a group of key informants.

In both of these cases, only one report per site will be produced and forwarded for analysis (see below). In

the case of individual interviews with three KIs, the supervisor is responsible for compiling one site report

using all the information available in the KII questionnaires and cross-verified with the direct observation

checklist.
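The guide leaves the compilation method to the supervisor. Purely as an illustration, the sketch below merges several KII questionnaires into one site report by keeping an answer only when all sources agree and flagging every other question for the supervisor's review. The agreement rule, the function `compile_site_report` and the question identifiers are assumptions, not a procedure prescribed by the toolkit.

```python
def compile_site_report(kii_answers, observed=None):
    """Merge KII questionnaires (and optional direct observation) for one site.

    kii_answers: list of dicts mapping question id -> answer, one per KI.
    observed: optional dict mapping question id -> directly observed answer.
    Returns (report, to_review): agreed answers, and the question ids on
    which sources disagree and which the supervisor must resolve.
    """
    observed = observed or {}
    report = {}
    to_review = []
    question_ids = sorted({q for answers in kii_answers for q in answers})
    for q in question_ids:
        values = {answers[q] for answers in kii_answers if q in answers}
        if q in observed:
            values.add(observed[q])
        if len(values) == 1:
            report[q] = values.pop()
        else:
            report[q] = None  # left open until the supervisor decides
            to_review.append(q)
    return report, to_review


# Hypothetical answers from three KIs and one observation checklist.
kiis = [
    {"1.1": "yes", "1.2": "no"},
    {"1.1": "yes", "1.2": "yes"},
    {"1.1": "yes"},
]
report, to_review = compile_site_report(kiis, observed={"1.1": "yes"})
print(report, to_review)
```

Flagged questions are where triangulation does its work: the supervisor weighs the credibility of each source rather than taking a mechanical majority.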

9 A homogenous site can be interpreted as a site where a majority of the population represents similar identity denominations such as socio-economic background, ethnicity, religion and language.

Adapt the Direct Observation Tool (as and if necessary)

See Annex 2 for the Sample Direct Observation Tool

The power of Direct Observation (DO) as an assessment method is often understated. Large amounts of valuable information may be at our disposal through mere observation. Through “listening” and “seeing,” and without relying on other people’s judgments, we can gain significant insight into the realities of life in a given site. Direct observation is particularly useful if we are interested in knowing about behaviours within a population as well as the physical conditions of a place or object.

The main purpose of this method in a CPRA is to inform the analysis and validate the data collected from KIIs through a process called triangulation10. DO results will be used by the supervisor and the team to inform the compilation of the site report.

Revise the Site Report (as and if necessary)

See Annex 5 for the Sample Site Compilation Report.

Each site will produce a single report that reflects all the data collected in that specific site. This report will be a triangulated compilation of information collected through KIIs, DO and informal observation of team members. Adaptation of the questionnaires will also require an adaptation of this tool.

Revise the Urgent Action form and agree on reporting lines and follow-up responsibilities

See Annex 4 for the Sample Urgent Action Template and Procedures

Urgent Action Forms are designed for occasions when an individual case comes to the attention of the assessor. The child protection rapid assessment working group is responsible for defining the criteria for urgent action and establishing a well-defined procedure for referral services. Cases are not to be actively sought during the assessment (though they may be sought through separate response activities).

WEEKS 2-3 - Structure, Recruitment and Training of Assessment Teams

This Rapid Assessment Toolkit has been developed based on the assumption that assessors will have negligible

child protection background, but speak the local language and have some experience working with communities.

[10] Triangulation of data is the process of comparing data collected through different methods, by different people and from different sources. More on triangulation in section 4.7.

Due to the many constraints present in a post-emergency context, you will likely be faced with difficult

choices with regard to the qualifications of assessors. The only indispensable qualifications for an assessor are:

knowledge of local language, ability to express oneself clearly and experience working or interacting

responsibly with communities.

CPWG members are encouraged to partially mitigate possible shortfalls by putting together a roster of

already screened candidates during the preparedness process.

Determine Structure of the Assessment Teams

Each team should include at least one supervisor who has more advanced child protection skills,

prior assessment experience and ideally some IM technical knowledge, who will be the lead in the

field;

The number of assessors in each field team should be determined by: the number of assessors

available; number of supervisors/team leaders available; and number, location and size of sites to

be assessed. Nonetheless, as a general rule of thumb and to ensure effective management, there

should be a maximum of six assessors per supervisor; and

If it is not possible to have someone with IM technical background in each team, efforts should be

made to ensure that there is an IM technical focal point that team supervisors can call on if need

be.

Supervisor:

Supervisors coordinate the activities of the assessors in the field while providing them with technical and logistical support. Supervisors are responsible for coming up with a data collection plan to monitor the progression of data collection activities. The data collection plan will be a simple list of what data collection activities will take place in each site, when, and by whom. This plan is crucial to ensuring efficient use of time and resources. The supervisor will also be responsible for conducting debriefing sessions with their assessment teams at the end of each working day.

Checklist for Daily Debriefing Session (for use by supervisors)

Review and discuss all filled questionnaires

o Detect potential error patterns in filling the questionnaires

o Address difficulties in answering questions/sensitive topics

o Acknowledge and comment on innovations (if any) and discuss their relevance to other contexts;

Compile site reports (when all the KIIs and the DO forms are filled out for the sites in question);

Discuss logistical concerns/difficulties;

Discuss and refer (if necessary) Urgent Action cases;

Detect potential inconsistencies in information provided to different assessors (triangulation) and, if necessary, void certain questionnaires that present significant bias on the part of the KI;

Write detailed reports of all discussions and agreements and share with the team the following day.

Daily debriefing sessions are one of the most important tasks of a supervisor and are at the core of an

efficient data management process. It is during such sessions that

Train the assessment teams [11]

Assessment teams should be briefed and trained before deployment. This training should cover:

some background information on the emergency and the child protection context (this can be

partly based on the Desk Review);

key child protection definitions and principles;

ethical considerations;

an orientation on the assessment tools;

roles and responsibilities of team members;

reporting and debriefing requirements;

logistics of data collection.

If Rapid Assessment preparation was not undertaken prior to the emergency, including field-testing of the

adapted tools, the training can be an opportunity to do a mock field-test.

Note however, that if the training is going to be used for this dual process, you need to ensure that

you allocate time post-training to revise and consolidate changes before teams are deployed to the field.

WEEK 4 - Data Collection and Management

The CPRA working group should appoint logistics, security, urgent action and technical (CP and IM) focal

points who should remain at the disposal of the field teams throughout the process. At the same time, data

entry focal points should be identified and means of data transmission be communicated to all supervisors.

Before starting the data collection process, consider the operational requirements of data

management: expertise, technological capacity and resource availability. Ask the following

questions early enough to find solutions to possible constraints:

Are computers available for data entry?

Do partners have access to the necessary software (Word and Excel)?

Are power and Internet connections available for sharing data?

Is translation required, and if so, at what stage of the data management process?

[11] Basic guidance on some aspects of the training is provided in the CPWG Technical Guide to Child Protection Assessments (to be developed).

Collect Data

It is recommended that data collection take place in a sweeping manner. In other words, it is preferable to

concentrate resources in one site, until the data collection in that particular site is completed. For example,

if a team consists of a supervisor and three assessors, instead of spreading the team to three sites, they

should all concentrate on collecting the data from the same site and then move on to the next site. This will

also enable the supervisor to transmit the site report of each completed site to the data entry team for real

time processing.

Clean Data

Data cleaning is a process that takes place in different stages.

Stage 1 - The most important part of data cleaning is done in the field through daily debriefing

sessions. During these sessions, the team-leader and assessors will go through filled forms and look for

areas that may require clarification and/or comments.

Unless a supervisor verifies (and signs) data collection forms, they cannot be considered a valid source of information.

Stage 2 - The next part of data cleaning can happen at the data entry point. During data entry, the

data should be checked for errors and missing elements.

For example, a gender variable has two attributes, male and female, and therefore two possible numerical

values, say 0 and 1. Any other value is an error and can be readily detected. A less obvious error would for

example be the answer to a question with 12 multiple-choice options. The values will be 1 to 12. If 111 is

seen in that column, we will know that it is probably a data entry error.

No matter how carefully assessors collect and record the data, and how diligently encoders enter the data

into the database, mistakes happen. Most errors can be detected and removed by simple checks.
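The simple checks described above can be sketched in a few lines of Python. This is only an illustration, not part of the CPRA Data Compilation and Analysis Tool: the column names (`gender`, `main_risk`), the coding scheme and the sample rows are assumptions made up for the example.

```python
# Allowed codes per column. Column names and codes are illustrative assumptions.
ALLOWED_CODES = {
    "gender": {0, 1},                # two attributes, coded 0 and 1
    "main_risk": set(range(1, 13)),  # 12 multiple-choice options, coded 1 to 12
}


def find_entry_errors(rows):
    """Return (row_number, column, value) for every missing or out-of-range value."""
    errors = []
    for row_number, row in enumerate(rows, start=1):
        for column, allowed in ALLOWED_CODES.items():
            value = row.get(column)  # None when the element is missing
            if value not in allowed:
                errors.append((row_number, column, value))
    return errors


# Hypothetical data entry rows used only to exercise the check.
sample_rows = [
    {"gender": 0, "main_risk": 11},
    {"gender": 1, "main_risk": 111},  # probably a typing slip
    {"gender": 2, "main_risk": 4},    # 2 is not a valid gender code
    {"main_risk": 7},                 # gender left blank
]

for error in find_entry_errors(sample_rows):
    print(error)
```

A check like this only flags suspicious entries. Deciding what the correct value is still requires going back to the paper form or to the supervisor.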

Enter and compile the Data

Data can be recorded and managed by hand, using tally sheets and summary tables. However, computer

programmes such as MS Excel will save you time in the long run and will allow for easy manipulation,

analysis and sharing of data. In a time-constrained context, it is preferred to use a computerized

programme.

See the CPRA Data Compilation and Analysis Tool (Excel document) that comes as part of

this assessment kit.

A model data management tool has been developed for use during a CPRA. This Excel-based tool allows for

easy data entry and basic automatic analysis.

Ideally data entry should be done in parallel with data collection. If possible, site reports should be

transmitted via fax, email or other available means to an agreed-upon data entry site. This will allow for

simultaneous data entry and analysis which will not only save time, but will also allow the data entry team

to ask for clarifications/further information, if and when necessary, while the teams are still in the field.

WEEKS 4 & 5 - Data Analysis, Interpretation and Report Writing

Data analysis is the process of making sense of the collected data. In other words, it is bringing together

individual data points (like an answer to a question) to speak as a collective and tell the ‘story’ of the

situation. It is through data analysis that we translate the “raw” data from different sources into

meaningful information that enables us to provide informed statements about WWNK.

Analyze the Data

The most common analysis that can be done with a purposive sample used in this assessment is descriptive

analysis. It so happens that descriptive analysis is also one of the least complicated methods of analysis.

Non-specialists can easily run such analysis with minimal instruction.

Within the category of descriptive analysis, the most useful for assessment data is frequency analysis.

Frequency analysis helps you to determine the frequency of a particular event, issue, or statement within

the overall information that has been collected. For example, if 12 out of 18 sites reported that the

incidence of child labour has increased since the onset of the emergency, we can claim that 67% (two-thirds) of the

assessed sites reported that the incidence of child labour has increased since the onset of the emergency.
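For teams working outside the Excel tool, the same frequency calculation can be sketched in Python. The function name and the list of answers below are assumptions for illustration; the list simply mirrors the child labour example (12 of 18 sites).

```python
from collections import Counter


def frequency_table(answers):
    """Return {answer: (count, rounded percentage)} for a list of per-site answers."""
    counts = Counter(answers)
    total = len(answers)
    return {answer: (count, round(100 * count / total)) for answer, count in counts.items()}


# Hypothetical compiled answers, one per assessed site: 12 "increased" out of 18.
child_labour = ["increased"] * 12 + ["not increased"] * 6

table = frequency_table(child_labour)
for answer, (count, percent) in table.items():
    print(f"{answer}: {count} of {len(child_labour)} sites ({percent}%)")
```

Reporting the count alongside the percentage, as the print statement does, keeps the small number of sites visible to the reader.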

To facilitate frequency analysis, a frequency distribution table can be used. For example, the age

distribution of the majority of separated children based on KIIs can be presented in a frequency distribution

table such as:

Table 1: frequency analysis of the age distribution of separated children [12]

| Age group | Frequency | Percentage |
|---|---|---|
| under 5 | 3 | 16% |
| 5 to 14 | 8 | 42% |
| 15 to 18 | 3 | 16% |
| no observable difference | 2 | 11% |
| don’t know | 3 | 16% |

[12] Only one age category was picked per site.

Cross tabulation is another method in descriptive statistics. Through cross tabulation, one can separate responses to a specific question based on characteristics of the respondent (e.g. male/female) or the site where the data was collected (e.g. urban/rural/camp/…). Such analysis is often very helpful in determining how a particular issue has affected different types of areas or groups differently, and will inform program design, for example in rural versus urban areas affected by the emergency.

Example of cross-tabulation analysis - Table 2: tally sheet - increase in incidence of sexual violence

| Entry# | Urban/Rural | Increase in incidence of Sexual Violence since the emergency |
|---|---|---|
| 1 | Urban | Yes |
| 2 | Rural | No |
| 3 | Rural | Yes |
| 4 | Rural | No |
| 5 | Urban | Yes |
| 6 | Urban | Yes |
| 7 | Rural | No |
| 8 | Rural | No |
| 9 | Urban | No |
| 10 | Urban | No |
| 11 | Rural | No |
| 12 | Urban | Yes |
| 13 | Rural | No |
| 14 | Rural | Yes |
| 15 | Urban | Yes |
| 16 | Rural | No |
| 17 | Rural | Yes |
| 18 | Rural | No |

Table 3: Cross-tabulation table - increase in incidence of sexual violence in urban versus rural sites

| | Urban | Rural | Total |
|---|---|---|---|
| Increase in SV | 5 | 3 | 8 |
| No increase in SV | 2 | 8 | 10 |
| Total | 7 | 11 | 18 |
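As an illustration of how Table 3 follows from the Table 2 tally sheet, the counting can be sketched in Python. The entries below are copied from Table 2; the variable and function names are assumptions made for this sketch and are not part of the toolkit.

```python
from collections import Counter

# Table 2 tally sheet: (site type, increase in sexual violence reported?) per entry.
tally = [
    ("Urban", "Yes"), ("Rural", "No"), ("Rural", "Yes"), ("Rural", "No"),
    ("Urban", "Yes"), ("Urban", "Yes"), ("Rural", "No"), ("Rural", "No"),
    ("Urban", "No"), ("Urban", "No"), ("Rural", "No"), ("Urban", "Yes"),
    ("Rural", "No"), ("Rural", "Yes"), ("Urban", "Yes"), ("Rural", "No"),
    ("Rural", "Yes"), ("Rural", "No"),
]

cells = Counter(tally)  # one count per (site type, answer) combination


def crosstab_row(answer):
    """Return [urban count, rural count, row total] for one answer."""
    urban = cells[("Urban", answer)]
    rural = cells[("Rural", answer)]
    return [urban, rural, urban + rural]


rows = {
    "Increase in SV": crosstab_row("Yes"),
    "No increase in SV": crosstab_row("No"),
}
# Column totals are the element-wise sums of the two rows.
rows["Total"] = [yes + no for yes, no in zip(rows["Increase in SV"], rows["No increase in SV"])]

print(f"{'':<18}{'Urban':>6}{'Rural':>6}{'Total':>6}")
for label, (urban, rural, total) in rows.items():
    print(f"{label:<18}{urban:>6}{rural:>6}{total:>6}")
```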

Graphic presentation of data:

Showing the data through visuals is a very basic but often effective way of making the data useful.

Graphing can take place at different stages of analysis. Examples:

Graph 1: shows a simple count of the number of urban versus rural sites.

Graph 2: shows how many sites reported an increase in the incidence of sexual violence since the onset of the emergency.

[Charts not reproduced. Recoverable labels: title “Type of Assessed Sites”; axes: frequency by site type (Urban, Rural).]

Graph 3: is based on a cross tabulation of the two previous sets of data and as a result contains much more information on how the emergency has impacted differently on SGBV incidents in urban vs rural areas.

It is highly recommended that an information management (IM) specialist accompany the CP team

throughout the analysis process. If there are no IM specialists available within the CPRA working group or

other CPWG member agencies, please seek support from [to be confirmed and added …]

Interpret the Data

Interpretation is the process through which the data that has been collected and analyzed is then linked

back to the programmatic objectives of the assessment (and so informs the WWNK).

A key step in interpreting the data is to make sure (as much as possible) that the data that has been

collected is accurate within the acceptable ‘good enough’ threshold, which will be possible through

triangulation. Triangulation of data is the process of comparing data collected through different methods,

by different people and from different sources. This is our main form of a validity check in a Rapid

Assessment. Finding similar information across the different sources and methods used in the assessment

allows for increased confidence in the results. Triangulation becomes ever more important if we collect our

data from a small sample, which is often the case in a rapid assessment setting.

Triangulation can happen at different levels.

Stage 1 - Triangulation during data collection – the information collected at the site level should be

triangulated by comparing the information provided by the different KIs as well as that collected through

the direct observation tool. This triangulation should be undertaken on a daily basis, and led by the team

supervisors during daily debriefing sessions. During the daily debriefing sessions, the team will compare

results from different key informant interviews and structured and informal observations to find the best

answer to each question presented in the site report. In answers to each question, it is normally the most

frequent answer that should be cited as the best answer. However, if there are contradictions in the answers

from KIs, team members should discuss and choose the best option based on their interactions with the

local population and observations during data collection. Some questions in the site report template also

allow for ranking the answers based on how frequently they were expressed by KIs.
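The "most frequent answer" rule used during daily debriefing can be sketched as follows. This is a minimal illustration under stated assumptions: the function name is hypothetical, each question is assumed to have at least one KI answer, and a tie for first place is treated as a contradiction that the team must resolve by discussion rather than by the script.

```python
from collections import Counter


def best_answer(ki_answers):
    """Return (answer, needs_discussion) for one site-report question."""
    ranked = Counter(ki_answers).most_common()  # answers ordered by frequency
    top_answer, top_count = ranked[0]
    # A tie for first place means the KIs contradict each other with no clear majority.
    tied = len(ranked) > 1 and ranked[1][1] == top_count
    return (None if tied else top_answer), tied


# Hypothetical KI answers for two questions at one site.
print(best_answer(["5 to 14", "5 to 14", "under 5", "5 to 14"]))
print(best_answer(["yes", "no", "no", "yes"]))
```

The ordered list produced by `most_common()` can also serve the ranking questions in the site report, since it sorts answers by how often they were given.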

Any decision to not include information that has been collected should be documented carefully and

diligently to avoid future confusion.

Stage 2 - Triangulation after all the data has been compiled – triangulation should also be done after

all the data has been aggregated. For example: if the KIs in 85% of the sites reported that there is no child

recruitment, but 45% of the direct observation reports recorded recruitment activities taking place, we

know that one of these two is not correct. In this case, a third source of information should be identified

and used for validation.
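The reasoning in this example can be made explicit with a small calculation. The sketch below assumes that both percentages refer to the same set of sites and that direct observation was carried out in every site; the function name is hypothetical.

```python
def aggregate_conflict(ki_share_no_issue, do_share_issue_observed):
    """Return the minimum share of sites (in %) where KI and DO results must disagree."""
    # If KIs in x% of sites report no issue, the issue can exist in at most (100 - x)% of sites.
    max_consistent_share = 100 - ki_share_no_issue
    return max(0, do_share_issue_observed - max_consistent_share)


# Figures from the example: 85% of sites report no recruitment, 45% of DO reports record it.
overlap = aggregate_conflict(85, 45)
if overlap > 0:
    print(f"Conflict in at least {overlap}% of sites: look for a third source")
```

With the figures from the example, at least 30% of sites carry contradictory results, which is why a third source is needed before either figure is reported.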

Examples of basic interpretation:

Example 1: Remember Table 1 from above (‘frequency analysis of the age distribution of separated

children’)

| Age group | Frequency | Percentage |
|---|---|---|
| under 5 | 3 | 16% |
| 5 to 14 | 8 | 42% |
| 15 to 18 | 3 | 16% |
| no observable difference | 2 | 11% |
| don’t know | 3 | 16% |

This table represents the answers of KIs per site to the question: “in your opinion, which age group

represents the majority of separated children?” We have all the elements necessary to interpret the

information that has been collected through our assessment and can claim that 42% of assessed sites

reported that a majority of separated children fall in the age group of 5 to 14.

If we have access to other sources of data regarding separated children, we should triangulate this finding

with the data from those sources. For example if the NATF multi-cluster rapid assessment report claims

that 70% of separated children are under 5, we know that there is a problem in one of these two findings. If

this happens, and we are not able to find any other sources of data that contradict our findings, we can still

consider our figure valid.

Example 2: Remember cross-tabulation table 3:

| | Increase in SV | No increase in SV | Total |
|---|---|---|---|
| Urban | 5 | 2 | 7 |
| Rural | 3 | 8 | 11 |
| Total | 8 | 10 | 18 |

This provides enough information for us to make claims about increase of sexual violence since the onset of

the emergency in urban versus rural areas. We can claim that a higher percentage of visited urban sites are

reporting an increase in the incidence of sexual violence since the emergency than visited rural sites.
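The percentages behind this claim can be worked out from the counts in Table 3. The short Python sketch below is illustrative only; the dictionary layout and function name are assumptions.

```python
# Counts taken from cross-tabulation Table 3.
table3 = {
    "Urban": {"increase": 5, "no increase": 2},
    "Rural": {"increase": 3, "no increase": 8},
}


def share_reporting_increase(counts):
    """Percentage of sites of one type that reported an increase, rounded."""
    return round(100 * counts["increase"] / sum(counts.values()))


shares = {site_type: share_reporting_increase(counts) for site_type, counts in table3.items()}
for site_type, share in shares.items():
    total = sum(table3[site_type].values())
    print(f"{site_type}: {table3[site_type]['increase']} of {total} visited sites ({share}%)")
```

That is 5 of 7 visited urban sites (71%) against 3 of 11 visited rural sites (27%). With so few sites in each group, the counts should be reported together with the percentages.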

Produce and disseminate assessment products

After the analysis phase, it is important to share the results with other actors. Ideally, a mini-workshop

should be organized to discuss the main findings and their significance. This will not only enrich the learning

from the data, but also ensure buy-in and wider use of the results. You may also want to consider

different assessment ‘products’ for different audiences. These can include:

| PRODUCT and sample content | LENGTH | TARGET GROUP |
|---|---|---|
| Briefing: short narrative, some visual presentation of data and bullet point recommendations. | 2-4 pages | All interested humanitarian actors (donors, senior management of agencies, government, HQ, etc) |
| Detailed report: 1. Exec Summary (same as above); 2. Intro and discussion of methodology; 3. Key findings: data tables and visual graphs of findings; narrative analysis (key findings can be organized by CP issues/risks, geographic area, etc, or a combination of these, depending on the context); 4. Identification of priorities – programmatic and funding; 5. Key recommendations – for programming | 20-30 pages max | CPWG members who will use information to inform programming |
| Raw data – available electronically to be shared | n/a | CPWG members, other clusters, NATF, etc… |

It is important to acknowledge that the assessment results are NOT

representative of the total population.

This is inevitable when purposive sampling is used. To make this clear in our reporting,

findings should be qualified to reflect the known limitations of the methods (e.g. “Of

75 sites assessed, 80% reported separated children” rather than “80% of affected areas

reported separated children”).

WEEKS 1-5 - Ethical and confidentiality issues

Assessments are “interventions” in themselves. They can be meaningful and positive experiences or

intrusive and disrupting, and possibly sources of additional stress, for the population. This is especially

the case during the immediate aftermath of an emergency. Our guiding principles during any assessment

should be “do no harm” and the “best interest of the child.” Therefore, an ethical

approach to rapid assessments requires:

1. A commitment to follow-up action, as necessary;

2. Identifying and finding ways to support community coping mechanisms that do not violate the

basic rights of children or harm them;

3. Considering potential negative effects of the assessment exercise, such as stigmatization;

attracting unnecessary attention to a person or a group; or instilling unwarranted fear;

4. Avoiding false expectations by communicating honestly with communities about the

objectives of the assessment before and during the assessment. [13]

It is also our responsibility to ensure the confidentiality of the information we have been entrusted with.

Confidentiality can be defined as the restrictive management of sensitive information (names, incidents,

locations, details, etc.) collected before, during and after child protection assessments. Sensitive

information must be protected and shared only with those persons (service providers, family, etc.) who

need the information for the best interest of the child. Shared information should ideally be stripped of

any details of the source, unless these are required to ensure appropriate action (with written

consent from the source).

Sensitive questions such as the ones flagged ( ) in the KI sample tool (Annex 1) should only be asked

by well-trained interviewers. It is important to speak with locals or those that have a strong familiarity

with the protection situation in the country or context to determine whether and to what degree

flagged issues may be sensitive or politicized; to decide, based on the known capacity of the assessors,

whether these issues should be included; and to adapt the tools to ensure that appropriate terminology

and language is used in assessing these sensitive issues. If assessors do not have a strong background in

CP or adequate and thorough training, these questions should not be asked.

Informed consent is an integral part of any assessment activity that involves direct acquisition of

information from people regardless of their age. The sample tool for the key informant interview (Annex

1) includes an oral informed consent example. If you intend to use a key informant’s name in your

reports, a written consent form is necessary. Based on the context and background, the assessment

team may decide that written consent is necessary for all KIIs irrespective of the use of name. In such

cases, special written consent forms should be included in all KI questionnaires.

[13] Adapted from “Ethical Considerations for the IA Emergency Child Protection Assessment.”