Bridging the Gap between Linguists and Technology Developers

Bridging the Gap between Linguists & Technology Developers: Large-Scale, Sociolinguistic Annotation for Dialect and Speaker Recognition*

Christopher Cieri1, Stephanie Strassel1, Meghan Glenn1, Reva Schwartz2, Wade Shen3, Joseph Campbell3

1. Linguistic Data Consortium, 3600 Market Street, Suite 810, Philadelphia, PA 19104, {ccieri, strassel, mlglenn}@ldc.upenn.edu

2. United States Secret Service, Washington, DC, reva.schwartz@usss.dhs.gov

3. MIT Lincoln Laboratory, 244 Wood Street, Lexington, MA 02421, {swade, jpc}@ll.mit.edu

* This work is sponsored by the Department of Homeland Security under Air Force Contract FA8721-05-C-0002. Opinions, interpretations, conclusions and recommendations are those of the authors and are not necessarily endorsed by the United States Government.

LREC 2008, May 26 – June 1, Marrakesh

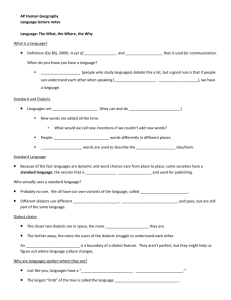

Introduction to Phanotics

Increased interest in the speaker recognition community in high-level features that abstract from the acoustic signal

lexical choice, presence of idiomatic expressions, syntactic structures

Forensic applications require robustness to channel differences

channel adaptation and the identification of features inherently robust to channel difference

Language recognition community increasingly addresses mutually intelligible dialects, not just languages

Decades of research in dialectology suggest that high-level features can enable systems to cluster speakers according to the dialects they speak.

Phanotics (Phonetic Annotation of Typicality in Conversational Speech) seeks to

identify high-level features characteristic of American dialects

annotate a corpus for these features

use the data to develop dialect recognition systems

use the categorization to create better models for speaker recognition

Sponsored by United States Secret Service

MIT Lincoln Laboratory coordinates effort and develops the systems

Linguists from Arizona State and Old Dominion universities consult on dialectal phenomena

LDC and Appen Pty Ltd of Australia annotate data provided by LDC and

Annotation Approach

Annotating large corpora for many high-level features is impractical without

existing data

annotations

technologies that simplify the annotator’s task

Phanotics uses orthographically transcribed data, with the transcripts serving as a guide to potential loci for the features sought

orthographic transcripts, a pronouncing lexicon and a forced aligner generate a putative, time-aligned phonetic transcription that imagines that the speaker’s utterances were standard

high-level features of interest are described as deviations from standard pronunciation

loci in which actual pronunciation differs from the putative standard are potential high-level features

Since

complete phonetic transcription is cost-prohibitive

automatic phonetic transcription is not adequately accurate

we lack dialect studies for every difference one might encounter

we do not count deviations directly but allow the technologies to guide human annotators to expected features.
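A minimal sketch of the "putative standard" idea, assuming a toy pronouncing lexicon with ARPAbet-like symbols. The lexicon contents and the function name `putative_phones` are hypothetical illustrations, not the project's resources; a real pipeline would also run a forced aligner to attach time stamps, which this sketch omits.

```python
# Hypothetical toy pronouncing lexicon (ARPAbet-like symbols).
# A real project would load a full lexicon and use a forced aligner
# to attach start/end times to each phone.
LEXICON = {
    "he": ["HH", "IY"],
    "left": ["L", "EH", "F", "T"],
    "the": ["DH", "AH"],
    "car": ["K", "AA", "R"],
}


def putative_phones(transcript, lexicon):
    """Map each orthographic word to its standard (putative) phone sequence.

    Words missing from the lexicon are paired with None so they can be
    reviewed rather than silently dropped.
    """
    result = []
    for word in transcript.lower().split():
        result.append((word, lexicon.get(word)))
    return result


standard = putative_phones("He left the car", LEXICON)
for word, phones in standard:
    print(word, phones)
```

The output is what a speaker would have produced if every word were pronounced as the lexicon predicts; human annotators then check selected loci against the audio.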

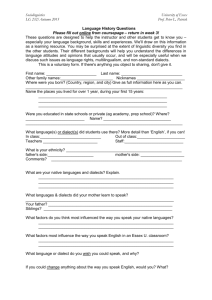

Requirements

Requires natural speech from speakers of target dialects

Initial focus on distinguishing African American Vernacular English (AAVE)

from all other dialects of American English (non-AAVE)

plan to investigate other American dialects later

Selected data collected to minimize the effect of observation

recordings of subjects engaged in conversations

Project requires subjects categorized according to the dialect spoken.

Since the goal is to establish typicality of features by dialect, categorization must be based on something other than the features themselves

relied on self-reported metadata

AAVE

native speakers of American English

born and raised in the United States

ethnically African American

Non-AAVE

American English speakers of other ethnicities

Remove subjects from either pool who later appear mis-categorized.
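The pool definitions above can be sketched as a small function over self-reported metadata. The field names and the `assign_pool` helper are hypothetical. Two choices here are assumptions of this sketch, not stated in the slides: non-AAVE subjects are required to be native speakers, and African American subjects not born and raised in the United States are excluded.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Subject:
    # All fields are self-reported metadata (hypothetical field names).
    native_american_english: bool
    born_and_raised_in_us: bool
    ethnicity: str


def assign_pool(subject: Subject) -> Optional[str]:
    """Return "AAVE", "non-AAVE", or None when the subject fits neither pool."""
    if not subject.native_american_english:
        return None  # assumption: both pools require native speakers
    if subject.ethnicity == "African American":
        # assumption: exclude rather than reassign if not born and raised in the US
        return "AAVE" if subject.born_and_raised_in_us else None
    return "non-AAVE"


print(assign_pool(Subject(True, True, "African American")))  # AAVE
print(assign_pool(Subject(True, True, "Asian American")))    # non-AAVE
```

Because the assignment never looks at pronunciation, the features under study stay independent of the categorization, which is the point of relying on metadata.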

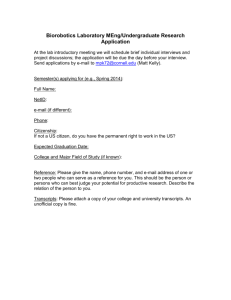

Data Selection

Mixer Corpora

conversational telephone speech (CTS) from LDC; supports robust speaker recognition (SR) development

subjects provided age, sex, occupation, cities born/raised, ethnicity

subjects completed >=10 six-minute calls, speaking to other subjects whom they typically did not know, about assigned topics

bilinguals in Arabic, Mandarin, Russian, and Spanish used those languages & English

7% of calls made in cross-channel recording room (8+ microphones on one side of call)

calls audited for topic and audio quality but not generally transcribed

Although not designed for the current effort, Mixer includes self-reported ethnicity.

Pool contains speakers of multiple American English dialects who

categorized themselves as African American and other ethnicities

126 Mixer calls transcribed by Phanotics project

35 include conversations between two speakers of AAVE

91 include conversations between one AAVE and one non-AAVE speaker

Data Selection

Fisher Corpus

collected at LDC to support STT development within DARPA EARS

subjects provided age, sex, native language, and the cities where they were born and raised

subjects completed 1-25 10-minute calls, speaking to other participants, whom they typically did not know, about assigned topics

calls audited for topic and quality

verbatim, time-aligned orthographic transcripts were produced

lacks crucial information on the ethnicity of the speaker

but some subjects were LDC employees, their family, friends, and colleagues

small number (171) could be assigned to an ethnic category after the fact

StoryCorps® Griot Initiative

funded by Corporation for Public Broadcasting in US

one-year effort to record one-hour interviews of African Americans.

nine recording locations open for up to six weeks each

subjects interview friends and family on topics of their choice

potential users receive instructions on conducting good interviews; a trained facilitator is present

participants receive a free copy of their interview; other copies are archived and distributed

StoryCorps provides Phanotics with selected interviews in exchange for transcripts

Sociolinguistic Interviews

recorded and contributed by researchers working in the United States

variable quality; being reviewed for potential use

Transcription

Most audio lacked transcripts; LDC designed spec for this project.

similar to Fisher Quick Transcription specification

emphasizes speed and accuracy.

annotators segment speech at sentence level

sentences further segmented if >8 seconds; >0.5 seconds internal silence

segments overlap; audio containing no speech left un-segmented

standard orthography, case, punctuation (period, question mark, comma)

“--” marks incomplete sentences and restarts; “-” marks incomplete words

proper names, acronyms, letter strings capitalized

uttered numbers written as words, not as strings of digits

limited set of standard contractions used; non-standard contractions (‘cause for because) written as the full word

obviously mispronounced, idiosyncratic words tagged with ‘+’

no other attempt made to mark dialectal pronunciation; that is accomplished in the annotation phase

limited set of non-lexemes (um, uh) used for filled pauses

speech errors transcribed as produced

limited time spent transcribing disfluencies since these will be rejected

background noises not marked; limited set of markers for speaker noises

transcribers indicate low confidence with double parentheses (()).
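As an illustration of how the markers in this specification could be read back by software, here is a minimal sketch that labels the tokens of one transcript segment. The label names and the `classify_tokens` helper are hypothetical. The sketch assumes that ‘+’ is written as a prefix and that “-” is attached to the end of an incomplete word; the slides do not state where the markers sit.

```python
import re

FILLED_PAUSES = {"um", "uh"}


def classify_tokens(segment):
    """Label each token of a transcript segment by the marker it carries."""
    # Keep each (( ... )) region together as one token; split the rest on whitespace.
    tokens = re.findall(r"\(\(.*?\)\)|\S+", segment)
    labeled = []
    for tok in tokens:
        if tok.startswith("((") and tok.endswith("))"):
            label = "low_confidence"
        elif tok == "--":
            label = "restart"            # incomplete sentence or restart
        elif tok.startswith("+"):
            label = "mispronounced"      # assumption: '+' is a prefix
        elif tok.endswith("-"):
            label = "incomplete_word"    # assumption: '-' is a suffix
        elif tok.lower().strip(".,?") in FILLED_PAUSES:
            label = "filled_pause"
        else:
            label = "word"
        labeled.append((tok, label))
    return labeled


for token, label in classify_tokens("um I wa- -- I ((went)) to the +stoar"):
    print(token, label)
```

The example sentence is invented for illustration and is not taken from the corpus.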

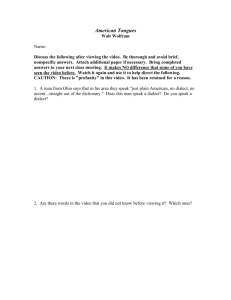

Feature Annotation

Goal: identify features that distinguish dialect from standard

features described as rules that change standard into non-standard

rules apply variably according to internal and external constraints

lexical identity, morphology of affected word, position within sentence, phonological environment, functional effect of change (for example, whether it neutralizes a distinction between two words), the age, sex and socioeconomic class of speakers, the dialects they speak

Examples

reduction of consonant clusters in final position

left => lef’, missed => miss

deletion of r, l, w

car => ca’, palm => pa’m, young ones => young ‘uns

change of the voiced and voiceless interdental fricatives into stops

bother => boda’

Data preparation and customized tools simplify the annotation process

Rules specified as a => b/x_y

a becomes b when preceded by x and followed by y

input+environment, “xay”, constitute search term

input+output a=>b constitute a question to be answered by human

Did the subject say xay or xby?
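The rule notation above can be sketched in code: the input plus its environment ("xay") becomes a search over the putative phone sequence, and the input/output pair becomes the question shown to the annotator. The `Rule` class, the simplified phone symbols and the use of "#" for a word boundary are assumptions of this sketch, not the project's actual rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    a: str  # input segment
    b: str  # output segment ("" stands for deletion)
    x: str  # preceding environment
    y: str  # following environment


def find_rois(phones, rule):
    """Return positions of `a` wherever the sequence x a y occurs."""
    return [
        i
        for i in range(1, len(phones) - 1)
        if (phones[i - 1], phones[i], phones[i + 1]) == (rule.x, rule.a, rule.y)
    ]


def question(rule):
    """Phrase the rule as the choice put to the human annotator."""
    standard = " ".join(s for s in (rule.x, rule.a, rule.y) if s)
    changed = " ".join(s for s in (rule.x, rule.b, rule.y) if s)
    return f"Did the subject say '{standard}' or '{changed}'?"


# Hypothetical rule: t => (nothing) / f _ #   (cluster reduction, left => lef')
rule = Rule(a="t", b="", x="f", y="#")
phones = ["#", "l", "e", "f", "t", "#"]  # simplified symbols for "left"

rois = find_rois(phones, rule)
print(rois)
print(question(rule))
```

Searching the putative transcription rather than the audio is what makes the approach cheap: the annotator only listens to the loci the search returns.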

Feature Annotation

SPAAT (Super Phonetic Annotation and Analysis Tool) designed for rapid annotation and analysis

for each feature, presents list of regions of interest (ROI) where rule may have applied

since transcript & audio previously forced-aligned, annotator can listen to the audio with small amount of preceding and following context

Annotator’s job is to decide whether or not the rule has applied.
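Because each ROI carries start and end times from forced alignment, the playback span with surrounding context is simple arithmetic. The sketch below is not SPAAT's implementation; the function name and the 0.5-second default context are assumptions chosen for illustration.

```python
def playback_window(roi_start, roi_end, audio_duration, context=0.5):
    """Return (start, end) in seconds for playing an ROI with context.

    The window is widened by `context` seconds on each side and clipped
    so that it never extends past the beginning or end of the recording.
    """
    start = max(0.0, roi_start - context)
    end = min(audio_duration, roi_end + context)
    return start, end


print(playback_window(2.0, 3.0, 60.0))    # ROI in the middle of a call
print(playback_window(0.25, 0.75, 60.0))  # ROI near the start is clipped at 0
```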

Initial Results

average time to annotate an ROI ranges 15-25

Approach to measuring inter-annotator agreement (IAA)

distinguishes initial agreement, measured at the beginning of the effort to assess the difficulty of a task,

from measures repeated after thorough documentation has been created and annotators have undergone rigorous training, testing and selection

Initial inter-annotator agreement varies by rule, rule type, annotator and annotator training

absolute average initial agreement across five annotators and all rules was 74.49% on a three-way decision where a feature is annotated as present, intermediate or absent

converted to a two-way decision (feature is present versus intermediate + absent), initial agreement climbs to 85.54%

pairwise agreement by chance in three-way and two-way decisions is, respectively, 11.1% and 25%

initial two-way agreement rates were 83.81% for rules involving substitutions and 91.95% for rules involving reductions and insertions.
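A minimal sketch of how average pairwise agreement and the three-way to two-way conversion could be computed. The labels below are invented for illustration and are not project data, and the function names are hypothetical. The quoted chance figures match (1/3)^2 ≈ 11.1% and (1/2)^2 = 25%, which is the probability that two annotators choosing independently and uniformly both pick one particular category. Reading them this way is an assumption of this sketch.

```python
from itertools import combinations


def pairwise_agreement(labels_by_annotator):
    """Mean, over annotator pairs, of the fraction of ROIs labeled identically."""
    rates = []
    for a, b in combinations(labels_by_annotator, 2):
        same = sum(1 for la, lb in zip(a, b) if la == lb)
        rates.append(same / len(a))
    return sum(rates) / len(rates)


def collapse(label):
    """Two-way decision: present versus intermediate + absent."""
    return "present" if label == "present" else "not_present"


def chance_both_pick_given_category(k):
    """Probability two uniform, independent annotators both pick one fixed category."""
    return (1 / k) ** 2


# Invented labels: three annotators, four ROIs.
three_way = [
    ["present", "intermediate", "absent", "present"],
    ["present", "absent", "absent", "present"],
    ["present", "intermediate", "intermediate", "absent"],
]
two_way = [[collapse(label) for label in row] for row in three_way]

agree3 = pairwise_agreement(three_way)
agree2 = pairwise_agreement(two_way)
print(f"three-way: {agree3:.2%}, two-way: {agree2:.2%}")
print(f"chance, k=3: {chance_both_pick_given_category(3):.1%}")
print(f"chance, k=2: {chance_both_pick_given_category(2):.1%}")
```

Collapsing categories can only merge disagreements, never create them, so two-way agreement is always at least as high as three-way agreement on the same labels.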

Team now working to increase IAA

expanding training program, documentation to include audio examples

decision: form is standard, non-standard, intermediate, unrelated to rule,

indeterminate, ROI is mistaken

creating a small gold standard

Summary

Project connects sociolinguistics and HLT

Seeks to determine typicality of high-level features in distinguishing dialect for forensic purposes

Focuses initially on AAVE; later on other dialects of American English

Uses existing audio from CTS and interviews

Creates transcripts, audio-transcript time-alignments

Combination of these with SPAAT speeds annotation

Initial inter-annotator agreement encouraging

Modifications of spec, training, tool expected to increase IAA

Fisher audio and transcripts already available in LDC’s Catalog

LDC2005S13 Fisher English Training Part 2, Speech

LDC2005T19 Fisher English Training Part 2, Transcripts

LDC2004S13 Fisher English Training Speech Part 1 Speech

LDC2004T19 Fisher English Training Speech Part 1 Transcripts

Mixer audio in queue

StoryCorps Griot and Sociolinguistic Interviews under negotiation

To be distributed after use in the program: Mixer transcripts, annotations, possibly SPAAT
