advertisement

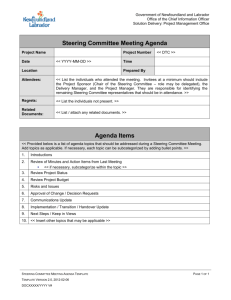

Supercomputing, Visualization & e-Science
Manchester Computing

RealityGrid Software Infrastructure, Integration and Interoperability
Stephen Pickles <stephen.pickles@man.ac.uk>
http://www.realitygrid.org
Royal Society, Tuesday 17 June, 2003

Middleware landscape
Shifting sands

A tour of the Middleware Landscape
- Traditional Grid computing, roots in Supercomputing
  - Globus Toolkit: US led, de-facto standard, adopted by UK e-Science programme
  - UNICORE: German and European
- Business-business integration, roots in e-Commerce
  - Web services
- The great white hope
  - Open Grid Services Architecture (OGSA)

Globus Toolkit™
- A software toolkit addressing key technical problems in the development of Grid-enabled tools, services, and applications
  - Offer a modular "bag of technologies"
  - Enable incremental development of grid-enabled tools and applications
  - Implement standard Grid protocols and APIs
  - Make available under liberal open source license
- The Globus Toolkit™ centers around four key protocols
  - Connectivity layer:
    - Security: Grid Security Infrastructure (GSI)
  - Resource layer:
    - Resource Management: Grid Resource Allocation Management (GRAM)
    - Information Services: Grid Resource Information Protocol (GRIP)
    - Data Transfer: Grid File Transfer Protocol (GridFTP)

Globus features
- "Single sign-on" through Grid Security Infrastructure (GSI)
- Remote execution of jobs
  - GRAM, job-managers, Resource Specification Language (RSL)
- GridFTP
  - Efficient file transfer; third-party file transfers
- MDS (Metacomputing Directory Service)
  - Resource discovery
  - GRIS and GIIS
  - Today, built on LDAP
- Co-allocation (DUROC)
  - Limited by support from scheduling infrastructure
- Other GSI-enabled utilities
  - gsi-ssh, grid-cvs, etc.
- Commodity Grid Kits (CoG-kits): Java, Python, Perl
- Widespread deployment, lots of projects, UK e-Science Grid

UNICORE
UNIform access to COmputing REsources
- Started 1997
- German projects:
  - UNICORE
  - UNICORE PLUS
- EU projects with UK partners:
  - EUROGRID
  - GRIP
- www.unicore.org

UNICORE features
- High-level abstractions
  - Abstract Job Object (AJO) represents a user's job
  - Task dependencies represented in a DAG
    - A task may be a script, a compilation or run of an executable, a file transfer, …
    - Sub-AJOs can be run on different computational resources
  - Software resources (we miss this!)
    - High-level services or capabilities
- Built-in workflow
  - Support for conditional execution and loops (tail-recursive execution of a DAG)
- Graphical user interfaces for job definition and submission
  - Extensible through plug-ins
- Resource broker

Growing awareness of UNICORE
- Early UNICORE-based prototype OGSI implementation by Dave Snelling, May 2002
  - Later used in the first RealityGrid computational steering demonstrations at the UK e-Science All Hands Meeting, September 2002
- Dave Snelling, senior architect of UNICORE and RealityGrid partner, is now co-chair of the OGSI working group at the Global Grid Forum
- UNICORE selected as middleware for the Japanese NAREGI project (National Research Grid Initiative)
  - 5-year project, 2003-2007
  - ~$17M budget in FY2003

Recent UNICORE developments
- GridFTP already supported as alternative file transfer mechanism
  - Multiple streams help even when there is only one network link: pipelining!
  - Support for multiple streams and restartable transfers will go into the UNICORE Protocol Layer (UPL) mid-summer 2003
- UNICORE servers now support streaming (FIFOs)
  - Ideal for simulation-visualization connections, RealityGrid style
- Translators between UNICORE resource descriptions and MDS exist
  - Looking closely at XML-based representation of UNICORE resource descriptions
  - Ontological model to be informed by GLUE schema and NAREGI experiences
- UNICORE programmer's guide (draft)
- OGSI implementation in progress
  - With XML serialization over UPL (faster than SOAP encapsulation, even with MIME types)
  - OGSI portType for Abstract Job Object (AJO) consignment
    - NB. OGSI compliance alone does not imply interoperability with GRAM!
  - Job Management and Monitoring portTypes to follow

UNICORE and Globus

| UNICORE | Globus |
|---|---|
| Java and Perl | ANSI C, Perl, shell scripts |
| Packaged software with GUI: top down, vertical integration | Toolkit approach: bottom up, horizontal integration |
| Not originally open source | Open source |
| Designed with firewalls in mind | Firewalls are a problem |
| Easy to customise | Easy to build on |
| No delegation | Supports delegation |
| Abstract Job Object (AJO) framework, workflow, resource broker | Weak, if any, higher-level abstractions |
| Easy to deploy | |

Web Services
- Loosely coupled distributed computing
  - Think Java RMI or C remote procedure call
- Service Oriented Architecture
- Text-based serialization
  - XML: "human readable" serialization of objects
- Three parts
  - Messages (SOAP)
  - Definition (WSDL)
  - Discovery (UDDI)

Web Services in Action
[Figure: a client (Java/C/browser) uses UDDI (publish/WSDL, search) and talks https/SOAP to a WS platform (InterStage, WebSphere, J2EE, GLUE, SunOne, .NET), which connects over any protocol to legacy enterprise applications and databases.]
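The UNICORE features slide describes a user's job (an AJO) as a DAG of tasks. As a rough illustration of what that representation buys, the C sketch below orders such a DAG with Kahn's topological sort, so that every task starts only after the tasks it depends on. It is not UNICORE's API (the AJO classes are Java); `JobGraph`, `TaskKind` and `schedule_tasks` are hypothetical names, and the fixed-size adjacency matrix is a simplifying assumption.

```c
#include <assert.h>

#define MAX_TASKS 16

/* Hypothetical task kinds, mirroring the examples on the slide. */
typedef enum { TASK_SCRIPT, TASK_COMPILE, TASK_EXECUTE, TASK_TRANSFER } TaskKind;

typedef struct {
    int n_tasks;
    TaskKind kind[MAX_TASKS];
    /* dep[a][b] != 0 means task b may start only after task a has finished */
    int dep[MAX_TASKS][MAX_TASKS];
} JobGraph;

/* Kahn's algorithm: writes a dependency-respecting order into `order` and
   returns how many tasks were placed. A result below n_tasks means the
   graph has a cycle, i.e. it is not a valid DAG. */
int schedule_tasks(const JobGraph *g, int order[MAX_TASKS])
{
    int indegree[MAX_TASKS] = {0};
    int ready[MAX_TASKS];
    int head = 0, tail = 0, placed = 0;

    assert(g->n_tasks >= 0 && g->n_tasks <= MAX_TASKS);

    /* Count unfinished prerequisites of every task. */
    for (int a = 0; a < g->n_tasks; a++)
        for (int b = 0; b < g->n_tasks; b++)
            if (g->dep[a][b])
                indegree[b]++;

    /* Tasks with no prerequisites can start immediately. */
    for (int t = 0; t < g->n_tasks; t++)
        if (indegree[t] == 0)
            ready[tail++] = t;

    /* Run ready tasks; finishing one may release its dependants. */
    while (head < tail) {
        int a = ready[head++];
        order[placed++] = a;
        for (int b = 0; b < g->n_tasks; b++)
            if (g->dep[a][b] && --indegree[b] == 0)
                ready[tail++] = b;
    }
    return placed;
}
```

The slide describes loops as tail-recursive execution of a DAG, which fits this picture: a loop re-runs the schedule rather than adding a cycle to the graph.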
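The "Recent UNICORE developments" slide mentions multiple streams and restartable transfers. The bookkeeping behind both can be sketched in C under simple assumptions: the file is cut into fixed-size blocks dealt round-robin across streams, and a per-block flag records what the receiver has acknowledged, so a restart resends only the missing blocks. This is an illustration, not the GridFTP or UPL wire protocol; all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MAX_BLOCKS 1024

typedef struct {
    uint64_t file_size;
    uint64_t block_size;
    int n_blocks;
    unsigned char done[MAX_BLOCKS]; /* 1 once the receiver has acknowledged the block */
} TransferState;

void transfer_init(TransferState *s, uint64_t file_size, uint64_t block_size)
{
    assert(block_size > 0);
    s->file_size = file_size;
    s->block_size = block_size;
    s->n_blocks = (int)((file_size + block_size - 1) / block_size);
    assert(s->n_blocks <= MAX_BLOCKS);
    memset(s->done, 0, sizeof s->done);
}

/* Blocks are dealt round-robin: stream k carries blocks k, k+n, k+2n, ... */
int block_stream(int block, int n_streams)
{
    assert(n_streams > 0);
    return block % n_streams;
}

/* Length of a block; the last one may be short. */
uint64_t block_length(const TransferState *s, int block)
{
    uint64_t start = (uint64_t)block * s->block_size;
    uint64_t end = start + s->block_size;
    if (end > s->file_size)
        end = s->file_size;
    return end - start;
}

void mark_done(TransferState *s, int block)
{
    assert(block >= 0 && block < s->n_blocks);
    s->done[block] = 1;
}

/* Bytes a restarted transfer still has to send: only unacknowledged blocks. */
uint64_t bytes_remaining(const TransferState *s)
{
    uint64_t total = 0;
    for (int b = 0; b < s->n_blocks; b++)
        if (!s->done[b])
            total += block_length(s, b);
    return total;
}
```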
Enter Grid Services
Experiences of Grid computing (and business process integration) suggest extending Web Services to provide:
- State
  - Service Data Model
- Persistence and naming
  - Two-level naming: Grid Service Handle (GSH) and Grid Service Reference (GSR)
  - Allows dynamic migration and QoS adaptation
- Lifetime management
- Explicit semantics
  - Grid Services specify semantics on top of Web Service syntax
- Infrastructure support for common patterns
  - Factories, registries (service groups), notification

By integrating Grid and Web Services we gain:
- Tools, investment, protocols, languages, implementations, paths to exploitation
- Political weight behind standards and solutions

OGSA - outlook
- The Open Grid Services Infrastructure (OGSI) is the foundation layer for the Open Grid Services Architecture (OGSA)
- OGSA has a lot of industrial and academic weight behind it
  - IBM is committed, Microsoft is engaged, Sun and others are coming on board
  - Globus, UNICORE and others are re-factoring for OGSA
- Goal is to push all OGSI extensions into core WSDL
  - Some early successes
- The OGSI specification is now at the RFC stage, after 12 months of concerted effort by many organisations and individuals
- OGSI promises "a better kind of string" to hold the Grid together. But it's not a "silver bullet".
  - Lacking higher-level semantics
  - Much standardisation effort still required in the Global Grid Forum and elsewhere

OGSI implementations
- Complete
  - Globus Toolkit version 3 (Apache/Axis)
    - Fast and furious pre-releases trying to keep pace with the evolving standard
    - General release Q3 2003
  - .NET implementation from Marty Humphrey, University of Virginia
- Partial
  - Java/UNICORE: Snelling and van den Berghe, FLE
    - Production-quality implementation informed by the May '02 prototype
  - Python: Keith Jackson, LBNL
  - Perl: Mark Mc Keown, University of Manchester
- A GGF panel will report next week.
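The two-level naming on the "Enter Grid Services" slide separates a permanent Grid Service Handle (GSH) from a Grid Service Reference (GSR) that says how to reach the service right now and may expire. A minimal C sketch of a handle-to-reference table, assuming string URLs and one expiry time per reference, shows why this permits migration: clients hold on to the GSH and re-resolve it, while the service rebinds the GSH to a new GSR when it moves. The names and URLs are hypothetical; OGSI itself specifies handle resolution as a service interface (portType), not as this C API.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <time.h>

#define MAX_ENTRIES 32
#define NAME_LEN 128

typedef struct {
    char handle[NAME_LEN];    /* GSH: permanent name of a service instance */
    char reference[NAME_LEN]; /* GSR: current binding, may change or expire */
    time_t good_until;        /* after this time the GSR must be re-resolved */
} NameEntry;

typedef struct {
    int count;
    NameEntry entries[MAX_ENTRIES];
} HandleResolver;

static NameEntry *find_entry(HandleResolver *r, const char *handle)
{
    for (int i = 0; i < r->count; i++)
        if (strcmp(r->entries[i].handle, handle) == 0)
            return &r->entries[i];
    return NULL;
}

/* Bind a handle to a reference; rebinding an existing handle models migration.
   Returns 0 on success, -1 if the table is full. */
int bind_handle(HandleResolver *r, const char *handle,
                const char *reference, time_t good_until)
{
    assert(strlen(handle) < NAME_LEN && strlen(reference) < NAME_LEN);
    NameEntry *e = find_entry(r, handle);
    if (e == NULL) {
        if (r->count == MAX_ENTRIES)
            return -1;
        e = &r->entries[r->count++];
        snprintf(e->handle, NAME_LEN, "%s", handle);
    }
    snprintf(e->reference, NAME_LEN, "%s", reference);
    e->good_until = good_until;
    return 0;
}

/* Resolve a handle at time `now`; NULL if unknown or the reference has expired. */
const char *resolve_handle(HandleResolver *r, const char *handle, time_t now)
{
    NameEntry *e = find_entry(r, handle);
    if (e == NULL || now > e->good_until)
        return NULL;
    return e->reference;
}
```

The expiry field also hints at lifetime management: a reference that is not refreshed simply stops resolving.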
The pieces of RealityGrid
Integration, Independence and Interoperability
Or: Putting it all together

The pieces
- Steering library and tools
- Visualization
  - See also talk by Steven Kenny, Loughborough University
- Web portal
  - More on this and other topics from Jean-Christophe Desplat, EPCC
- ICENI
  - More later from Steven Newhouse, LeSC, Imperial College
- Performance Control
  - More later from John Gurd, CNC, University of Manchester
- Instruments: LUSI, XMT (not this talk)
- Human-Computer Interfaces (HCI)
  - Should not be last in the list!
  - More later from Roy Kalawsky, Loughborough University

Not to mention application codes and the middleware landscape itself.

Design philosophies and tensions
- Grid-enabled
- Component-based and service-oriented
  - Plug in and compose new components and services, from partners and third parties
- Independence and modularity
  - To minimize dependencies on third-party software
    - Should be able to steer locally without any Grid middleware
  - To facilitate parallel development within the project
- Integration and/or interoperability
  - Things should work together
- Respect autonomy of application owners
  - Prefer light-weight instrumentation of application codes to wholesale re-factoring
  - Same source (or binary) should work with or without steering
- Dynamism and adaptability
  - Attach/detach steering client from running application
  - Adapt to prevailing conditions
- Intuitive and appropriate user interfaces

First "Fast Track" Demonstration
Jens Harting at UK e-Science All Hands Meeting, September 2002

"Fast Track" Steering Demo, UK e-Science AHM 2002
[Figure: steering across a firewall. Bezier (SGI Onyx @ Manchester) runs VTK + VizServer; Dirac (SGI Onyx @ QMUL) runs LB3D with the RealityGrid steering API; each site has a UNICORE Gateway and NJS; a laptop at the SHU Conference Centre runs the VizServer client and the steering GUI. Links are labelled "Simulation Data" and "Steering (XML)". Hosting: The Mind Electric GLUE web service hosting environment with OGSA extensions. Single sign-on using UK e-Science digital certificates.]

Steering architecture today
Communication modes:
- Shared file system
- Files moved by UNICORE daemon
- GLOBUS-IO

[Figure: simulation, steering client and visualization components, each linked with the steering library, with data transfer between simulation and visualization.]

Data mostly flows from simulation to visualization. The reverse direction is being exploited to integrate NAMD and VMD into the RealityGrid framework.

Computational steering – how?
- We instrument (add "knobs" and "dials" to) simulation codes through a steering library, written in C
  - Can be called from Fortran90, C and C++
  - Library distribution includes Fortran90 and C examples
- Library features:
  - Pause/resume
  - Checkpoint and restart (new in today's demonstration)
  - Set values of steerable parameters (parameter steer)
  - Report values of monitored (read-only) parameters (parameter watch)
  - Emit "samples" to remote systems, e.g. for on-line visualization
  - Consume "samples" from remote systems, e.g. for resetting boundary conditions
  - Automatic emit/consume with steerable frequency
  - No restrictions on parallelisation paradigm
- Images can be displayed at sites remote from the visualization system, using e.g. SGI OpenGL VizServer
  - Interactivity (rotate, pan, zoom) and shared controls are important

Steering client
- Built using C++ and the Qt library; currently have executables for Linux and IRIX
- Attaches to any steerable RealityGrid application
- Discovers what commands are supported
- Discovers steerable and monitored parameters
- Constructs appropriate widgets on the fly

Implementing steering
Steps required to instrument a code for steering:
- Register supported commands (e.g. pause/resume, checkpoint)
  - steering_initialize()
- Register samples
  - register_io_types()
- Register steerable and monitored parameters
  - register_params()
- Inside the main loop
  - steering_control()
  - Reverse communication model:
    - User code actions, in sequence, each command in the list returned
    - Support routines provided (e.g. emit_sample_slice)
  - When you write a checkpoint, register it
- When finished:
  - steering_finalize()

Steering in an OGSA framework
[Figure: the simulation and the visualization each link the steering library and bind to their own Steering Grid Service (Steering GS); each Steering GS publishes to a Registry; the steering client (also linking the steering library) finds services in the Registry and connects to them; data transfer runs directly between simulation and visualization.]

Steering in OGSA continued…
- Each application has an associated OGSI-compliant "Steering Grid Service" (SGS)
- SGS provides a public interface to the application
  - Use standard grid service technology to do steering
  - Easy to publish our protocol
  - Good for interoperability with other steering clients/portals
  - Future-proofed next step to move away from file-based steering
- Applications still need only make calls to the steering library
- SGSs are used to bootstrap direct inter-component connections for large data transfers
- An early working prototype of the OGSA Steering Grid Service exists
  - Based on a light-weight Perl hosting environment

Steering wishlist
- Logging of all steering activity
  - Not just checkpoints
  - As record of investigative process
  - As basis for scripted steering
- Scripted steering
  - Breakpoints ( IF (temperature > TOO_HOT) THEN … )
  - Replay of previous steering actions
- Advanced checkpoint management to support exploration of parameter space (and code development)
  - Imagine a tree where nodes are checkpoints and branches are choices made through the steering interface (cf. GRASPARC)

On-line visualisation
- Fast track demonstrators use open source VTK for on-line visualisation
  - Simple GUI built with Tk/Tcl, polls for new data to refresh image
  - Some in-built parallelism
- VTK extended to use the steering library
  - AVS-format data supported
  - XDR-format data for sample transfer between platforms
- Volume-data focus so far, but this will change as more steerable applications bring new visualization requirements
  - Volume render (parallel)
  - Isosurface
  - Hedgehog
  - Cut-plane

Visualization experiences
- Positive experiences of SGI VizServer
  - Delivers pixels to remote displays, transparently
  - Reasonable interactivity, even over long distances
    - Will test further over QoS-enabled networks in collaboration with MB-NG
  - Also experimenting with Chromium
- VizServer integration:
  - Put GSI authentication into VizServer PAM when released
  - Use VizServer API for advance reservation of graphics pipes, and
  - Exploit new collaborative capabilities in the latest release
- Access Grid integration: trying two approaches
  - VizServer clients in Access Grid nodes (lose benefits of multicast)
  - Using software from ANL (developers of Chromium) to write from VTK to the video interface of the Access Grid

Visualization – Integration issues
- Traditional Modular Visualization Environments (MVEs) tend to be monolithic
  - Incorporating the simulation in an extended visualization pipeline makes steering possible, but often implies lock-in to a single framework
- When remote execution is supported, it is rarely "Grid compatible"
  - But the UK e-Science project gViz is changing this for Iris Explorer
- Can't easily compose components from different MVEs
  - No single visualization package meets all requirements for features and performance

Portal
- Portal = "web client"
- Includes job launching and monitoring
- EPCC Work package 3: portal integration
  - Initial design document released, work about to start
- Integration issues:
  - Portal must provide steering capability
    - Through the Steering Grid Service
  - On-line visualization is harder for a web client
    - Do only what's practical
- More than 40 UK e-Science projects have a portal work package!
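The "Implementing steering" slide describes a reverse-communication model: `steering_control()` does not call into the simulation; it returns a list of commands that the application's main loop then actions itself. The self-contained C sketch below mimics that loop with a mock library so it can run without any RealityGrid software. Everything prefixed `mock_`/`Mock`, the `CMD_*` codes and the toy physics are hypothetical and do not reproduce the real library's signatures. It also folds in two items from the slides: sample emission at a steerable frequency, and a wishlist-style scripted breakpoint `IF (temperature > TOO_HOT)`, modelled here as the mock queuing a stop command.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical command codes; the real steering library defines its own. */
enum { CMD_CHECKPOINT = 1, CMD_STOP = 2 };

#define MAX_CMDS 8

/* Stand-in for steering-library state. A remote steering client would
   normally queue the commands; here the caller queues them directly. */
typedef struct {
    int n_pending;
    int pending[MAX_CMDS];
    double *watched;  /* monitored parameter tested by the breakpoint */
    double too_hot;   /* breakpoint: stop once *watched > too_hot */
    int emit_every;   /* steerable sample frequency, in steps (0 = never) */
} MockSteerer;

void mock_queue_command(MockSteerer *s, int cmd)
{
    assert(s->n_pending < MAX_CMDS);
    s->pending[s->n_pending++] = cmd;
}

/* Reverse communication: hand back the pending commands for the caller to
   action in sequence, and report whether a sample is due at this step. */
int mock_steering_control(MockSteerer *s, int step,
                          int cmds[MAX_CMDS], int *emit_now)
{
    if (s->watched != NULL && *s->watched > s->too_hot)
        mock_queue_command(s, CMD_STOP); /* scripted breakpoint fires */
    int n = s->n_pending;
    for (int i = 0; i < n; i++)
        cmds[i] = s->pending[i];
    s->n_pending = 0;
    *emit_now = s->emit_every > 0 && step % s->emit_every == 0;
    return n;
}

typedef struct { int steps_run, checkpoints, samples; } RunStats;

/* Toy main loop instrumented in the style of the slide. */
RunStats run_instrumented(MockSteerer *s, double *temperature, int max_steps)
{
    RunStats st = {0, 0, 0};
    int cmds[MAX_CMDS];
    int emit_now;
    for (int step = 0; step < max_steps; step++) {
        int n = mock_steering_control(s, step, cmds, &emit_now);
        for (int i = 0; i < n; i++) {
            if (cmds[i] == CMD_CHECKPOINT)
                st.checkpoints++;  /* write a checkpoint, then register it */
            else if (cmds[i] == CMD_STOP)
                return st;         /* the finalize call would go here */
        }
        if (emit_now)
            st.samples++;          /* e.g. emit a slice for visualization */
        *temperature += 10.0;      /* stand-in for one time step */
        st.steps_run++;
    }
    return st;
}
```

In the real library, the registration calls named on the slide (`steering_initialize()`, `register_io_types()`, `register_params()`) would set up what this sketch assigns directly in the `MockSteerer` struct, and `steering_finalize()` would run where the loop returns.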
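The steering wishlist's checkpoint tree, in which nodes are checkpoints and branches are choices made through the steering interface, maps naturally onto a parent-pointer tree. In the hypothetical C sketch below, each node records its parent, the time step and a text label for the steering change; walking from a node back to the root recovers the sequence of choices a replay would need. The names, label format and fixed capacity are assumptions for illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_CHECKPOINTS 64
#define LABEL_LEN 64

typedef struct {
    int parent;             /* -1 for the initial state */
    int step;               /* simulation time step of the checkpoint */
    char choice[LABEL_LEN]; /* steering change made since the parent */
} CheckpointNode;

typedef struct {
    int count;
    CheckpointNode nodes[MAX_CHECKPOINTS];
} CheckpointTree;

/* Record a checkpoint taken after steering away from `parent`; returns its id. */
int add_checkpoint(CheckpointTree *t, int parent, int step, const char *choice)
{
    assert(t->count < MAX_CHECKPOINTS);
    assert(parent >= -1 && parent < t->count);
    CheckpointNode *n = &t->nodes[t->count];
    n->parent = parent;
    n->step = step;
    snprintf(n->choice, LABEL_LEN, "%s", choice);
    return t->count++;
}

/* Fill `path` with ids from the root down to `id` and return its length;
   replaying the choices along this path reproduces the branch. */
int path_to(const CheckpointTree *t, int id, int path[MAX_CHECKPOINTS])
{
    int len = 0;
    assert(id >= 0 && id < t->count);
    for (int n = id; n != -1; n = t->nodes[n].parent)
        path[len++] = n;
    for (int i = 0; i < len / 2; i++) { /* reverse: root first */
        int tmp = path[i];
        path[i] = path[len - 1 - i];
        path[len - 1 - i] = tmp;
    }
    return len;
}
```

Because a parent must already exist when a child is added, the structure can never contain a cycle, so the walk to the root always terminates.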
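The "On-line visualisation" slide uses XDR-format data to move samples between platforms. XDR (RFC 4506) encodes a double as an 8-byte IEEE 754 value with the most significant byte first, so both ends agree regardless of native byte order. The C sketch below writes and reads that layout by hand. It assumes the host's `double` is IEEE 754 and shares its byte order with `uint64_t` (true on common platforms); production code would more likely use the system XDR routines such as `xdr_double()` from `<rpc/xdr.h>`. The function names here are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Write x as an XDR double: 8 bytes of IEEE 754, most significant first.
   Assumes IEEE 754 doubles sharing byte order with uint64_t. */
void encode_xdr_double(unsigned char out[8], double x)
{
    uint64_t bits;
    assert(sizeof x == sizeof bits);
    memcpy(&bits, &x, sizeof bits);
    for (int i = 7; i >= 0; i--) {
        out[i] = (unsigned char)(bits & 0xFFu);
        bits >>= 8;
    }
}

/* Read an XDR double (8 big-endian bytes) back into a native double. */
double decode_xdr_double(const unsigned char in[8])
{
    uint64_t bits = 0;
    double x;
    for (int i = 0; i < 8; i++)
        bits = (bits << 8) | in[i];
    memcpy(&x, &bits, sizeof x);
    return x;
}

/* Encode an array of samples (e.g. one slice of volume data); returns bytes written. */
size_t encode_xdr_doubles(unsigned char *out, const double *v, size_t n)
{
    for (size_t i = 0; i < n; i++)
        encode_xdr_double(out + 8 * i, v[i]);
    return 8 * n;
}
```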
ICENI (deep track)
- ICENI provides
  - Metadata-rich component framework
  - Exposure of components as OGSA services
  - Steering and visualization capabilities of its own
  - Rich set of higher-level services
  - Potentially a layer between RealityGrid and the middleware landscape
- Recent developments
  - First controlled release of ICENI within the project, to CNC
  - Integration of "binary components" (executables)
  - Dynamic composition in progress (important for attach/detach)
  - More in talk by Steven Newhouse
- ICENI integration challenges:
  - Describe and compose the components of a "fast track" computational steering application in the ICENI language
  - Reconcile "fast track" and ICENI steering entities and concepts

Performance Control
More in John Gurd's talk.
[Figure: an application performance steerer coordinates components 1, 2 and 3, each with its own component performance steerer.]

Performance Control (deep track)
- Component loader supports parallel job migration
- Relies on checkpoint/restart software
  - Proven in LB3D
  - Plan to generalise, co-ordinating with the Grid-CPR working group of GGF
  - Malleable: from N processors on platform X to M processors on platform Y
- Integration issues:
  - Must work with ICENI components
    - Groundwork laid
  - Application codes must be instrumented for Performance Control
  - Steering library
    - Both trigger the taking of checkpoints
    - Steering system needs to know when a component migrates
    - Embedding Performance Control in the steering library may be feasible

Human Computer Interfaces
- Recommendations from the HCI Audit are beginning to inform a wide range of RealityGrid developments
  - Steering
  - Visualization
  - Web portal
  - Workflow
  - Management of scientific process
- Sometimes architecturally separate concepts should be presented in a single interface to the end-user
- More in Roy Kalawsky's talk

Middleware, again
- All this has to build on, and interface with, the shifting sands of the middleware landscape
- We seem to be spoiled for choice
  - Globus, UNICORE, Web Services and ICENI are all on an OGSA convergence track
  - with the promise of interoperability
  - but at a very low level
- Higher-level services will emerge
  - But will they be timely?
  - Will they be backed by standards?
  - Will we be able to use them?

Widespread deployment
- Short term, GT 2 is indicated
  - To gain leverage from the common infrastructure of the UK e-Science programme
    - Grid Support Centre, Level 2 Grid, Training, Engineering Task Force, etc.
- Hence our recent work on RealityGrid-L2
  - Demonstrated on the Level 2 Grid at the LeSC open day in April
- But it's hard
  - Deployment and maintenance overhead of Globus is high
  - We need "high quality" cycles for capability computing
  - Gaining access to L2G resources is still painful, but slow progress is being made
  - Much work required to adapt applications and scripts to available L2G resources
    - Discovering useful information (queue names and policies; location of compilers, libraries, tools and scratch space; retention policies, etc.) is still problematic

External dependencies
- Robustness of middleware
- Maturity of OGSA and availability of services
- Tooling support for web services in languages other than Java and C#
- Support for advance reservation and co-allocation
  - Need to co-allocate (or co-schedule)
    - (a) multiple processors to run a parallel simulation code,
    - (b) multiple graphics pipes and processors on the visualization system
  - More complex scenarios involve multi-component applications.
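The "Performance Control (deep track)" slide calls migration malleable: a checkpoint written by N processors is restarted on M. Assuming, purely for illustration, a 1-D global array of `n` elements split into contiguous blocks, the C sketch below computes each rank's block and which old ranks' pieces a new rank must read on restart. LB3D's actual decomposition and checkpoint format may well differ; the function names are hypothetical.

```c
#include <assert.h>

/* 1-D block decomposition: rank r of p owns global indices [*lo, *hi). */
void block_range(long n, int p, int r, long *lo, long *hi)
{
    assert(n >= 0 && p > 0 && r >= 0 && r < p);
    *lo = (long)((long long)r * n / p);
    *hi = (long)((long long)(r + 1) * n / p);
}

/* Old ranks (of p) whose checkpointed blocks overlap new rank r_new's block
   (of m); these are the pieces r_new must read when restarting. Writes them
   to `sources` and returns how many there are. */
int restart_sources(long n, int p, int m, int r_new,
                    int sources[], int max_sources)
{
    long lo, hi;
    int count = 0;
    block_range(n, m, r_new, &lo, &hi);
    if (lo >= hi)
        return 0; /* this new rank owns nothing */
    for (int r_old = 0; r_old < p; r_old++) {
        long olo, ohi;
        block_range(n, p, r_old, &olo, &ohi);
        if (olo < ohi && olo < hi && lo < ohi) { /* half-open ranges intersect */
            assert(count < max_sources);
            sources[count++] = r_old;
        }
    }
    return count;
}
```

For example, with 12 elements moved from 4 ranks to 3, new rank 1 owns elements 4 to 7 and must read the checkpointed blocks of old ranks 1 and 2.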
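Co-allocation, listed above as an external dependency, amounts to finding a time when every required resource (processors for the simulation, graphics pipes for visualization) is free at once. Assuming each resource can report its free windows, the kind of information advance reservation would expose, the C sketch below returns the earliest start at which all resources are free for a given duration. It relies on the fact that the earliest common start always coincides with the start of some resource's free window, so only those starts need checking. Times are assumed non-negative; the names are hypothetical and this is not the interface of DUROC or any real scheduler.

```c
#include <assert.h>

typedef struct { long start, end; } Slot;   /* free window [start, end) */

typedef struct {
    const Slot *slots;                       /* one resource's free windows */
    int n_slots;
} Calendar;

/* Is this resource free for the whole of [t, t + duration)? */
static int fits(const Calendar *c, long t, long duration)
{
    for (int i = 0; i < c->n_slots; i++)
        if (c->slots[i].start <= t && t + duration <= c->slots[i].end)
            return 1;
    return 0;
}

/* Earliest time at which every resource is free for `duration`, or -1. */
long earliest_coallocation(const Calendar *cals, int n_cals, long duration)
{
    long best = -1;
    assert(n_cals > 0 && duration > 0);
    for (int c = 0; c < n_cals; c++) {
        for (int i = 0; i < cals[c].n_slots; i++) {
            long t = cals[c].slots[i].start; /* candidate common start */
            if (best != -1 && t >= best)
                continue;
            int ok = 1;
            for (int k = 0; k < n_cals && ok; k++)
                ok = fits(&cals[k], t, duration);
            if (ok)
                best = t;
        }
    }
    return best;
}
```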
Conclusions
- We've achieved a lot
- But there are tough challenges ahead

Partners
- Academic
  - University College London
  - Queen Mary, University of London
  - Imperial College
  - University of Manchester
  - University of Edinburgh
  - University of Oxford
  - University of Loughborough
- Industrial
  - Schlumberger
  - Edward Jenner Institute for Vaccine Research
  - Silicon Graphics Inc
  - Computation for Science Consortium
  - Advanced Visual Systems
  - Fujitsu

SVE @ Manchester Computing
Bringing Science and Supercomputers Together
http://www.man.ac.uk/sve
sve@man.ac.uk