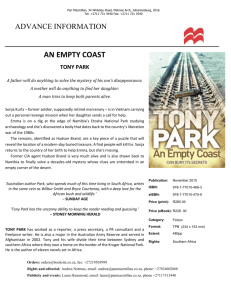

Tony Jebara, Columbia University

Advanced Machine Learning & Perception

Instructor: Tony Jebara

Boosting

•Combining Multiple Classifiers

•Voting

•Boosting

•Adaboost

•Based on material by Y. Freund, P. Long & R. Schapire

Combining Multiple Learners

•Have many simple learners

•Also called base learners or weak learners, which have a classification error of <0.5

•Combine or vote them to get a higher accuracy

•No free lunch: there is no guaranteed best approach here

•Different approaches:

Voting: combine learners with fixed weight

Mixture of Experts: adjust learners and a variable weight/gate fn

Boosting: actively search for next base-learners and vote

Cascading, Stacking, Bagging, etc.

Voting

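•Have T classifiers $h_t(x)$

•Average their prediction with weights

$$f(x) = \sum_{t=1}^{T} \alpha_t h_t(x) \quad \text{where } \alpha_t \ge 0 \text{ and } \sum_{t=1}^{T} \alpha_t = 1$$

•Like mixture of experts but weight is constant with input

[Figure: the input x = (x1, x2, x3) feeds three experts; their outputs are combined with constant weights α into the output y]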
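The fixed-weight vote on this slide can be sketched in a few lines of Python. The three threshold classifiers and the weights below are hypothetical, chosen only to illustrate the formula; they are not from the lecture.

```python
import numpy as np

def vote(classifiers, alphas, x):
    """Fixed-weight vote f(x) = sum_t alpha_t * h_t(x)."""
    alphas = np.asarray(alphas, dtype=float)
    # The slide requires alpha_t >= 0 and sum_t alpha_t = 1.
    assert np.all(alphas >= 0) and np.isclose(alphas.sum(), 1.0)
    preds = np.array([h(x) for h in classifiers], dtype=float)
    return float(alphas @ preds)

# Three hypothetical threshold classifiers on a scalar input.
classifiers = [
    lambda x: 1.0 if x > 0 else -1.0,
    lambda x: 1.0 if x > 1 else -1.0,
    lambda x: 1.0 if x > 2 else -1.0,
]
alphas = [0.5, 0.3, 0.2]

# At x = 1.5 the first two classifiers say +1 and the third says -1.
score = vote(classifiers, alphas, 1.5)   # 0.5 + 0.3 - 0.2
```

The weights do not depend on x, which is exactly what separates voting from a mixture of experts.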

Mixture of Experts

•Have T classifiers or experts $h_t(x)$ and a gating fn $\alpha_t(x)$

•Average their prediction with variable weights

•But, adapt parameters of the gating function and the experts (fixed total number T of experts)

$$f(x) = \sum_{t=1}^{T} \alpha_t(x) h_t(x) \quad \text{where } \alpha_t(x) \ge 0 \text{ and } \sum_{t=1}^{T} \alpha_t(x) = 1$$

[Figure: the input x = (x1, x2, x3) feeds three experts and a gating network; the gating network weights the expert outputs to form the output]
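A minimal sketch of the input-dependent weighting, assuming linear experts and a softmax gating function. The slide does not specify either choice; the softmax is used here only because it satisfies the two constraints on $\alpha_t(x)$. All parameter values are random placeholders.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax: non-negative outputs that sum to 1."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def mixture_of_experts(x, expert_weights, gate_weights):
    """f(x) = sum_t alpha_t(x) h_t(x), with assumed linear experts and softmax gate."""
    x = np.asarray(x, dtype=float)
    h = expert_weights @ x              # h_t(x), shape (T,)
    alpha = softmax(gate_weights @ x)   # alpha_t(x), shape (T,)
    return float(alpha @ h), alpha, h

rng = np.random.default_rng(0)
T, d = 3, 3                             # three experts, three inputs as in the figure
expert_weights = rng.normal(size=(T, d))
gate_weights = rng.normal(size=(T, d))
f, alpha, h = mixture_of_experts([1.0, -2.0, 0.5], expert_weights, gate_weights)
```

Because the gate weights are a convex combination, the output always lies between the smallest and largest expert prediction for that input.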

Boosting

•Actively find complementary or synergistic weak learners

•Train next learner based on mistakes of previous ones.

•Average prediction with fixed weights

•Find next learner by training on weighted versions of data.

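[Diagram: the training data $\{(x_1,y_1),\ldots,(x_N,y_N)\}$ and weights $\{w_1,\ldots,w_N\}$ are passed to the weak learner, which returns a weak rule $h_t(x)$ whose weighted error satisfies]

$$\frac{\sum_{i=1}^{N} w_i \,\mathrm{step}\left(-h_t(x_i)\,y_i\right)}{\sum_{i=1}^{N} w_i} < \frac{1}{2} - \gamma$$

[The weak rules form an ensemble of learners $\{(\alpha_1,h_1),\ldots,(\alpha_T,h_T)\}$ with prediction]

$$f(x) = \sum_{t=1}^{T} \alpha_t h_t(x)$$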

AdaBoost

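•Most popular weighting scheme

•Define margin for point i as $y_i \sum_{t=1}^{T} \alpha_t h_t(x_i)$

•Find an $h_t$ and find weight $\alpha_t$ to min the cost function (sum of exp-margins)

$$\sum_{i=1}^{N} \exp\left(-y_i \sum_{t=1}^{T} \alpha_t h_t(x_i)\right)$$

[Diagram: the training data $\{(x_1,y_1),\ldots,(x_N,y_N)\}$ and weights $\{w_1,\ldots,w_N\}$ are passed to the weak learner, which returns a weak rule $h_t(x)$ whose weighted error satisfies]

$$\frac{\sum_{i=1}^{N} w_i \,\mathrm{step}\left(-h_t(x_i)\,y_i\right)}{\sum_{i=1}^{N} w_i} < \frac{1}{2} - \gamma$$

[The weak rules form an ensemble of learners $\{(\alpha_1,h_1),\ldots,(\alpha_T,h_T)\}$ with prediction]

$$f(x) = \sum_{t=1}^{T} \alpha_t h_t(x)$$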

AdaBoost

•Choose base learner & $\alpha_t$:

$$\min_{\alpha_t, h_t} \sum_{i=1}^{N} \exp\left(-y_i \sum_{t=1}^{T} \alpha_t h_t(x_i)\right)$$

•Recall error of base classifier $h_t$ must be

$$\epsilon_t = \frac{\sum_{i=1}^{N} w_i \,\mathrm{step}\left(-h_t(x_i)\,y_i\right)}{\sum_{i=1}^{N} w_i} < \frac{1}{2} - \gamma$$

•For binary h, Adaboost puts this weight on weak learners (instead of the more general rule):

$$\alpha_t = \frac{1}{2} \ln\left(\frac{1-\epsilon_t}{\epsilon_t}\right)$$

•Adaboost picks the following for the weights on data for the next round (here Z is the normalizer to sum to 1)

$$w_i^{t+1} = \frac{w_i^t \exp\left(-\alpha_t y_i h_t(x_i)\right)}{Z_t}$$
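One round of these updates can be sketched as follows, assuming a binary weak rule with outputs in {-1, +1} and a weighted error strictly between 0 and 1/2. The five-point data set is a made-up example, not from the lecture.

```python
import numpy as np

def adaboost_round(w, y, h_pred):
    """One AdaBoost round for a binary weak rule.

    w: current data weights, y: labels in {-1, +1}, h_pred: h_t(x_i).
    Returns (eps_t, alpha_t, next weights, Z_t).
    Assumes 0 < eps_t < 1/2 so the logarithm is finite and positive.
    """
    w, y, h_pred = (np.asarray(a, dtype=float) for a in (w, y, h_pred))
    miss = (h_pred * y) < 0                      # step(-h_t(x_i) y_i)
    eps = w[miss].sum() / w.sum()                # weighted error
    alpha = 0.5 * np.log((1.0 - eps) / eps)      # weight on the weak learner
    unnorm = w * np.exp(-alpha * y * h_pred)     # up-weight mistakes
    Z = unnorm.sum()                             # normalizer Z_t
    return eps, alpha, unnorm / Z, Z

y = np.array([+1, +1, -1, -1, +1])
h_pred = np.array([+1, -1, -1, -1, +1])          # one mistake, on point 1
w = np.full(5, 0.2)
eps, alpha, w_next, Z = adaboost_round(w, y, h_pred)
```

With one of five equally weighted points wrong, the error is 0.2, the learner weight is ln 2, and the single misclassified point carries half of the total weight in the next round.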

Decision Trees

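[Figure: a decision tree with splits X>3 and Y>5 and leaf predictions +1 / -1, shown beside the partition of the (X, Y) plane it induces (boundaries at X = 3 and Y = 5)]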

Decision tree as a sum

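[Figure: a decision tree with splits X>3 and Y>5 written as a sum of real-valued node contributions (values such as -0.2, +0.2, +0.1, -0.1, -0.3); the +1 / -1 prediction is the sign of the sum. Shown beside the corresponding partition of the (X, Y) plane]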

An alternating decision tree

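[Figure: an alternating decision tree with splits Y<1, X>3 and Y>5 and real-valued node contributions (values such as -0.2, 0.0, +0.2, +0.7, -0.1, +0.1, -0.3); the +1 / -1 prediction is the sign of the sum. Shown beside the corresponding partition of the (X, Y) plane]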

Example: Medical Diagnostics

•Cleve dataset from UC Irvine database.

•Heart disease diagnostics (+1=healthy,-1=sick)

•13 features from tests (real valued and discrete).

•303 instances.

Ad-Tree Example

Cross-validated accuracy

| Learning algorithm | Number of splits | Average test error | Test error variance |
|---|---|---|---|
| ADtree | 6 | 17.0% | 0.6% |
| C5.0 | 27 | 27.2% | 0.5% |
| C5.0 + boosting | 446 | 20.2% | 0.5% |
| Boost Stumps | 16 | 16.5% | 0.8% |

AdaBoost Convergence

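•Rationale?

•Consider bound on the training error:

$$\begin{aligned}
R_{emp} &= \frac{1}{N} \sum_{i=1}^{N} \mathrm{step}\left(-y_i f(x_i)\right) && \text{(0-1 loss)} \\
&\le \frac{1}{N} \sum_{i=1}^{N} \exp\left(-y_i f(x_i)\right) && \text{(exp bound on step)} \\
&= \frac{1}{N} \sum_{i=1}^{N} \exp\left(-y_i \sum_{t=1}^{T} \alpha_t h_t(x_i)\right) && \text{(definition of } f(x)\text{)} \\
&= \prod_{t=1}^{T} Z_t && \text{(recursive use of } Z\text{)}
\end{aligned}$$

[Figure: loss plotted against margin, with mistakes at negative margin and correct classifications at positive margin; curves labeled 0-1 loss, Logitboost and Brownboost]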

•Adaboost is essentially doing gradient descent on this.

•Convergence?
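The chain of (in)equalities in the bound can be checked numerically. The sketch below runs a few AdaBoost rounds on a made-up problem with hypothetical weak rules (rule t copies the labels except on every fourth point), starting from uniform weights 1/N, and compares the 0-1 training error, the average exponential loss, and the product of the normalizers.

```python
import numpy as np

N, T = 40, 4
idx = np.arange(N)
y = np.where(idx % 2 == 0, 1.0, -1.0)
# Hypothetical weak rules: rule t agrees with y except where i % 4 == t.
H = np.array([np.where(idx % 4 == t, -y, y) for t in range(T)])

w = np.full(N, 1.0 / N)      # initial weights w_i^1 = 1/N
f = np.zeros(N)              # running ensemble output f(x_i)
Z_prod = 1.0
for h in H:
    eps = w[h != y].sum()                    # weighted error (weights sum to 1)
    alpha = 0.5 * np.log((1.0 - eps) / eps)
    unnorm = w * np.exp(-alpha * y * h)
    Z = unnorm.sum()
    w = unnorm / Z
    Z_prod *= Z
    f += alpha * h

zero_one = np.mean(y * f <= 0)               # R_emp, counting ties as mistakes
exp_loss = np.mean(np.exp(-y * f))           # (1/N) sum_i exp(-y_i f(x_i))
```

The average exponential loss matches the product of the $Z_t$ up to rounding, and it upper-bounds the 0-1 error.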

AdaBoost Convergence

•Convergence? Consider the binary ht case.

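$$\begin{aligned}
R_{emp} &\le \prod_{t=1}^{T} Z_t = \prod_{t=1}^{T} \sum_{i=1}^{N} w_i^t \exp\left(-\alpha_t y_i h_t(x_i)\right) \\
&= \prod_{t=1}^{T} \sum_{i=1}^{N} w_i^t \exp\left(\ln\left(\frac{\epsilon_t}{1-\epsilon_t}\right) \tfrac{1}{2}\, y_i h_t(x_i)\right) \\
&= \prod_{t=1}^{T} \sum_{i=1}^{N} w_i^t \left(\sqrt{\frac{\epsilon_t}{1-\epsilon_t}}\right)^{y_i h_t(x_i)} \\
&= \prod_{t=1}^{T} \left( \sum_{\text{correct}} w_i^t \sqrt{\frac{\epsilon_t}{1-\epsilon_t}} + \sum_{\text{incorrect}} w_i^t \sqrt{\frac{1-\epsilon_t}{\epsilon_t}} \right) \\
&= \prod_{t=1}^{T} 2\sqrt{\epsilon_t (1-\epsilon_t)} = \prod_{t=1}^{T} \sqrt{1 - 4\gamma_t^2} \le \exp\left(-2 \sum_t \gamma_t^2\right)
\end{aligned}$$

using

$$\alpha_t = \frac{1}{2} \ln\left(\frac{1-\epsilon_t}{\epsilon_t}\right), \qquad \epsilon_t = \frac{1}{2} - \gamma_t \le \frac{1}{2} - \gamma$$

$$R_{emp} \le \exp\left(-2 \sum_t \gamma_t^2\right) \le \exp\left(-2 T \gamma^2\right)$$

So, the final learner's training error converges to zero exponentially fast in T if each weak learner beats chance by at least $\gamma$!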
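A small arithmetic check of the last two steps, assuming every weak learner has the same edge of 0.1 over chance for 100 rounds (illustrative numbers, not from the lecture). It compares the exact product of the $2\sqrt{\epsilon_t(1-\epsilon_t)}$ terms with the looser exponential bound.

```python
import math

def training_error_bounds(gammas):
    """Return (prod_t 2*sqrt(eps_t*(1-eps_t)), exp(-2*sum_t gamma_t^2))
    for edges gamma_t, with eps_t = 1/2 - gamma_t."""
    prod_Z = 1.0
    for g in gammas:
        eps = 0.5 - g
        prod_Z *= 2.0 * math.sqrt(eps * (1.0 - eps))
    loose = math.exp(-2.0 * sum(g * g for g in gammas))
    return prod_Z, loose

# Assumed: T = 100 rounds, each with edge gamma_t = 0.1.
tight, loose = training_error_bounds([0.1] * 100)   # loose is exp(-2), about 0.135
```

The product is always at most the exponential bound because $\sqrt{1-x} \le \exp(-x/2)$ for $0 \le x \le 1$.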

Curious phenomenon

Boosting decision trees

Using <10,000 training examples we fit >2,000,000 parameters

Explanation using margins

[Figure: 0-1 loss plotted against margin]

Explanation using margins

[Figure: 0-1 loss plotted against margin, annotated "No examples with small margins!!"]

Experimental Evidence

AdaBoost Generalization Bound

•Also, a VC analysis gives a generalization bound (where d is VC of base classifier):

$$R \le R_{emp} + O\left(\sqrt{\frac{T d}{N}}\right)$$

•But, this suggests more iterations lead to overfitting!

•A margin analysis is possible, redefine margin as:

$$\mathrm{mar}_f(x, y) = \frac{y \sum_t \alpha_t h_t(x)}{\sum_t \alpha_t}$$

Then have

$$R \le \frac{1}{N} \sum_{i=1}^{N} \mathrm{step}\left(\theta - \mathrm{mar}_f(x_i, y_i)\right) + O\left(\sqrt{\frac{d}{N \theta^2}}\right)$$
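The normalized margin and the empirical term of the margin bound can be computed as in the sketch below. The ensemble weights, weak-rule outputs and labels are made-up values, and ties at exactly θ are treated as not counted, which is a choice the slide leaves open.

```python
import numpy as np

def normalized_margins(alphas, H, y):
    """mar_f(x_i, y_i) = y_i * sum_t alpha_t h_t(x_i) / sum_t alpha_t.

    H has shape (T, N): row t holds h_t(x_i) in {-1, +1}.
    """
    alphas = np.asarray(alphas, dtype=float)
    return y * (alphas @ H) / alphas.sum()

def margin_error(margins, theta):
    """(1/N) sum_i step(theta - margin_i): fraction of points with margin below theta."""
    return float(np.mean(margins < theta))

alphas = [0.7, 0.4, 0.3]                       # hypothetical ensemble weights
H = np.array([[+1, +1, -1, -1],
              [+1, -1, -1, +1],
              [+1, +1, +1, -1]], dtype=float)  # hypothetical weak-rule outputs
y = np.array([+1, +1, -1, -1], dtype=float)

m = normalized_margins(alphas, H, y)
small_margin_fraction = margin_error(m, 0.5)
```

All four points are classified correctly (positive margin), yet two of them have margin below 0.5, so the margin term distinguishes ensembles that the plain training error cannot.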

AdaBoost Generalization Bound

•Suggests this optimization problem:

[Figure: plot against margin]

AdaBoost Generalization Bound

•Proof Sketch

UCI Results

% test error rates

| Database | Other | Boosting | Error reduction |
|---|---|---|---|
| Cleveland | 27.2 (DT) | 16.5 | 39% |
| Promoters | 22.0 (DT) | 11.8 | 46% |
| Letter | 13.8 (DT) | 3.5 | 74% |
| Reuters 4 | 5.8, 6.0, 9.8 | 2.95 | ~60% |
| Reuters 8 | 11.3, 12.1, 13.4 | 7.4 | ~40% |

Boosted Cascade of Stumps

•Consider classifying an image as face/notface

•Use as weak learner a stump on averages of pixel intensity

•Easy to calculate, white areas subtracted from black ones

•A special representation of the sample called the integral image makes feature extraction faster.

Boosted Cascade of Stumps

•Summed area tables

•A representation that means the sum of the pixel values in any rectangle can be calculated in four accesses of the integral image.
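A minimal sketch of a summed area table with NumPy, assuming the common convention of prepending a zero row and column so that no boundary checks are needed. The function names and the test image are illustrative.

```python
import numpy as np

def integral_image(img):
    """Summed area table with a zero row and column prepended:
    ii[r, c] = sum of img[:r, :c]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of img[top:top+height, left:left+width] from four lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]

# A 24 x 24 test image with distinct pixel values.
img = np.arange(24 * 24).reshape(24, 24)
ii = integral_image(img)
patch_sum = rect_sum(ii, 3, 5, 4, 6)
```

Building the table costs one pass over the image; after that every rectangle sum costs the same four lookups regardless of the rectangle's size.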

Tony Jebara, Columbia University

Boosted Cascade of Stumps

•The base size for a sub window is 24 by 24 pixels.

•Each of the four feature types is scaled and shifted across all possible combinations

•In a 24 pixel by 24 pixel sub window there are ~160,000 possible features to be calculated.
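The order of magnitude can be reproduced by brute-force counting. The sketch below assumes five base rectangle shapes (two-rectangle horizontal and vertical, three-rectangle horizontal and vertical, and a 2 x 2 four-rectangle), which is one common way of arriving at a figure near 160,000; the four feature types on the slide may group these shapes differently.

```python
def count_features(window, base_shapes):
    """Count every scaled and shifted placement of each base shape.

    A base shape (bw, bh) is scaled to widths bw, 2*bw, ... and heights
    bh, 2*bh, ... that fit in the window, then shifted to every position.
    """
    total = 0
    for bw, bh in base_shapes:
        for w in range(bw, window + 1, bw):
            for h in range(bh, window + 1, bh):
                total += (window - w + 1) * (window - h + 1)
    return total

# Assumed base shapes as (width, height) in cells; not stated on the slide.
shapes = [(2, 1), (1, 2), (3, 1), (1, 3), (2, 2)]
n_features = count_features(24, shapes)   # 162336 for a 24 x 24 window
```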

Boosted Cascade of Stumps

•Viola-Jones algorithm, with K attributes (e.g., K = 160,000) we have 160,000 different decision stumps to choose from

At each stage of boosting:

•given reweighted data from previous stage

•Train all K (160,000) single-feature perceptrons

•Select the single best classifier at this stage

•Combine it with the other previously selected classifiers

•Reweight the data

•Learn all K classifiers again, select the best, combine, reweight

•Repeat until you have T classifiers selected

•Very computationally intensive!
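The loop above can be sketched as follows, using exhaustive threshold stumps as the single-feature classifiers and the binary AdaBoost weight rule. This is an illustrative sketch under those assumptions, not the Viola-Jones implementation; the toy data has K = 1 feature and labels that no single stump can fit.

```python
import numpy as np

def stump_predict(X, k, thr, polarity):
    """Single-feature stump: polarity * (+1 if X[:, k] > thr else -1)."""
    return polarity * np.where(X[:, k] > thr, 1.0, -1.0)

def best_stump(X, y, w):
    """Try every feature, threshold and polarity; keep the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)
    for k in range(X.shape[1]):
        for thr in np.unique(X[:, k]):
            err = w[stump_predict(X, k, thr, 1) != y].sum()
            for polarity, e in ((1, err), (-1, 1.0 - err)):
                if e < best[0]:
                    best = (e, k, thr, polarity)
    return best

def boost_stumps(X, y, T):
    """Select T stumps, reweighting the data after each selection."""
    w = np.full(len(y), 1.0 / len(y))
    ensemble = []
    for _ in range(T):
        eps, k, thr, pol = best_stump(X, y, w)
        eps = np.clip(eps, 1e-12, 1 - 1e-12)     # guard against log(0)
        alpha = 0.5 * np.log((1 - eps) / eps)
        w = w * np.exp(-alpha * y * stump_predict(X, k, thr, pol))
        w /= w.sum()
        ensemble.append((alpha, k, thr, pol))
    return ensemble

def predict(ensemble, X):
    f = sum(a * stump_predict(X, k, thr, pol) for a, k, thr, pol in ensemble)
    return np.sign(f)

# Toy data: positives lie in an interval, so one stump is not enough.
X = np.arange(6.0).reshape(-1, 1)
y = np.array([-1, -1, +1, +1, -1, -1], dtype=float)
ensemble = boost_stumps(X, y, T=3)
train_acc = np.mean(predict(ensemble, X) == y)
```

Each round costs one pass over all K features and all candidate thresholds, which is why the slide calls the procedure very computationally intensive when K is around 160,000.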

Boosted Cascade of Stumps

•Reduction in Error as Boosting adds Classifiers

Boosted Cascade of Stumps

•First (i.e., best) two features learned by boosting

Boosted Cascade of Stumps

•Example training data

Boosted Cascade of Stumps

•To find faces, scan all squares at different scales, slow

•Boosting finds ordering on weak learners (best ones first)

•Idea: cascade stumps to avoid too much computation!
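The cascade idea can be sketched as an early-exit loop: a window is rejected at the first stage it fails, so most windows cost only one or two evaluations. The stage scoring functions and thresholds below are hypothetical stand-ins for boosted stump ensembles.

```python
def cascade_classify(window, stages):
    """Run stages in order; reject at the first stage whose score falls
    below its threshold. Returns (accepted, number of stages evaluated)."""
    for n_evaluated, (score_fn, threshold) in enumerate(stages, start=1):
        if score_fn(window) < threshold:
            return False, n_evaluated
    return True, len(stages)

# Hypothetical stages on a toy "window" given as a list of pixel values.
stages = [
    (lambda w: sum(w) / len(w), 0.2),   # cheap first test: mean intensity
    (lambda w: max(w) - min(w), 0.5),   # second test: contrast
    (lambda w: w[0] - w[-1], 0.0),      # third test: left minus right
]

dark = cascade_classify([0.0, 0.1, 0.0], stages)    # fails stage 1
flat = cascade_classify([0.4, 0.4, 0.4], stages)    # fails stage 2
face = cascade_classify([0.9, 0.5, 0.1], stages)    # passes all stages
```

Putting the cheapest and most discriminative tests first means the expensive later stages only run on the few windows that still look promising.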

Boosted Cascade of Stumps

•Training time = weeks (with 5k faces and 9.5k non-faces)

•Final detector has 38 layers in the cascade, 6060 features

•700 MHz processor:

•Can process a 384 x 288 image in 0.067 seconds (in 2003 when paper was written)

Boosted Cascade of Stumps

•Results