Data Normalization


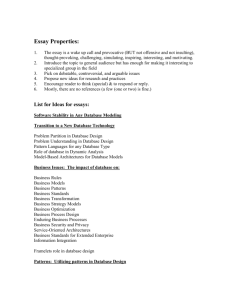

L10: DATA NORMALIZATION - LOGICAL DATABASE DESIGN

In this learning unit, you will learn about the process of data normalization, and how it can be used to transform existing data into an efficient logical model.

LEARNING OBJECTIVES

- Define data normalization
- Explain why data normalization is important
- Explain how normalization helps reduce redundancy and anomalies
- Solve data anomalies by transforming data from one normal form to the next, up to the third normal form
- Apply normalization with data modeling to produce good database design

This week we’ll explore more of the activities performed in logical design, namely Data Normalization. If you look at our methodology, you can see that we are still in the Design phase.

PART 1: DATA NORMALIZATION

WHAT IS DATA NORMALIZATION?

Data Normalization is a process which, when applied correctly to a data model, will produce an efficient logical model. By efficient logical model, we mean one with minimal data redundancy and high efficiency in terms of table design.

Webster defines normalization as “to make conform or to reduce to a form or standard.” This definition holds true for data normalization, too. Data Normalization is a means of making your data conform to a standard. It just so happens that the standard is optimized for efficient storage in relational tables! Highly efficient data models are minimally redundant, and vice versa. The bottom line here is: the more “efficiency” we add into the data model, the more tables we produce, the less chance data is repeated in rows of the table, and the greater the chance we don’t have unreliable or inconsistent data in those tables. (Okay Mike, take a breath!)

Let’s look at an example. This data model is inefficient because it contains redundant data: the values in the City and State columns. Redundancy leaves the door open to data inconsistency, as is the case with the attributes in bold italic.
It is obvious we meant “New York” and “IL”, and not “News York” and “iL”, but because our data model does not protect us from making this mistake, it could (and is bound to) happen.

An improved, “normalized” data model would look like the following:

And because of the PK and FK constraints on the logical model, it is impossible to have two different cities or states for the same zip code; hence we’ve reduced the chance for someone to introduce bad data! This is the modus operandi of data normalization: make more tables and FKs to eliminate the possibility of data redundancy and inconsistency!

WHERE DOES DATA NORMALIZATION FIT?

Where does data normalization fit within the logical model? Normalization serves three purposes. It is used to:

- Improve upon existing data models and data implementations. Take a poor database design and make it better. I normalize frequently just to correct problems with existing designs.
- Check to make sure your logical model is minimally redundant. You can run the normalization tests over your data model to ensure your logical model is efficient.
- “Reverse engineer” an external data model, such as a view or report, into its underlying table structure. I use normalization to estimate the internal data model of an application based on the external data model (the screens and reports of the application).

ANOTHER EXAMPLE, “DAVE D” STYLE

Here’s another example of normalization, taken from Professor Dave Dischiave. If you look at the following set of data, you’ll see where this is going. Let’s say you received the following employee data:

By inspecting the data in the above relation, we notice that the same data values, highlighted in yellow, live in the data set more than once. The Dept Name Marketing occurs twice, as does the title Manager. When data values live in the database more than once, we refer to this condition as data redundancy. If this were a set of 300,000 employees, you can imagine how ugly this problem could get.
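Spotting this kind of redundancy is easy to automate. The minimal Python sketch below counts how often each value appears in a column and reports the values stored more than once. It assumes rows are held as dictionaries; the employee rows and the helper name `redundant_values` are hypothetical, since the original figure's data is not reproduced here.

```python
from collections import Counter

# Hypothetical employee rows (not the original figure's data).
employees = [
    {"empNo": 245, "empName": "Ann B", "deptName": "Marketing", "title": "Manager"},
    {"empNo": 246, "empName": "Bob C", "deptName": "Marketing", "title": "Analyst"},
    {"empNo": 247, "empName": "Dan E", "deptName": "Sales", "title": "Manager"},
]

def redundant_values(rows, column):
    """Return {value: count} for each value stored more than once in column."""
    counts = Counter(row[column] for row in rows)
    return {value: n for value, n in counts.items() if n > 1}

for column in ("deptName", "title"):
    print(column, redundant_values(employees, column))
# deptName {'Marketing': 2}
# title {'Manager': 2}
```

Repetition by itself is not an error, but every repeated copy is one more place where a later insert or update can disagree with the others.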
Any new employee added to the set, or any change made to an existing employee, now has the opportunity to be added or changed inconsistently with data values already in the database. When these types of errors are introduced to the data, they are referred to as insertion or modification anomalies. Anomaly is another way of saying a deviation from an established rule, or in Dave D terms, an error.

Let’s insert a new row of data into the table above to prove our point: here we inserted a new employee 248, Carrie J, 10, marking, mg. We assigned her to the Marketing department, or did we? When we inspect the data, we find that the data is similar but unfortunately not the same. Is “marking” the same thing as Marketing? Is “mg” the same as Manager? If you use data with anomalies to make decisions about employees who work in the Marketing department or have the title of Manager, your results may be inaccurate. By inspecting the data visually you can work out what it means, but that doesn’t inspire confidence about what other data values may be incorrect as well.

If you reflect back to week one, we stated that in order for data to be useful for making decisions, data had to be ARTC. Here the A stands for accurate. If we run the risk of insertion or modification anomalies (i.e. errors), you can see how quickly the data can become inaccurate. I think you can now see why normalizing the data is important.

To normalize the data, that is, to reduce anomalies, we first need to reduce redundancy. So how is that done? To reduce redundancy, we apply the process of normalization: a set of steps called normal forms. (Normal form is also the name of the end state after each step has been applied.)

HOW DOES DATA NORMALIZATION WORK? THE NORMAL FORMS

The process of normalization involves checking for data dependencies among the data in the columns of your data model.
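Checking whether one column determines another can be done directly against existing rows: group the rows by the first column and see whether any group holds more than one value of the second. The minimal Python sketch below uses the Customer1 and Customer2 values described in Part 2 (the 102/“Sue” row is invented filler); the helper names `fd_violations` and `determines` are hypothetical.

```python
from collections import defaultdict

# Customer1: each id maps to one name (101 and 103 sharing "Tom" is fine).
# The 102/"Sue" row is hypothetical filler.
customer1 = [
    {"customerId": 101, "customerName": "Tom"},
    {"customerId": 102, "customerName": "Sue"},
    {"customerId": 103, "customerName": "Tom"},
]

# Customer2: ids 101 and 104 each map to two different names.
customer2 = [
    {"customerId": 101, "customerName": "Tom"},
    {"customerId": 101, "customerName": "Turk"},
    {"customerId": 104, "customerName": "Ted"},
    {"customerId": 104, "customerName": "Teddy"},
]

def fd_violations(rows, a, b):
    """Return {a_value: set_of_b_values} for every a-value paired with more
    than one b-value; an empty dict means a -> b holds in these rows."""
    images = defaultdict(set)
    for row in rows:
        images[row[a]].add(row[b])
    return {key: values for key, values in images.items() if len(values) > 1}

def determines(rows, a, b):
    """True if column a functionally determines column b in these rows."""
    return not fd_violations(rows, a, b)

print(determines(customer1, "customerId", "customerName"))  # True
print(determines(customer2, "customerId", "customerName"))  # False
print(determines(customer1, "customerName", "customerId"))  # False: "Tom" -> 101, 103
print(fd_violations(customer2, "customerId", "customerName"))
```

A sample can only refute a dependency; passing the check on today's rows does not prove the rule will hold for all future data, so the business rules remain the final word.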
Depending on the type of dependency, the model will be in a certain normal form, for example 1st, 2nd or 3rd normal form. Moving your data model from its current normal form to a higher normal form involves applying a normalization rule. The end product of a normalization rule is a larger number of tables (and foreign keys) than you started with. The higher the normal form, the more tables in your data model, the greater the efficiency, and the lower the chances for data redundancy and errors. The specific normal forms and rules are summarized below:

- 1st normal form: remove multi-valued attributes, so that there is a single value at the intersection of each row and column. This means eliminating repeating attributes or groups of repeating attributes. These are very common in many-to-many relationships.
- 2nd normal form: remove partial dependencies. Once in 1st normal form, eliminate attributes that are dependent on only part of the composite PK.
- 3rd normal form: remove transitive dependencies. From 2nd normal form, remove attributes that are dependent on non-PK attributes.
- Boyce-Codd normal form: remove remaining anomalies that result from functional dependencies.
- 4th normal form: remove multi-valued dependencies.
- 5th normal form: remove remaining anomalies (essentially a catchall).

As we review the above steps, we have to wonder: What do they actually mean? Are all six really necessary? Isn’t there an easier way to remove anomalies? Must they be processed in order? Before we answer these questions, let’s look at the normal forms pictorially and see how far we need to go with normalization.

PART 2: FUNCTIONAL DEPENDENCE

FUNCTIONAL DEPENDENCE

At its root, normalization is all about functional dependence, or the relationship between two sets of data (typically columns in the tables you plan to normalize).
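Stated formally in standard relational notation (the notation is not from the original unit), a functional dependency A → B holds in a table r exactly when any two rows that agree on the columns in A also agree on the columns in B:

$$
A \rightarrow B \iff \forall\, t_1, t_2 \in r:\; t_1[A] = t_2[A] \;\Rightarrow\; t_1[B] = t_2[B]
$$

Here A is the determinant and B the dependent. Either side may be a set of columns, which is why a composite key can act as a single determinant.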
Functional dependence says that for each distinct value in one column, say “column A”, there is one and only one value in another column, say “column B”. We say “the data in column B is functionally dependent on the data in column A”, or just “B is functionally dependent on A”. You can also say “A determines B”, since column A is known as the determinant.

Where does all this formal mumbo-jumbo come from? Well, in the world of mathematics, a function such as f(x) = 2x + 3 takes all values in its domain (in this case x ranges over all real numbers) and maps each of them to one and only one value in the range (in this case 2x + 3), so that for any given value of x, there is one and only one value of f(x). This, in my own words, is the formal definition of a function. Functional dependence works the same way, but uses columns in a table as the domain and range. The domain (column A) is the determinant.

Take the following example: when we say the data in the customer name column is functionally dependent on the customer id column, we’re saying that for each distinct customer id there is one and only one customer name. Don’t misinterpret what this says: it’s a-okay to have more than one customer id determine the same customer name (as is the case with customers 101 and 103, since they’re both “Tom”), but it’s not all right to have one customer id, such as 101, determine more than one name (Tom, Turk), or 104 determine both Ted and Teddy (as is the case with the items in red in the Customer2 table). So in table Customer1, Customer id determines Customer Name, but in table Customer2 this is not the case!

PRIME VS. NON-PRIME ATTRIBUTES

The very first step in the process of normalization is to establish a primary key from the existing columns in each table. At this stage they’re not called “primary keys” per se, but candidate keys.
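Whether a set of existing columns can serve as a candidate key can be checked against the data: the combined values must be unique across rows, and no smaller subset of those columns may already be unique. The minimal Python sketch below does this and then splits the columns into prime and non-prime attributes, as defined next. The Foo1 to Foo4 column names follow the figure referenced in this unit, but the row values, the `rowId` column and the function names are hypothetical. As with any data-based check, sample rows can rule a key out but cannot prove one.

```python
from itertools import combinations

# Hypothetical rows; only the column names come from the Foo example.
rows = [
    {"Foo1": 1, "Foo2": "a", "Foo3": "x", "Foo4": 10},
    {"Foo1": 1, "Foo2": "b", "Foo3": "y", "Foo4": 10},
    {"Foo1": 2, "Foo2": "a", "Foo3": "x", "Foo4": 20},
]

def is_unique(rows, cols):
    """True if no two rows share the same combination of values in cols."""
    combos = [tuple(row[c] for c in cols) for row in rows]
    return len(combos) == len(set(combos))

def is_candidate_key(rows, cols):
    """Unique, and minimal: no proper, non-empty subset of cols is unique."""
    if not is_unique(rows, cols):
        return False
    return not any(
        is_unique(rows, subset)
        for size in range(1, len(cols))
        for subset in combinations(cols, size)
    )

def classify(columns, key):
    """Split columns into prime (part of the key) and non-prime attributes."""
    prime = [c for c in columns if c in key]
    non_prime = [c for c in columns if c not in key]
    return prime, non_prime

print(is_candidate_key(rows, ("Foo1",)))                 # False: 1 repeats
print(is_candidate_key(rows, ("Foo1", "Foo3")))          # True
print(is_candidate_key(rows, ("Foo1", "Foo3", "Foo4")))  # False: not minimal
print(classify(list(rows[0]), ("Foo1", "Foo3")))
# (['Foo1', 'Foo3'], ['Foo2', 'Foo4'])

# A surrogate key is unique by construction, so it passes trivially,
# which is why it tells you nothing about the real dependencies.
with_ids = [dict(row, rowId=i) for i, row in enumerate(rows, start=1)]
print(is_candidate_key(with_ids, ("rowId",)))            # True
```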
This is a critical step, because if you create a surrogate key (think: int identity in T-SQL) for the table, the analysis of functional dependence becomes trivial, since every column in the table is functionally dependent on the surrogate key! The bottom line: if you need to use surrogate keys, be sure to add them after you’ve normalized, not before! You always normalize based on the existing data, and therefore must resist the urge to use surrogate keys!

Okay. Now we move to the definitions. Once you’ve established a candidate key, you can categorize the columns in each table accordingly:

- Prime attributes (a.k.a. key attributes): those columns which are the candidate key, or part of the candidate key (in the case of a composite key).
- Non-prime attributes (a.k.a. non-key attributes): those columns which are not part of the candidate key.

Example, from the figure below: Foo1 + Foo3 = key / prime; Foo2, Foo4 = non-key / non-prime.

PART 3: EXTENSIVE EVALUATION OF THE NORMAL FORMS

OVERVIEW

Normal forms are “states of being,” like sitting or standing. A data model is either “in” a given normal form or it isn’t. The normal form of a logical data model is determined by:

- Its current normal form
- The degree of functional dependence among the attributes in each table

ZERO NORMAL FORM 0NF (UN-NORMALIZED DATA)

Definitions are great and all, but sometimes they aren’t much help. Let’s look at a couple of examples to help clarify the normal forms. As we inspect the un-normalized Employee table below, what do we notice about the attributes and the data values? Two things immediately jump out at us: there is an obvious repeating group, and there is significant redundancy. So the risk of data anomalies is high.

The Bottom Line (0NF): The data’s so bad, you can’t establish a primary key to create entity integrity from the existing data! Ouch!
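Removing a repeating group is mechanical: each filled slot of the group becomes its own row, carrying the department columns along with it. The minimal Python sketch below assumes, hypothetically, that the group is stored as numbered column triples empNo1/empName1/title1 through empNo3/empName3/title3; the data and the function name `to_first_normal_form` are invented for illustration.

```python
# Hypothetical 0NF rows: one row per department, with a repeating group of
# up to three employee slots. Unused slots are simply absent.
departments_0nf = [
    {"deptNo": 10, "deptName": "Marketing",
     "empNo1": 245, "empName1": "Ann B", "title1": "Manager",
     "empNo2": 246, "empName2": "Bob C", "title2": "Analyst"},
    {"deptNo": 20, "deptName": "Sales",
     "empNo1": 246, "empName1": "Bob C", "title1": "Manager",
     "empNo2": 247, "empName2": "Dan E", "title2": "Clerk"},
]

GROUP_SIZE = 3  # the 0NF structure has room for three employees per department

def to_first_normal_form(rows, group_size=GROUP_SIZE):
    """Emit one row per (department, employee) pair, skipping empty slots."""
    flat = []
    for row in rows:
        for i in range(1, group_size + 1):
            emp_no = row.get(f"empNo{i}")
            if emp_no is None:
                continue  # this slot of the repeating group is unused
            flat.append({
                "deptNo": row["deptNo"],
                "deptName": row["deptName"],
                "empNo": emp_no,
                "empName": row.get(f"empName{i}"),
                "titleName": row.get(f"title{i}"),
            })
    return flat

employees_1nf = to_first_normal_form(departments_0nf)
for r in employees_1nf:
    print(r)
```

The result has more rows and more repeated department names, just as the 1NF discussion warns, but a fourth employee no longer needs new columns, and (deptNo, empNo) is unique across the output, so it can serve as the composite key.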
FIRST NORMAL FORM 1NF

Let’s take the table above and put it into first normal form by removing the repeating groups of attributes. The result looks like the table below. You might say that this is not much of an improvement. In fact, there is even more redundancy. Well yes, more redundancy, but now we can have more than three employees working in any department without changing the structure of the database. You’ll also notice that I had to create a composite PK in order to maintain entity integrity. When you have entity integrity, every other attribute is functionally dependent on the primary key.

The Bottom Line (1NF): At this point you can at least establish a candidate key among the existing data.

SECOND NORMAL FORM 2NF

Next, let’s take the table above from 1st normal form and remove the partial dependencies that exist; that is, let’s remove the attributes that are dependent on only part of the primary key. Here you can see that there are some logical associations: deptName is dependent on deptNo, and empName is dependent on empNo, so we can remove each set of these attributes and place them in their own entities. Because deptName needs only the deptNo for determination, we say there’s a partial (functional) dependency. Eliminating this partial dependency by creating an additional table improves the data design, since we’ve reduced the amount of redundant data.

The Bottom Line (2NF): At this point, you have a candidate key and no partial dependencies in the existing data. With each new table created, the data redundancy is reduced.

THIRD NORMAL FORM 3NF

Let’s take the tables above from 2nd normal form and remove the transitive dependencies; that is, let’s remove the attributes that are dependent only on non-primary-key attributes, or are not dependent on any attribute remaining in the table. Whatever is left is considered normalized to the 3rd normal form.
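The 2NF test from the previous section can be run against sample data: for each non-key column, check whether some proper part of the composite key already determines it. The minimal Python sketch below uses hypothetical 1NF rows keyed by (deptNo, empNo), with employee 246 appearing in two departments so that neither key column is unique on its own; the helper names are invented. A data-based check can flag a dependency that holds only by coincidence in the sample, so confirm its findings against the business rules.

```python
from itertools import combinations

# Hypothetical 1NF rows; (deptNo, empNo) is the composite key.
rows_1nf = [
    {"deptNo": 10, "empNo": 245, "deptName": "Marketing", "empName": "Ann B", "titleName": "Manager"},
    {"deptNo": 10, "empNo": 246, "deptName": "Marketing", "empName": "Bob C", "titleName": "Analyst"},
    {"deptNo": 20, "empNo": 246, "deptName": "Sales", "empName": "Bob C", "titleName": "Manager"},
    {"deptNo": 20, "empNo": 247, "deptName": "Sales", "empName": "Dan E", "titleName": "Clerk"},
]

def determines(rows, lhs, rhs):
    """True if the columns in lhs functionally determine column rhs here."""
    images = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        if images.setdefault(key, row[rhs]) != row[rhs]:
            return False
    return True

def partial_dependencies(rows, key):
    """Map each non-key column to the smallest proper part of the composite
    key that already determines it (a 2NF violation)."""
    found = {}
    for col in (c for c in rows[0] if c not in key):
        parts = (p for size in range(1, len(key)) for p in combinations(key, size))
        part = next((p for p in parts if determines(rows, p, col)), None)
        if part is not None:
            found[col] = part
    return found

print(partial_dependencies(rows_1nf, ("deptNo", "empNo")))
# {'deptName': ('deptNo',), 'empName': ('empNo',)}
```

With these rows the sketch flags deptName (determined by deptNo) and empName (determined by empNo), the two partial dependencies the 2NF step removes. titleName is not flagged, because in this sample it depends on neither deptNo nor empNo alone.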
Here we only have the titleName attribute remaining, and it is not dependent on either of the PK attributes, deptNo or empNo, so we remove titleName from the table above and place it in its own entity. But we still need a way to reference it. We need to invent a PK with unique values and then associate the titleNames with the employees in the Employee entity via a FK. The titleName is a transitive (functional) dependence. After 3rd normal form, our three new tables look like this:

You can see we’ve improved the design yet again by eliminating another layer of redundancy. You probably know the Title table as a lookup table. Lookup tables are a common practice for 3NF.

The Bottom Line (3NF): At this point, you have (1) a candidate key, (2) no partial dependencies and (3) no transitive dependencies in each table of existing data. Furthermore, this logical design is better than the original design, since we have reduced the amount of redundant data and minimized the possibility of inserting or updating bad data.

NOTE: See the Slides from this learning unit for more examples on the normal forms.
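The lookup-table step described for 3NF can be sketched in Python: collect the distinct titleName values, give each an invented integer titleNo, and replace the text column with that foreign key. The sketch also joins the pieces back together to confirm nothing was lost, and refuses a row whose titleNo has no match in the lookup table, which is the kind of protection that stops a value like “mg” from slipping in. The table contents, the titleNo 99 in the rejected row, and the names `extract_lookup`, `join_lookup` and `insert_row` are hypothetical; here the FK stays in the (deptNo, empNo) rows left after 2NF, though where it lives depends on your design.

```python
# Hypothetical rows left over after 2NF: (deptNo, empNo) is the composite key.
remaining = [
    {"deptNo": 10, "empNo": 245, "titleName": "Manager"},
    {"deptNo": 10, "empNo": 246, "titleName": "Analyst"},
    {"deptNo": 20, "empNo": 246, "titleName": "Manager"},
    {"deptNo": 20, "empNo": 247, "titleName": "Clerk"},
]

def extract_lookup(rows, column, key_name):
    """Move distinct values of `column` into a lookup table with invented
    integer keys (numbered in first-seen order) and swap in a foreign key."""
    keys = {}
    for row in rows:
        keys.setdefault(row[column], len(keys) + 1)
    lookup_table = [{key_name: k, column: v} for v, k in keys.items()]
    rewritten = [
        {**{c: v for c, v in row.items() if c != column}, key_name: keys[row[column]]}
        for row in rows
    ]
    return rewritten, lookup_table

def join_lookup(rows, lookup_table, column, key_name):
    """Inverse of extract_lookup: replace each foreign key with its value."""
    values = {entry[key_name]: entry[column] for entry in lookup_table}
    return [
        {**{c: v for c, v in row.items() if c != key_name}, column: values[row[key_name]]}
        for row in rows
    ]

def insert_row(rows, lookup_table, new_row, key_name):
    """Append new_row only if its foreign key exists in the lookup table."""
    if new_row[key_name] not in {entry[key_name] for entry in lookup_table}:
        raise ValueError(f"no {key_name} {new_row[key_name]!r} in lookup table")
    rows.append(new_row)

assignments, titles = extract_lookup(remaining, "titleName", "titleNo")
print(titles)
# [{'titleNo': 1, 'titleName': 'Manager'}, {'titleNo': 2, 'titleName': 'Analyst'}, {'titleNo': 3, 'titleName': 'Clerk'}]
print(join_lookup(assignments, titles, "titleName", "titleNo") == remaining)  # True

try:
    insert_row(assignments, titles, {"deptNo": 10, "empNo": 248, "titleNo": 99}, "titleNo")
except ValueError as err:
    print("rejected:", err)
```

Each title string is now stored once; every other row refers to it by number, so a misspelled title has nowhere to go except the lookup table itself.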