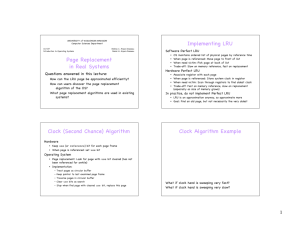

Differentiated Storage Services
Michael Mesnier, Jason Akers, Feng Chen (Intel Corporation)
Tian Luo (The Ohio State University)
23rd ACM Symposium on Operating Systems Principles (SOSP), October 23-26, 2011, Cascais, Portugal

Technology overview

An analogy: moving & shipping
 – Classification
 – Policy assignment
 – Policy enforcement
Why should computer storage be any different?

Differentiated Storage Services
[Figure: system architecture. In the computer system, applications or DB, the operating system, and the file system classify each I/O in-band (I/O classification, including an offline path). In the storage system, management firmware holds the QoS policies (policy assignment), and the storage controller's QoS mechanisms enforce them across Storage Pools A, B, and C (policy enforcement). Some components are marked as current & future research.]
Classifier      QoS policy
Metadata        Low latency
Boot files      Low latency
Small files     High throughput
Media files     High bandwidth
…               …

The SCSI CDB
The classifier travels in-band in the SCSI command descriptor block (CDB). It uses 5 bits, which allows 32 classes.

Motivation: disk caching with SSDs
Universal challenges in the industry
 – Keeping the right data cached
 – Avoiding thrashing under cache pressure
Conventional approaches
 – Cache bypass for large/sequential requests
 – Evict cold data (LRU is commonly used)
How I/O classification can help
 – Identify cacheable I/O classes
 – Assign relative caching priorities

Filesystem prototypes (Ext3 & NTFS)
[Figure: the same architecture, with an SSD caching a disk in the storage system. The file system classifies each I/O in-band (FS classification), management firmware maps each class to a cache priority (FS policy assignment), and the storage controller enforces it (FS policy enforcement).]
FS policy assignment (0 is the highest priority):
Classifier      Cache priority
Metadata        0
Journal         0
Directories     0
Files <= 4KB    1
Files <= 16KB   2
Files <= 64KB   3
…               …
Files > 1GB     Lowest

Database prototype (PostgreSQL)
The same three steps apply to a database. PostgreSQL classifies its own I/O (DB classification), each class is assigned a cache priority (DB policy assignment), and the storage system enforces it (DB policy enforcement).
DB policy assignment (0 is the highest priority):
Classifier                 Cache priority
System tables              0
Temp. tables (on write)    1
Random tables              2
Temp. tables (on read)     3
Sequential tables          Bypass
Index files                Bypass
[Figure: the same architecture as the filesystem prototype, with an SSD caching a disk. The storage controller's QoS mechanisms enforce the DB policies (DB policy enforcement).]

Selective cache algorithms
Selective allocation
 – Always allocate high-priority classes, e.g., FS metadata and DB system tables are always allocated
 – Conditionally allocate low-priority classes, depending on cache pressure, cache contents, etc.
 – The high/low cutoff is a tunable parameter
Selective eviction
 – Evict in priority order (lowest priority first), e.g., temporary DB tables are evicted before system tables
 – Trivially implemented by managing one LRU list per class

Technology development

Ext3 prototype
OS changes (block layer)
 – Add a classifier to I/O requests
 – Only coalesce requests of the same class
 – Copy the classifier into the SCSI CDB
Ext3 changes
 – 18 classes identified
 – Optimized for a file server (small files & metadata)
Cost: a small kernel patch, and a one-time change to the FS.

Ext3 class        Group number   Cache priority
Unclassified      0              12
Superblock        1              0
Group desc.       2              0
Bitmap            3              0
Inode             4              0
Indirect block    5              0
Directories       6              0
Journal           7              0
File <= 4KB       8              1
File <= 16KB      9              2
File <= 64KB      10             3
…                 …              …
File > 1GB        18             11

Ext3 classification illustrated
    echo 'Hello, world!' >> foo; sync
Resulting I/O, with the class (grp) carried in each command:
    READ_10(lba 231495 len 8 grp 9)      <=4KB
    WRITE_10(lba 231495 len 8 grp 9)     <=4KB
    WRITE_10(lba 16519223 len 8 grp 8)   Journal
    WRITE_10(lba 16519231 len 8 grp 8)   Journal
    WRITE_10(lba 16519239 len 8 grp 8)   Journal
    WRITE_10(lba 16519247 len 8 grp 8)   Journal
    WRITE_10(lba 8279 len 8 grp 5)       Inode
I/O classification exposes the read-modify-write and the metadata updates:
 – 7 I/Os (28KB) to write 13 bytes (7 I/Os × 8 blocks × 512 bytes = 28KB, assuming 512-byte blocks)
 – Metadata accounts for most of the overhead
NTFS classification is implemented with Windows filter drivers.

PostgreSQL prototype
Classification API: scatter/gather I/O. The first iovec element carries the 1-byte class, and the remaining elements carry the data:
    #include <fcntl.h>     /* open(); O_CLASSIFIED is added by the prototype's OS patch */
    #include <sys/uio.h>   /* struct iovec, writev() */

    struct iovec myiov[2];
    unsigned char class = 19;                /* transaction log (see DB classes) */
    int fd = open("foo", O_RDWR | O_CLASSIFIED, 0666);
    myiov[0].iov_base = &class;              /* element 0: 1-byte classifier */
    myiov[0].iov_len = 1;
    myiov[1].iov_base = "Hello, world!";     /* remaining elements: the data */
    myiov[1].iov_len = 13;
    writev(fd, myiov, 2);
OS changes (block layer)
 – Add the O_CLASSIFIED file flag
 – Extract the classifier from scatter/gather I/O
Cost: a small OS & DB patch, and a one-time change to the OS & DB.

Preliminary DB classes (these follow the Ext3 classes 0-18 in the same 32-class space)
PostgreSQL class    Group number
Unclassified        0
Transaction log     19
System table        20
Free space map      21
Temporary table     22
Random table        23
Sequential table    24
Index file          25
Reserved            26-31

Cache implementations
Fully associative read/write LRU cache
 – Insert(), Lookup(), Delete(), etc.
 – Hash table maps disk LBA to SSD LBA
 – Syncer daemon asynchronously cleans the cache
 – Monitors cache pressure for selective allocation
 – Maintains multiple LRU lists for selective eviction
Front-ends: iSCSI (OS independent) and Linux MD

MD cache module (RAID-9)
    Striping:  mdadm --create /dev/md0 --level=0 --raid-devices=2 /dev/sdd /dev/sde
    Mirroring: mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdd /dev/sde
    RAID-9:    mdadm --create /dev/md0 --level=9 --raid-devices=2 <cache> <base>
RAID-9 uses the same syntax as the standard levels, with the cache device and the base device as its two members. Level 9 is provided by the prototype's MD cache module, not by stock Linux MD.

Evaluation

Experimental setup
Host OS (Xeon, 2-way, quad-core, 12GB RAM)
 – Linux 2.6.34 (patched as described)
Target storage system
 – HW RAID array + X25-E SSD cache
Workloads and cache sizes
 – SPECsfs: 18GB cache (10% of a 184GB working set)
 – TPC-H: 8GB cache (28% of a 29GB working set)
Comparison
 – LRU versus LRU-S (LRU with selective caching)

SPECsfs I/O breakdown
[Figure: SPECsfs I/O breakdown, LRU vs. LRU-S]
 – With LRU, large files pollute the cache (metadata and small files are evicted)
 – LRU-S fences off large-file I/O

SPECsfs performance metrics
[Figure: panels for hit rate, running time, syncer overhead, and I/O throughput, comparing HDD, LRU, and LRU-S]
 – Running time: 1.8x speedup

SPECsfs file latencies
[Figure: reduction in write latency over HDD and reduction in read latency over HDD, LRU vs. LRU-S]
 – LRU suffers from write outliers (caused by eviction overhead)
 – LRU-S reduces read latency (most small files are cached)

TPC-H I/O breakdown
[Figure: TPC-H I/O breakdown, LRU vs. LRU-S]
 – With LRU, indexes pollute the cache (user tables are evicted)
 – LRU-S fences off index files

TPC-H performance metrics
[Figure: panels for hit rate, running time, syncer overhead, and I/O throughput, comparing HDD, LRU, and LRU-S]
 – Running time: 1.2x speedup

Conclusion & future work
Intelligent caching is just the beginning
 – Other types of performance differentiation
 – Security, reliability, retention, …
Other applications we're looking at
 – Databases
 – Hypervisors
 – Cloud storage
 – Big Data (NoSQL DB)
Work already underway in T10
Open source coming soon…

Thank you! Questions?