A. White's Standard Errors

advertisement

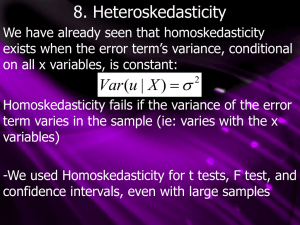

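OUTLIER, HETEROSKEDASTICITY, AND NORMALITY
• Robust Regression
• HAC Estimate of Standard Error
• Quantile Regression

Review
The general form of the multiple linear regression model is:

$$y_i = f(x_{i1}, x_{i2}, \ldots, x_{ik}) + u_i$$
$$y_i = \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_k x_{ik} + u_i, \qquad i = 1, \ldots, n$$

In summation form this is

$$y_i = \sum_{j=1}^{k} \beta_j x_{ij} + u_i,$$

or, in matrix form, $y = X\beta + u$, where

$$y = \begin{pmatrix} y_1 \\ \vdots \\ y_n \end{pmatrix}, \quad X = \begin{pmatrix} x_{11} & \cdots & x_{1k} \\ \vdots & \ddots & \vdots \\ x_{n1} & \cdots & x_{nk} \end{pmatrix}, \quad \beta = \begin{pmatrix} \beta_1 \\ \vdots \\ \beta_k \end{pmatrix}, \quad u = \begin{pmatrix} u_1 \\ \vdots \\ u_n \end{pmatrix}.$$

The first column of X is a column of ones, i.e. $(x_{11}, \ldots, x_{n1})^T = (1, \ldots, 1)^T$, so $\beta_1$ is the intercept.

Review: Problems with X
(i) Incorrect model, e.g. exclusion of relevant variables, inclusion of irrelevant variables, or incorrect functional form
(ii) There is high linear dependence between two or more explanatory variables
(iii) The explanatory variables and the disturbance term are correlated

Review: Problems with u
(i) The variance parameters in the variance-covariance matrix differ (heteroskedasticity)
(ii) The disturbance terms are correlated (autocorrelation)
(iii) The disturbances are not normally distributed

Problems with β
(i) Parameter consistency
(ii) Structural change

Robust regression analysis
• An alternative to least-squares regression when the nature of the data leaves its fundamental assumptions unfulfilled
• Resistant to the influence of outliers
• Deals with residual problems
• Available in Stata and EViews

Alternatives to OLS
• A. White's standard errors: OLS with a HAC estimate of the standard error
• B. Weighted least squares: robust regression
• C. Quantile regression: median regression; bootstrapping

OLS and Heteroskedasticity
• What are the implications of heteroskedasticity for OLS?
• Under the Gauss–Markov assumptions (including homoskedasticity), OLS is the Best Linear Unbiased Estimator (BLUE).
• Under heteroskedasticity, is OLS still unbiased? Yes: unbiasedness requires only $E[u \mid X] = 0$, not a constant error variance.
• Is OLS still best? No: it is no longer efficient, and the conventional variance formula $\sigma^2 (X^T X)^{-1}$ is wrong, so the usual standard errors, t-tests, and confidence intervals are unreliable.

A. Heteroskedasticity and Autocorrelation Consistent Variance Estimation
• The robust White variance estimator makes inference from the regression resistant to heteroskedasticity. It leaves the OLS coefficients unchanged and replaces $\sigma^2 (X^T X)^{-1}$ with the "sandwich"
$$\widehat{\operatorname{Var}}(\hat\beta) = (X^T X)^{-1} \Big( \sum_{i=1}^{n} \hat u_i^2 \, x_i x_i^T \Big) (X^T X)^{-1},$$
where $x_i^T$ is the i-th row of X and $\hat u_i$ is the i-th OLS residual. (White's estimator is robust to heteroskedasticity; autocorrelation-consistent versions, such as the Newey-West estimator, also allow for correlated errors.)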
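The robust White variance estimator can be sketched in a few lines of Python. This is a minimal sketch assuming NumPy is available; the function name `ols_with_white_se` and the simulated data are hypothetical illustrations, not taken from the source. It computes the basic HC0 form (no small-sample correction), which keeps the OLS coefficients and rebuilds their covariance from the squared residuals, and compares it with the classical standard errors.

```python
import numpy as np

def ols_with_white_se(X, y):
    """Return OLS coefficients with classical and White (HC0) standard errors.

    Hypothetical helper. X: (n, k) design matrix whose first column is ones;
    y: (n,) response vector.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ X.T @ y                 # beta_hat = (X'X)^{-1} X'y
    resid = y - X @ beta                     # OLS residuals u_hat_i
    # Classical covariance sigma^2 (X'X)^{-1}: assumes one common error variance
    sigma2 = resid @ resid / (n - k)
    se_classical = np.sqrt(np.diag(sigma2 * XtX_inv))
    # White (HC0) sandwich: (X'X)^{-1} [sum_i u_i^2 x_i x_i'] (X'X)^{-1}
    meat = (X * resid[:, None] ** 2).T @ X
    cov_white = XtX_inv @ meat @ XtX_inv
    se_white = np.sqrt(np.diag(cov_white))
    return beta, se_classical, se_white

# Hypothetical simulated data: the error s.d. grows with x (heteroskedastic)
rng = np.random.default_rng(0)
n = 500
x = rng.uniform(1.0, 10.0, size=n)
u = rng.normal(0.0, 0.5 * x)                 # s.d. proportional to x
y = 2.0 + 1.5 * x + u
X = np.column_stack([np.ones(n), x])

beta, se_c, se_w = ols_with_white_se(X, y)
print("beta_hat     :", beta.round(3))
print("classical SE :", se_c.round(3))
print("White SE     :", se_w.round(3))
```

The coefficient estimates are the same under both variance formulas; only the standard errors differ. In this design, where the error variance rises with x, the White slope SE would be expected to exceed the classical one. Libraries package the same idea; for example, statsmodels accepts `cov_type="HC0"` in `OLS(...).fit(...)`.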
• Halbert White (1980) showed that, asymptotically (in large samples), the sample sum of squared OLS residuals weighted by the cross-products of the regressors approximates its population counterpart under heteroskedasticity,
• and so yields a heteroskedasticity-consistent estimate of the variance of the coefficient estimates, and hence of their standard errors.

Quantile Regression
• Problem
– The distribution of Y, the "dependent" variable, conditional on the covariate X, may have thick tails.
– The conditional distribution of Y may be asymmetric.
– The conditional distribution of Y may not be unimodal.
Neither (mean) regression nor ANOVA will give robust results here: outliers are problematic, the mean is pulled toward the skewed tail, and multiple modes are not revealed.

Reasons to use quantiles rather than means
• Analysis of the distribution rather than the average
• Robustness
• Skewed data
• Interest in a representative value
• Interest in the tails of the distribution
• Unequal variation across samples
• E.g. the income distribution is highly skewed, so the median relates more to the typical person than the mean does.

Quantiles
• Cumulative distribution function (CDF): $F(y) = \Pr(Y \le y)$
• Quantile function: $Q(\tau) = \min\{y : F(y) \ge \tau\}$
• For a sample, both are discrete step functions.
[Figure: CDF and quantile function for a sample of n = 20]

Regression Line
[Figure: regression line]

The Perspective of Quantile Regression (QR)
Optimality criteria
• Linear absolute loss
• The mean optimizes $\min_{\mu} \sum_i (y_i - \mu)^2$
• Quantile τ optimizes $\min_{\xi} \sum_i e_i \, (\tau - I(e_i < 0))$, where $e_i = y_i - \xi$
• $I \in \{0, 1\}$ is the indicator function
For τ = 0.5 the loss $e_i(\tau - I(e_i < 0))$ equals $\tfrac{1}{2}|e_i|$, so the criterion is minimized by the median (median regression).

Quantile Regression: Absolute Loss vs. Quadratic Loss
[Figure: quadratic loss ("Quad") vs. the quantile loss for p = .5 and p = .7, over the range -2 to 2]

Simple Linear Regression: Food Expenditure vs. Income
[Figure: Engel's 1857 survey of 235 Belgian households]

Range of Quantiles
Change of slope at different quantiles?

Bootstrapping
• When the distributional assumptions of normality and homoskedasticity are violated, many researchers resort to nonparametric bootstrapping methods.

Bootstrap Confidence Limits
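The quantile function, the check-loss criterion, and the bootstrap confidence limits above can be tried out on a simple one-sample case. Below is a minimal sketch in Python (standard library only) under these assumptions: the helper names are hypothetical; the data are simulated lognormal "incomes", not Engel's survey; the quantile is fitted for a constant only (no covariates), which a brute-force search over the data points can solve, whereas quantile regression with covariates is normally solved by linear programming; and the interval is the simple percentile bootstrap, one of several bootstrap interval methods.

```python
import math
import random

def empirical_quantile(sample, tau):
    """Q(tau) = min{y : F_n(y) >= tau} for 0 < tau <= 1, F_n = empirical CDF.

    F_n jumps by 1/n at each order statistic, so the answer is the
    ceil(tau * n)-th smallest observation (1-based). Hypothetical helper.
    """
    ys = sorted(sample)
    m = max(math.ceil(tau * len(ys)), 1)
    return ys[m - 1]

def check_loss(e, tau):
    """Quantile ("check") loss rho_tau(e) = e * (tau - I(e < 0))."""
    return e * (tau - (1.0 if e < 0 else 0.0))

def argmin_check_loss(sample, tau):
    """Minimise sum_i rho_tau(y_i - xi) over a constant xi.

    The objective is convex and piecewise linear with kinks at the data,
    so a minimiser is always one of the observations; this brute-force
    O(n^2) search is for illustration only.
    """
    def objective(xi):
        return sum(check_loss(y - xi, tau) for y in sample)
    return min(sorted(sample), key=objective)

def bootstrap_ci(sample, stat, n_boot=2000, alpha=0.05, seed=0):
    """Nonparametric percentile bootstrap interval for stat(sample)."""
    rng = random.Random(seed)
    n = len(sample)
    # Resample n observations with replacement and recompute the statistic
    reps = [stat(rng.choices(sample, k=n)) for _ in range(n_boot)]
    return (empirical_quantile(reps, alpha / 2),
            empirical_quantile(reps, 1 - alpha / 2))

def median(s):
    return empirical_quantile(s, 0.5)

# Hypothetical simulated right-skewed "incomes" (lognormal)
gen = random.Random(42)
incomes = [gen.lognormvariate(10.0, 0.8) for _ in range(201)]

mean_income = sum(incomes) / len(incomes)
median_income = median(incomes)
print(f"mean   = {mean_income:,.0f}")   # pulled toward the long right tail
print(f"median = {median_income:,.0f}")
for tau in (0.1, 0.5, 0.9):
    # Quantile function and check-loss minimiser pick the same observation here
    print(tau, empirical_quantile(incomes, tau), argmin_check_loss(incomes, tau))
print("95% bootstrap CI for the median:", bootstrap_ci(incomes, median))
```

On the skewed sample the mean should lie above the median, echoing the income example. The two quantile routes agree because, with no covariates, the check-loss minimiser is the order statistic that $Q(\tau)$ selects.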