Modeling Consumer Decision Making and Discrete Choice Behavior


Part 2: Basic Econometrics [ 1/48]

Econometric Analysis of Panel Data

William Greene

Department of Economics

Stern School of Business


Trends in Econometrics

Small structural models vs. large scale multiple equation models

Non- and semiparametric methods vs. parametric

Robust methods – GMM (paradigm shift? Nobel prize)

Unit roots, cointegration and macroeconometrics

Nonlinear modeling and the role of software

Behavioral and structural modeling vs. “reduced form,” “covariance analysis”

Identification and “causal” effects

Pervasiveness of an econometrics paradigm


Estimation Platforms

Model based

Kernels and smoothing methods (nonparametric)

Semiparametric analysis

Parametric analysis

Moments and quantiles (semiparametric)

Likelihood and M-estimators (parametric)

Methodology based (?)

Classical – parametric and semiparametric

Bayesian – strongly parametric


Objectives in Model Building

Specification: guided by underlying theory

Estimation: coefficients, partial effects, model implications

Statistical inference: hypothesis testing

Prediction: individual and aggregate

Model assessment (fit, adequacy) and evaluation

Model extensions

Modeling framework

Functional forms

Interdependencies, multiple part models

Heterogeneity

Endogeneity

Exploration: Estimation and inference methods


Regression Basics

The “MODEL”

Modeling the conditional mean – Regression

Other features of interest

Modeling quantiles

Conditional variances or covariances

Modeling probabilities for discrete choice

Modeling other features of the population

Part 2: Basic Econometrics [ 8/48]

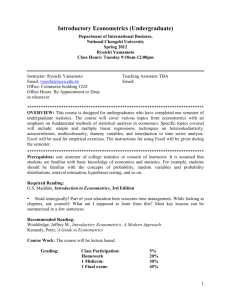

Application: Health Care

German Health Care Usage Data, 7,293 Individuals, Varying Numbers of Periods

Data downloaded from the Journal of Applied Econometrics Archive. They can be used for regression, count models, binary choice, ordered choice, and bivariate binary choice. There are altogether 27,326 observations. The number of observations per individual ranges from 1 to 7. (Frequencies are: 1=1525, 2=2158, 3=825, 4=926, 5=1051, 6=1000, 7=987.)

Variables in the file are

DOCTOR = 1(Number of doctor visits > 0)
HOSPITAL = 1(Number of hospital visits > 0)
HSAT = health satisfaction, coded 0 (low) - 10 (high)
DOCVIS = number of doctor visits in last three months
HOSPVIS = number of hospital visits in last calendar year
PUBLIC = insured in public health insurance = 1; otherwise = 0
ADDON = insured by add-on insurance = 1; otherwise = 0
HHNINC = household nominal monthly net income in German marks / 10000 (4 observations with income = 0 were dropped)
HHKIDS = children under age 16 in the household = 1; otherwise = 0
EDUC = years of schooling
AGE = age in years
MARRIED = marital status

Unobserved factors are “controlled for.”

Household Income

[Figure: kernel density estimator and histogram of household income]
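The figure pairs a kernel density estimator with a histogram. As a minimal sketch, assuming a Gaussian kernel and Silverman's rule-of-thumb bandwidth (our choices; the slide does not say which kernel or bandwidth was used), the estimator f̂(t) = (1/(Nh)) Σ φ((t − x_i)/h) can be written in plain Python. The incomes below are simulated lognormal draws with roughly the LOGINC mean and standard deviation reported in the regression output that follows; they are hypothetical, not the German health care data.

```python
import math
import random


def silverman_bandwidth(xs):
    """Rule-of-thumb bandwidth 0.9 * min(sd, IQR/1.34) * n**(-1/5)."""
    n = len(xs)
    mean = sum(xs) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))
    s = sorted(xs)
    iqr = s[int(0.75 * (n - 1))] - s[int(0.25 * (n - 1))]  # crude sample quartiles
    spread = min(sd, iqr / 1.34) if iqr > 0 else sd
    return 0.9 * spread * n ** (-0.2)


def gaussian_kde(xs, h=None):
    """Return f_hat(t) = (1/(n*h)) * sum_i phi((t - x_i) / h)."""
    h = h if h is not None else silverman_bandwidth(xs)
    n = len(xs)
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))

    def f_hat(t):
        return norm * sum(math.exp(-0.5 * ((t - x) / h) ** 2) for x in xs)

    return f_hat


# Hypothetical incomes: lognormal draws, NOT the German health care data
random.seed(1)
incomes = [math.exp(random.gauss(-0.93, 0.48)) for _ in range(887)]
f = gaussian_kde(incomes)

step = 0.01
grid = [i * step for i in range(301)]            # 0.00, 0.01, ..., 3.00
area = sum(f(t) for t in grid) * step            # Riemann sum; should be close to 1
print(f"bandwidth = {silverman_bandwidth(incomes):.4f}, area = {area:.4f}")
```

A histogram is the special case of a uniform kernel evaluated at fixed bin centers; the smooth kernel removes the dependence on bin edges.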


Regression – Income on Education

----------------------------------------------------------------------
Ordinary least squares regression ............
LHS=LOGINC   Mean                 =     -.92882
             Standard deviation   =      .47948
             Number of observs.   =         887
Model size   Parameters           =           2
             Degrees of freedom   =         885
Residuals    Sum of squares       =   183.19359
             Standard error of e  =      .45497
Fit          R-squared            =      .10064
             Adjusted R-squared   =      .09962
Model test   F[  1,   885] (prob) =   99.0(.0000)
Diagnostic   Log likelihood       =  -559.06527
             Restricted(b=0)      =  -606.10609
             Chi-sq [  1]  (prob) =   94.1(.0000)
Info criter. LogAmemiya Prd. Crt. =    -1.57279
--------+-------------------------------------------------------------
Variable| Coefficient    Standard Error  b/St.Er.  P[|Z|>z]   Mean of X
--------+-------------------------------------------------------------
Constant|  -1.71604***       .08057      -21.299     .0000
    EDUC|    .07176***       .00721        9.951     .0000     10.9707
--------+-------------------------------------------------------------
Note: ***, **, * = Significance at 1%, 5%, 10% level.
----------------------------------------------------------------------
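The fit statistics in these outputs are tied together by simple formulas. The sketch below, which assumes only the printed SSR, N, K and the standard deviation of LOGINC, rebuilds R², adjusted R², s_e, F and both log likelihoods. The log likelihoods use logL = −(N/2)[1 + log 2π + log(e′e/N)], the normal log likelihood at least squares that reappears on the Likelihood Ratio Test slide. Small discrepancies (for example in the restricted log likelihood) come from rounding in the printed standard deviation.

```python
import math


def ols_diagnostics(ssr, n, k, sd_y):
    """Rebuild fit statistics from SSR, N, K and the standard deviation of y."""
    sst = (n - 1) * sd_y ** 2                  # total sum of squares about the mean
    r2 = 1.0 - ssr / sst
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k)
    s_e = math.sqrt(ssr / (n - k))             # "Standard error of e"
    f_stat = (r2 / (k - 1)) / ((1.0 - r2) / (n - k))

    def loglik(ss):
        # Normal log likelihood at least squares, with sigma^2 = ss / n
        return -(n / 2.0) * (1.0 + math.log(2.0 * math.pi) + math.log(ss / n))

    logl, logl0 = loglik(ssr), loglik(sst)
    return {"R2": r2, "AdjR2": adj_r2, "s_e": s_e, "F": f_stat,
            "logL": logl, "logL0": logl0, "ChiSq": 2.0 * (logl - logl0)}


# (SSR, K) printed in the three LOGINC regressions; N = 887, sd(LOGINC) = .47948
runs = {"EDUC": (183.19359, 2),
        "EDUC+FEMALE": (183.00347, 3),
        "EDUC+FEMALE+AGE+AGE2": (171.87964, 5)}
results = {name: ols_diagnostics(ssr, 887, k, 0.47948) for name, (ssr, k) in runs.items()}
for name, d in results.items():
    print(name, {key: round(val, 5) for key, val in d.items()})
```

The "Restricted(b=0)" log likelihood is the same for all three regressions because it depends only on the total sum of squares of LOGINC.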

Specification and Functional Form

----------------------------------------------------------------------
Ordinary least squares regression ............
LHS=LOGINC   Mean                 =     -.92882
             Standard deviation   =      .47948
             Number of observs.   =         887
Model size   Parameters           =           3
             Degrees of freedom   =         884
Residuals    Sum of squares       =   183.00347
             Standard error of e  =      .45499
Fit          R-squared            =      .10157
             Adjusted R-squared   =      .09954
Model test   F[  2,   884] (prob) =   50.0(.0000)
Diagnostic   Log likelihood       =  -558.60477
             Restricted(b=0)      =  -606.10609
             Chi-sq [  2]  (prob) =   95.0(.0000)
Info criter. LogAmemiya Prd. Crt. =    -1.57158
--------+-------------------------------------------------------------
Variable| Coefficient    Standard Error  b/St.Er.  P[|Z|>z]   Mean of X
--------+-------------------------------------------------------------
Constant|  -1.68303***       .08763      -19.207     .0000
    EDUC|    .06993***       .00746        9.375     .0000     10.9707
  FEMALE|   -.03065          .03199        -.958     .3379      .42277
--------+-------------------------------------------------------------

Interesting Partial Effects

----------------------------------------------------------------------
Ordinary least squares regression ............
LHS=LOGINC   Mean                 =     -.92882
             Standard deviation   =      .47948
             Number of observs.   =         887
Model size   Parameters           =           5
             Degrees of freedom   =         882
Residuals    Sum of squares       =   171.87964
             Standard error of e  =      .44145
Fit          R-squared            =      .15618
             Adjusted R-squared   =      .15235
Model test   F[  4,   882] (prob) =   40.8(.0000)
Diagnostic   Log likelihood       =  -530.79258
             Restricted(b=0)      =  -606.10609
             Chi-sq [  4]  (prob) =  150.6(.0000)
Info criter. LogAmemiya Prd. Crt. =    -1.62978
--------+-------------------------------------------------------------
Variable| Coefficient    Standard Error  b/St.Er.  P[|Z|>z]   Mean of X
--------+-------------------------------------------------------------
Constant|  -5.26676***       .56499       -9.322     .0000
    EDUC|    .06469***       .00730        8.860     .0000     10.9707
  FEMALE|   -.03683          .03134       -1.175     .2399      .42277
     AGE|    .15567***       .02297        6.777     .0000     50.4780
    AGE2|   -.00161***       .00023       -7.014     .0000     2620.79
--------+-------------------------------------------------------------

$\partial E[\log \text{Income} \mid \mathbf{x}]/\partial \text{Age} = \beta_{Age} + 2\beta_{Age2}\,\text{Age}$
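Because AGE enters as a quadratic, its effect on expected log income changes with age. The short sketch below uses the printed (rounded) coefficients to evaluate the partial effect at a few ages and to locate the turning point −β_Age/(2β_Age2), about 48.3 years; 50.478 is the sample mean of AGE from the same output. Since the dependent variable is log income, each value is approximately the proportional change in income per additional year of age.

```python
# Rounded coefficients from the LOGINC regression with AGE and AGE2
B_AGE, B_AGE2 = 0.15567, -0.00161
MEAN_AGE = 50.4780                      # "Mean of X" for AGE in the same output


def pe_age(age):
    """d E[log income | x] / d Age = b_Age + 2 * b_Age2 * Age."""
    return B_AGE + 2.0 * B_AGE2 * age


turning_point = -B_AGE / (2.0 * B_AGE2)     # age at which the partial effect is zero

for age in (30.0, 40.0, turning_point, MEAN_AGE, 60.0):
    print(f"age {age:6.2f}: partial effect {pe_age(age):+.5f}")
```

Note that the partial effect at the mean age is small and negative even though the AGE coefficient itself is large and positive: the coefficient alone is not the partial effect.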

[Figure panels: “Function: Log Income | Age” and “Partial Effect wrt Age”]


A Statistical Relationship

A relationship of interest:

Number of hospital visits: H = 0,1,2,…

Covariates: x1=Age, x2=Sex, x3=Income, x4=Health

Causality and covariation

Theoretical implications of ‘causation’

Comovement and association

Intervention of omitted or ‘latent’ variables

Temporal relationship – movement of the “causal variable” precedes the effect.


Endogeneity

A relationship of interest:

Number of hospital visits: H = 0,1,2,…

Covariates: x1=Age, x2=Sex, x3=Income, x4=Health

Should Health be ‘Endogenous’ in this model?

What do we mean by ‘Endogenous’?

What is an appropriate econometric method of accommodating endogeneity?


Models

Conditional mean function: E[y | x]

Other conditional characteristics – what is ‘the model?’

Conditional variance function: Var[y | x]

Conditional quantiles, e.g., median [y | x]

Other conditional moments

Conditional probabilities: P(y|x)

What is the sense in which “y varies with x?”


Using the Model

Understanding the relationship:

Estimation of quantities of interest such as elasticities

Prediction of the outcome of interest

Control of the path of the outcome of interest


Application: Doctor Visits

German individual health care data: N=27,326

Model for number of visits to the doctor:

Poisson regression (fit by maximum likelihood)

E[V|Income]=exp(1.412 - .0745 income)

OLS Linear regression: g*(Income)=3.917 - .208 income

[Figure: histogram for number of doctor visits, DOCVIS; vertical axis: frequency]
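The Poisson model on this slide is fit by maximum likelihood. One way to do that, sketched below, is Newton-Raphson on the Poisson log likelihood Σ[y_i(α + βx_i) − exp(α + βx_i)] (the constant −Σ log y_i! is dropped), with step halving as a safeguard. This is an illustration under stated assumptions, not necessarily how the slide's software computes its estimates: the data are simulated from the slide's estimates (α = 1.412, β = −.0745) with hypothetical incomes drawn uniformly on [0, 20], and the seed and helper names are our own.

```python
import math
import random


def poisson_draw(lam, rng):
    """Knuth's multiplication method; fine for the small means used here."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def poisson_loglik(a, b, x, y):
    # Poisson log likelihood without the constant -sum(log y_i!)
    return sum(yi * (a + b * xi) - math.exp(a + b * xi) for xi, yi in zip(x, y))


def fit_poisson(x, y, tol=1e-10, max_iter=100):
    """Newton-Raphson for E[y|x] = exp(a + b*x), started at a = log(ybar), b = 0."""
    a, b = math.log(sum(y) / len(y)), 0.0
    ll = poisson_loglik(a, b, x, y)
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            lam = math.exp(a + b * xi)
            g0 += yi - lam                   # score for a
            g1 += (yi - lam) * xi            # score for b
            h00 += lam                       # elements of minus the Hessian
            h01 += lam * xi
            h11 += lam * xi * xi
        det = h00 * h11 - h01 * h01
        da = (h11 * g0 - h01 * g1) / det     # Newton step (-H)^{-1} g
        db = (h00 * g1 - h01 * g0) / det
        step = 1.0
        new_ll = poisson_loglik(a + da, b + db, x, y)
        while new_ll < ll and step > 1e-8:   # halve the step until logL improves
            step /= 2.0
            new_ll = poisson_loglik(a + step * da, b + step * db, x, y)
        a, b, ll = a + step * da, b + step * db, new_ll
        if abs(step * da) + abs(step * db) < tol:
            break
    return a, b


rng = random.Random(12345)
income = [rng.uniform(0.0, 20.0) for _ in range(5000)]          # hypothetical
visits = [poisson_draw(math.exp(1.412 - 0.0745 * z), rng) for z in income]
a_hat, b_hat = fit_poisson(income, visits)
print(f"alpha_hat = {a_hat:.4f}, beta_hat = {b_hat:.4f}")
```

The Poisson log likelihood is globally concave in (α, β), so Newton's method converges quickly here; the step halving only guards against an overshooting first step.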


Conditional Mean and Linear Projection

[Figure: “Projection and Conditional Mean Functions”, CONDMEAN and PROJECTN plotted against INCOME (0 to 20). Annotations: “Most of the data are in here” and “This area is outside the range of the data.”]

Notice the problem with the linear projection: negative predictions. With g*(Income) = 3.917 - .208 income, the predicted number of doctor visits is negative for incomes above 3.917/.208 ≈ 18.8.
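The two fitted functions from the Doctor Visits application make the point concrete. This small sketch evaluates the Poisson conditional mean and the OLS linear projection at a few incomes and computes where the projection reaches zero; the conditional mean stays positive everywhere.

```python
import math


def cond_mean(income):
    """Fitted Poisson conditional mean, E[V|income] = exp(1.412 - .0745 income)."""
    return math.exp(1.412 - 0.0745 * income)


def projection(income):
    """Fitted linear projection, g*(income) = 3.917 - .208 income."""
    return 3.917 - 0.208 * income


zero_point = 3.917 / 0.208   # income at which the projection reaches zero

for inc in (0.0, 5.0, 10.0, 15.0, zero_point, 20.0):
    print(f"income {inc:6.2f}: E[V|income] = {cond_mean(inc):6.3f}, "
          f"projection = {projection(inc):+7.3f}")
```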


What About the Linear Projection?

What we do when we linearly regress a variable

on a set of variables

Assuming there exists a conditional mean

There usually exists a linear projection. Requires

finite conditional variance of y.

Approximation to the conditional mean?

If the conditional mean is linear

Linear projection equals the conditional mean

Partial Effects

What did the model tell us?

Covariation and partial effects: How does y “vary” with x?

Partial Effects: Effect on what?????

For continuous variables

$\delta(\mathbf{x}) = \partial E[y|\mathbf{x}]/\partial\mathbf{x}$, usually not the coefficients

For dummy variables

$\Delta(\mathbf{x},d) = E[y|\mathbf{x},d=1] - E[y|\mathbf{x},d=0]$

Elasticities: $\varepsilon(\mathbf{x}) = \delta(\mathbf{x})\,\mathbf{x}/E[y|\mathbf{x}]$

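Average Partial Effects

When $\delta(\mathbf{x}) \neq \boldsymbol\beta$, $\text{APE} = E_{\mathbf{x}}[\delta(\mathbf{x})] = \int_{\mathbf{x}} \delta(\mathbf{x}) f(\mathbf{x})\,d\mathbf{x}$

Approximation: Is $\delta(E[\mathbf{x}]) = E_{\mathbf{x}}[\delta(\mathbf{x})]$? (no)

Empirically: Estimated $\text{APE} = (1/N)\sum_{i=1}^N \hat\delta(\mathbf{x}_i)$

Empirical approximation: $\text{Est.APE} = \hat\delta(\bar{\mathbf{x}})$

For the doctor visits model

$\delta(x) = \beta\exp(\alpha+\beta x) = -.0745\exp(1.412 - .0745\,\text{income})$

Sample APE = -.2373

Approximation = -.2354

Slope of the linear projection = -.2083 (!)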
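Using the doctor visits estimates, the sketch below compares the sample APE, the partial effect at the mean, and the second-order correction δ(μ) + ½δ″(μ)σ²_x, where δ″(x) = β²δ(x) for this model. The incomes are hypothetical gamma draws with mean 3.4, not the German data, so the output will not reproduce −.2373 and −.2354. Because δ(x) = β exp(α + βx) is concave when β < 0, Jensen's inequality gives APE ≤ δ(E[x]), the same ordering as the two values on the slide.

```python
import math
import random

ALPHA, BETA = 1.412, -0.0745   # Poisson estimates from the doctor visits slide


def delta(x):
    """Partial effect of income on E[V|income] = exp(alpha + beta*x)."""
    return BETA * math.exp(ALPHA + BETA * x)


def ape(xs):
    """Sample average partial effect, (1/N) sum_i delta(x_i)."""
    return sum(delta(x) for x in xs) / len(xs)


def pe_at_mean(xs):
    """Partial effect evaluated at the sample mean of x."""
    return delta(sum(xs) / len(xs))


def pe_second_order(xs):
    """delta(mu) + 0.5 * delta''(mu) * var(x), using delta'' = beta^2 * delta."""
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n
    return delta(mu) + 0.5 * BETA ** 2 * delta(mu) * var


random.seed(7)
incomes = [random.gammavariate(2.0, 1.7) for _ in range(10000)]   # hypothetical
print(f"APE = {ape(incomes):.4f}, PE at mean = {pe_at_mean(incomes):.4f}, "
      f"second order = {pe_second_order(incomes):.4f}")
```

The second-order term captures most of the gap between the two first-order quantities when β is small, which is why the slide's approximation is close to the sample APE.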

APE and PE at the Mean

$\delta(x) = \partial E[y|x]/\partial x$, $\mu = E[x]$

$\delta(x) \approx \delta(\mu) + \delta'(\mu)(x-\mu) + (1/2)\delta''(\mu)(x-\mu)^2 + \dots$

$E[\delta(x)] = \text{APE} \approx \delta(\mu) + (1/2)\delta''(\mu)\sigma_x^2$

Implication: Computing the APE by averaging over observations (and counting on the LLN and the Slutsky theorem) vs. computing partial effects at the means of the data.

In the earlier example: Sample APE = -.2373

Approximation = -.2354

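The Canonical Panel Data Problem

Outcome y, observed covariates x, and c, where c is unobserved individual heterogeneity

How do we estimate partial effects in the presence of c?

$\text{PE}(\mathbf{x}) = E_c[\delta(\mathbf{x},c)] = E_c\{\partial E[y|\mathbf{x},c]/\partial\mathbf{x}\}$

$\text{APE} = E_{\mathbf{x}}E_c\{\partial E[y|\mathbf{x},c]/\partial\mathbf{x}\}$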
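To illustrate PE(x) = E_c δ(x,c), the sketch below assumes a hypothetical exponential mean E[y|x,c] = exp(α + βx + c) with c ~ N(0, σ²) independent of x; the model and all parameter values are ours, not from the slides. It averages δ(x,c) over simulated draws of c and compares the result with the closed form β exp(α + βx + σ²/2). Evaluating δ at c = 0, the mean of c, understates the magnitude of the effect, much as a partial effect at the means differs from the APE.

```python
import math
import random

# Hypothetical model, not taken from the slides:
#   E[y | x, c] = exp(ALPHA + BETA * x + c),  c ~ N(0, SIGMA^2) independent of x
ALPHA, BETA, SIGMA = 0.5, -0.2, 0.6


def delta(x, c):
    """Partial effect dE[y|x,c]/dx at a given value of c."""
    return BETA * math.exp(ALPHA + BETA * x + c)


def pe_simulated(x, c_draws):
    """PE(x) = E_c[delta(x, c)], approximated by averaging over draws of c."""
    return sum(delta(x, c) for c in c_draws) / len(c_draws)


def pe_exact(x):
    """Closed form for this model, using E[exp(c)] = exp(SIGMA^2 / 2)."""
    return BETA * math.exp(ALPHA + BETA * x + 0.5 * SIGMA ** 2)


rng = random.Random(2024)
c_draws = [rng.gauss(0.0, SIGMA) for _ in range(200_000)]
x_sample = [rng.uniform(0.0, 5.0) for _ in range(1_000)]    # hypothetical x data

for x in (0.0, 2.5, 5.0):
    print(f"x={x}: simulated {pe_simulated(x, c_draws):.4f}, "
          f"exact {pe_exact(x):.4f}, at c=0 {delta(x, 0.0):.4f}")

# APE = E_x E_c[delta(x, c)]: average the c-integrated effect over the x sample
ape = sum(pe_exact(x) for x in x_sample) / len(x_sample)
print(f"APE = {ape:.4f}")
```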

The Linear Regression Model

$\mathbf{y} = \mathbf{X}\boldsymbol\beta + \boldsymbol\varepsilon$, N observations, K columns in X, including a column of ones.

Standard assumptions about X

Standard assumptions about ε|X

E[ε|X]=0, E[ε]=0 and Cov[ε,x]=0

Regression?

If $E[\mathbf{y}|\mathbf{X}] = \mathbf{X}\boldsymbol\beta$, then $\mathbf{X}\boldsymbol\beta$ is the projection of y on X

Estimation of the Parameters

Least squares, LAD, other estimators – we will focus on least squares

$\mathbf{b} = (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{y}$

$s^2 = \mathbf{e}'\mathbf{e}/N$ or $\mathbf{e}'\mathbf{e}/(N-K)$
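A minimal plain-Python sketch of least squares: it computes b = (X′X)^{-1}X′y, both variance estimators e′e/N and e′e/(N − K), and standard errors from the diagonal of s²(X′X)^{-1} (the estimated asymptotic covariance matrix in the Least Squares Summary). The small matrix helpers, including a Gauss-Jordan inverse, stand in for a linear algebra library and are only suitable for small, well-conditioned problems; the data are made up for illustration.

```python
import math


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (small, well-conditioned matrices)."""
    n = len(a)
    m = [[float(v) for v in row] + [float(i == j) for j in range(n)]
         for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        piv = m[c][c]
        m[c] = [v / piv for v in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [v - f * w for v, w in zip(m[r], m[c])]
    return [row[n:] for row in m]


def ols(X, y):
    """Least squares: b = (X'X)^{-1} X'y, plus e, e'e/N, s^2 = e'e/(N-K) and std. errors."""
    n, k = len(X), len(X[0])
    Xt = transpose(X)
    XtX_inv = inverse(matmul(Xt, X))
    Xty = [[sum(xij * yi for xij, yi in zip(col, y))] for col in Xt]   # K x 1
    b = [row[0] for row in matmul(XtX_inv, Xty)]
    e = [yi - sum(bj * xij for bj, xij in zip(b, xi)) for xi, yi in zip(X, y)]
    ee = sum(v * v for v in e)
    s2_ml, s2 = ee / n, ee / (n - k)
    # Estimated Asy.Var[b] = s^2 (X'X)^{-1}; standard errors from its diagonal
    se = [math.sqrt(s2 * XtX_inv[j][j]) for j in range(k)]
    return b, e, s2_ml, s2, se


# Small illustrative data set (hypothetical numbers)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]
X = [[1.0, x] for x in xs]            # column of ones plus one regressor
b, e, s2_ml, s2, se = ols(X, y)
print("b =", [round(v, 4) for v in b], " s^2 =", round(s2, 5),
      " se =", [round(v, 4) for v in se])
```

Because X contains a column of ones, the residuals sum to zero and are orthogonal to every column of X, which is a quick check on any least squares routine.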

Classical vs. Bayesian estimation of $\boldsymbol\beta$

Properties

Statistical inference: Hypothesis tests

Prediction (not this course)

Properties of Least Squares

Finite sample properties: Unbiased, etc. No longer interested in these.

Asymptotic properties

Consistent? Under what assumptions?

Efficient?

Contemporary work: Often not important

Efficiency within a class: GMM

Asymptotically normal: How is this established?

Robust estimation: To be considered later

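Least Squares Summary

$\hat{\boldsymbol\beta} = \mathbf{b}$, $\operatorname{plim}\mathbf{b} = \boldsymbol\beta$

$\sqrt{N}(\mathbf{b}-\boldsymbol\beta) \xrightarrow{d} N\{\mathbf{0}, \sigma^2[\operatorname{plim}(\mathbf{X}'\mathbf{X}/N)]^{-1}\}$

$\mathbf{b} \overset{a}{\sim} N\{\boldsymbol\beta, (\sigma^2/N)[\operatorname{plim}(\mathbf{X}'\mathbf{X}/N)]^{-1}\}$

$\text{Estimated Asy.Var}[\mathbf{b}] = s^2(\mathbf{X}'\mathbf{X})^{-1}$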


Hypothesis Testing

Nested vs. nonnested tests

y=b1x+e vs. y=b1x+b2z+e: Nested

y=bx+e vs. y=cz+u: Not nested

y=bx+e vs. log y = c log x + u: Not nested

y=bx+e; e ~ Normal vs. e ~ t[.]: Not nested

Fixed vs. random effects: Not nested

Logit vs. probit: Not nested

x is endogenous: Maybe nested. We’ll see …

Parametric restrictions

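Linear: $\mathbf{R}\boldsymbol\beta - \mathbf{q} = \mathbf{0}$, R is J×K, J < K, full row rank

General: $\mathbf{r}(\boldsymbol\beta,\mathbf{q}) = \mathbf{0}$, r = a vector of J functions, $\mathbf{R}(\boldsymbol\beta,\mathbf{q}) = \partial\mathbf{r}(\boldsymbol\beta,\mathbf{q})/\partial\boldsymbol\beta'$

Use $\mathbf{r}(\boldsymbol\beta,\mathbf{q}) = \mathbf{0}$ for linear and nonlinear cases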

Example: Panel Data on Spanish Dairy Farms

N = 247 farms, T = 6 years (1993-1998)

Variable       Units                                  Mean  Std. Dev.    Minimum    Maximum
Output: Milk   Milk production (liters)            131,107     92,584     14,410    727,281
Input: Cows    # of milking cows                     22.12      11.27        4.5       82.3
Input: Labor   # man-equivalent units                 1.67       0.55        1.0        4.0
Input: Land    Hectares of land devoted to           12.99       6.17        2.0       45.1
               pasture and crops
Input: Feed    Total amount of feedstuffs fed       57,941     47,981   3,924.14    376,732
               to dairy cows (kg)

Application

y = log output

x = Cobb-Douglas production: x = 1, x1, x2, x3, x4 = constant and logs of 4 inputs (5 terms)

z = Translog terms, x1², x2², etc. and all cross products, x1x2, x1x3, x1x4, x2x3, etc. (10 terms)

w = (x,z) (all 15 terms)

Null hypothesis is Cobb-Douglas, alternative is translog = Cobb-Douglas plus second order terms.

Translog Regression Model

$H_0: \boldsymbol\beta_z = \mathbf{0}$

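Wald Tests

Is r(b,q) close to zero?

Wald distance function:

$\mathbf{r}(\mathbf{b},\mathbf{q})'\{\text{Var}[\mathbf{r}(\mathbf{b},\mathbf{q})]\}^{-1}\mathbf{r}(\mathbf{b},\mathbf{q}) \xrightarrow{d} \chi^2[J]$

Use the delta method to estimate Var[r(b,q)]

$\text{Est.Asy.Var}[\mathbf{b}] = s^2(\mathbf{X}'\mathbf{X})^{-1}$

$\text{Est.Asy.Var}[\mathbf{r}(\mathbf{b},\mathbf{q})] = \mathbf{R}(\mathbf{b},\mathbf{q})\{s^2(\mathbf{X}'\mathbf{X})^{-1}\}\mathbf{R}'(\mathbf{b},\mathbf{q})$

The standard F test is a Wald test; $JF \xrightarrow{d} \chi^2[J]$.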
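The Wald statistic can be sketched directly from its definition, with the delta method supplying R(b,q) by central-difference numerical derivatives (our choice; an analytic Jacobian works equally well). The example tests β_FEMALE = 0 in the regression with EDUC and FEMALE. The printed output reports no covariances between coefficients, so the sketch uses a diagonal V: that is harmless for a restriction involving a single coefficient, but would be wrong for a joint or nonlinear restriction that mixes coefficients. With J = 1, W is the squared t ratio, and it agrees closely with the F statistic of about .918 implied by the two residual sums of squares.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (small matrices only)."""
    n = len(a)
    m = [[float(v) for v in row] + [float(i == j) for j in range(n)]
         for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        piv = m[c][c]
        m[c] = [v / piv for v in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [v - f * w for v, w in zip(m[r], m[c])]
    return [row[n:] for row in m]


def jacobian(r, b, h=1e-6):
    """Delta-method matrix R(b) = dr(b)/db' by central differences (J x K)."""
    cols = []
    for j in range(len(b)):
        up, dn = list(b), list(b)
        up[j] += h
        dn[j] -= h
        cols.append([(u - d) / (2.0 * h) for u, d in zip(r(up), r(dn))])
    return transpose(cols)


def wald(r, b, V):
    """W = r(b)' [R V R']^{-1} r(b); r(b) already includes the -q term."""
    rb = r(b)
    R = jacobian(r, b)
    middle = inverse(matmul(matmul(R, V), transpose(R)))
    J = len(rb)
    return sum(rb[i] * middle[i][j] * rb[j] for i in range(J) for j in range(J))


# FEMALE regression: coefficients (Constant, EDUC, FEMALE) and squared std. errors.
b = [-1.68303, 0.06993, -0.03065]
V = [[0.08763 ** 2, 0.0, 0.0],      # off-diagonal covariances are not printed;
     [0.0, 0.00746 ** 2, 0.0],      # they do not enter a restriction that
     [0.0, 0.0, 0.03199 ** 2]]      # involves only the FEMALE coefficient.

W = wald(lambda beta: [beta[2] - 0.0], b, V)   # H0: beta_FEMALE = 0
print(f"Wald = {W:.4f}, (b/se)^2 = {(b[2] / 0.03199) ** 2:.4f}")
```

For a nonlinear restriction, the same `wald` function applies unchanged once a full covariance matrix is available; only the function passed as `r` changes.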

$\text{Wald} = (\mathbf{b}_z - \mathbf{0})'\{\text{Var}[\mathbf{b}_z - \mathbf{0}]\}^{-1}(\mathbf{b}_z - \mathbf{0})$

Close to 0?

Likelihood Ratio Test

The normality assumption

Does it work ‘approximately?’

For any regression model $y_i = h(\mathbf{x}_i,\boldsymbol\beta) + \varepsilon_i$ where $\varepsilon_i \sim N[0,\sigma^2]$ (linear or nonlinear), at the linear (or nonlinear) least squares estimator, however computed, with or without restrictions,

$\hat\sigma^2 = \mathbf{e}'\mathbf{e}/N$ and $\log L(\hat{\boldsymbol\beta},\hat\sigma^2) = -(N/2)[1 + \log 2\pi + \log\hat\sigma^2]$

This forms the basis for likelihood ratio tests.

$2[\log L(\hat{\boldsymbol\beta}_{\text{unrestricted}}) - \log L(\hat{\boldsymbol\beta}_{\text{restricted}})] = N\log(\hat\sigma^2_{\text{restricted}}/\hat\sigma^2_{\text{unrestricted}}) \xrightarrow{d} \chi^2[J]$
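For the test of FEMALE in the LOGINC regressions (J = 1), all three classical statistics can be computed from the two printed residual sums of squares. The sketch uses the LR form on this slide, a Wald form with σ̂² = e′e/N from the unrestricted fit, and an LM form with σ̂² from the restricted fit; these are the homoscedastic versions, not the heteroscedasticity-robust LM on the following slides. For linear restrictions in this model W ≥ LR ≥ LM always holds, because with r = SSR_R/SSR_U ≥ 1, r − 1 ≥ log r ≥ 1 − 1/r.

```python
import math

N = 887
SSR_R = 183.19359   # restricted: LOGINC on constant, EDUC
SSR_U = 183.00347   # unrestricted: adds FEMALE (J = 1)
J, K_U = 1, 3

lr = N * math.log(SSR_R / SSR_U)                 # likelihood ratio, N log(s2_R / s2_U)
wald_ml = N * (SSR_R - SSR_U) / SSR_U            # Wald with sigma^2 = e'e/N (unrestricted)
lm = N * (SSR_R - SSR_U) / SSR_R                 # LM with sigma^2 from the restricted fit
jf = ((SSR_R - SSR_U) / J) / (SSR_U / (N - K_U)) * J   # J * F, Wald with s^2 = e'e/(N-K)

lr_from_logl = 2.0 * (-558.60477 - (-559.06527))   # from the printed log likelihoods
print(f"W = {wald_ml:.4f}, LR = {lr:.4f} (printed logL: {lr_from_logl:.4f}), "
      f"LM = {lm:.4f}, JF = {jf:.4f}")
```

All four values are near .92, far below the 5% critical value of 3.84 for χ²[1], consistent with the insignificant FEMALE coefficient (P = .3379).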

Score or LM Test: General

Maximum Likelihood (ML) Estimation

A hypothesis test

H0: Restrictions on parameters are true

H1: Restrictions on parameters are not true

Basis for the test: b0 = parameter estimate under H0 (i.e., restricted), b1 = unrestricted

Derivative results: For the likelihood function under H1,

$\partial\log L_1/\partial\boldsymbol\theta\,|_{\boldsymbol\theta=\mathbf{b}_1} = \mathbf{0}$ (exactly, by definition)

$\partial\log L_1/\partial\boldsymbol\theta\,|_{\boldsymbol\theta=\mathbf{b}_0} \neq \mathbf{0}$. Is it close? If so, the restrictions look reasonable

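Why is it the Lagrange Multiplier Test?

Maximize $\log L(\boldsymbol\theta)$ subject to restrictions $\mathbf{r}(\boldsymbol\theta) = \mathbf{0}$, such as $\mathbf{R}\boldsymbol\beta - \mathbf{q} = \mathbf{0}$, or $\boldsymbol\beta_Z = \mathbf{0}$ when $\boldsymbol\beta = (\boldsymbol\beta_X', \boldsymbol\beta_Z')'$, $\mathbf{R} = (\mathbf{0}:\mathbf{I})$ and $\mathbf{q} = \mathbf{0}$.

Use LM approach:

Maximize with respect to $(\boldsymbol\theta,\boldsymbol\lambda)$: $L^* = \log L(\boldsymbol\theta) + \boldsymbol\lambda'\mathbf{r}(\boldsymbol\theta)$.

FOC:

$\partial L^*/\partial\hat{\boldsymbol\theta}_R = \partial\log L(\hat{\boldsymbol\theta}_R)/\partial\hat{\boldsymbol\theta}_R + [\partial\mathbf{r}(\hat{\boldsymbol\theta}_R)/\partial\hat{\boldsymbol\theta}_R']'\hat{\boldsymbol\lambda} = \mathbf{0}$

$\partial L^*/\partial\hat{\boldsymbol\lambda} = \mathbf{r}(\hat{\boldsymbol\theta}_R) = \mathbf{0}$

If $\hat{\boldsymbol\lambda} = \mathbf{0}$, the constraints are not binding and $\partial\log L(\hat{\boldsymbol\theta}_R)/\partial\hat{\boldsymbol\theta}_R = \mathbf{0}$, so $\hat{\boldsymbol\theta}_R = \hat{\boldsymbol\theta}_U$.

If $\hat{\boldsymbol\lambda} \neq \mathbf{0}$, the constraints are binding and $\partial\log L(\hat{\boldsymbol\theta}_R)/\partial\hat{\boldsymbol\theta}_R \neq \mathbf{0}$.

Direct test: Test $H_0: \boldsymbol\lambda = \mathbf{0}$

Equivalent test: Test $H_0^{LM}: \partial\log L(\hat{\boldsymbol\theta}_R)/\partial\hat{\boldsymbol\theta}_R = \mathbf{0}$.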

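Computing the LM Statistic

Linear model, restricted estimator b: $e_{i|0} = y_i - \mathbf{x}_i'\mathbf{b}$, $s_0^2 = (1/N)\sum_{i=1}^N e_{i|0}^2$

$\hat{\mathbf{g}}_{(1|0),i} = \frac{1}{s_0^2}\, e_{i|0}\begin{pmatrix}\mathbf{x}_i \\ \mathbf{z}_i\end{pmatrix}$

$\hat{\mathbf{g}}_{(1|0)} = \sum_{i=1}^N \hat{\mathbf{g}}_{(1|0),i} = \frac{1}{s_0^2}\begin{pmatrix}\mathbf{X}'\mathbf{e}_0 \\ \mathbf{Z}'\mathbf{e}_0\end{pmatrix} = \frac{1}{s_0^2}\begin{pmatrix}\mathbf{0} \\ \mathbf{Z}'\mathbf{e}_0\end{pmatrix}$

$\hat{\mathbf{H}}_{(1|0)} = \sum_{i=1}^N \hat{\mathbf{g}}_{(1|0),i}\hat{\mathbf{g}}_{(1|0),i}' = \frac{1}{s_0^4}\sum_{i=1}^N e_{i|0}^2 \begin{pmatrix}\mathbf{x}_i \\ \mathbf{z}_i\end{pmatrix}\begin{pmatrix}\mathbf{x}_i \\ \mathbf{z}_i\end{pmatrix}'$

Note this is the middle matrix in the White estimator.

The $s_0^2$ and $s_0^4$ disappear from the product. Let $\mathbf{w}_{i0} = e_{i|0}\begin{pmatrix}\mathbf{x}_i \\ \mathbf{z}_i\end{pmatrix}$.

Then $\text{LM} = \mathbf{i}'\mathbf{W}_0(\mathbf{W}_0'\mathbf{W}_0)^{-1}\mathbf{W}_0'\mathbf{i}$ (i is a column of ones), which is simple to compute.

The derivation on page 64 of Wooldridge’s text is needlessly complex, and the second form of LM is actually incorrect because the first derivatives are not ‘heteroscedasticity robust.’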

Application of the Score Test

Linear Model: y = Xβ + Zδ + ε

Test H0: δ = 0

Restricted estimator is [b′, 0′]′

Namelist ; X = a list… ; Z = a list … ; W = X,Z $

Regress ; Lhs = y ; Rhs = X ; Res = e $

Matrix ; list ; LM = e'W * <W'[e^2]W> * W'e $
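The NLOGIT commands above can be mirrored in a short, self-contained Python sketch. Row i of G is e_i w_i, so G′i = W′e and G′G = W′[e²]W, giving LM = i′G(G′G)^{-1}G′i. The sketch also checks the appendix result that this equals N times the uncentered R² from regressing a column of ones on G. The data are simulated (a quadratic mean with heteroscedastic errors), and all helper names are our own; this is not NLOGIT's implementation.

```python
import random


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matvec(a, v):
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (small matrices only)."""
    n = len(a)
    m = [[float(v) for v in row] + [float(i == j) for j in range(n)]
         for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        piv = m[c][c]
        m[c] = [v / piv for v in m[c]]
        for r in range(n):
            if r != c:
                f = m[r][c]
                m[r] = [v - f * w for v, w in zip(m[r], m[c])]
    return [row[n:] for row in m]


def ols_residuals(X, y):
    """Residuals from the least squares regression of y on the columns of X."""
    Xt = transpose(X)
    b = matvec(inverse(matmul(Xt, X)), matvec(Xt, y))
    return [yi - sum(bj * xj for bj, xj in zip(b, xi)) for xi, yi in zip(X, y)]


def lm_statistic(X, Z, y):
    """LM = e'W <W'[e^2]W> W'e, using residuals from the restricted regression on X."""
    e = ols_residuals(X, y)
    W = [xi + zi for xi, zi in zip(X, Z)]                    # row i is w_i = (x_i', z_i')
    G = [[ei * wij for wij in wi] for wi, ei in zip(W, e)]   # row i is e_i * w_i
    Gt = transpose(G)
    Gti = matvec(Gt, [1.0] * len(y))                         # G'i = W'e
    middle = inverse(matmul(Gt, G))                          # (G'G)^{-1} = <W'[e^2]W>
    lm = sum(u * v for u, v in zip(Gti, matvec(middle, Gti)))
    return lm, G


def n_r_squared(G):
    """N times the uncentered R^2 from regressing a column of ones on G."""
    u = ols_residuals(G, [1.0] * len(G))
    return len(G) * (1.0 - sum(v * v for v in u) / len(G))


# Hypothetical data: the true mean is quadratic and the errors are heteroscedastic
rng = random.Random(42)
xs = [rng.uniform(0.0, 2.0) for _ in range(1000)]
y = [1.0 + 0.5 * x + 0.3 * x * x + rng.gauss(0.0, 0.2 * (1.0 + x)) for x in xs]
X = [[1.0, x] for x in xs]          # restricted model: constant and x
Z = [[x * x] for x in xs]           # variable under test: x squared

lm, G = lm_statistic(X, Z, y)
print(f"LM = {lm:.3f}, N*R^2 = {n_r_squared(G):.3f}")
```

Since X′e = 0 at the restricted estimator, only the Z′e block of W′e is nonzero, but the full W′[e²]W matrix is still needed in the middle.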

Restricted regression and derivatives for the LM Test

Tests for Omitted Variables

? Cobb-Douglas Model
Namelist ; X = One,x1,x2,x3,x4 $
? Translog second order terms, squares and cross products of logs
Namelist ; Z = x11,x22,x33,x44,x12,x13,x14,x23,x24,x34 $
? Restricted regression. Short. Has only the log terms
Regress ; Lhs = yit ; Rhs = X ; Res = e $
Calc ; LoglR = LogL ; RsqR = Rsqrd $
? LM statistic using basic matrix algebra
Namelist ; W = X,Z $
Matrix ; List ; LM = e'W * <W'[e^2]W> * W'e $
? LR statistic uses the full, long regression with all quadratic terms
Regress ; Lhs = yit ; Rhs = W $
Calc ; LoglU = LogL ; RsqU = Rsqrd ; List ; LR = 2*(Logl - LoglR) $
? Wald Statistic is just J*F for the translog terms
Calc ; List ; JF = col(Z)*((RsqU-RsqR)/col(Z)/((1-RsqU)/(n-kreg))) $

Regression Specifications

Model Selection

Regression models: Fit measure = R²

Nested models: log likelihood, GMM criterion function (distance function)

Nonnested models, nonlinear models:

Classical

Akaike information criterion = –2(logL – K)/N

Bayes (Schwarz) information criterion = –2(logL – (K/2)logN)/N

Bayesian: Bayes factor = Posterior odds/Prior odds

(With equal prior probabilities on the models, BF = ratio of posterior probabilities)
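As an illustration of the computation only, the sketch applies AIC = −2(logL − K)/N and BIC = −2(logL − (K/2) log N)/N to the three LOGINC regressions shown earlier, using their printed log likelihoods and N = 887. Those particular models are nested, where a likelihood ratio test would be the usual tool, but the criteria are computed the same way for nonnested models. Both criteria favor the model with AGE and AGE2, and both rank EDUC alone ahead of EDUC plus FEMALE.

```python
import math

N = 887
# (label, K, log likelihood) as printed in the three LOGINC regressions
models = [
    ("EDUC", 2, -559.06527),
    ("EDUC, FEMALE", 3, -558.60477),
    ("EDUC, FEMALE, AGE, AGE2", 5, -530.79258),
]


def aic(logl, k, n):
    """Akaike information criterion per observation; smaller is better."""
    return -2.0 * (logl - k) / n


def bic(logl, k, n):
    """Bayes (Schwarz) information criterion per observation; smaller is better."""
    return -2.0 * (logl - 0.5 * k * math.log(n)) / n


for label, k, logl in models:
    print(f"{label:26s} AIC = {aic(logl, k, N):.5f}  BIC = {bic(logl, k, N):.5f}")

best_aic = min(models, key=lambda m: aic(m[2], m[1], N))[0]
best_bic = min(models, key=lambda m: bic(m[2], m[1], N))[0]
print("Preferred by AIC:", best_aic, "| preferred by BIC:", best_bic)
```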

Remaining to Consider for the Linear Regression Model

Failures of standard assumptions

Heteroscedasticity

Autocorrelation and Spatial Correlation

Robust estimation

Omitted variables

Measurement error

Endogenous variables and causal effects

Appendix:

1. Computing the LM Statistic

2. Misc Results

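LM Test

$\hat{\mathbf{g}}_{1|\boldsymbol\theta=\mathbf{b}_0} = \sum_{i=1}^N \hat{\mathbf{g}}_{(1|\boldsymbol\theta=\mathbf{b}_0),i} = \hat{\mathbf{G}}'\mathbf{i}$, where each row of $\hat{\mathbf{G}}$ is a vector of derivatives.

Use a Wald statistic to assess if $\hat{\mathbf{g}}_{1|\boldsymbol\theta=\mathbf{b}_0}$ is close to 0.

By the information matrix equality, $\text{Var}[\hat{\mathbf{g}}_{1|\boldsymbol\theta=\mathbf{b}_0}] = -E[\mathbf{H}_{1|\boldsymbol\theta=\mathbf{b}_0}]$, where H is the Hessian.

Estimate this with the sum of squares as usual:

$-\hat{\mathbf{H}}_{1|\boldsymbol\theta=\mathbf{b}_0} = \sum_{i=1}^N \hat{\mathbf{g}}_{(1|\boldsymbol\theta=\mathbf{b}_0),i}\,\hat{\mathbf{g}}_{(1|\boldsymbol\theta=\mathbf{b}_0),i}' = \hat{\mathbf{G}}'\hat{\mathbf{G}}$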

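LM Test (Cont.)

$\text{Wald statistic} = \hat{\mathbf{g}}_{1|\boldsymbol\theta=\mathbf{b}_0}'\,[-\hat{\mathbf{H}}_{1|\boldsymbol\theta=\mathbf{b}_0}]^{-1}\,\hat{\mathbf{g}}_{1|\boldsymbol\theta=\mathbf{b}_0}$

$= \mathbf{i}'\hat{\mathbf{G}}(\hat{\mathbf{G}}'\hat{\mathbf{G}})^{-1}\hat{\mathbf{G}}'\mathbf{i}$

$= N\,[\mathbf{i}'\hat{\mathbf{G}}(\hat{\mathbf{G}}'\hat{\mathbf{G}})^{-1}\hat{\mathbf{G}}'\mathbf{i}/N]$

$= NR^2$

R² = the R² in the 'uncentered' regression of a column of ones on the vector of first derivatives. (N is equal to the total sum of squares.)

This is a general result. We did not assume any specific model in the derivation. (We did assume independent observations.)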

Representing Covariation

Conditional mean function: E[y | x] = g(x)

Linear approximation to the conditional mean function: Linear Taylor series

$\hat g(\mathbf{x}) = g(\mathbf{x}^0) + \sum_{k=1}^K [\partial g/\partial x_k\,|_{\mathbf{x}=\mathbf{x}^0}](x_k - x_k^0)$

$= \beta_0 + \sum_{k=1}^K \beta_k (x_k - x_k^0)$

The linear projection (linear regression?)

$g^*(\mathbf{x}) = \gamma_0 + \sum_{k=1}^K \gamma_k (x_k - E[x_k])$

$\gamma_0 = E[y]$, $\boldsymbol\gamma = \{\text{Var}[\mathbf{x}]\}^{-1}\{\text{Cov}[\mathbf{x},y]\}$

Projection and Regression

The linear projection is not the regression, and is not the Taylor series.

Example: $f(y|x) = [1/\lambda(x)]\exp[-y/\lambda(x)]$

$\lambda(x) = \exp(\alpha + \beta x) = E[y|x]$

$x \sim U[0,1]$; $f(x) = 1$, $0 \le x \le 1$

For the Example: α=1, β=2

[Figure: conditional mean (EY_X), linear projection (PROJECTN) and Taylor series (TAYLOR) plotted against X from 0 to 1]
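The example can be checked numerically. With α = 1, β = 2 and x ~ U[0,1], the sketch below computes E[y], Cov[x,y] and Var[x] by midpoint-rule integration, forms the projection slope γ = Cov[x,y]/Var[x], and evaluates the conditional mean, the projection and the first-order Taylor series around E[x] = 0.5. Analytically, Cov[x,y] = e/2 and Var[x] = 1/12, so γ = 6e ≈ 16.31, while the Taylor slope is βe² ≈ 14.78: the two linear approximations to the same conditional mean differ.

```python
import math

ALPHA, BETA = 1.0, 2.0   # the slide's example; x ~ U[0, 1]


def cond_mean(x):
    """E[y|x] = lambda(x) = exp(alpha + beta*x)."""
    return math.exp(ALPHA + BETA * x)


# Moments of x ~ U[0,1] and y, by midpoint-rule integration over x
M = 100_000
grid = [(i + 0.5) / M for i in range(M)]
e_x = sum(grid) / M
e_y = sum(cond_mean(x) for x in grid) / M          # E[y] = E_x[E[y|x]]
cov_xy = sum((x - e_x) * cond_mean(x) for x in grid) / M
var_x = sum((x - e_x) ** 2 for x in grid) / M

gamma = cov_xy / var_x               # projection slope Var[x]^{-1} Cov[x, y]


def projection(x):
    return e_y + gamma * (x - e_x)   # gamma_0 = E[y] in the centered form


def taylor(x, x0=0.5):
    """First-order Taylor series of E[y|x] around x0 = E[x]."""
    return cond_mean(x0) + BETA * cond_mean(x0) * (x - x0)


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x={x:.2f}  E[y|x]={cond_mean(x):7.3f}  "
          f"projection={projection(x):7.3f}  taylor={taylor(x):7.3f}")
```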


www.oft.gov.uk/shared_oft/reports/Evaluating-OFTs-work/oft1416.pdf


Econometrics

Theoretical foundations

Microeconometrics and macroeconometrics

Behavioral modeling

Statistical foundations: Econometric methods

Mathematical elements: the usual

‘Model’ building – the econometric model