Computational Physics

advertisement

Computational Physics

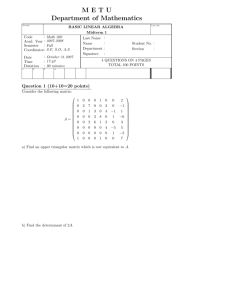

Linear Algebra

Dr. Guy Tel-Zur

Sunset in Caruaru by Jaime JaimeJunior. publicdomainpictures.net

Version 4-11-10, 14:00

MHJ Chapter 4 – Linear Algebra

• In this talk we deal with basic matrix operations

• Such as the solution of linear equations, calculate the

inverse of a matrix, its determinant etc.

• Here we focus in particular on so-called direct or

elimination methods, which are in principle determined

through a finite number of arithmetic operations.

• Iterative methods will be discussed in connection with

eigenvalue problems in MHJ chapter 12.

• This chapter serves also the purpose of introducing

important programming details such as handling memory

allocation for matrices and the usage of the libraries which

follow these lectures.

Libraries

• LAPACK based on:

– EISPACK – for solving symmetric, un-symmetric

and generalized eigenvalue problems

– LINPACK - linear equations and least square

problems

• BLAS: Basic Linear Algebra Subprogram

– Levels I, II and III

• Nelib: http://www.netlib.org

LAPACK - Linear Algebra PACKage

LAPACK is written in Fortran90 and provides

routines for solving systems of simultaneous linear

equations, least-squares solutions of linear systems

of equations, eigenvalue problems, and singular

value problems. The associated matrix

factorizations (LU, Cholesky, QR, SVD…) are also

provided.

Dense and banded matrices are handled, but not

general sparse matrices. In all areas, similar

functionality is provided for real and complex

matrices, in both single and double precision.

EISPACK

EISPACK is a collection of Fortran subroutines

that compute the eigenvalues and eigenvectors

of nine classes of matrices: complex general,

complex Hermitian, real general, real symmetric,

real symmetric banded, real symmetric

tridiagonal, special real tridiagonal, generalized

real, and generalized real symmetric matices. In

addition, two routines are included that use

singular value decomposition to solve certain

least-squares problems.

LINPACK

LINPACK is a collection of Fortran subroutines that

analyze and solve linear equations and linear leastsquares problems. The package solves linear

systems whose matrices are general, banded,

symmetric indefinite, symmetric positive definite,

triangular, and tridiagonal square. In addition, the

package computes the QR and singular value

decompositions of rectangular matrices and applies

them to least-squares problems. LINPACK uses

column-oriented algorithms to increase efficiency

by preserving locality of reference.

• LINPACK and EISPACK are based on BLAS I

• LAPACK is based on BLAS III

BLAS

• The BLAS (Basic Linear Algebra Subprograms) are

routines that provide standard building blocks for

performing basic vector and matrix operations.

• The Level 1 BLAS perform scalar, vector and vectorvector operations.

• The Level 2 BLAS perform matrix-vector operations

• The Level 3 BLAS perform matrix-matrix operations.

• Because the BLAS are efficient, portable, and widely

available, they are commonly used in the development

of high quality linear algebra software, LAPACK for

example.

http://icl.cs.utk.edu/lapack-for-windows/

LAPACK / CLAPACK / ScaLAPACK for Windows

Working with a Linear Algebra package

using Visual Studio

Work dir: C:\Users\telzur\Documents\BGU\Teaching\ComputationalPhysics\2011A\Lectures\04\LAPACK\CLAPACK-EXAMPLE\Release>

Demo

Compare with the following MATLAB

code

Demo

clear all

close all

disp('solves Ax=b')

A= [76, 27, 18; 25, 89, 60; 11, 51, 32]

b=[10, 7, 43]

invA=inv(A)

x=b*inv(A)

[L,U]=lu(A)

inv(U)*inv(L)*b'

Code location:

C:\Users\telzur\Documents\BGU\Teaching\ComputationalPhysics\2011A\Lectures\04\lpack.m

DGESV

SUBROUTINE DGESV( N, NRHS, A, LDA, IPIV, B, LDB, INFO )

*

* -- LAPACK driver routine (version 3.2) -* -- LAPACK is a software package provided by Univ. of Tennessee, -* -- Univ. of California Berkeley, Univ. of Colorado Denver and NAG Ltd..-* November 2006

*

* .. Scalar Arguments ..

INTEGER

INFO, LDA, LDB, N, NRHS

* ..

* .. Array Arguments ..

INTEGER

IPIV( * )

DOUBLE PRECISION A( LDA, * ), B( LDB, * )

* ..

*

* Purpose

* =======

*

*

*

*

*

*

*

*

*

*

*

*

DGESV computes the solution to a real system of linear equations

A * X = B,

where A is an N-by-N matrix and X and B are N-by-NRHS matrices.

The LU decomposition with partial pivoting and row interchanges is

used to factor A as

A = P * L * U,

where P is a permutation matrix, L is unit lower triangular, and U is

upper triangular. The factored form of A is then used to solve the

system of equations A * X = B.

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

*

Arguments

=========

N

(input) INTEGER

The number of linear equations, i.e., the order of the

matrix A. N >= 0.

NRHS (input) INTEGER

The number of right hand sides, i.e., the number of columns

of the matrix B. NRHS >= 0.

A

(input/output) DOUBLE PRECISION array, dimension (LDA,N)

On entry, the N-by-N coefficient matrix A.

On exit, the factors L and U from the factorization

A = P*L*U; the unit diagonal elements of L are not stored.

LDA

(input) INTEGER

The leading dimension of the array A. LDA >= max(1,N).

IPIV (output) INTEGER array, dimension (N)

The pivot indices that define the permutation matrix P;

row i of the matrix was interchanged with row IPIV(i).

B

(input/output) DOUBLE PRECISION array, dimension (LDB,NRHS)

On entry, the N-by-NRHS matrix of right hand side matrix B.

On exit, if INFO = 0, the N-by-NRHS solution matrix X.

LDB

(input) INTEGER

The leading dimension of the array B. LDB >= max(1,N).

INFO (output) INTEGER

= 0: successful exit

< 0: if INFO = -i, the i-th argument had an illegal value

> 0: if INFO = i, U(i,i) is exactly zero. The factorization

has been completed, but the factor U is exactly

singular, so the solution could not be computed.

=====================================================================

Cont’

*

*

*

*

*

*

*

*

.. External Subroutines ..

EXTERNAL

DGETRF, DGETRS, XERBLA

..

.. Intrinsic Functions ..

INTRINSIC

MAX

..

.. Executable Statements ..

Test the input parameters.

INFO = 0

IF( N.LT.0 ) THEN

INFO = -1

ELSE IF( NRHS.LT.0 ) THEN

INFO = -2

ELSE IF( LDA.LT.MAX( 1, N ) ) THEN

INFO = -4

ELSE IF( LDB.LT.MAX( 1, N ) ) THEN

INFO = -7

END IF

IF( INFO.NE.0 ) THEN

CALL XERBLA( 'DGESV ', -INFO )

RETURN

END IF

*

*

*

Compute the LU factorization of A.

CALL DGETRF( N, N, A, LDA, IPIV, INFO )

IF( INFO.EQ.0 ) THEN

*

*

*

Solve the system A*X = B, overwriting B with X.

CALL DGETRS( 'No transpose', N, NRHS, A, LDA, IPIV, B, LDB,

$

INFO )

END IF

RETURN

*

*

*

End of DGESV

END

Cont’

Mathematical intermezzo

http://ocw.mit.edu/courses/mathematics/18-085-computationalscience-and-engineering-i-fall-2008/

The Kn Matrices

What are the properties of K ?

The next slides are from: Computational Science and Engineering, by Gilbert

Strang. An excellent recommended book. His course is available online from

MIT Opencourseware. Very Recommended!!!!

Kn Properties

1.

2.

3.

4.

5.

6.

These matrices are symmetric.

The matrices Kn are sparse.

These matrices are tridiagonal.

The matrices have constant diagonals.

All the matrices K = Kn are invertible.

The symmetric matrices Kn are positive

definite.

Source: Computational Science and Engineering, by Gilbert Strang

• In Signal Processing D=Kn/4 is a “High-Pass”

Filter. Du picks out the rapidly varying parts of

a vector u

• K are called Toeplitz Matrix and MATLAB has a

function for generating such matrices, e.g.

K = toeplitz([2 -1 zeros(l,2)])

constructs K4 from row 1

Source: Computational Science and Engineering, by Gilbert Strang

More properties

• (Pivots) An invertible matrix has n nonzero

pivots.

• A positive definite symmetric matrix has n

positive pivots.

• (Eigenvalues) An invertible matrix has n

nonzero eigenvalues.

• A positive definite symmetric matrix has n

positive eigenvalues.

Source: Computational Science and Engineering, by Gilbert Strang

More special matrices

T=Top, B=Bottom

Source: Computational Science and Engineering, by Gilbert Strang

Building K,T,B,C in Matlab

Demo

Make a demo also using Octave

Source: Computational Science and Engineering, by Gilbert Strang

Matlab Demos

K4=toeplitz([2 -1 0 0])

C4=toeplitz([2 -1 0 -1])

inv(K4) …OK

inv(C4) …not OK singular

eig(K4) positive >0 positive definite

eig(C4) >=0 semi positive definite

Both are symmetric: try transpose(K4)

Check:

[L,U]=lu(T4) all pivots are 1

[L,U]=lu(B4) 0 on the diagonal of U B isn’t invertibe

inv(T4) …OK

inv(B4) …not OK singular

Demo

demos

e=ones(8,1)

B.e=0 . = dot product

BT=transpose(B) B is symmetric

System to solve: Bu=f

uTBT=fT

BT.e=B.e=0

Thefore

fT.e=0

Demo

Continued

det(K8) = Π(diagonal of U) = 2/1 * 3/2 * 4/3 … * 9/8 = 9

Demo

THIS IS THE CODE TO CREATE K,T,B,C AS SPARSE MATRICES

Then Matlab will not operate on all the zeros!

function K=SKTBC(type,n,sparse)

% SKTBC Create finite difference model matrix.

% K=SKTBC(TYPE,N,SPARSE) creates model matrix TYPE of size N-by-N.

% TYPE is one of the characters 'K', 'T', 'B', or 'C'.

% The command K = SKTBC('K', 100, 1) gives a sparse representation

% K=SKTBC uses the defaults TYPE='K', N=10, and SPARSE=false.

% Change the 3rd argument from 1 to 0 for dense representation!

% If no 3rd argument is given, the default is dense

% If no argument at all, KTBC will give 10 by 10 matrix K

if nargin<1, type='K'; end

if nargin<2, n=10; end

e=ones(n,1);

K=spdiags([-e,2*e,-e],-1:1,n,n);

switch type

case 'K'

case 'T'

K(1,1)=1;

case 'B'

K(1,1)=1;

K(n,n)=1;

case 'C'

K(1,n)=-1;

K(n,1)=-1;

otherwise

error('Unknown matrix type.');

end

if nargin<3 | ~sparse

K=full(K);

end

Demo

http://math.mit.edu/classes/18.085/create-sparse.html

For large size n

Source: Numerical recipes in FORTRAN77: the art of

scientific computing, page 65. By William H. Press

In Mathematics: Vectors and Matrices

Are mapped to Computers as Memory arrays

Fixed Memory allocation vs. Dynamic Memory Allocation

Compile time vs. Run time

In the next slides we will study two programs that demonstrate these issues

Let’s start with Table 4.2 in the book: Matrix handling program where

arrays are defined at compilation time – Next slide

Use Visual Studio for the demo solution file under: chap4_static

int main() {

int k,m, row = 3, col = 5;

int vec[5]; // line a: a standard C++ declaration of a vector

int matr[3][5]; // line b: a standard fixed-size C++ declaration of a matrix

Table 4.2

for(k = 0; k < col; k++) vec[k] = k; // data into vector[]

for(m = 0; < row; m++) { // data into matr[][]

for(k = 0; k < col ; k++) matr[m][k] = m + 10 * k;

}

printf("\n Vector data in main():\n”);

// print vector data

Demo

for(k = 0; k < col; k++) printf("vetor[%d]= %d ",k, vec[k]);

printf("\n Matrix data in main():");

for(m = 0; m < row; m++) {

printf(“\n”);

for(k = 0; k < col; k++)

printf("m atr[%d][%d]= %d ",m,k,matr[m][k]);

}

// לא ניתן להעתיק אותו אחד לאחד.הקוד שכתוב בספר קצת רשלני

printf(“\n”);

sub_1(row, col, vec, matr); // line c: transfer vec[] and matr[][] addresses to func. sub_1().

return 0;

} // End: function main()

void sub_1(int row, int col, int vec[], int matr[][5]) { //line d: a func. def.

int k,m;

printf("\n Vetor data in sub1():\n"); // print vector data

for(k = 0; k < col; k++) printf("vetor[%d]= %d ",k, vec[k]);

printf("\n Matrix data in sub1():");

equiv to

for(m = 0; m < row; m++) {

printf(“\n”);

int *vec

for(k = 0; k < col; k++) {

equiv to

printf("matr[%d][%d]= %d ",m, k, matr[m][k]);

int (*matr)[5]

}

}

printf(“\n”);

Using Visual Studio 2010

Make demo in class

Static program execution

Table 4.3: Matrix handling program

with dynamic array allocation.

התכנית ב 4.3לא מתקמפלת ומלאה באגים.

בעתיד היא תתווסף כתכנית לדוגמה .בשלב

הזה נתייחס אליה כאל פסאודו-קוד מבלי

להריצה

#include <stdio.h>

int main()

{

int *vec; // line a

int **matr; // line b

int m, k, row, col, total = 0;

printf("\n Read in number of rows= "); // line c

scanf("%d",&row);

printf("\n Read in number of column= ");

scanf("%d", &col);

vec = new int [col]; // line d

matr = (int **)matrix(row, col, sizeof(int)); // line e

for(k = 0; k < col; k++) vec[k] = k; // store data in vector[]

for(m = 0; < row; m++) { // store data in array[][]

for(k = 0; k < col; k++) matr[m][k] = m + 10 * k;

}

printf("\n Vetor data in main():\n"); // print vector data

for(k = 0; k < col; k++) printf("vetor[%d]= %d ",k,vec[k]);

printf("\n Array data in main():");

for(m = 0; m < row; m++) {

printf("\n");

for(k = 0; k < col; k++) {

printf("m atrix[%d][%d]= %d ",m, k, matr[m][k]);

}

}

printf("\n");

for(m = 0; m < row; m++) { // access the array

for(k = 0; k < col; k++) total += matr[m][k];

}

printf("\n Total= %d\n",total);

sub_1(row, col, vec, matr);

free_matrix((void **)matr); // line f

delete [] vec; // line g

return 0;

the same procedure is

} // End: function main()

performed for vec[]

we declare a

pointer to an

integer

declares a pointer-to-apointer which will contain

the address to

a pointer of row vectors,

each with col integers

read in the size of vec[] and

matr[][] through the numbers

row and col

we reserve memory for

the vector

we use a user-defined function to reserve

necessary memory for matrix[row][col] and

again matr contains the address to the

reserved memory

location.

The remaining part of the

function main() are as in the

previous case down to line f.

Continued from previous slide

void sub_1(int row, int col, int vec[], int ??matr)

// line h

in line h an important difference

{

from the previous case occurs. First,

the vector declaration is

int k,m;

the same, but the matr declaration

printf("\n Vetor data in sub1():\n"); // print

is quite different. The

vector data

for(k = 0; k < col; k++) printf("vetor[%d]= %d ",k, corresponding parameter in the call

to sub_1[]

vec[k]);

in line g is a double pointer.

printf("\n Matrix data in sub1():");

Consequently, matr in line h must

for(m = 0; m < row; m++) {

be a double pointer

printf("\n");

for(k = 0; k < col; k++) {

printf("matrix[%d][%d]= %d ",m,k,matr[m][k]);

}

}

printf("\n");

} // End: function sub_1()

/*

* The function

* void **matrix( )

* reserves dynamic memory for a two-dimensional matrix

* using the C++ command new . No initialization of the elements .

* Input data :

* int row - number of rows

* int col - number of columns

* int num_bytes - number of bytes for each element

* Returns avoid **pointer to the reserved memory location.

*/

void **matrix( int row , int col , int num_bytes )

{

int i , num;

char **pointer, *ptr;

pointer = new(nothrow) char* [ row ] ;

if ( !pointer ) {

cout << "Exeption handling Memory aloation failed";

cout << "for"<< row << "row addresses!"<< endl;

return NULL;

}

i = ( row * col * num_bytes ) / size of ( char ) ;

pointer [ 0 ] = new( nothrow ) char [ i ] ;

if ( !pointer [ 0 ] ) {

cout << "Exeption handling :Memory allocation failed";

cout << "for address to " << i << " characters!"<< endl ;

return NULL;

}

ptr = pointer [ 0 ] ;

num = col * num_bytes ;

for ( i = 0 ; i < row ; i ++ , ptr += num ) {

pointer [ i ] = ptr ;

}

return ( void **) pointer ;

} // end : function void **matrix ( )

lib.cpp

Skip to section 4.4

C++ and Fortran features of

matrix handling

If we store a matrix in the sequence a11 a12 . . . a1n a21 a22 . . . a2n . . . ann

This is called “row-major” order (we go along a given row i and pick up all column elements j)

C++ stores them by row-major.

We cloud also store in column-major order a11 a21 . . . an1 a12 a22 . . . an2 . . . ann.

Fortran stores matrices by column-major,

4.4 Linear Systems

•

•

•

•

•

Gauss Elimination

Upper/Lower triangular matrix

Solve Linear System

LU algorithm

Cholesky decomposition (a special case)

(4.4) Linear Systems:

An Example

This is the stiffness matrix, K,

we met earlier!

…And we switched from a Differential Equation to Linear Algebra.

Case #1:

Assume first that the function f does not depend on u(x).

Then our linear equation reduces to

Au = f ,

(4.8)

which is nothing but a simple linear equation with a

tridiagonal matrix A.

We will solve such a system of equations in subsection 4.4.3.

Case #2

If we assume that our boundary value problem is that of a quantum

mechanical particle confined by

a harmonic oscillator potential, then our function f takes the form (assuming

that all constants m = h_bar = omega = 1

להבנה נוספת ראש

with λ being the eigenvalue.

השקף הבא

Inserting this into our equation, we define first a new matrix A as

We will solve this type of equations in chapter 12.

(4.4.2) LU Decomposition

LU Factorization

Continue from page 83 at MHJ chap 4 PDF

Cholesky’s Factorization

MHJ Chapter 4, page 87 (2009 edition)

One has to check first if A is positive definite!

Vandermonde matrix

MHJ Chap. 4, page 93

QR Decomposition

is a decomposition of the matrix into an orthogonal and

an upper triangular matrix.

singular value decomposition

SVD

M=UΣV*

Matlab demo:

M=[1 0 0 0 2; 0 0 3 0 0; 0 0 0 0 0;0 4 0 0 0]

[U S V]=svd(M)