Capacity of Finite-State Channels:

Lyapunov Exponents and Shannon Entropy

Tim Holliday

Peter Glynn

Andrea Goldsmith

Stanford University

Introduction

We show that the entropies H(X), H(Y), H(X,Y), and H(Y|X) for finite-state Markov channels are Lyapunov exponents.

This result provides an explicit connection between dynamic systems theory and information theory.

It also clarifies information-theoretic connections to hidden Markov models.

This allows novel proof techniques from other fields to be applied to information theory problems.

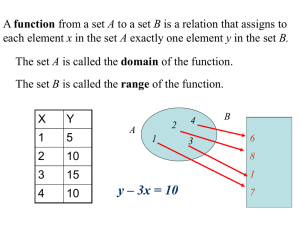

Finite-State Channels

Channel state $Z_n \in \{c_0, c_1, \dots, c_d\}$ is a Markov chain with transition matrix $R(c_j, c_k)$

States correspond to distributions on the input/output symbols:

$$P(X_n = x, Y_n = y \mid Z_n = z_n, Z_{n+1} = z_{n+1}) = q(x, y \mid z_n, z_{n+1})$$

Commonly used to model ISI channels, magnetic recording

channels, etc.

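[Figure: state-transition diagram on states c0, c1, c2, c3, with edges labeled by transition probabilities such as R(c0, c2) and R(c1, c3)]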

Time-varying Channels with Memory

We consider finite state Markov channels with no

channel state information

Time-varying channels with finite memory induce

infinite memory in the channel output.

Capacity for time-varying infinite memory channels is

defined in terms of a limit

$$C = \max_{p(X^n)} \lim_{n \to \infty} \frac{1}{n} I(X^n; Y^n)$$

Previous Research

Mutual information for the Gilbert-Elliott channel

[Mushkin and Bar-David, 1989]

Finite-state Markov channels with i.i.d. inputs

[Goldsmith/Varaiya, 1996]

Recent research on simulation based computation of

mutual information for finite-state channels

[Arnold, Vontobel, Loeliger, Kavčić, 2001, 2002, 2003]

[Pfister, Siegel, 2001, 2003]

Symbol Matrices

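For each symbol pair $(x, y) \in \mathcal{X} \times \mathcal{Y}$ define a $|\mathcal{Z}| \times |\mathcal{Z}|$ matrix $G(x,y)$

$$G(x,y)(c_0, c_1) = R(c_0, c_1)\, q(x, y \mid c_0, c_1), \quad (c_0, c_1) \in \mathcal{Z} \times \mathcal{Z}$$

where $(c_0, c_1)$ are the channel states at times $(n, n+1)$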

Each element corresponds to the joint

probability of the symbols and channel

transition
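
As a minimal sketch of the construction above, the following Python builds the symbol matrices for a hypothetical two-state channel. The transition matrix `R`, the crossover probabilities, the uniform i.i.d. input, and the helper names `q` and `symbol_matrix` are all illustrative assumptions, not taken from the talk.

```python
from itertools import product

# Hypothetical two-state channel (Gilbert-Elliott style); all numbers are illustrative.
R = [[0.9, 0.1],
     [0.2, 0.8]]            # R[c0][c1]: state transition probabilities
CROSSOVER = [0.01, 0.30]    # assumed bit-flip probability in each state
SYMBOLS = (0, 1)

def q(x, y, c0, c1):
    """Joint symbol probability q(x, y | c0, c1).

    Assumes uniform i.i.d. inputs and noise set by the departing state c0,
    so c1 is unused in this toy model.
    """
    flip = CROSSOVER[c0]
    return 0.5 * (flip if x != y else 1.0 - flip)

def symbol_matrix(x, y):
    """G(x,y)[c0][c1] = R(c0, c1) * q(x, y | c0, c1)."""
    n = len(R)
    return [[R[c0][c1] * q(x, y, c0, c1) for c1 in range(n)] for c0 in range(n)]

G = {(x, y): symbol_matrix(x, y) for x, y in product(SYMBOLS, SYMBOLS)}

# Sanity check: summing G(x,y) over all symbol pairs recovers R,
# because q(., . | c0, c1) is a probability distribution.
total = [[sum(G[xy][i][j] for xy in G) for j in range(2)] for i in range(2)]
```

Each `G[(x, y)]` is substochastic on its own; only the sum over all symbol pairs is a proper transition matrix.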

Probabilities as Matrix Products

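Let $\mu$ be the stationary distribution of the channel

$$\begin{aligned}
P(X_1^n = x_1^n, Y_1^n = y_1^n)
&= \sum_{c_1, \dots, c_{n+1}} P(X_1^n = x_1^n, Y_1^n = y_1^n \mid Z_1^{n+1} = c_1^{n+1})\, P(Z_1^{n+1} = c_1^{n+1}) \\
&= \sum_{c_1, \dots, c_{n+1}} \mu(c_1) \prod_{j=1}^{n} R(c_j, c_{j+1})\, q(x_j, y_j \mid c_j, c_{j+1}) \\
&= \mu\, G(x_1, y_1) G(x_2, y_2) \cdots G(x_n, y_n)\, e \\
&= \| \mu\, G(x_1, y_1) G(x_2, y_2) \cdots G(x_n, y_n) \|_1
\end{aligned}$$

where $e$ is the column vector of all ones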

The matrices G are deterministic

functions of the random pair (x,y)
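
The matrix-product form can be checked numerically against the explicit sum over state paths. The sketch below does this for a hypothetical two-state channel; the values of `R`, `CROSSOVER`, and `MU`, the uniform-input assumption, and all function names are illustrative, not from the talk.

```python
from itertools import product

# Hypothetical two-state channel; all numbers are illustrative assumptions.
R = [[0.9, 0.1], [0.2, 0.8]]
CROSSOVER = [0.01, 0.30]
MU = [2 / 3, 1 / 3]          # stationary distribution of R (MU * R = MU)

def q(x, y, c0, c1):
    """q(x, y | c0, c1) for uniform inputs; noise depends on c0 only (assumed)."""
    flip = CROSSOVER[c0]
    return 0.5 * (flip if x != y else 1.0 - flip)

def G(x, y):
    return [[R[a][b] * q(x, y, a, b) for b in range(2)] for a in range(2)]

def vec_mat(v, M):
    """Row vector times matrix."""
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]

def prob_matrix_product(pairs):
    """mu G(x1,y1) ... G(xn,yn) e, computed left to right."""
    v = MU[:]
    for x, y in pairs:
        v = vec_mat(v, G(x, y))
    return sum(v)  # entries are nonnegative, so this is also the 1-norm

def prob_brute_force(pairs):
    """Explicit sum over all state paths c_1, ..., c_{n+1}."""
    total = 0.0
    for path in product(range(2), repeat=len(pairs) + 1):
        p = MU[path[0]]
        for j, (x, y) in enumerate(pairs):
            p *= R[path[j]][path[j + 1]] * q(x, y, path[j], path[j + 1])
        total += p
    return total

pairs = [(0, 0), (1, 0), (1, 1), (0, 0)]
p_fast = prob_matrix_product(pairs)
p_slow = prob_brute_force(pairs)
```

The brute-force sum visits every state path, so its cost grows exponentially in the sequence length, while the matrix product is linear in it.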

Entropy as a Lyapunov Exponent

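The Shannon entropy is equivalent, up to sign, to the Lyapunov exponent of the random matrices $G(X,Y)$

$$\begin{aligned}
H(X,Y) &= -\lim_{n \to \infty} \frac{1}{n} E \log P(X_1, \dots, X_n, Y_1, \dots, Y_n) \\
&= -\lim_{n \to \infty} \frac{1}{n} \log P(X_1, \dots, X_n, Y_1, \dots, Y_n) \quad \text{(almost surely)} \\
&= -\lim_{n \to \infty} \frac{1}{n} \log \| \mu\, G(X_1, Y_1) \cdots G(X_n, Y_n) \|_1 \\
&= -\lim_{n \to \infty} \frac{1}{n} E \log \| \mu\, G(X_1, Y_1) \cdots G(X_n, Y_n) \|_1 = -\lambda(X,Y)
\end{aligned}$$

Similar expressions exist for H(X), H(Y), H(Y|X)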
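
As a sanity check on this identity, consider the degenerate case of a single channel state, i.e. a memoryless channel (this special case is an illustration, not part of the talk). Each $G(x,y)$ is then the scalar $q(x,y)$, the pairs $(X_j, Y_j)$ are i.i.d., and the norm of the product factorizes:

```latex
\begin{aligned}
\lambda(X,Y)
  &= \lim_{n\to\infty} \frac{1}{n}\, E \log \prod_{j=1}^{n} q(X_j, Y_j) \\
  &= \lim_{n\to\infty} \frac{1}{n} \sum_{j=1}^{n} E \log q(X_j, Y_j) \\
  &= E \log q(X_1, Y_1) = -H(X,Y).
\end{aligned}
```

With more than one state the matrices do not commute, the product no longer factorizes, and the limit has to be handled as a genuine Lyapunov exponent.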

Growth Rate Interpretation

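The typical set $A_\epsilon^{(n)}$ is the set of sequences $x_1, \dots, x_n$ satisfying

$$2^{-n(H(X)+\epsilon)} \le P(X_1 = x_1, \dots, X_n = x_n) \le 2^{-n(H(X)-\epsilon)}$$

By the AEP, $P(A_\epsilon^{(n)}) > 1 - \epsilon$ for sufficiently large $n$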

The Lyapunov exponent is the average rate of

growth of the probability of a typical sequence

In order to compute $\lambda(X)$ we need information

about the “direction” of the system

Lyapunov Direction Vector

The vector $p_n$ is the “direction” associated with $\lambda(X)$ for any $\mu$.

It also defines the conditional channel state probability

$$p_n = \frac{\mu\, G_{X_1} G_{X_2} \cdots G_{X_n}}{\| \mu\, G_{X_1} G_{X_2} \cdots G_{X_n} \|_1} = P(Z_{n+1} \mid X^n)$$

The vector has a number of interesting properties

It is the standard prediction filter in hidden Markov models

$p_n$ is a Markov chain if $\mu$ is the stationary distribution for the channel
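
A minimal sketch of the direction vector as a prediction filter, for a hypothetical two-state channel. Here the observed symbol at each step is the pair (x, y), so the filter tracks the state distribution given both sequences; the channel parameters, the observation sequence, and the function names are illustrative assumptions.

```python
# Hypothetical two-state channel; all numbers are illustrative assumptions.
R = [[0.9, 0.1], [0.2, 0.8]]
CROSSOVER = [0.01, 0.30]
MU = [2 / 3, 1 / 3]          # stationary distribution of R

def G(x, y):
    """Symbol matrix for uniform inputs, noise set by the departing state (assumed)."""
    return [[R[a][b] * 0.5 * (CROSSOVER[a] if x != y else 1.0 - CROSSOVER[a])
             for b in range(2)] for a in range(2)]

def vec_mat(v, M):
    """Row vector times matrix."""
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]

def filter_step(p, M):
    """Multiply the current direction vector by M and renormalize in the 1-norm."""
    v = vec_mat(p, M)
    norm = sum(v)            # entries are nonnegative, so this is the 1-norm
    return [a / norm for a in v], norm

observations = [(0, 0), (1, 0), (1, 0), (0, 0), (1, 1)]

# Recursive form: renormalize after every symbol.
p = MU[:]
for x, y in observations:
    p, _ = filter_step(p, G(x, y))

# Direct form: normalize the full unnormalized product once at the end.
v = MU[:]
for x, y in observations:
    v = vec_mat(v, G(x, y))
scale = sum(v)
p_direct = [a / scale for a in v]
```

Both forms give the same vector; the recursive form is the one to use for long sequences, since the unnormalized product underflows as its norm decays exponentially.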

Random Perron-Frobenius Theory

The vector $p$ is the random Perron-Frobenius eigenvector associated with the random matrix $G_X$

For all $n$ we have

$$p_n = \frac{p_{n-1} G_{X_n}}{\| p_{n-1} G_{X_n} \|_1}$$

For the stationary version of $p$ we have

$$p\, G_X \overset{D}{=} \Lambda\, p$$

The Lyapunov exponent we wish to compute is

$$\lambda(X) = E_{p,X}[\log \Lambda] = E_{p,X}\left[\log \| p\, G_X \|_1\right]$$
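
The normalized recursion is what links the direction vector to the Lyapunov exponent. A short derivation, assuming the initialization $p_0 = \mu$ (not stated on the slide): each one-step norm is a ratio of consecutive unnormalized norms, so the log-norm of the full product telescopes.

```latex
\begin{aligned}
\| p_{j-1} G_{X_j} \|_1
  &= \frac{\| \mu\, G_{X_1} \cdots G_{X_j} \|_1}
          {\| \mu\, G_{X_1} \cdots G_{X_{j-1}} \|_1}, \\
\frac{1}{n} \log \| \mu\, G_{X_1} \cdots G_{X_n} \|_1
  &= \frac{1}{n} \sum_{j=1}^{n} \log \| p_{j-1} G_{X_j} \|_1 .
\end{aligned}
```

The right-hand side is a time average of $\log \| p\, G_X \|_1$ along the chain $p_n$, which is why the limit can be written as an expectation under the stationary version of $p$.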

Technical Difficulties

The Markov chain pn is not irreducible if the

input/output symbols are discrete!

Standard existence and uniqueness results cannot be

applied in this setting

We have shown that pn possesses a unique

stationary distribution if the matrices GX are

irreducible and aperiodic

Proof exploits the contraction property of

positive matrices

Computing Mutual Information

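Compute the Lyapunov exponents $\lambda(X)$, $\lambda(Y)$, and $\lambda(X,Y)$ as expectations (deterministic computation)

Since each entropy is the negative of the corresponding Lyapunov exponent, mutual information can be expressed as

$$I(X;Y) = H(X) + H(Y) - H(X,Y) = \lambda(X,Y) - \lambda(X) - \lambda(Y)$$

We also prove continuity of the Lyapunov exponents on the domain $(q, R)$, hence

$$C = \max_{(q,R)} \left[ \lambda(X,Y) - \lambda(X) - \lambda(Y) \right]$$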

Simulation-Based Computation (Previous Work)

Step 1: Simulate a long sequence of input/output

symbols

Step 2: Estimate entropy using

$$H_n(X) = -\lambda_n(X) = -\frac{1}{n} \sum_{j=0}^{n-1} \log \| p_j\, G_{X_{j+1}} \|_1$$

Step 3: For sufficiently large n, assume that the

sample-based entropy has converged.

Problems with this approach:

Need to characterize initialization bias and confidence

intervals

Standard theory doesn’t apply for discrete symbols
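
The three steps can be sketched as follows for a hypothetical two-state channel with uniform i.i.d. binary inputs. The channel parameters, the sequence length, the seed, and every function name are illustrative assumptions; the sketch shows the plain sample-entropy estimate only and does nothing about the bias and confidence-interval problems listed above.

```python
import math
import random

# Hypothetical two-state channel; all numbers are illustrative assumptions.
R = [[0.9, 0.1], [0.2, 0.8]]
CROSSOVER = [0.01, 0.30]     # bit-flip probability in each state
MU = [2 / 3, 1 / 3]          # stationary distribution of R

def G_xy(x, y):
    return [[R[a][b] * 0.5 * (CROSSOVER[a] if x != y else 1.0 - CROSSOVER[a])
             for b in range(2)] for a in range(2)]

def mat_add(A, B):
    return [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]

def G_x(x):
    """Symbol matrix for the input alone (sum over outputs)."""
    return mat_add(G_xy(x, 0), G_xy(x, 1))

def G_y(y):
    """Symbol matrix for the output alone (sum over inputs)."""
    return mat_add(G_xy(0, y), G_xy(1, y))

def simulate(n, rng):
    """Step 1: draw n input/output pairs from the channel."""
    z = 0 if rng.random() < MU[0] else 1
    pairs = []
    for _ in range(n):
        x = rng.randrange(2)
        flip = 1 if rng.random() < CROSSOVER[z] else 0
        pairs.append((x, x ^ flip))
        z = 0 if rng.random() < R[z][0] else 1
    return pairs

def sample_entropy(mats):
    """Step 2: -(1/n) * sum of log2 ||p_j G||_1 in bits, starting from p_0 = MU."""
    p, total = MU[:], 0.0
    for M in mats:
        v = [sum(p[i] * M[i][j] for i in range(2)) for j in range(2)]
        norm = sum(v)
        total += math.log2(norm)
        p = [a / norm for a in v]
    return -total / len(mats)

rng = random.Random(0)
pairs = simulate(20000, rng)
h_x = sample_entropy([G_x(x) for x, _ in pairs])
h_y = sample_entropy([G_y(y) for _, y in pairs])
h_xy = sample_entropy([G_xy(x, y) for x, y in pairs])
mi_estimate = h_x + h_y - h_xy   # Step 3: treated as converged for large n
```

With uniform inputs, `h_x` and `h_y` both come out at 1 bit by construction, so all of the simulation noise in `mi_estimate` comes from `h_xy`.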

Simulation Traces for Computation of H(X,Y)

Rigorous Simulation Methodology

We prove a new functional central limit theorem

for sample entropy with discrete symbols

A new confidence interval methodology for

simulated estimates of entropy

A method for bounding the initialization bias in

sample entropy simulations

How good is our estimate?

How long do we have to run the simulation?

Proofs involve techniques from stochastic

processes and random matrix theory
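
To make the two questions concrete, here is a generic batch-means heuristic with a fixed burn-in. This is a standard simulation technique swapped in for illustration; it is not the functional central limit theorem methodology or the bias bound developed in the talk. The channel parameters, burn-in length, batch count, and function names are all assumptions.

```python
import math
import random
import statistics

# Hypothetical two-state channel; all numbers are illustrative assumptions.
R = [[0.9, 0.1], [0.2, 0.8]]
CROSSOVER = [0.01, 0.30]
MU = [2 / 3, 1 / 3]

def G_xy(x, y):
    return [[R[a][b] * 0.5 * (CROSSOVER[a] if x != y else 1.0 - CROSSOVER[a])
             for b in range(2)] for a in range(2)]

def simulate(n, rng):
    z = 0 if rng.random() < MU[0] else 1
    pairs = []
    for _ in range(n):
        x = rng.randrange(2)
        flip = 1 if rng.random() < CROSSOVER[z] else 0
        pairs.append((x, x ^ flip))
        z = 0 if rng.random() < R[z][0] else 1
    return pairs

def log_terms(pairs):
    """Per-step terms -log2 ||p_j G(x,y)||_1; their average is the sample entropy."""
    p, terms = MU[:], []
    for x, y in pairs:
        M = G_xy(x, y)
        v = [sum(p[i] * M[i][j] for i in range(2)) for j in range(2)]
        norm = sum(v)
        terms.append(-math.log2(norm))
        p = [a / norm for a in v]
    return terms

def batch_means_ci(terms, burn_in=1000, num_batches=20, t_quantile=2.093):
    """Heuristic 95% interval: drop a burn-in, then treat batch means as i.i.d.

    t_quantile is the 0.975 Student-t quantile for num_batches - 1 = 19
    degrees of freedom; change it if num_batches changes.
    """
    kept = terms[burn_in:]
    size = len(kept) // num_batches
    means = [sum(kept[k * size:(k + 1) * size]) / size for k in range(num_batches)]
    center = statistics.mean(means)
    half_width = t_quantile * statistics.stdev(means) / math.sqrt(num_batches)
    return center, half_width

terms = log_terms(simulate(21000, random.Random(1)))
center, half_width = batch_means_ci(terms)   # estimate of H(X,Y) in bits
```

The interval is only as trustworthy as the assumption that batches are long enough to be nearly independent, which is exactly the kind of assumption a rigorous methodology has to justify.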

Computational Complexity of Lyapunov Exponents

Lyapunov exponents are notoriously difficult to

compute regardless of computation method

NP-hard problem [Tsitsiklis 1998]

Dynamic systems driven by random matrices

typically possess poor convergence properties

Initial transients in simulations can linger for

extremely long periods of time.

Conclusions

Lyapunov exponents are a powerful new tool for

computing the mutual information of finite-state channels

Results permit rigorous computation, even in the case of

discrete inputs and outputs

Computational complexity is high, but multiple computation methods are available

New connection between Information Theory and

Dynamic Systems provides information theorists with a

new set of tools to apply to challenging problems