Performance Assessment, Rubrics, & Rating Scales

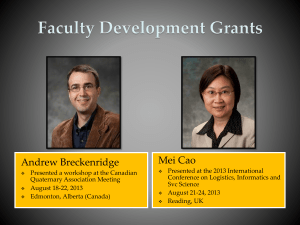


Performance Assessment, Rubrics, & Rating Scales

- Trends
- Definitions
- Advantages & Disadvantages
- Elements for Planning
- Technical Concerns

Deborah Moore, Office of Planning & Institutional Effectiveness, Spring 2002

Types of Performance Assessments

- Performance Assessment
  - Portfolios
  - Exhibitions
  - Experiments
  - Essays or Writing Samples

Performance Assessment

- Who is currently using performance assessments in their courses or programs?
- What are some examples of these assessment tasks?

Primary Characteristics

- Constructed response
- Reviewed against criteria/continuum (individual or program)
- Design is driven by assessment question/decision

Why on the Rise?

- Accountability issues increasing
- Educational reform has been underway
- Growing dissatisfaction with traditional multiple choice tests (MC)

Exercise 1

- Locate the sample rubrics in your packet.
- Working with a partner, review the different rubrics.
- Describe what you like and what you find difficult about each (BE KIND).

Advantages As Reported By Faculty

- Clarification of goals & objectives
- Narrows gap between instruction & assessment
- May enrich insights about students' skills & abilities
- Useful for assessing complex learning

Advantages for Students

- Opportunity for detailed feedback
- Motivation for learning enhanced
- Process information differently

Disadvantages

- Requires Coordination
  - Goals
  - Administration
  - Scoring
  - Summary & Reports

Disadvantages

- Archival/Retrieval
  - Accessible
  - Maintain

Disadvantages

- Costs
  - Designing
  - Scoring (Train/Monitor)
  - Archiving

Steps in Developing Performance Assessments

1. Clarify purpose/reason for assessment
2. Clarify performance
3. Design tasks
4. Design rating plan
5. Pilot/revise

Steps in Developing Rubrics

1. Identify purpose/reason for rating scale
2. Define clearly what is to be rated
3. Decide which you will use
   - a. Holistic or Analytic
   - b. Generic or Task-Specific
4. Draft the rating scale and have it reviewed

Recommendations

| | Holistic | Analytic |
|---|---|---|
| Generic | Cost-effective but lacking in diagnostic value | Desirable |
| Task-specific | Not recommended | Very desirable but expensive |

Steps in Developing Rubrics (continued)

5. Pilot your assessment tasks and review
6. Apply your rating scales
7. Determine the reliability of the ratings
8. Evaluate results and revise as needed

Table 4. Item Means

| Rubric Item | Rating |
|---|---|
| General Statement of Problem: | |
| 1. Engages reader. | 1 2 3 4 5 |
| 2. Establishes formal tone (professional audience). | 1 2 3 4 5 |
| 3. Describes problem clearly. | 1 2 3 4 5 |
| 4. Defines concepts and terms effectively. | 1 2 3 4 5 |
| Literature Review: | |
| 5. Describes study/studies clearly. | 1 2 3 4 5 |
| 6. Paraphrases/interprets concisely. | 1 2 3 4 5 |
| 7. Organizes meaningfully. | 1 2 3 4 5 |
| Power/Appeal: | |
| 8. Expresses voice. | 1 2 3 4 5 |
| Synthesis: | |
| 9. e.g., Makes connections between sources. Identifies missing pieces. Predicts reasonable future directions. | 1 2 3 4 5 |
| Format and Structure: | |
| 10. Mechanics (spelling, punctuation) and grammar. | 1 2 3 4 5 |
| 11. A.P.A. style (in text citation) | 1 2 3 4 5 |
| 12. Develops beginning, middle, and end elements logically. | 1 2 3 4 5 |
| 13. Provides sound transition. | 1 2 3 4 5 |
| 14. Chooses words well. | 1 2 3 4 5 |

Descriptive Rating Scales

- Each rating scale point has a phrase, sentence, or even paragraph describing what is being rated.
- Generally recommended over graded-category rating scales.

Portfolio Scoring Workshop

Subject Matter Expertise

- Experts like Dr. Edward White join faculty in their work to refine scoring rubrics and monitor the process.

Exercise 2

- Locate the University of South Florida example.
- Identify the various rating strategies that are involved in use of this form.
- Identify strengths and weaknesses of this form.

Common Strategy Used

- Instructor assigns individual grade for an assignment within a course.
- Assignments are forwarded to program-level assessment team.
- Team randomly selects a set of assignments and applies a different rating scheme.

Exercise 3

- Locate Rose-Hulman criteria.
- Select one of the criteria.
- In 1-2 sentences, describe an assessment task/scenario for that criterion.
- Develop rating scales for the criterion.
  - List traits
  - Describe distinctions along continuum of ratings

Example of Consistent & Inconsistent Ratings (7-Point Scale)

| Students | Consistent: Judge A | Consistent: Judge B | Consistent: Judge C | Inconsistent: Judge A | Inconsistent: Judge B | Inconsistent: Judge C |
|---|---|---|---|---|---|---|
| Larry | 7 | 6 | 7 | 7 | 3 | 1 |
| Moe | 4 | 4 | 3 | 4 | 6 | 2 |
| Curley | 1 | 2 | 2 | 2 | 4 | 7 |

Calculating Rater Agreement (3 Raters for 2 Papers)

Is Rater in Agreement with the Criterion Score?

| Judges | Paper 1 | Paper 2 | Rater's Agreement |
|---|---|---|---|
| Larry | Yes | No | 50% |
| Moe | No | No | 0% |
| Curley | Yes | Yes | 100% |
| Total | 67% = Yes | 33% = Yes | 50% |

Is Rater in Agreement with the Criterion Score, Plus or Minus 1 Point?

| Judges | Paper 1 | Paper 2 | Rater's Agreement |
|---|---|---|---|
| Larry | Yes | No | 50% |
| Moe | Yes | Yes | 100% |
| Curley | Yes | Yes | 100% |
| Total | 100% = Yes | 67% = Yes | 83% |

Rater Selection and Training

- Identify raters carefully.
- Train raters about purpose of assessment and to use rubrics appropriately.
- Study rating patterns and do not keep raters who are inconsistent.

Some Rating Problems

- Leniency/Severity
- Response set
- Central tendency
- Idiosyncrasy
- Lack of interest

Exercise 4

- Locate Generalizability Study tables (1-4).
- In reviewing Table 1, describe the plan for rating the performance. What kinds of rating problems do you see?
- In Table 2, what seems to be the biggest rating problem?
- In Table 3, what seems to have more impact, additional items or raters?

Generalizability Study (GENOVA)

- G Study: identifies sources of error (facets) in the overall design; estimates error variance for each facet of the measurement design
- D Study: estimates reliability of ratings with current design to project outcome of alternative designs

Table 1. Rater and Item Means (Scale 1 - 5)

| Rubric Item Number | Team 1 | Team 2 | Team 3 | Team 4 | Team 5 |
|---|---|---|---|---|---|
| 1 | 3.0 | 3.0 | 3.3 | 3.9 | 3.5 |
| 2 | 2.7 | 3.3 | 3.2 | 3.6 | 3.6 |
| 3 | 2.9 | 3.3 | 3.5 | 3.0 | 3.6 |
| 4 | 3.1 | 3.2 | 3.1 | 3.8 | 3.7 |
| 5 | 3.3 | 3.8 | 3.4 | 3.3 | 3.7 |
| 6 | 3.1 | 3.2 | 3.4 | 3.7 | 3.7 |
| 7 | 2.9 | 3.4 | 3.4 | 4.0 | 3.9 |
| 8 | 3.0 | 3.1 | 3.4 | 3.7 | 3.7 |
| 9 | 2.5 | 2.9 | 3.1 | 3.2 | 3.3 |
| 10 | 3.8 | 3.8 | 3.8 | 4.2 | 3.6 |
| 11 | 2.0 | 2.0 | 2.7 | 2.6 | 3.5 |
| 12 | 2.9 | 3.3 | 3.6 | 4.1 | 3.9 |
| 13 | 2.9 | 3.4 | 3.3 | 4.1 | 3.7 |
| 14 | 3.5 | 3.2 | 3.2 | 3.9 | 3.7 |
| Rater 1 Mean | 3.2 | 2.7 | 3.4 | 3.7 | 3.7 |
| Rater 2 Mean | 2.7 | 3.6 | 3.2 | 3.6 | 3.6 |
| Grand Mean | 2.9 | 3.2 | 3.3 | 3.6 | 3.6 |
| Dependability of Design | .21 | .51 | .45 | .67 | .50 |

Table 2. Percent of Error Variance Associated with Facets of Measurement

| Source of Error Variance | Team 1 (%) | Team 2 (%) | Team 3 (%) | Team 4 (%) | Team 5 (%) |
|---|---|---|---|---|---|
| Persons | 18 | 31 | 19 | 35 | 47 |
| Raters | 23 | 40 | 3 | 2 | 3 |
| Items | 13 | 8 | 12 | 15 | 3 |
| Persons X Raters | 15 | 5 | 6 | 10 | 29 |
| Persons X Items | 12 | 6 | 23 | 13 | 7 |
| Items X Raters | 7 | 5 | 18 | 14 | 5 |
| Error (Persons X Raters X Items) | 11 | 5 | 17 | 11 | 8 |
| Total* | 99 | 101 | 98 | 100 | 102 |

\* rounding error

Table 3.
Phi Coefficient Estimates Based on Alternative Designs for Each Team

| Design | Number of Raters | Number of Items | Team 1 Phi | Team 2 Phi | Team 3 Phi | Team 4 Phi | Team 5 Phi |
|---|---|---|---|---|---|---|---|
| Current Design | 2 | 14 | .21 | .51 | .45 | .67 | .50 |
| Alternative | 2 | 18 | .34 | .68 | .61 | .81 | .67 |
| Alternative | 3 | 14 | .41 | .75 | .62 | .84 | .75 |
| Alternative | 3 | 18 | .42 | .75 | .66 | .85 | .75 |
| Alternative | 4 | 14 | .46 | .79 | .66 | .86 | .80 |
| Alternative | 4 | 18 | .48 | .80 | .70 | .88 | .80 |
| Alternative | 5 | 14 | .50 | .82 | .68 | .87 | .83 |
| Alternative | 5 | 18 | .52 | .83 | .72 | .89 | .83 |

Table 4. Item Means

| Rubric Item | Mean Score |
|---|---|
| General Statement of Problem: | |
| 1. Engages reader. | 3.31 |
| 2. Establishes formal tone (professional audience). | 3.25 |
| 3. Describes problem clearly. | 3.25 |
| 4. Defines concepts and terms effectively. | 3.36 |
| Literature Review: | |
| 5. Describes study/studies clearly. | 3.48 |
| 6. Paraphrases/interprets concisely. | 3.39 |
| 7. Organizes meaningfully. | 3.49 |
| Power/Appeal: | |
| 8. Expresses voice. | 3.36 |
| Synthesis: | |
| 9. e.g., Makes connections between sources. Identifies missing pieces. Predicts reasonable future directions. | 3.00 |
| Format and Structure: | |
| 10. Mechanics (spelling, punctuation) and grammar. | 3.82 |
| 11. A.P.A. style (in text citation) | 2.53 |
| 12. Develops beginning, middle, and end elements logically. | 3.52 |
| 13. Provides sound transition. | 3.45 |
| 14. Chooses words well. | 3.47 |

Summary

- Interpretation: raters using the rubric in nonsystematic ways
- Reliability (phi) values range from .21 to .67 for the teams, well below the desired .75 level

What Research Says About Current Practice

- Instructors have limited sense of differentiated purposes of assessment
- Articulating their goals for student outcomes is difficult
- Uneven understanding about what constitutes thinking & problem solving
- Discipline content gets short shrift
- Articulating criteria for judging difficult
- Instructors worry over fairness; criteria unevenly applied
- Often design plan is weak/flawed; limited thought about use of information
- Instructors not prepared to consider reliability & validity issues

Summary

- Use on the rise
- Costly
- Psychometrically challenging

Thank you for your attention.

Deborah Moore, Assessment Specialist
101B Alumni Gym
Office of Planning & Institutional Effectiveness
dlmoor2@email.uky.edu
859/257-7086
http://www.uky.edu/LexCampus/; http://www.uky.edu/OPIE/

Thank you for attending.
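
For readers who want to run this kind of check on their own ratings, here is a minimal Python sketch of the percent-agreement calculation from the Calculating Rater Agreement slide. The slides report only the Yes/No outcomes, so the criterion scores and individual ratings below are hypothetical values chosen to reproduce that pattern. The function name `percent_agreement` and its `tolerance` parameter are also illustrative, not part of the original workshop materials.

```python
from typing import Dict, List, Tuple


def percent_agreement(
    ratings: Dict[str, List[int]],
    criterion: List[int],
    tolerance: int = 0,
) -> Tuple[Dict[str, int], List[int], int]:
    """Percent of ratings within `tolerance` points of the criterion score.

    Returns (per-rater %, per-paper %, overall %), rounded to whole percents.
    """
    # hits[rater][k] is True when that rater's score on paper k agrees
    # with the criterion score for paper k (within the tolerance).
    hits = {
        rater: [abs(score - target) <= tolerance
                for score, target in zip(scores, criterion)]
        for rater, scores in ratings.items()
    }
    per_rater = {r: round(100 * sum(h) / len(h)) for r, h in hits.items()}
    per_paper = [
        round(100 * sum(h[k] for h in hits.values()) / len(hits))
        for k in range(len(criterion))
    ]
    total_hits = sum(sum(h) for h in hits.values())
    overall = round(100 * total_hits / (len(hits) * len(criterion)))
    return per_rater, per_paper, overall


# Hypothetical scores (NOT from the slides), chosen so the Yes/No pattern
# matches the slide's agreement tables.
criterion = [4, 5]  # assumed criterion scores for Paper 1 and Paper 2
ratings = {"Larry": [4, 3], "Moe": [5, 4], "Curley": [4, 5]}

exact = percent_agreement(ratings, criterion)                  # exact match
adjacent = percent_agreement(ratings, criterion, tolerance=1)  # plus or minus 1
print(exact)
print(adjacent)
```

With `tolerance=0` the function counts exact agreement; with `tolerance=1` it counts ratings within one point of the criterion score, which is why Moe moves from 0% to 100% under these assumed scores.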