Java Methods

A & AB

Object-Oriented Programming

and Data Structures

Maria Litvin ● Gary Litvin

Big-O Analysis of Algorithms

Copyright © 2006 by Maria Litvin, Gary Litvin, and Skylight Publishing. All rights reserved.

Objectives:

• Learn the big-O definition and notation

• Review big-O for some common tasks and algorithms studied earlier

• Review several popular sorting algorithms and their average, best, and worst case big-O

18-2

Evaluation of Algorithms

• Practice: benchmarks

• Theory: asymptotic analysis, big-O

[Figure: running time t plotted against task size n]

18-3

Analysis of Algorithms — Assumptions

• Abstract computer model with “unlimited” RAM

• Unlimited range for integers

• Basic operations on integers (addition, multiplication, etc.) take fixed time regardless of the values of the operands

18-4

Big-O Analysis

• Studies space and time requirements in terms of the “size” of the task

• The concept of “size” is somewhat informal here:

    – The size of a list or another collection

    – The dimensions of a 2-D array

    – The argument of a function (for example, factorial(n) or fibonacci(n))

    – The number of objects involved (for example, n disks in the Tower of Hanoi puzzle)

18-5

Big-O Assumptions

• Here our main concern is time

• Ignores a constant factor

    – measures the time in terms of the number of some abstract steps

• Applies to large n, studies asymptotic behavior

18-6

Example 1: Sequential Search

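for (int i = 0; i < n; i++)
    if (a[i] == target)
        break;

n/2 iterations on average

$t(n) = t_{\text{init}} + t_{\text{iter}} \cdot \frac{n}{2}$

$t(n) = An + B$

$\frac{t(n)}{An} = 1 + \frac{B}{An} \to 1$ as $n \to \infty$, because $\frac{B}{An}$ is small when n is large

[Figure: t plotted against n, showing the line t(n) = An]

The average time is approximately A·n: linear time (for large n).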

18-7
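The claim of about n/2 iterations on average can be checked with a small Java sketch. It assumes the target is always present, the array holds n distinct values, and every position is equally likely; the class and method names are illustrative, not from the text.

```java
// A minimal sketch: sequential search that reports how many loop
// iterations (the abstract "steps") it used.
class SequentialSearch {
    /** Returns the number of loop iterations used to find target in a
     *  (a.length if target is absent). */
    static int iterations(int[] a, int target) {
        int n = a.length;
        int count = 0;
        for (int i = 0; i < n; i++) {
            count++;
            if (a[i] == target)
                break;
        }
        return count;
    }

    public static void main(String[] args) {
        int n = 1000;
        int[] a = new int[n];
        for (int i = 0; i < n; i++)
            a[i] = i;                              // distinct values 0..n-1

        // Average over every possible (present) target.
        long total = 0;
        for (int target = 0; target < n; target++)
            total += iterations(a, target);
        System.out.println((double) total / n);    // prints 500.5, about n/2
    }
}
```

The exact average is (n + 1)/2, which is A·n + B with A = 1/2 and B = 1/2, matching the linear model above.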

Example 2: Binary Search

n        The Number of Comparisons
1        1
3        2
7        3
...      ...
n        about log2(n)

$t(n) = t_{\text{init}} + t_{\text{iter}} \cdot \log_2 n$

The average time is approximately $C \cdot \log_2 n$: logarithmic time (for large n).

[Figure: t plotted against n, showing the curve t(n) = C log n]

18-8
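The comparison counts in the table can be reproduced with a short Java sketch of binary search. It assumes a sorted array {0, 1, ..., n-1} and counts one comparison per pass through the loop (each pass examines the middle element); the names are illustrative.

```java
// A minimal sketch: binary search that counts passes through the loop.
class BinarySearch {
    /** Returns the number of passes needed to search the sorted array a for target. */
    static int passes(int[] a, int target) {
        int left = 0, right = a.length - 1;
        int count = 0;
        while (left <= right) {
            count++;
            int middle = (left + right) / 2;
            if (target > a[middle])
                left = middle + 1;
            else if (target < a[middle])
                right = middle - 1;
            else
                break;                      // found
        }
        return count;
    }

    /** Worst case over all targets present in a = {0, 1, ..., n-1}. */
    static int worstCase(int n) {
        int[] a = new int[n];
        for (int i = 0; i < n; i++)
            a[i] = i;
        int worst = 0;
        for (int t = 0; t < n; t++)
            worst = Math.max(worst, passes(a, t));
        return worst;
    }

    public static void main(String[] args) {
        for (int n : new int[]{1, 3, 7, 15, 1023})
            System.out.println(n + " " + worstCase(n));
        // prints: 1 1, 3 2, 7 3, 15 4, 1023 10 (one pair per line)
    }
}
```

For n = 2^k - 1 the array splits into two equal halves at every step, so the worst case is exactly k passes, which is about log2(n).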

Sequential vs. Binary Search

• The logarithmic curve increases without bound when n increases

• But... log grows much more slowly than any linear function.

• No matter what the constants are, the linear “curve” eventually overtakes the logarithmic curve.

[Figure: linear and logarithmic curves, t plotted against n]

18-9

Order of Growth

• For large enough n, a binary search on the slowest computer will finish earlier than a sequential search on the fastest computer.

• It makes sense to talk about the order of growth of a function.

• Constant factors are ignored. For example, the order of growth of any linear function exceeds the order of growth of any logarithmic function.

18-10
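The “slowest computer versus fastest computer” claim can be illustrated numerically. In this Java sketch the costs are hypothetical: binary search runs on a machine 1000 times slower (1000 time units per step, so 1000·log2 n), while sequential search runs at 1 unit per step and needs n/2 steps on average. The sketch finds the first n at which the slow binary search wins.

```java
// A minimal sketch with made-up constants; only the crossover idea matters.
class Crossover {
    static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    // Hypothetical costs in arbitrary time units.
    static double slowBinary(int n)     { return 1000 * log2(n); }  // C = 1000
    static double fastSequential(int n) { return n / 2.0; }         // A = 1/2

    /** Smallest n >= 2 at which the slow binary search is faster. */
    static int firstN() {
        int n = 2;
        while (slowBinary(n) >= fastSequential(n))
            n++;
        return n;
    }

    public static void main(String[] args) {
        System.out.println("slow binary search wins from n = " + firstN());
    }
}
```

Past this point the linear cost keeps pulling further ahead, so changing the constants only moves the crossover; it cannot remove it.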

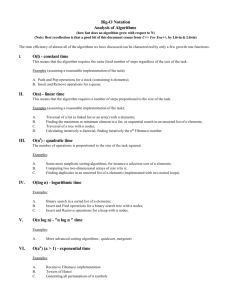

Big-O

Given two functions t(n) and g(n), we say that

$t(n) = O(g(n))$

if there exist a positive constant A and some number N such that

$t(n) \le A \cdot g(n)$

for all n > N.

18-11
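A worked instance of the definition may help. The function below is an illustrative choice, not one from the slides; the point is to exhibit explicit constants A and N.

```latex
t(n) = 3n + 5, \qquad g(n) = n

\text{For } n > 5:\quad t(n) = 3n + 5 < 3n + n = 4n = 4\,g(n)

\text{so } A = 4 \text{ and } N = 5 \text{ satisfy the definition, and } 3n + 5 = O(n).
```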

Big-O (cont’d)

• Big-O means that asymptotically t(n) grows no faster than g(n) (like “t(n) ≤ g(n)”).

• In analysis of algorithms, it is common practice to use big-O for the tightest possible upper bound (like “t(n) = g(n)”).

• Example:

    – Sequential Search is O(n)

    – Binary Search is O(log n)

18-12

Big-O (cont’d)

• For a log, the base is not important. From the Change of Base Theorem,

$\log_b n = K \log n$, where $K = \frac{1}{\log b}$

18-13
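The constant K can be observed directly: the ratio of logs in two different bases is the same for every n. This Java sketch uses Math.log and Math.log10 from the standard library; the helper name logBase is illustrative.

```java
// A minimal sketch: log2(n) / log10(n) is the same constant for every n.
class ChangeOfBase {
    /** Log base b of n, computed from natural logs (change of base). */
    static double logBase(double b, double n) {
        return Math.log(n) / Math.log(b);
    }

    public static void main(String[] args) {
        // Each line prints (up to rounding) K = 1 / log10(2), about 3.32.
        for (double n : new double[]{10, 1000, 1e6, 1e9})
            System.out.println(logBase(2, n) / Math.log10(n));
    }
}
```

Because the ratio is a fixed constant, O(log2 n) and O(log10 n) describe the same order of growth.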

Big-O (cont’d)

• For a polynomial

$P(n) = a_k n^k + a_{k-1} n^{k-1} + \dots + a_1 n + a_0$

the big-O is determined by the degree of the polynomial:

$P(n) = O(n^k)$

18-14
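One way to see why only the degree matters is to find the constants A and N from the big-O definition explicitly. For n ≥ 1, every power n^i with 0 ≤ i ≤ k is at most n^k; the numeric example in the last line is illustrative.

```latex
P(n) \le |a_k|\, n^k + |a_{k-1}|\, n^{k-1} + \dots + |a_1|\, n + |a_0|
     \le \bigl(|a_k| + |a_{k-1}| + \dots + |a_0|\bigr)\, n^k \quad \text{for } n \ge 1

\text{so } A = |a_k| + \dots + |a_0| \text{ and } N = 1 \text{ work.}

\text{Example: } 2n^3 - 5n + 7 \le (2 + 5 + 7)\, n^3 = 14 n^3 \text{ for } n \ge 1
```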

Big-O (cont’d)

• For “triangular” nested loops

for (int i = 0; i < n; i++)
    for (int j = i; j < n; j++)
        ...

the total number of iterations is:

$n + (n-1) + \dots + 2 + 1 = \frac{n(n+1)}{2} = O(n^2)$

18-15
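Counting the inner-loop iterations of the triangular loops confirms the formula. This Java sketch simply increments a counter where the “...” body would go; the class name is illustrative.

```java
// A minimal sketch: count iterations of the triangular nested loops.
class TriangularLoops {
    static long countIterations(int n) {
        long count = 0;
        for (int i = 0; i < n; i++)
            for (int j = i; j < n; j++)
                count++;                    // stands in for the loop body
        return count;
    }

    public static void main(String[] args) {
        for (int n : new int[]{1, 10, 100, 1000})
            System.out.println(n + ": " + countIterations(n)
                    + " vs n(n+1)/2 = " + (long) n * (n + 1) / 2);
    }
}
```

For i = 0 the inner loop runs n times, for i = 1 it runs n - 1 times, and so on down to 1, which is where the sum comes from.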

Big-O Examples

• O(1) — constant time

    – Finding a median value in a sorted array

    – Calculating 1 + 2 + ... + n using the formula for the sum of an arithmetic sequence

    – Push and pop operations in an efficiently implemented stack; add and remove operations in a queue (Chapter 21)

    – Finding a key in a lookup table or a sparsely populated hash table (Chapter 24)

18-16
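The first two O(1) examples take a fixed number of steps no matter how large n is. In this Java sketch the median of an even-length array is taken as the average of the two middle values, which is a common convention and an assumption here; the array is assumed non-empty.

```java
// A minimal sketch of two constant-time computations.
class ConstantTime {
    /** Median of a sorted, non-empty array: at most two array accesses. */
    static double median(double[] sorted) {
        int n = sorted.length;
        if (n % 2 == 1)
            return sorted[n / 2];
        return (sorted[n / 2 - 1] + sorted[n / 2]) / 2;   // even length: average of middle two
    }

    /** 1 + 2 + ... + n from the arithmetic-sequence formula; no loop. */
    static long sumTo(long n) {
        return n * (n + 1) / 2;
    }
}
```

A loop that adds 1 through n would be O(n); the closed-form formula does the same job with three arithmetic operations.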

Big-O Examples

• O(log n) — logarithmic time

    – Binary search in a sorted list of n elements

    – Finding a target value in a binary search tree with n nodes (Chapter 23)

    – add and remove operations in a priority queue, implemented as a heap, with n nodes (Chapter 25)

18-17
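Java's standard java.util.PriorityQueue is documented as a binary heap whose add and remove() operations take O(log n) time, so it can illustrate the last example. This is a usage sketch only; the heap implementation in Chapter 25 may differ.

```java
import java.util.PriorityQueue;

// A minimal sketch: heap-based priority queue operations, O(log n) each.
class PriorityQueueDemo {
    /** Adds all values, then removes them one at a time (smallest first). */
    static int[] addThenRemoveAll(int[] values) {
        PriorityQueue<Integer> pq = new PriorityQueue<>();
        for (int v : values)
            pq.add(v);                    // O(log n) per add
        int[] out = new int[values.length];
        for (int i = 0; i < out.length; i++)
            out[i] = pq.remove();         // removes the smallest; O(log n) per remove
        return out;
    }
}
```

Doing n adds and n removes this way costs O(n log n) in total, which is the idea behind Heapsort.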

Big-O Examples

• O(n) — linear time

    – Traversing a list with n elements (for example, finding max or min)

    – Calculating n factorial or the n-th Fibonacci number iteratively

    – Traversing a binary tree with n nodes (Chapter 23)

18-18
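The iterative factorial and Fibonacci computations each make a single pass of about n steps. This Java sketch assumes the convention fib(1) = fib(2) = 1; note that unlike the “unlimited integers” model, Java's long overflows for factorial beyond 20! and for Fibonacci beyond fib(92).

```java
// A minimal sketch of two linear-time loops.
class LinearTime {
    /** n! by a single loop of n - 1 multiplications (overflows long for n > 20). */
    static long factorial(int n) {
        long result = 1;
        for (int k = 2; k <= n; k++)
            result *= k;
        return result;
    }

    /** n-th Fibonacci number, fib(1) = fib(2) = 1 (overflows long for n > 92). */
    static long fibonacci(int n) {
        if (n == 0)
            return 0;
        long prev = 0, curr = 1;           // fib(0), fib(1)
        for (int k = 1; k < n; k++) {
            long next = prev + curr;
            prev = curr;
            curr = next;
        }
        return curr;
    }
}
```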

Big-O Examples

• O(n log n) — “n log n” time

    – Mergesort and Quicksort (on average) of n elements

    – Heapsort (Chapter 25)

18-19

Big-O Examples

• O(n²) — quadratic time

    – More simplistic sorting algorithms, such as Selection Sort of n elements

    – Traversing an n by n 2-D array

    – Finding duplicates in an unsorted list of n elements (implemented with a nested loop)

18-20
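The duplicate-finding example can be sketched with a nested loop that compares every pair of elements once. In the worst case (no duplicates) it makes n(n-1)/2 comparisons, which is O(n²); the names are illustrative.

```java
// A minimal sketch: quadratic-time duplicate detection.
class Duplicates {
    /** Returns true if some value occurs more than once in a. */
    static boolean hasDuplicates(int[] a) {
        int n = a.length;
        for (int i = 0; i < n; i++)
            for (int j = i + 1; j < n; j++)   // compare each pair once
                if (a[i] == a[j])
                    return true;
        return false;
    }
}
```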

Big-O Examples

• O(aⁿ) (a > 1) — exponential time

    – Recursive Fibonacci implementation (a ≈ 3/2; see Chapter 22)

    – Solving the Tower of Hanoi puzzle (a = 2; see Section 22.5)

    – Generating all possible permutations of n symbols (there are n! of them, which grows even faster than aⁿ for any fixed a)

18-21
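The Tower of Hanoi case (a = 2) is easy to check by counting moves in the standard recursive solution. This Java sketch counts moves instead of printing them; the peg numbering and method name are illustrative.

```java
// A minimal sketch: recursive Tower of Hanoi that returns the number of moves.
class Hanoi {
    /** Moves n disks from peg 'from' to peg 'to' using peg 'spare'. */
    static long solve(int n, int from, int to, int spare) {
        if (n == 0)
            return 0;
        long moves = solve(n - 1, from, spare, to);   // move n-1 disks out of the way
        moves++;                                       // move the largest disk
        moves += solve(n - 1, spare, to, from);        // move n-1 disks onto it
        return moves;
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 5; n++)
            System.out.println(n + " disks: " + solve(n, 1, 3, 2) + " moves");
    }
}
```

The count satisfies moves(n) = 2·moves(n-1) + 1, which gives 2ⁿ - 1: each added disk roughly doubles the work.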

Big-O Summary

O(1) < O(log n) < O(n) < O(n log n) < O(n²) < O(n³) < O(aⁿ)

[Figure: t plotted against n for exponential (t = k·aⁿ), quadratic (t = Cn²), n log n, linear, log n, and constant growth]

18-22

Sorting

• O(n²)

    – By counting

    – Selection Sort

    – Insertion Sort

• O(n log n)

    – Mergesort

    – Quicksort

    – Heapsort

If you are limited to “honest” comparison sorting, any sorting algorithm, in its worst case scenario, takes at least on the order of n log n comparisons.

18-23
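The lower bound comes from a counting argument (the standard decision-tree argument, not spelled out in the slides). A comparison sort must be able to produce any of the n! possible orderings, and each comparison has only two outcomes, so k comparisons can distinguish at most 2^k cases:

```latex
2^k \ge n! \quad\Longrightarrow\quad k \ge \log_2(n!)

n! \ge \left(\tfrac{n}{2}\right)^{n/2} \quad \text{(for even } n\text{, the } n/2 \text{ largest factors are each} \ge n/2\text{)}

k \ge \log_2(n!) \ge \tfrac{n}{2}\,\log_2\tfrac{n}{2}
```

The right-hand side grows like n log n, so no comparison sort can do asymptotically better in its worst case.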

Sorting by Counting — O(n²)

• For each value in the list, count how many values are smaller (or equal with a smaller index).

• Place that value into the position indicated by the count.

Always n² comparisons

for (int i = 0; i < n; i++)
{
    int count = 0;
    for (int j = 0; j < n; j++)
        if (a[j] < a[i] || (a[j] == a[i] && j < i))
            count++;
    b[count] = a[i];
}

18-24
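Wrapped in a method, the sorting-by-counting loop above becomes runnable. In this Java sketch the class name SortByCounting is illustrative (it is chosen to avoid confusion with the different algorithm usually called “counting sort”), and a new array b is returned rather than modified in place.

```java
// A minimal sketch: sorting by counting, always n * n inner iterations.
class SortByCounting {
    static int[] sort(int[] a) {
        int n = a.length;
        int[] b = new int[n];
        for (int i = 0; i < n; i++) {
            // Position of a[i] = number of values that must come before it.
            int count = 0;
            for (int j = 0; j < n; j++)
                if (a[j] < a[i] || (a[j] == a[i] && j < i))   // ties broken by index
                    count++;
            b[count] = a[i];
        }
        return b;
    }
}
```

The index tie-breaker gives equal values distinct positions, so no slot in b is written twice.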

Selection Sort — O(n²)

• Find the largest value and swap it with the last element.

• Decrement the “logical” size of the list and repeat while the size exceeds 1.

Always n(n-1)/2 comparisons

while (n > 1)
{
    int iMax = 0;
    for (int i = 1; i < n; i++)
        if (a[i] > a[iMax])
            iMax = i;
    swap(a, iMax, n - 1);
    n--;
}

18-25
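A runnable Java version of the Selection Sort loop, with a swap helper and a comparison counter, shows that the count is n(n-1)/2 regardless of the input order. The swap helper's body and the counter are additions for illustration.

```java
// A minimal sketch: Selection Sort that returns its comparison count.
class SelectionSort {
    private static void swap(int[] a, int i, int j) {
        int temp = a[i];
        a[i] = a[j];
        a[j] = temp;
    }

    /** Sorts a in ascending order; returns the number of comparisons made. */
    static long sort(int[] a) {
        long comparisons = 0;
        int n = a.length;
        while (n > 1) {
            int iMax = 0;
            for (int i = 1; i < n; i++) {
                comparisons++;
                if (a[i] > a[iMax])
                    iMax = i;
            }
            swap(a, iMax, n - 1);   // largest of a[0..n-1] goes to the end
            n--;                    // shrink the "logical" size
        }
        return comparisons;
    }
}
```

For 10 elements this always reports 9 + 8 + ... + 1 = 45 comparisons, whether the array starts sorted or shuffled.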

Insertion Sort — O(n²)

• Keep the beginning of the list sorted.

• Insert the next value in order into the sorted beginning segment.

O(n²) comparisons on average, but only O(n) when the list is already sorted

for (int i = 1; i < n; i++)
{
    temp = a[i];
    int j;
    for (j = i; j > 0 && a[j-1] > temp; j--)
        a[j] = a[j-1];
    a[j] = temp;
}

18-26
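Counting comparisons in a runnable Java version of Insertion Sort makes the best and worst cases visible. The counter and the while-loop form of the inner loop are illustrative choices; the logic matches the slide's version.

```java
// A minimal sketch: Insertion Sort that returns its comparison count.
class InsertionSort {
    /** Sorts a in ascending order; returns how many times a[j-1] was compared with temp. */
    static long sort(int[] a) {
        long comparisons = 0;
        for (int i = 1; i < a.length; i++) {
            int temp = a[i];
            int j = i;
            // Shift larger elements of the sorted segment a[0..i-1] one step right.
            while (j > 0) {
                comparisons++;
                if (a[j - 1] <= temp)
                    break;              // insertion point found
                a[j] = a[j - 1];
                j--;
            }
            a[j] = temp;
        }
        return comparisons;
    }
}
```

On an already sorted array each new value needs one comparison (n - 1 in total); on a reversed array value i is compared i times, for n(n-1)/2 in total.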

Mergesort — O(n log n)

• Split the list down the middle into two lists of approximately equal length.

• Sort (recursively) each half.

• Merge the two sorted halves into one sorted list.

Each merge takes O(n) comparisons

private void sort(double[] a, int from, int to)
{
    if (to == from)
        return;
    int middle = (from + to) / 2;
    sort(a, from, middle);
    sort(a, middle + 1, to);
    merge(a, from, middle, middle + 1, to);
}

18-27
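The slide does not show merge. This self-contained Java sketch supplies one possible merge that uses a temporary array allocated once; its parameter list differs from the slide's merge(a, from, middle, middle + 1, to) and is an assumption, as is the static wrapper sort(double[]).

```java
// A minimal sketch of Mergesort on a[from..to] (inclusive bounds, as on the slide).
class Mergesort {
    static void sort(double[] a) {
        if (a.length > 0)
            sort(a, 0, a.length - 1, new double[a.length]);
    }

    private static void sort(double[] a, int from, int to, double[] temp) {
        if (to <= from)
            return;                            // 0 or 1 element: already sorted
        int middle = (from + to) / 2;
        sort(a, from, middle, temp);
        sort(a, middle + 1, to, temp);
        merge(a, from, middle, to, temp);
    }

    // Merges sorted a[from..middle] and a[middle+1..to]; at most to - from comparisons.
    private static void merge(double[] a, int from, int middle, int to, double[] temp) {
        int i = from, j = middle + 1, k = from;
        while (i <= middle && j <= to)
            temp[k++] = (a[i] <= a[j]) ? a[i++] : a[j++];
        while (i <= middle)
            temp[k++] = a[i++];                // copy leftovers from the left half
        while (j <= to)
            temp[k++] = a[j++];                // copy leftovers from the right half
        for (k = from; k <= to; k++)
            a[k] = temp[k];
    }
}
```

Each level of recursion merges n elements in total and there are about log2 n levels, which gives O(n log n).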

Quicksort — O(n log n)

• Choose a “pivot” element.

• Partition the array so that all the values to the left of the pivot are smaller than or equal to it, and all the elements to the right of the pivot are greater than or equal to it.

• Sort (recursively) the left-of-the-pivot and right-of-the-pivot pieces.

Partitioning: proceed from both ends of the array as far as possible; when both values are on the wrong side of the pivot, swap them.

O(n log n) comparisons, on average, if partitioning splits the array evenly most of the time

18-28
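Here is one common way to implement the “proceed from both ends and swap” partitioning in Java. It is a variant chosen for illustration: the pivot value is taken from the middle element, and the pivot itself is not moved to a fixed final position; the recursion simply continues on the two parts the scans leave behind.

```java
// A minimal sketch of Quicksort with two-ended (Hoare-style) partitioning.
class Quicksort {
    static void sort(int[] a) {
        sort(a, 0, a.length - 1);
    }

    private static void sort(int[] a, int from, int to) {
        if (from >= to)
            return;
        int pivot = a[(from + to) / 2];      // middle element's value as the pivot
        int i = from, j = to;
        while (i <= j) {
            while (a[i] < pivot) i++;        // a[i] already on the correct (left) side
            while (a[j] > pivot) j--;        // a[j] already on the correct (right) side
            if (i <= j) {                    // both on the wrong side (or equal to pivot): swap
                int temp = a[i];
                a[i] = a[j];
                a[j] = temp;
                i++;
                j--;
            }
        }
        // Now a[from..j] <= pivot and a[i..to] >= pivot.
        sort(a, from, j);
        sort(a, i, to);
    }
}
```

Choosing the middle element avoids the sorted-array worst case described in the summary, although other inputs can still force O(n²).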

Heapsort — O(n log n)

• An elegant algorithm based on heaps (Chapter 25)

18-29
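Ahead of Chapter 25, a compact in-place Heapsort may help. This Java sketch assumes the usual array layout of a binary heap (children of node i at 2i + 1 and 2i + 2) and a max-heap; the book's own implementation may differ.

```java
// A minimal sketch of in-place Heapsort using a max-heap stored in the array.
class Heapsort {
    static void sort(int[] a) {
        int n = a.length;
        // Build a max-heap: sift down every non-leaf node, last to first.
        for (int i = n / 2 - 1; i >= 0; i--)
            siftDown(a, i, n);
        // Repeatedly move the largest value to the end of the unsorted part.
        for (int end = n - 1; end > 0; end--) {
            swap(a, 0, end);
            siftDown(a, 0, end);       // O(log n) per step
        }
    }

    // Restores the heap property for the subtree rooted at i within a[0..size-1].
    private static void siftDown(int[] a, int i, int size) {
        while (2 * i + 1 < size) {
            int child = 2 * i + 1;                          // left child
            if (child + 1 < size && a[child + 1] > a[child])
                child++;                                    // right child is larger
            if (a[i] >= a[child])
                return;
            swap(a, i, child);
            i = child;
        }
    }

    private static void swap(int[] a, int i, int j) {
        int temp = a[i];
        a[i] = a[j];
        a[j] = temp;
    }
}
```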

Sorting Summary

| Algorithm | Best case | Average case | Worst case |
|---|---|---|---|
| Selection Sort | O(n²) | O(n²) | O(n²) |
| Insertion Sort | O(n), array already sorted | O(n²) | O(n²) |
| Mergesort | O(n log n), or O(n) in a slightly modified version when the array is sorted | O(n log n) | O(n log n) |
| Quicksort | O(n log n) | O(n log n) | O(n²), pivot is consistently chosen far from the median value (e.g., the array is already sorted and the first element is chosen as pivot) |
| Heapsort | O(n log n) | O(n log n) | O(n log n) |

18-30

Review:

• What assumptions are made for big-O analysis?

• What O(...) expressions are commonly used to measure the big-O performance of algorithms?

• Is it true that n(n-1)/2 = O(n²)?

• Give an example of an algorithm that runs in O(log n) time.

• Give an example of an algorithm (apart from sorting) that runs in O(n²) time.

18-31

Review (cont’d):

• Name three O(n log n) sorting algorithms.

• How many comparisons are needed in sorting by counting? In Selection Sort?

• What is the main idea of Insertion Sort?

• What is the best case for Insertion Sort?

• What is the main idea of Quicksort?

• What is the worst case for Quicksort?

18-32