Learning Bayesian Networks from Data

advertisement

K2 Algorithm Presentation

Learning Bayes Networks from Data

Haipeng Guo

Friday, April 21, 2000

KDD Lab, CIS Department, KSU

Presentation Outline

•

Bayes Networks Introduction

•

What’s K2?

•

Basic Model and the Score Function

•

K2 algorithm

•

Demo

Bayes Networks Introduction

• A Bayes network B = (Bs, Bp)

• A Bayes Network structure Bs is a directed

acyclic graph in which nodes represent

random domain variables and arcs between

nodes represent probabilistic independence.

• Bs is augmented by conditional probabilities,

Bp, to form a Bayes Network B.

Bayes Networks Introduction

• Example: Sprinkler

- Bs of Bayes Network: the structure

Sprinkler

Season

x2

x1

Ground_moist

x4

Rain

x3

Ground_state

x5

Bayes Networks Introduction

- Bp of Bayes Network: the conditional probability

season

P(Spring)

0.25

P(Summer)

0.25

P(Fall)

0.25

P(Winter)

0.25

sprinkler

Season

Spring

Summer

Fall

Winter

P(on)

0.75

1

0.75

0.25

P(off)

0.25

0

0.25

0.75

Rain , Ground-moist, and Ground-state

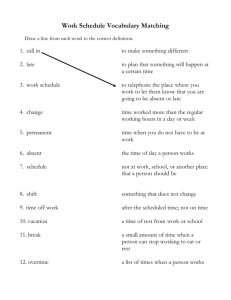

What’s K2?

• K2 is an algorithm for constructing a Bayes

Network from a database of records

• “A Bayesian Method for the Induction of

Probabilistic Networks from Data”, Gregory

F. Cooper and Edward Herskovits, Machine

Learning 9, 1992

Basic Model

•

The problem: to find the most probable

Bayes-network structure given a database

•

D – a database of cases

•

Z – the set of variables represented by D

•

Bsi , Bsj – two bayes network structures

containing exactly those variables that are in

Z

Basic Model

P( BSi , D)

P( BSi | D)

P( BSi , D)

P( D)

P( BSj | D) P( BSj , D) P( BSj , D)

P( D)

• By computing such ratios for pairs of bayes network

structures, we can rank order a set of structures by

their posterior probabilities.

• Based on four assumptions, the paper introduces an

efficient formula for computing P(Bs,D), let B represent

an arbitrary bayes network structure containing just the

variables in D

Computing P(Bs,D)

• Assumption 1 The database variables, which we

denote as Z, are discrete

• Assumption 2 Cases occur independently, given a

bayes network model

• Assumption 3 There are no cases that have

variables with missing values

• Assumption 4 The density function f(Bp|Bs) is

uniform. Bp is a vector whose values denotes the

conditional-probability assignment associated with

structure Bs

Computing P(Bs,D)

ri

(ri 1)!

P( Bs , D) P( Bs )

N ij N ijk !

i 1 j 1 ( N ij ri 1)!

k 1

n

Where

qi

D - dataset, it has m cases(records)

Z - a set of n discrete variables: (x1, …, xn)

ri - a variable xi in Z has ri possible value assignment: (vi1 ,...vir )

i

Bs - a bayes network structure containing just the variables in Z

i - each variable xi in Bs has a set of parents which we represent

with a list of variables i

qi - there are has unique instantiations of i

wij - denote jth unique instantiation of i relative to D.

Nijk - the number of cases in D in which variable xi has the value of

vik and i is instantiated as wij.

Nij - N ij

ri

N

k 1

ijk

Decrease the computational complexity

Three more assumptions to decrease the computational

complexity to polynomial-time:

<1> There is an ordering on the nodes such that if xi precedes

xj, then we do not allow structures in which there is an arc from

xj to xi .

<2> There exists a sufficiently tight limit on the number of

parents of any nodes

<3> P(i xi) and P(j xj) are independent when i j.

ri

(ri 1)!

max [ P( Bs , D)] [ P( i xi )

N ij N ijk !

Bs

i 1

j 1 ( N ij ri 1)!

k 1

n

qi

K2 algorithm: a heuristic search method

Use the following functions:

(ri 1)! ri

g (i, i )

N ijk !

j 1 ( N ij ri 1)! k 1

qi

Where the Nijk are relative to i being the parents of xi and

relative to a database D

Pred(xi) = {x1, ... xi-1}

It returns the set of nodes that precede xi in the node

ordering

K2 algorithm: a heuristic search method

{Input: A set of nodes, an ordering on the nodes, an

upper bound u on the number of parents a node may

have, and a database D containing m cases}

{Output: For each nodes, a printout of the parents of the

node}

K2 algorithm: a heuristic search method

Procedure K2

For i:=1 to n do

i = ;

Pold = g(i, i );

OKToProceed := true

while OKToProceed and | i |<u do

let z be the node in Pred(xi)- i that maximizes g(i, i {z});

Pnew = g(i, i {z});

if Pnew > Pold then

Pold := Pnew ;

i :=i {z} ;

else OKToProceed := false;

end {while}

write(“Node:”, “parents of this nodes :”, i );

end {for}

end {K2}

Conditional probabilities

• Let ijk denote the conditional probabilities P(xi =vik | i = wij )-that is,

the probability that xi has value v for some k from 1 to ri , given that

the parents of x , represented by , are instantiated as wij. We call ijk a

network conditional probability.

• Let be the four assumptions.

• The expected value of ijk :

E[ ijk | D, Bs , ]

( N ijk 1)

( N ij ri )

Demo Example

Input:

Case

1

2

3

4

5

6

7

8

9

10

x1

present

present

absent

present

absent

absent

present

absent

present

absent

x2

absent

present

absent

present

absent

present

present

absent

present

absent

x3

absent

present

present

present

absent

present

present

absent

present

absent

The dataset is generated from the following structure:

x1

x2

x3

Demo Example

Note:

-- use log[g(i, i )] instead of g(i, i ) to save running time