MyInternetIsSlow

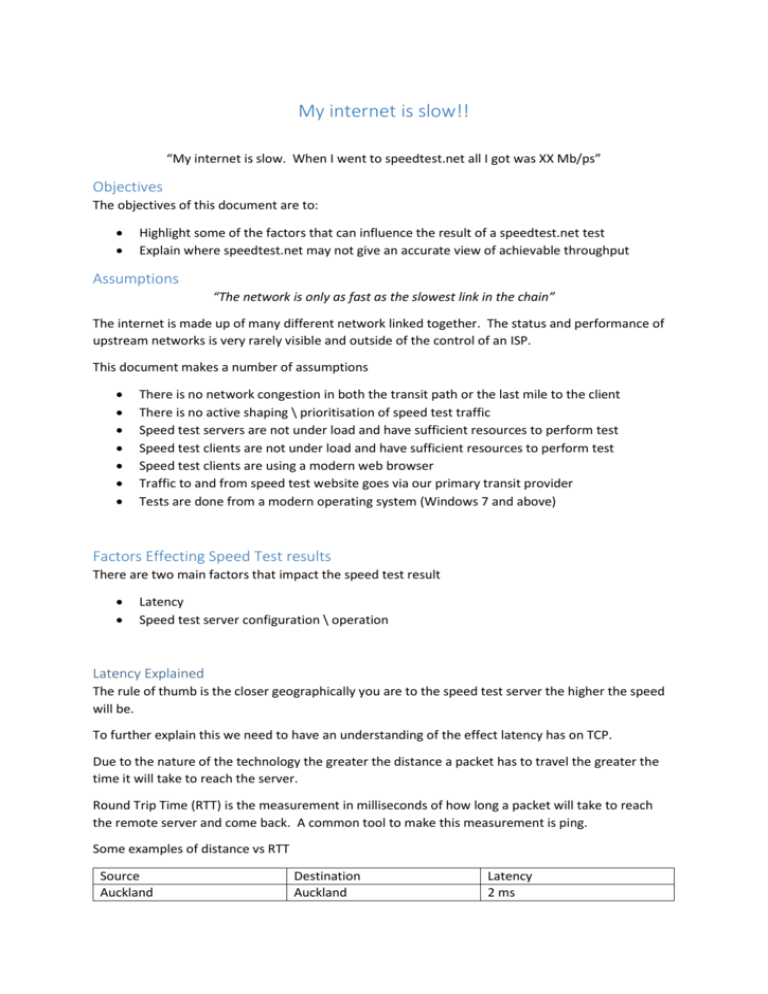

My internet is slow!!

“My internet is slow. When I went to speedtest.net all I got was XX Mbps”

Objectives

The objectives of this document are to:

- Highlight some of the factors that can influence the result of a speedtest.net test
- Explain where speedtest.net may not give an accurate view of achievable throughput

Assumptions

“The network is only as fast as the slowest link in the chain”

The internet is made up of many different networks linked together. The status and performance of upstream networks are rarely visible to an ISP and are outside its control.

This document makes a number of assumptions:

- There is no network congestion in either the transit path or the last mile to the client
- There is no active shaping / prioritisation of speed test traffic
- Speed test servers are not under load and have sufficient resources to perform the test
- Speed test clients are not under load and have sufficient resources to perform the test
- Speed test clients are using a modern web browser
- Traffic to and from the speed test website goes via our primary transit provider
- Tests are done from a modern operating system (Windows 7 and above)

Factors Affecting Speed Test Results

There are two main factors that impact the speed test result:

- Latency
- Speed test server configuration / operation

Latency Explained

The rule of thumb is that the closer you are geographically to the speed test server, the higher the speed will be. To explain this further we need to understand the effect latency has on TCP.

The greater the distance a packet has to travel, the longer it takes to reach the server. Round Trip Time (RTT) is the time, measured in milliseconds, that a packet takes to reach the remote server and come back. A common tool for making this measurement is ping.
Some examples of distance vs RTT:

| Source | Destination | Latency |
| --- | --- | --- |
| Auckland | Auckland | 2 ms |
| Auckland | Wellington | 10 ms |
| Auckland | Sydney | 30 ms |
| Auckland | Singapore | 130 ms |
| Auckland | Los Angeles | 180 ms |

As we can see, the latency increases as the distance increases. To see the effect this has on throughput, let's quickly review how TCP works. A very simplistic description of the way TCP transfers data is:

1. The sender sends some data and then waits for an acknowledgement from the receiver before sending any more data
2. The receiver sends an acknowledgement that the data was received
3. The sender sends the next block of data and the process repeats

The time spent waiting grows with the RTT. The amount of data that must be in flight to keep a link busy (the link bandwidth multiplied by the RTT) is referred to as the Bandwidth Delay Product. So for transfers over a shorter distance less time is spent “waiting”, and over a given time period more data is transferred.

Automotive Example

To put this in automotive terms, let us use the example of two Auckland based trucking companies. Both companies have the same truck. The trucks have the same capacity and travel at the same speed (ignoring road type, speed zone etc). When a delivery is made the truck must reach the destination and then return to base before the next delivery can be made.

Company A only does Auckland deliveries whereas Company B only does Auckland to Hamilton deliveries. During an 8 hour day Company A can do more deliveries than Company B because it has less distance to travel, so the time per delivery is less.

Time taken per delivery = latency.

Latency vs Throughput Bench Testing

Testing Topology

Three virtual Debian 6 machines are set up inside VMware Workstation. IP forwarding is enabled on the WAN Emulator machine.

[Figure: testing topology. Host 1 and the WAN Emulator share 10.0.0.0/30 (addresses .1 and .2); the WAN Emulator and Host 2 share 10.0.0.4/30 (addresses .5 and .6).]

Low Latency Testing

Using ping we establish that the RTT from Host 1 to Host 2 is around 1 ms. We then use iperf to generate a TCP stream and see just under 100 Mbps of throughput. This gives us a baseline of throughput.
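As a rough illustration of the latency discussion above, the following Python sketch estimates the ceiling on a single TCP connection for each of the example RTTs, using the rule that at most one window of data can be in flight per round trip. The 64 KiB window is an assumed value chosen for illustration and is not a figure from these tests; real TCP stacks adjust the window, so measured results will differ. The function names are also illustrative.

```python
# Rough model: one TCP connection can have at most one window of data
# in flight per round trip, so throughput <= window / RTT.
# WINDOW_BYTES is an assumed value for illustration, not a measurement.

WINDOW_BYTES = 64 * 1024  # assumed 64 KiB window (hypothetical)

# RTT examples from Auckland to each destination, in milliseconds.
RTT_MS = {
    "Auckland": 2,
    "Wellington": 10,
    "Sydney": 30,
    "Singapore": 130,
    "Los Angeles": 180,
}


def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-connection throughput in megabits per second."""
    rtt_s = rtt_ms / 1000.0
    return (window_bytes * 8) / rtt_s / 1_000_000


def bdp_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bandwidth delay product: bytes in flight needed to fill the link."""
    return link_mbps * 1_000_000 / 8 * (rtt_ms / 1000.0)


if __name__ == "__main__":
    for city, rtt in RTT_MS.items():
        limit = max_throughput_mbps(WINDOW_BYTES, rtt)
        print(f"{city:12s} {rtt:4d} ms  <= {limit:8.1f} Mbps")
    print(f"BDP for 100 Mbps at 180 ms: {bdp_bytes(100, 180):.0f} bytes")
```

Under this assumed window the ceiling falls in direct proportion to the RTT, which is the same trend the bench tests show.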
50ms Latency Testing

First we add 50ms to the RTT by configuring a 25ms delay on traffic entering and leaving the WAN emulator. Pings from Host 1 to Host 2 are now higher than before. When we perform the same speed test the results are lower than before.

100ms Latency Testing

We change the WAN emulator to add 100ms to the RTT by configuring a 50ms delay on traffic entering and leaving the WAN emulator. Pings from Host 1 to Host 2 are now higher than before. When we perform the same speed test the results are lower than before.

200ms Latency Testing

We change the WAN emulator to add 200ms to the RTT by configuring a 100ms delay on traffic entering and leaving the WAN emulator. Pings from Host 1 to Host 2 are now higher than before. When we perform the same speed test the results are lower than before.

From this basic testing we see that as latency increases, throughput drops.

Multiple Connections

One method to increase throughput is to have multiple connections at once. If we use the 200ms latency test environment and increase the number of connections, overall throughput increases.

[Figure: Using 4 connections]

[Figure: Using 8 connections]

The number of connections used is decided by the application and the servers being connected to. The network in the middle (the ISP) does not control this.

To revisit the automotive example from earlier, using multiple connections is the same as Company B (who does the Auckland to Hamilton deliveries) adding additional trucks to their fleet.

TCP Window Size

TCP send and receive window sizing can also influence TCP throughput, but it is outside the scope of this document.
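A rough way to picture the effect of multiple connections is to treat each connection as limited to one window of data per round trip, with the total capped by the link rate. The Python sketch below does this for the 200ms case. The per-connection window of 64 KiB and the 100 Mbps link rate are assumptions for illustration (the link rate is taken loosely from the bench baseline), and the function name is hypothetical; the figures it prints are model output, not test results.

```python
# Rough model of parallel TCP connections sharing one link.
# Each connection is limited to window / RTT; the total cannot exceed
# the link rate. WINDOW_BYTES and LINK_MBPS are assumed values.

WINDOW_BYTES = 64 * 1024  # assumed per-connection window (hypothetical)
LINK_MBPS = 100.0         # assumed link rate, roughly the bench baseline


def aggregate_mbps(connections: int, rtt_ms: float,
                   window_bytes: int = WINDOW_BYTES,
                   link_mbps: float = LINK_MBPS) -> float:
    """Estimated total throughput of several connections, in Mbps."""
    per_connection = (window_bytes * 8) / (rtt_ms / 1000.0) / 1_000_000
    return min(link_mbps, connections * per_connection)


if __name__ == "__main__":
    for n in (1, 4, 8):
        print(f"{n} connection(s) at 200 ms: {aggregate_mbps(n, 200):.2f} Mbps")
```

In this model throughput scales with the number of connections until the link itself becomes the limit, which matches the "more trucks" analogy.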
Further reading:

- http://en.wikipedia.org/wiki/Transmission_Control_Protocol
- http://en.wikipedia.org/wiki/Bandwidth-delay_product

Speed test server configuration / operation

“Not all speed tests are created equal”

How speedtest.net works

To get a better understanding of how speedtest.net testing works, Wireshark was used to capture the traffic and “lift the bonnet”, so to speak, on the testing process.

* The upload test is ignored as the majority of end user interest is in download speeds.

Test 1 – tools.nztechnologygroup.com

This server is located in the Vocus data centre in Albany. It is running the free speedtest.net mini software.

- First we see 2x HTTP GETs to download random350x350.jpg
- We then see 2x HTTP GETs to download random2000x2000.jpg

Test 2 – latools.nztechnologygroup.com

This server is a VPS hosted in Los Angeles running the same speedtest.net mini software.

- Once again we see 2x HTTP GETs to download random350x350.jpg
- Next we see 2x HTTP GETs to download random1000x1000.jpg

It is interesting that we get two different behaviours from what is essentially the same software.

Test 3 – Using Sydney based Optus speedtest.net server

- Once again we see 2x HTTP GETs to download random350x350.jpg
- Then we see 2x HTTP GETs to download random750x750.jpg
- Then we see 2x HTTP GETs to download random1000x1000.jpg

Test 4 – Using San Jose based Internode speedtest.net server

- First we see 10x HTTP GETs to download latency.txt
- Followed by 1x HTTP GET to download random1000x1000.jpg
- Then we see 4x HTTP GETs to download random750x750.jpg

From 4 different speed test servers we have observed 4 different behaviours.

Speedtest.net has published information about how the tests are performed. This is located at: https://support.speedtest.net/entries/20862782-how-does-the-test-itself-work-how-is-the-resultcalculated

Looking through this article a couple of points stand out:

- Speedtest.net tests for latency and modifies its behaviour accordingly.
  - One commonality between the 4 tests is the initial download of random350x350.jpg
  - random350x350.jpg is approximately 239KB
  - This file appears to be what is used to estimate the download speed

  Earlier in this document we highlighted the difference in latency between traffic from NZ to Australia and to the US. This goes some way towards explaining why speed tests to Australia and the US are quite different.

- Speedtest.net tests aim to be completed within 10 seconds
- Speedtest.net may use up to 4 connections to saturate a link
  - Earlier in the document we highlighted that using multiple connections is a way to boost throughput.

Looking through the speedtest.net mini files there does not appear to be a way to modify or force a particular behaviour.

NetGauge

Ookla, the company behind the speedtest.net software, offers a number of additional tools that can be purchased. One of these is NetGauge (http://www.ookla.com/netgauge). This software is more feature rich and allows a number of configurations.

One item of interest is the Client Configuration Templates: http://www.ookla.com/support/a23000438/netgauge%3A+client+configuration

Depending on which configuration template is selected, the number of connections used can be modified. More connections = more throughput.

Real World Testing

Below are the results from testing using latools.nztechnologygroup.com as the end point. A UFB 100/50 (evolve 4) connection was used for client connectivity. A number of different tests were performed and they gave a number of different results. The results are presented here to show how greatly results can vary depending on the testing method.

[Figure: Speedtest.net Mini]

[Figure: NetGauge using Broadband template]

[Figure: NetGauge using Fiber template]

As we can see, which testing software is used, and how it is configured, can be the decider between a support call occurring or not.
Command Line Testing

This testing was done using a Debian 6 client downloading a single file, “100mb.bin”, from latools.nztechnologygroup.com to simulate a real world example (downloading a large file).

[Figure: HTTP download using a single TCP connection]

[Figure: HTTP download using 4 connections] (2444.08 Kilobytes per second = 19.0938 Megabits per second)

[Figure: HTTP download using 8 connections] (3128.78 Kilobytes per second = 24.44 Megabits per second)

Summary

As demonstrated, there are a number of factors that can result in a user getting a lower than expected result when using speedtest.net. Namely:

- Latency due to the distance travelled
- Configuration of the speedtest.net software

Speedtest.net results should only be taken as a rough guide or starting point, not a definitive test of achievable throughput.

At the time of writing we have reached out to Ookla to set up our own NetGauge sites.
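The kilobytes-per-second figures quoted for the command line tests can be converted to megabits per second with a short Python sketch. It assumes a divisor of 1024 (kilobytes × 8 ÷ 1024), which is an inference from the quoted numbers and not something the source states; with that assumption the results agree with the quoted figures to within rounding. The function name is illustrative.

```python
def kbytes_per_s_to_mbps(kbytes_per_s: float) -> float:
    """Convert kilobytes per second to megabits per second.

    Assumes 8 bits per byte and a 1024 divisor (an assumption that
    approximately reproduces the figures quoted in this document).
    """
    return kbytes_per_s * 8 / 1024


if __name__ == "__main__":
    # Download rates quoted for the 4 and 8 connection tests.
    for connections, rate in ((4, 2444.08), (8, 3128.78)):
        mbps = kbytes_per_s_to_mbps(rate)
        print(f"{connections} connections: {rate} KB/s is about {mbps:.2f} Mbps")
```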