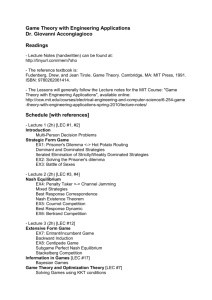

EECC722 - Shaaban

advertisement

Advanced Computer Architecture

Course Goal:

Understanding important emerging design techniques, machine

structures, technology factors, evaluation methods that will

determine the form of high-performance programmable processors and

computing systems in 21st Century.

Important Factors:

•

•

Driving Force: Applications with diverse and increased computational demands

even in mainstream computing (multimedia etc.)

Techniques must be developed to overcome the major limitations of current

computing systems to meet such demands:

– ILP limitations, Memory latency, IO performance.

– Increased branch penalty/other stalls in deeply pipelined CPUs.

– General-purpose processors as only homogeneous system computing

resource.

• Enabling Technology for many possible solutions:

– Increased density of VLSI logic (one billion transistors in 2004?)

– Enables a high-level of system-level integration.

EECC722 - Shaaban

#1 Lec # 1 Fall 2003 9-8-2003

Topics we will cover include:

•

Course Topics

Overcoming inherent ILP limitations by exploiting Thread-level

Parallelism (TLP):

– Support for Simultaneous Multithreading (SMT).

• Alpha EV8. Intel P4 Xeon (aka Hyper-Threading), IBM Power5.

– Introduction to Multiprocessors:

• Chip Multiprocessors (CMPs): The Hydra Project. IBM Power4, 5

•

Memory Latency Reduction:

– Conventional & Block-based Trace Cache (Intel P4).

•

Advanced Branch Prediction Techniques.

•

•

Towards micro heterogeneous computing systems:

– Vector processing. Vector Intelligent RAM (VIRAM).

– Digital Signal Processing (DSP) & Media Architectures &

Processors.

– Re-Configurable Computing and Processors.

Virtual Memory Implementation Issues.

•

High Performance Storage: Redundant Arrays of Disks (RAID).

EECC722 - Shaaban

#2 Lec # 1 Fall 2003 9-8-2003

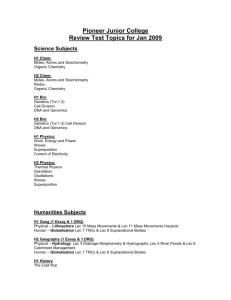

Computer System Components

L1

1000MHZ - 3 GHZ (a multiple of system bus speed)

Pipelined ( 7 -21 stages )

Superscalar (max ~ 4 instructions/cycle) single-threaded

Dynamically-Scheduled or VLIW

Dynamic and static branch prediction

CPU

L2

SDRAM

PC100/PC133

100-133MHZ

64-128 bits wide

2-way inteleaved

~ 900 MBYTES/SEC

L3

Double Date

Rate (DDR) SDRAM

PC3200

400MHZ (effective 200x2)

64-128 bits wide

4-way interleaved

~3.2 GBYTES/SEC

(second half 2002)

RAMbus DRAM (RDRAM)

PC800, PC1060

400-533MHZ (DDR)

16-32 bits wide channel

~ 1.6 - 3.2 GBYTES/SEC

( per channel)

Caches

System Bus

Examples: Alpha, AMD K7: EV6, 400MHZ

Intel PII, PIII: GTL+ 133MHZ

Intel P4

800MHZ

Support for one or more CPUs

adapters

Memory

Controller

Memory Bus

I/O Buses

NICs

Controllers

Example: PCI-X 133MHZ

PCI, 33-66MHZ

32-64 bits wide

133-1024 MBYTES/SEC

Memory

Disks

Displays

Keyboards

North

Bridge

South

Bridge

Networks

I/O Devices:

Fast Ethernet

Gigabit Ethernet

ATM, Token Ring ..

Chipset

EECC722 - Shaaban

#3 Lec # 1 Fall 2003 9-8-2003

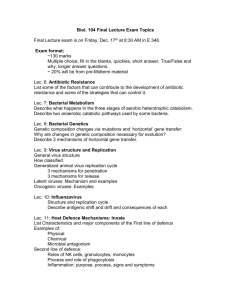

Computer System Components

Enhanced CPU Performance & Capabilities:

Memory Latency Reduction:

Conventional &

Block-based

Trace Cache.

L1

•

•

•

•

•

Support for Simultaneous Multithreading (SMT): Alpha EV8.

VLIW & intelligent compiler techniques: Intel/HP EPIC IA-64.

More Advanced Branch Prediction Techniques.

Chip Multiprocessors (CMPs): The Hydra Project. IBM Power 4,5

Vector processing capability: Vector Intelligent RAM (VIRAM).

Or Multimedia ISA extension.

• Digital Signal Processing (DSP) capability in system.

• Re-Configurable Computing hardware capability in system.

SMT

CMP

CPU

L2

Integrate Memory

Controller & a portion

of main memory with

CPU: Intelligent RAM

Integrated memory

Controller:

AMD Opetron

IBM Power5

L3

Caches

System Bus

adapters

Memory

Controller

Memory Bus

I/O Buses

NICs

Controllers

Memory

Disks (RAID)

Displays

Keyboards

North

Bridge

South

Bridge

Networks

I/O Devices:

Chipset

EECC722 - Shaaban

#4 Lec # 1 Fall 2003 9-8-2003

EECC551 Review

•

•

•

•

•

•

•

•

•

•

Recent Trends in Computer Design.

A Hierarchy of Computer Design.

Computer Architecture’s Changing Definition.

Computer Performance Measures.

Instruction Pipelining.

Branch Prediction.

Instruction-Level Parallelism (ILP).

Loop-Level Parallelism (LLP).

Dynamic Pipeline Scheduling.

Multiple Instruction Issue (CPI < 1): Superscalar vs. VLIW

• Dynamic Hardware-Based Speculation

• Cache Design & Performance.

EECC722 - Shaaban

#5 Lec # 1 Fall 2003 9-8-2003

Recent Trends in Computer Design

• The cost/performance ratio of computing systems have seen a

steady decline due to advances in:

– Integrated circuit technology: decreasing feature size,

• Clock rate improves roughly proportional to improvement in

• Number of transistors improves proportional to (or faster).

– Architectural improvements in CPU design.

• Microprocessor systems directly reflect IC improvement in terms

of a yearly 35 to 55% improvement in performance.

• Assembly language has been mostly eliminated and replaced by

other alternatives such as C or C++

• Standard operating Systems (UNIX, NT) lowered the cost of

introducing new architectures.

• Emergence of RISC architectures and RISC-core architectures.

• Adoption of quantitative approaches to computer design based on

empirical performance observations.

EECC722 - Shaaban

#6 Lec # 1 Fall 2003 9-8-2003

Microprocessor Architecture Trends

CISC Machines

instructions take variable times to complete

RISC Machines (microcode)

simple instructions, optimized for speed

RISC Machines (pipelined)

same individual instruction latency

greater throughput through instruction "overlap"

Superscalar Processors

multiple instructions executing simultaneously

Multithreaded Processors

VLIW

additional HW resources (regs, PC, SP) "Superinstructions" grouped together

each context gets processor for x cycles decreased HW control complexity

CMPs

Single Chip Multiprocessors

duplicate entire processors

(tech soon due to Moore's Law)

SIMULTANEOUS MULTITHREADING (SMT)

multiple HW contexts (regs, PC, SP)

each cycle, any context may execute

SMT/CMPs (e.g. IBM Power5 in 2004)

EECC722 - Shaaban

#7 Lec # 1 Fall 2003 9-8-2003

1988 Computer Food Chain

Mainframe

Supercomputer

Minisupercomputer

Work- PC

Ministation

computer

Massively Parallel

Processors

EECC722 - Shaaban

#8 Lec # 1 Fall 2003 9-8-2003

Massively Parallel Processors

Minisupercomputer

Minicomputer

1997- Computer Food Chain

Clusters

Mainframe

Server

Work- PC PDA

station

Supercomputer

EECC722 - Shaaban

#9 Lec # 1 Fall 2003 9-8-2003

Processor Performance Trends

Mass-produced microprocessors a cost-effective high-performance

replacement for custom-designed mainframe/minicomputer CPUs

1000

Supercomputers

100

Mainframes

10

Minicomputers

Microprocessors

1

0.1

1965

1970

1975

1980 1985

Year

1990

1995

2000

EECC722 - Shaaban

#10 Lec # 1 Fall 2003 9-8-2003

Microprocessor Performance

1987-97

1200

DEC Alpha 21264/600

1000

800

Integer SPEC92 Performance

600

DEC Alpha 5/500

400

200

0

DEC Alpha 5/300

DEC

HP

SunMIPSMIPSIBM 9000/AXP/

RS/

DEC Alpha 4/266

500

-4/ M M/

750

6000

IBM POWER 100

260 2000 120

87 88 89 90 91 92 93 94 95 96 97

EECC722 - Shaaban

#11 Lec # 1 Fall 2003 9-8-2003

Microprocessor Frequency Trend

10,000

100

Intel

DEC

Gate delays/clock

21264S

1,000

Mhz

21164A

21264

Pentium(R)

21064A

21164

II

21066

MPC750

604

604+

10

Pentium Pro

601, 603 (R)

Pentium(R)

100

Result:

486

386

2005

2003

2001

1999

1997

1995

1993

1991

1

1989

10

1987

Gate Delays/ Clock

Processor freq

scales by 2X per

generation

IBM Power PC

Deeper Pipelines

Longer stalls

Higher CPI

Frequency doubles each generation

Number of gates/clock reduce by 25%

EECC722 - Shaaban

#12 Lec # 1 Fall 2003 9-8-2003

Microprocessor Transistor

Count Growth Rate

100000000

One billion in 2004?

Alpha 21264: 15 million

Pentium Pro: 5.5 million

PowerPC 620: 6.9 million

Alpha 21164: 9.3 million

Sparc Ultra: 5.2 million

10000000

Moore’s Law

Pentium

i80486

Transistors

1000000

i80386

i80286

100000

Moore’s Law:

i8086

10000

i8080

i4004

1000

1970

1975

1980

1985

Year

1990

1995

2000

2X transistors/Chip

Every 1.5 years

Still valid possibly

until 2010

EECC722 - Shaaban

#13 Lec # 1 Fall 2003 9-8-2003

Increase of Capacity of VLSI Dynamic RAM Chips

size

year

size(Megabit)

1980

0.0625

1983

0.25

1986

1

1989

4

1992

16

1996

64

1999

256

2000

1024

1000000000

100000000

Bits

10000000

1000000

100000

10000

1000

1970

1975

1980

1985

Year

1990

1995

2000

1.55X/yr,

or doubling every 1.6

years

EECC722 - Shaaban

#14 Lec # 1 Fall 2003 9-8-2003

Recent Technology Trends

(Summary)

Capacity

Speed (latency)

Logic 2x in 3 years

2x in 3 years

DRAM 4x in 3 years

2x in 10 years

Disk

2x in 10 years

4x in 3 years

Result: Widening gap between CPU performance &

memory/IO performance

EECC722 - Shaaban

#15 Lec # 1 Fall 2003 9-8-2003

Computer Technology Trends:

Evolutionary but Rapid Change

• Processor:

– 2X in speed every 1.5 years; 100X performance in last decade.

• Memory:

– DRAM capacity: > 2x every 1.5 years; 1000X size in last decade.

– Cost per bit: Improves about 25% per year.

• Disk:

–

–

–

–

Capacity: > 2X in size every 1.5 years.

Cost per bit: Improves about 60% per year.

200X size in last decade.

Only 10% performance improvement per year, due to mechanical

limitations.

• Expected State-of-the-art PC by end of year 2003 :

– Processor clock speed:

– Memory capacity:

– Disk capacity:

> 3400 MegaHertz (3.2 GigaHertz)

> 4000 MegaByte (2 GigaBytes)

> 300 GigaBytes (0.3 TeraBytes)

EECC722 - Shaaban

#16 Lec # 1 Fall 2003 9-8-2003

A Hierarchy of Computer Design

Level Name

1

Modules

Electronics

2

Logic

3

Organization

Gates, FF’s

Registers, ALU’s ...

Processors, Memories

Primitives

Descriptive Media

Transistors, Resistors, etc.

Gates, FF’s ….

Circuit Diagrams

Logic Diagrams

Registers, ALU’s …

Register Transfer

Notation (RTN)

Low Level - Hardware

4 Microprogramming

Assembly Language

Microinstructions

Microprogram

Firmware

5 Assembly language

programming

6 Procedural

Programming

7

Application

OS Routines

Applications

Drivers ..

Systems

Assembly language

Instructions

Assembly Language

Programs

OS Routines

High-level Languages

High-level Language

Programs

Procedural Constructs

Problem-Oriented

Programs

High Level - Software

EECC722 - Shaaban

#17 Lec # 1 Fall 2003 9-8-2003

Hierarchy of Computer Architecture

High-Level Language Programs

Software

Assembly Language

Programs

Application

Operating

System

Machine Language

Program

Compiler

Software/Hardware

Boundary

Firmware

Instr. Set Proc. I/O system

Instruction Set

Architecture

Datapath & Control

Hardware

Digital Design

Circuit Design

Microprogram

Layout

Logic Diagrams

Register Transfer

Notation (RTN)

Circuit Diagrams

EECC722 - Shaaban

#18 Lec # 1 Fall 2003 9-8-2003

Computer Architecture Vs. Computer Organization

• The term Computer architecture is sometimes erroneously restricted

to computer instruction set design, with other aspects of computer

design called implementation

• More accurate definitions:

– Instruction set architecture (ISA): The actual programmervisible instruction set and serves as the boundary between the

software and hardware.

– Implementation of a machine has two components:

• Organization: includes the high-level aspects of a computer’s

design such as: The memory system, the bus structure, the

internal CPU unit which includes implementations of arithmetic,

logic, branching, and data transfer operations.

• Hardware: Refers to the specifics of the machine such as detailed

logic design and packaging technology.

• In general, Computer Architecture refers to the above three aspects:

Instruction set architecture, organization, and hardware.

EECC722 - Shaaban

#19 Lec # 1 Fall 2003 9-8-2003

Computer Architecture’s Changing

Definition

• 1950s to 1960s:

Computer Architecture Course = Computer Arithmetic

• 1970s to mid 1980s:

Computer Architecture Course = Instruction Set Design,

especially ISA appropriate for compilers

• 1990s-2000s :

Computer Architecture Course = Design of CPU,

memory system, I/O system, Multiprocessors

EECC722 - Shaaban

#20 Lec # 1 Fall 2003 9-8-2003

Architectural Improvements

• Increased optimization, utilization and size of cache systems with

multiple levels (currently the most popular approach to utilize the

increased number of available transistors) .

• Memory-latency hiding techniques.

• Optimization of pipelined instruction execution.

• Dynamic hardware-based pipeline scheduling.

• Improved handling of pipeline hazards.

• Improved hardware branch prediction techniques.

• Exploiting Instruction-Level Parallelism (ILP) in terms of multipleinstruction issue and multiple hardware functional units.

• Inclusion of special instructions to handle multimedia applications.

• High-speed system and memory bus designs to improve data transfer

rates and reduce latency.

EECC722 - Shaaban

#21 Lec # 1 Fall 2003 9-8-2003

Current Computer Architecture Topics

Input/Output and Storage

Disks, WORM, Tape

Emerging Technologies

Interleaving

Bus protocols

DRAM

Memory

Hierarchy

Coherence,

Bandwidth,

Latency

L2 Cache

L1 Cache

VLSI

Instruction Set Architecture

Addressing,

Protection,

Exception Handling

Pipelining, Hazard Resolution, Superscalar,

Reordering, Branch Prediction, Speculation,

VLIW, Vector, DSP, ...

Multiprocessing,

Simultaneous CPU Multi-threading

RAID

Pipelining and Instruction

Level Parallelism (ILP)

Thread Level Parallelism (TLB)

EECC722 - Shaaban

#22 Lec # 1 Fall 2003 9-8-2003

Computer Performance Measures:

Program Execution Time

• For a specific program compiled to run on a specific machine “A”,

the following parameters are provided:

– The total instruction count of the program.

– The average number of cycles per instruction (average CPI).

– Clock cycle of machine “A”

•

How can one measure the performance of this machine running this

program?

– Intuitively the machine is said to be faster or has better performance

running this program if the total execution time is shorter.

– Thus the inverse of the total measured program execution time is a

possible performance measure or metric:

PerformanceA = 1 / Execution TimeA

How to compare performance of different machines?

What factors affect performance? How to improve performance?

EECC722 - Shaaban

#23 Lec # 1 Fall 2003 9-8-2003

CPU Execution Time: The CPU Equation

• A program is comprised of a number of instructions, I

– Measured in:

instructions/program

• The average instruction takes a number of cycles per instruction

(CPI) to be completed.

– Measured in: cycles/instruction

– IPC (Instructions Per Cycle) = 1/CPI

• CPU has a fixed clock cycle time C = 1/clock rate

– Measured in:

seconds/cycle

• CPU execution time is the product of the above three

parameters as follows:

CPU Time

=

I

x CPI

x

C

CPU time

= Seconds

Program

= Instructions x Cycles

Program

Instruction

x Seconds

Cycle

EECC722 - Shaaban

#24 Lec # 1 Fall 2003 9-8-2003

Factors Affecting CPU Performance

CPU time

= Seconds

= Instructions x Cycles

Program

Program

Instruction

Instruction

Count I

CPI

IPC

Program

X

X

Compiler

X

X

X

X

Instruction Set

Architecture (ISA)

Organization

(Micro-Architecture)

Technology

x Seconds

X

Cycle

Clock Cycle C

X

X

EECC722 - Shaaban

#25 Lec # 1 Fall 2003 9-8-2003

Metrics of Computer Performance

Execution time: Target workload,

SPEC95, SPEC2000, etc.

Application

Programming

Language

Compiler

ISA

(millions) of Instructions per second – MIPS

(millions) of (F.P.) operations per second – MFLOP/s

Datapath

Control

Megabytes per second.

Function Units

Transistors Wires Pins

Cycles per second (clock rate).

Each metric has a purpose, and each can be misused.

EECC722 - Shaaban

#26 Lec # 1 Fall 2003 9-8-2003

SPEC: System Performance

Evaluation Cooperative

The most popular and industry-standard set of CPU benchmarks.

• SPECmarks, 1989:

– 10 programs yielding a single number (“SPECmarks”).

• SPEC92, 1992:

– SPECInt92 (6 integer programs) and SPECfp92 (14 floating point programs).

• SPEC95, 1995:

– SPECint95 (8 integer programs):

• go, m88ksim, gcc, compress, li, ijpeg, perl, vortex

– SPECfp95 (10 floating-point intensive programs):

• tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fppp, wave5

– Performance relative to a Sun SuperSpark I (50 MHz) which is given a score

of SPECint95 = SPECfp95 = 1

• SPEC CPU2000, 1999:

– CINT2000 (11 integer programs). CFP2000 (14 floating-point intensive programs)

– Performance relative to a Sun Ultra5_10 (300 MHz) which is given a score of

SPECint2000 = SPECfp2000 = 100

EECC722 - Shaaban

#27 Lec # 1 Fall 2003 9-8-2003

Top 20 SPEC CPU2000 Results (As of March 2002)

Top 20 SPECint2000

#

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

MHz

1300

2200

2200

1667

1000

1400

1050

1533

750

833

1400

833

600

675

900

552

750

700

800

400

Processor

POWER4

Pentium 4

Pentium 4 Xeon

Athlon XP

Alpha 21264C

Pentium III

UltraSPARC-III Cu

Athlon MP

PA-RISC 8700

Alpha 21264B

Athlon

Alpha 21264A

MIPS R14000

SPARC64 GP

UltraSPARC-III

PA-RISC 8600

POWER RS64-IV

Pentium III Xeon

Itanium

MIPS R12000

int peak

814

811

810

724

679

664

610

609

604

571

554

533

500

478

467

441

439

438

365

353

Top 20 SPECfp2000

int base

790

790

788

697

621

648

537

587

568

497

495

511

483

449

438

417

409

431

358

328

MHz

1300

1000

1050

2200

2200

833

800

833

1667

750

1533

600

675

900

1400

1400

500

450

500

400

Processor

POWER4

Alpha 21264C

UltraSPARC-III Cu

Pentium 4 Xeon

Pentium 4

Alpha 21264B

Itanium

Alpha 21264A

Athlon XP

PA-RISC 8700

Athlon MP

MIPS R14000

SPARC64 GP

UltraSPARC-III

Athlon

Pentium III

PA-RISC 8600

POWER3-II

Alpha 21264

MIPS R12000

fp peak

1169

960

827

802

801

784

701

644

642

581

547

529

509

482

458

456

440

433

422

407

fp base

1098

776

701

779

779

643

701

571

596

526

504

499

371

427

426

437

397

426

383

382

EECC722 - Shaaban

Source: http://www.aceshardware.com/SPECmine/top.jsp

#28 Lec # 1 Fall 2003 9-8-2003

Quantitative Principles

of Computer Design

• Amdahl’s Law:

The performance gain from improving some portion of

a computer is calculated by:

Speedup =

Performance for entire task using the enhancement

Performance for the entire task without using the enhancement

or Speedup =

Execution time without the enhancement

Execution time for entire task using the enhancement

EECC722 - Shaaban

#29 Lec # 1 Fall 2003 9-8-2003

Performance Enhancement Calculations:

Amdahl's Law

• The performance enhancement possible due to a given design

improvement is limited by the amount that the improved feature is used

• Amdahl’s Law:

Performance improvement or speedup due to enhancement E:

Execution Time without E

Speedup(E) = -------------------------------------Execution Time with E

Performance with E

= --------------------------------Performance without E

– Suppose that enhancement E accelerates a fraction F of the

execution time by a factor S and the remainder of the time is

unaffected then:

Execution Time with E = ((1-F) + F/S) X Execution Time without E

Hence speedup is given by:

Execution Time without E

1

Speedup(E) = --------------------------------------------------------- = -------------------((1 - F) + F/S) X Execution Time without E

(1 - F) + F/S

EECC722 - Shaaban

#30 Lec # 1 Fall 2003 9-8-2003

Pictorial Depiction of Amdahl’s Law

Enhancement E accelerates fraction F of execution time by a factor of S

Before:

Execution Time without enhancement E:

Unaffected, fraction: (1- F)

Affected fraction: F

Unchanged

Unaffected, fraction: (1- F)

F/S

After:

Execution Time with enhancement E:

Execution Time without enhancement E

1

Speedup(E) = ------------------------------------------------------ = -----------------Execution Time with enhancement E

(1 - F) + F/S

EECC722 - Shaaban

#31 Lec # 1 Fall 2003 9-8-2003

Performance Enhancement Example

• For the RISC machine with the following instruction mix given earlier:

Op

ALU

Load

Store

Branch

Freq

50%

20%

10%

20%

Cycles

1

5

3

2

CPI(i)

.5

1.0

.3

.4

% Time

23%

45%

14%

18%

CPI = 2.2

• If a CPU design enhancement improves the CPI of load instructions

from 5 to 2, what is the resulting performance improvement from this

enhancement:

Fraction enhanced = F = 45% or .45

Unaffected fraction = 100% - 45% = 55% or .55

Factor of enhancement = 5/2 = 2.5

Using Amdahl’s Law:

1

1

Speedup(E) = ------------------ = --------------------- =

(1 - F) + F/S

.55 + .45/2.5

1.37

EECC722 - Shaaban

#32 Lec # 1 Fall 2003 9-8-2003

Extending Amdahl's Law To Multiple Enhancements

• Suppose that enhancement Ei accelerates a fraction Fi of the

execution time by a factor Si and the remainder of the time is

unaffected then:

Speedup

Original Execution Time

((1 F ) F ) XOriginal

i

i

Speedup

i

i

S

Execution Time

i

1

((1 F ) F )

i

i

i

i

S

i

Note: All fractions refer to original execution time.

EECC722 - Shaaban

#33 Lec # 1 Fall 2003 9-8-2003

Amdahl's Law With Multiple Enhancements:

Example

•

Three CPU or system performance enhancements are proposed with the

following speedups and percentage of the code execution time affected:

Speedup1 = S1 = 10

Speedup2 = S2 = 15

Speedup3 = S3 = 30

•

•

Percentage1 = F1 = 20%

Percentage1 = F2 = 15%

Percentage1 = F3 = 10%

While all three enhancements are in place in the new design, each

enhancement affects a different portion of the code and only one

enhancement can be used at a time.

What is the resulting overall speedup?

Speedup

1

((1 F ) F )

i

i

•

i

i

S

i

Speedup = 1 / [(1 - .2 - .15 - .1) + .2/10 + .15/15 + .1/30)]

= 1/ [

.55

+

.0333

]

= 1 / .5833 = 1.71

EECC722 - Shaaban

#34 Lec # 1 Fall 2003 9-8-2003

Pictorial Depiction of Example

Before:

Execution Time with no enhancements: 1

Unaffected, fraction: .55

S1 = 10

F1 = .2

S2 = 15

S3 = 30

F2 = .15

F3 = .1

/ 15

/ 10

/ 30

Unchanged

Unaffected, fraction: .55

After:

Execution Time with enhancements: .55 + .02 + .01 + .00333 = .5833

Speedup = 1 / .5833 = 1.71

Note: All fractions refer to original execution time.

EECC722 - Shaaban

#35 Lec # 1 Fall 2003 9-8-2003

Evolution of Instruction Sets

Single Accumulator (EDSAC 1950)

Accumulator + Index Registers

(Manchester Mark I, IBM 700 series 1953)

Separation of Programming Model

from Implementation

High-level Language Based

(B5000 1963)

Concept of a Family

(IBM 360 1964)

General Purpose Register Machines

Complex Instruction Sets

(Vax, Intel 432 1977-80)

Load/Store Architecture

(CDC 6600, Cray 1 1963-76)

RISC

(Mips,SPARC,HP-PA,IBM RS6000, . . .1987)

EECC722 - Shaaban

#36 Lec # 1 Fall 2003 9-8-2003

A "Typical" RISC

•

•

•

•

•

32-bit fixed format instruction (3 formats I,R,J)

32 64-bit GPRs (R0 contains zero, DP take pair)

32 64-bit FPRs,

3-address, reg-reg arithmetic instruction

Single address mode for load/store:

base + displacement

– no indirection

• Simple branch conditions (based on register values)

• Delayed branch

EECC722 - Shaaban

#37 Lec # 1 Fall 2003 9-8-2003

A RISC ISA Example: MIPS

Register-Register

31

26 25

Op

21 20

rs

rt

6 5

11 10

16 15

rd

sa

0

funct

Register-Immediate

31

26 25

Op

21 20

rs

16 15

0

immediate

rt

Branch

31

26 25

Op

21 20

rs

16 15

0

displacement

rt

Jump / Call

31

26 25

Op

0

target

EECC722 - Shaaban

#38 Lec # 1 Fall 2003 9-8-2003

Instruction Pipelining Review

•

•

•

•

•

•

Instruction pipelining is CPU implementation technique where multiple

operations on a number of instructions are overlapped.

An instruction execution pipeline involves a number of steps, where each step

completes a part of an instruction. Each step is called a pipeline stage or a pipeline

segment.

The stages or steps are connected in a linear fashion: one stage to the next to form

the pipeline -- instructions enter at one end and progress through the stages and

exit at the other end.

The time to move an instruction one step down the pipeline is is equal to the

machine cycle and is determined by the stage with the longest processing delay.

Pipelining increases the CPU instruction throughput: The number of instructions

completed per cycle.

– Under ideal conditions (no stall cycles), instruction throughput is one

instruction per machine cycle, or ideal CPI = 1

Pipelining does not reduce the execution time of an individual instruction: The

time needed to complete all processing steps of an instruction (also called

instruction completion latency).

– Minimum instruction latency = n cycles, where n is the number of pipeline

stages

EECC722 - Shaaban

#39 Lec # 1 Fall 2003 9-8-2003

MIPS In-Order Single-Issue Integer Pipeline

Ideal Operation

Time in clock cycles

Clock Number

Instruction Number

1

2

3

4

5

6

7

Instruction I

Instruction I+1

Instruction I+2

Instruction I+3

Instruction I +4

IF

ID

IF

EX

ID

IF

MEM

EX

ID

IF

WB

MEM

EX

ID

IF

WB

MEM

EX

ID

8

WB

MEM

EX

WB

MEM

9

WB

Time to fill the pipeline

MIPS Pipeline Stages:

IF

ID

EX

MEM

WB

= Instruction Fetch

= Instruction Decode

= Execution

= Memory Access

= Write Back

Last instruction,

I+4 completed

First instruction, I

Completed

5 pipeline stages

Ideal CPI =1

EECC722 - Shaaban

#40 Lec # 1 Fall 2003 9-8-2003

A Pipelined MIPS Datapath

• Obtained from multi-cycle MIPS datapath by adding buffer registers between pipeline stages

• Assume register writes occur in first half of cycle and register reads occur in second half.

EECC722 - Shaaban

#41 Lec # 1 Fall 2003 9-8-2003

Pipeline Hazards

• Hazards are situations in pipelining which prevent the next

instruction in the instruction stream from executing during

the designated clock cycle.

• Hazards reduce the ideal speedup gained from pipelining

and are classified into three classes:

– Structural hazards: Arise from hardware resource

conflicts when the available hardware cannot support all

possible combinations of instructions.

– Data hazards: Arise when an instruction depends on the

results of a previous instruction in a way that is exposed

by the overlapping of instructions in the pipeline

– Control hazards: Arise from the pipelining of conditional

branches and other instructions that change the PC

EECC722 - Shaaban

#42 Lec # 1 Fall 2003 9-8-2003

Performance of Pipelines with Stalls

• Hazards in pipelines may make it necessary to stall the pipeline

by one or more cycles and thus degrading performance from the

ideal CPI of 1.

CPI pipelined = Ideal CPI + Pipeline stall clock cycles per instruction

• If pipelining overhead is ignored (no change in clock cycle) and we

assume that the stages are perfectly balanced then:

Speedup = CPI un-pipelined / CPI pipelined

= CPI un-pipelined / (1 + Pipeline stall cycles per instruction)

EECC722 - Shaaban

#43 Lec # 1 Fall 2003 9-8-2003

Structural Hazards

• In pipelined machines overlapped instruction execution

requires pipelining of functional units and duplication of

resources to allow all possible combinations of instructions

in the pipeline.

• If a resource conflict arises due to a hardware resource

being required by more than one instruction in a single

cycle, and one or more such instructions cannot be

accommodated, then a structural hazard has occurred,

for example:

– when a machine has only one register file write port

– or when a pipelined machine has a shared singlememory pipeline for data and instructions.

stall the pipeline for one cycle for register writes or

memory data access

EECC722 - Shaaban

#44 Lec # 1 Fall 2003 9-8-2003

MIPS with Memory

Unit Structural Hazards

EECC722 - Shaaban

#45 Lec # 1 Fall 2003 9-8-2003

Resolving A Structural

Hazard with Stalling

EECC722 - Shaaban

#46 Lec # 1 Fall 2003 9-8-2003

Data Hazards

• Data hazards occur when the pipeline changes the order of

read/write accesses to instruction operands in such a way that

the resulting access order differs from the original sequential

instruction operand access order of the unpipelined machine

resulting in incorrect execution.

• Data hazards usually require one or more instructions to be

stalled to ensure correct execution.

• Example:

DADD R1, R2, R3

DSUB R4, R1, R5

AND R6, R1, R7

OR R8,R1,R9

XOR R10, R1, R11

– All the instructions after DADD use the result of the DADD instruction

– DSUB, AND instructions need to be stalled for correct execution.

EECC722 - Shaaban

#47 Lec # 1 Fall 2003 9-8-2003

Data

Hazard Example

Figure A.6 The use of the result of the DADD instruction in the next three instructions

causes a hazard, since the register is not written until after those instructions read it.

EECC722 - Shaaban

#48 Lec # 1 Fall 2003 9-8-2003

Minimizing Data hazard Stalls by Forwarding

• Forwarding is a hardware-based technique (also called register

bypassing or short-circuiting) used to eliminate or minimize

data hazard stalls.

• Using forwarding hardware, the result of an instruction is copied

directly from where it is produced (ALU, memory read port

etc.), to where subsequent instructions need it (ALU input

register, memory write port etc.)

• For example, in the DLX pipeline with forwarding:

– The ALU result from the EX/MEM register may be forwarded or fed

back to the ALU input latches as needed instead of the register

operand value read in the ID stage.

– Similarly, the Data Memory Unit result from the MEM/WB register

may be fed back to the ALU input latches as needed .

– If the forwarding hardware detects that a previous ALU operation is to

write the register corresponding to a source for the current ALU

operation, control logic selects the forwarded result as the ALU input

rather than the value read from the register file.

EECC722 - Shaaban

#49 Lec # 1 Fall 2003 9-8-2003

EECC722 - Shaaban

#50 Lec # 1 Fall 2003 9-8-2003

MIPS Pipeline

with Forwarding

A set of instructions that depend on the DADD result uses forwarding paths to avoid the data hazard

EECC722 - Shaaban

#51 Lec # 1 Fall 2003 9-8-2003

Data Hazard Classification

Given two instructions I, J, with I occurring before J

in an instruction stream:

• RAW (read after write): A true data dependence

J tried to read a source before I writes to it, so J

incorrectly gets the old value.

• WAW (write after write):

A name dependence

J tries to write an operand before it is written by I

The writes end up being performed in the wrong order.

• WAR (write after read): A name dependence

J tries to write to a destination before it is read by I,

so I incorrectly gets the new value.

• RAR (read after read): Not a hazard.

EECC722 - Shaaban

#52 Lec # 1 Fall 2003 9-8-2003

Data Hazard Classification

I (Write)

I (Read)

Shared

Operand

J (Read)

Shared

Operand

J (Write)

Read after Write (RAW)

Write after Read (WAR)

I (Write)

I (Read)

Shared

Operand

Shared

Operand

J (Write)

Write after Write (WAW)

J (Read)

Read after Read (RAR) not a hazard

EECC722 - Shaaban

#53 Lec # 1 Fall 2003 9-8-2003

Data Hazards Requiring Stall Cycles

EECC722 - Shaaban

#54 Lec # 1 Fall 2003 9-8-2003

Compiler Instruction Scheduling

for Data Hazard Stall Reduction

• Many types of stalls resulting from data hazards are very

frequent. For example:

A = B+ C

produces a stall when loading the second data value (B).

• Rather than allow the pipeline to stall, the compiler could

sometimes schedule the pipeline to avoid stalls.

• Compiler pipeline or instruction scheduling involves

rearranging the code sequence (instruction reordering) to

eliminate the hazard.

EECC722 - Shaaban

#55 Lec # 1 Fall 2003 9-8-2003

Compiler Instruction Scheduling Example

• For the code sequence:

a=b+c

d=e-f

a, b, c, d ,e, and f

are in memory

• Assuming loads have a latency of one clock cycle, the following

code or pipeline compiler schedule eliminates stalls:

Original code with stalls:

LD

Rb,b

LD

Rc,c

Stall

DADD Ra,Rb,Rc

SD

Ra,a

LD

Re,e

LD

Rf,f

Stall

DSUB Rd,Re,Rf

SD

Rd,d

Scheduled code with no stalls:

LD

Rb,b

LD

Rc,c

LD

Re,e

DADD Ra,Rb,Rc

LD

Rf,f

SD

Ra,a

DSUB Rd,Re,Rf

SD

Rd,d

EECC722 - Shaaban

#56 Lec # 1 Fall 2003 9-8-2003

Control Hazards

• When a conditional branch is executed it may change the PC

and, without any special measures, leads to stalling the pipeline

for a number of cycles until the branch condition is known.

• In current MIPS pipeline, the conditional branch is resolved in

the MEM stage resulting in three stall cycles as shown below:

Branch instruction

Branch successor

Branch successor + 1

Branch successor + 2

Branch successor + 3

Branch successor + 4

Branch successor + 5

IF ID EX MEM WB

IF stall stall

IF ID

IF

EX

ID

IF

MEM

EX

ID

IF

WB

MEM WB

EX

MEM

ID

EX

IF

ID

IF

Assuming we stall on a branch instruction:

Three clock cycles are wasted for every branch for current MIPS pipeline

EECC722 - Shaaban

#57 Lec # 1 Fall 2003 9-8-2003

Reducing Branch Stall Cycles

Pipeline hardware measures to reduce branch stall cycles:

1- Find out whether a branch is taken earlier in the pipeline.

2- Compute the taken PC earlier in the pipeline.

In MIPS:

– In MIPS branch instructions BEQZ, BNE, test a register

for equality to zero.

– This can be completed in the ID cycle by moving the zero

test into that cycle.

– Both PCs (taken and not taken) must be computed early.

– Requires an additional adder because the current ALU is

not useable until EX cycle.

– This results in just a single cycle stall on branches.

EECC722 - Shaaban

#58 Lec # 1 Fall 2003 9-8-2003

Modified MIPS Pipeline:

Conditional Branches

Completed in ID Stage

EECC722 - Shaaban

#59 Lec # 1 Fall 2003 9-8-2003

Compile-Time Reduction of Branch Penalties

• One scheme discussed earlier is to flush or freeze the pipeline

by whenever a conditional branch is decoded by holding or

deleting any instructions in the pipeline until the branch

destination is known (zero pipeline registers, control lines).

• Another method is to predict that the branch is not taken where

the state of the machine is not changed until the branch

outcome is definitely known. Execution here continues with

the next instruction; stall occurs here when the branch is taken.

• Another method is to predict that the branch is taken and begin

fetching and executing at the target; stall occurs here if the

branch is not taken.

• Delayed Branch: An instruction following the branch in a

branch delay slot is executed whether the branch is taken or

not.

EECC722 - Shaaban

#60 Lec # 1 Fall 2003 9-8-2003

Reduction of Branch Penalties:

Delayed Branch

• When delayed branch is used, the branch is delayed by n cycles,

following this execution pattern:

conditional branch instruction

sequential successor1

sequential successor2

……..

sequential successorn

branch target if taken

• The sequential successor instruction are said to be in the branch

delay slots. These instructions are executed whether or not the

branch is taken.

• In Practice, all machines that utilize delayed branching have

a single instruction delay slot.

• The job of the compiler is to make the successor instructions

valid and useful instructions.

EECC722 - Shaaban

#61 Lec # 1 Fall 2003 9-8-2003

Delayed Branch Example

EECC722 - Shaaban

#62 Lec # 1 Fall 2003 9-8-2003

Pipeline Performance Example

• Assume the following MIPS instruction mix:

Type

Arith/Logic

Load

Store

branch

Frequency

40%

30%

of which 25% are followed immediately by

an instruction using the loaded value

10%

20%

of which 45% are taken

• What is the resulting CPI for the pipelined MIPS with

forwarding and branch address calculation in ID stage

when using a branch not-taken scheme?

• CPI = Ideal CPI + Pipeline stall clock cycles per instruction

=

=

=

=

1 +

1 +

1 +

1.165

stalls by loads + stalls by branches

.3 x .25 x 1

+

.2 x .45 x 1

.075

+

.09

EECC722 - Shaaban

#63 Lec # 1 Fall 2003 9-8-2003

Pipelining and Exploiting

Instruction-Level Parallelism (ILP)

• Pipelining increases performance by overlapping the execution

of independent instructions.

• The CPI of a real-life pipeline is given by (assuming ideal

memory):

Pipeline CPI = Ideal Pipeline CPI + Structural Stalls + RAW Stalls

+ WAR Stalls + WAW Stalls + Control Stalls

• A basic instruction block is a straight-line code sequence with no

branches in, except at the entry point, and no branches out

except at the exit point of the sequence .

• The amount of parallelism in a basic block is limited by

instruction dependence present and size of the basic block.

• In typical integer code, dynamic branch frequency is about 15%

(average basic block size of 7 instructions).

EECC722 - Shaaban

#64 Lec # 1 Fall 2003 9-8-2003

Increasing Instruction-Level Parallelism

• A common way to increase parallelism among instructions is

to exploit parallelism among iterations of a loop

– (i.e Loop Level Parallelism, LLP).

• This is accomplished by unrolling the loop either statically

by the compiler, or dynamically by hardware, which

increases the size of the basic block present.

• In this loop every iteration can overlap with any other

iteration. Overlap within each iteration is minimal.

for (i=1; i<=1000; i=i+1;)

x[i] = x[i] + y[i];

• In vector machines, utilizing vector instructions is an

important alternative to exploit loop-level parallelism,

• Vector instructions operate on a number of data items. The

above loop would require just four such instructions.

EECC722 - Shaaban

#65 Lec # 1 Fall 2003 9-8-2003

MIPS Loop Unrolling Example

• For the loop:

for (i=1000; i>0; i=i-1)

x[i] = x[i] + s;

The straightforward MIPS assembly code is given by:

Loop: L.D

ADD.D

S.D

DADDUI

BNE

F0, 0 (R1)

F4, F0, F2

F4, 0(R1)

R1, R1, # -8

R1, R2,Loop

;F0=array element

;add scalar in F2

;store result

;decrement pointer 8 bytes

;branch R1!=R2

R1 is initially the address of the element with highest address.

8(R2) is the address of the last element to operate on.

EECC722 - Shaaban

#66 Lec # 1 Fall 2003 9-8-2003

MIPS FP Latency Assumptions

• All FP units assumed to be pipelined.

• The following FP operations latencies are used:

Instruction

Producing Result

Instruction

Using Result

Latency In

Clock Cycles

FP ALU Op

Another FP ALU Op

3

FP ALU Op

Store Double

2

Load Double

FP ALU Op

1

Load Double

Store Double

0

EECC722 - Shaaban

#67 Lec # 1 Fall 2003 9-8-2003

Loop Unrolling Example (continued)

• This loop code is executed on the MIPS pipeline as follows:

No scheduling

Clock cycle

Loop: L.D

F0, 0(R1)

1

stall

2

ADD.D F4, F0, F2

3

stall

4

stall

5

S.D

F4, 0 (R1)

6

DADDUI R1, R1, # -8

7

stall

8

BNE

R1,R2, Loop

9

stall

10

With delayed branch scheduling

Loop: L.D

DADDUI

ADD.D

stall

BNE

S.D

F0, 0(R1)

R1, R1, # -8

F4, F0, F2

R1,R2, Loop

F4,8(R1)

6 cycles per iteration

10/6 = 1.7 times faster

10 cycles per iteration

EECC722 - Shaaban

#68 Lec # 1 Fall 2003 9-8-2003

Loop Unrolling Example (continued)

• The resulting loop code when four copies of the loop body

are unrolled without reuse of registers:

No scheduling

Loop: L.D

F0, 0(R1)

ADD.D F4, F0, F2

SD

F4,0 (R1)

; drop DADDUI & BNE

LD

F6, -8(R1)

ADDD F8, F6, F2

SD

F8, -8 (R1), ; drop DADDUI & BNE

LD

F10, -16(R1)

ADDD F12, F10, F2

SD

F12, -16 (R1) ; drop DADDUI & BNE

LD

F14, -24 (R1)

ADDD F16, F14, F2

SD

F16, -24(R1)

DADDUI R1, R1, # -32

BNE

R1, R2, Loop

Three branches and three

decrements of R1 are eliminated.

Load and store addresses are

changed to allow DADDUI

instructions to be merged.

The loop runs in 28 assuming each

L.D has 1 stall cycle, each ADD.D

has 2 stall cycles, the DADDUI 1

stall, the branch 1 stall cycles, or

7 cycles for each of the four

elements.

EECC722 - Shaaban

#69 Lec # 1 Fall 2003 9-8-2003

Loop Unrolling Example (continued)

When scheduled for pipeline

Loop: L.D

L.D

L.D

L.D

ADD.D

ADD.D

ADD.D

ADD.D

S.D

S.D

DADDUI

S.D

BNE

S.D

The execution time of the loop

has dropped to 14 cycles, or 3.5

clock cycles per element

F0, 0(R1)

F6,-8 (R1)

F10, -16(R1)

F14, -24(R1)

compared to 6.8 before scheduling

F4, F0, F2

and 6 when scheduled but unrolled.

F8, F6, F2

F12, F10, F2

Unrolling the loop exposed more

F16, F14, F2

computation that can be scheduled

F4, 0(R1)

to minimize stalls.

F8, -8(R1)

R1, R1,# -32

F12, -16(R1),F12

R1,R2, Loop

F16, 8(R1), F16 ;8-32 = -24

EECC722 - Shaaban

#70 Lec # 1 Fall 2003 9-8-2003

Loop-Level Parallelism (LLP) Analysis

• Loop-Level Parallelism (LLP) analysis focuses on whether data

accesses in later iterations of a loop are data dependent on data

values produced in earlier iterations.

e.g. in

for (i=1; i<=1000; i++)

x[i] = x[i] + s;

the computation in each iteration is independent of the previous

iterations and the loop is thus parallel. The use of X[i] twice is within

a single iteration.

Thus loop iterations are parallel (or independent from each other).

• Loop-carried Dependence: A data dependence between different

loop iterations (data produced in earlier iteration used in a later one).

• LLP analysis is normally done at the source code level or close to it

since assembly language and target machine code generation

introduces a loop-carried name dependence in the registers used for

addressing and incrementing.

• Instruction level parallelism (ILP) analysis, on the other hand, is

usually done when instructions are generated by the compiler.

EECC722 - Shaaban

#71 Lec # 1 Fall 2003 9-8-2003

LLP Analysis Example 1

• In the loop:

for (i=1; i<=100; i=i+1) {

A[i+1] = A[i] + C[i]; /* S1 */

B[i+1] = B[i] + A[i+1];} /* S2 */

}

(Where A, B, C are distinct non-overlapping arrays)

– S2 uses the value A[i+1], computed by S1 in the same iteration. This

data dependence is within the same iteration (not a loop-carried

dependence).

does not prevent loop iteration parallelism.

– S1 uses a value computed by S1 in an earlier iteration, since iteration i

computes A[i+1] read in iteration i+1 (loop-carried dependence,

prevents parallelism). The same applies for S2 for B[i] and B[i+1]

These two dependences are loop-carried spanning more than one iteration

preventing loop parallelism.

EECC722 - Shaaban

#72 Lec # 1 Fall 2003 9-8-2003

• In the loop:

LLP Analysis Example 2

for (i=1; i<=100; i=i+1) {

A[i] = A[i] + B[i];

B[i+1] = C[i] + D[i];

/* S1 */

/* S2 */

}

– S1 uses the value B[i] computed by S2 in the previous iteration (loopcarried dependence)

– This dependence is not circular:

• S1 depends on S2 but S2 does not depend on S1.

– Can be made parallel by replacing the code with the following:

Loop Start-up code

A[1] = A[1] + B[1];

for (i=1; i<=99; i=i+1) {

B[i+1] = C[i] + D[i];

A[i+1] = A[i+1] + B[i+1];

}

B[101] = C[100] + D[100]; Loop Completion code

EECC722 - Shaaban

#73 Lec # 1 Fall 2003 9-8-2003

LLP Analysis Example 2

Original Loop:

Iteration 1

for (i=1; i<=100; i=i+1) {

A[i] = A[i] + B[i];

B[i+1] = C[i] + D[i];

}

Iteration 2

A[1] = A[1] + B[1];

A[2] = A[2] + B[2];

B[2] = C[1] + D[1];

B[3] = C[2] + D[2];

/* S1 */

/* S2 */

......

......

Loop-carried

Dependence

Iteration 99

A[99] = A[99] + B[99];

A[100] = A[100] + B[100];

B[100] = C[99] + D[99];

B[101] = C[100] + D[100];

A[1] = A[1] + B[1];

for (i=1; i<=99; i=i+1) {

B[i+1] = C[i] + D[i];

A[i+1] = A[i+1] + B[i+1];

}

B[101] = C[100] + D[100];

Modified Parallel Loop:

Iteration 98

Loop Start-up code

Iteration 100

Iteration 1

A[1] = A[1] + B[1];

A[2] = A[2] + B[2];

B[2] = C[1] + D[1];

B[3] = C[2] + D[2];

....

Not Loop

Carried

Dependence

Iteration 99

A[99] = A[99] + B[99];

A[100] = A[100] + B[100];

B[100] = C[99] + D[99];

B[101] = C[100] + D[100];

Loop Completion code

EECC722 - Shaaban

#74 Lec # 1 Fall 2003 9-8-2003

Reduction of Data Hazards Stalls

with Dynamic Scheduling

• So far we have dealt with data hazards in instruction pipelines by:

– Result forwarding and bypassing to reduce latency and hide or

reduce the effect of true data dependence.

– Hazard detection hardware to stall the pipeline starting with the

instruction that uses the result.

– Compiler-based static pipeline scheduling to separate the dependent

instructions minimizing actual hazards and stalls in scheduled code.

• Dynamic scheduling:

– Uses a hardware-based mechanism to rearrange instruction

execution order to reduce stalls at runtime.

– Enables handling some cases where dependencies are unknown at

compile time.

– Similar to the other pipeline optimizations above, a dynamically

scheduled processor cannot remove true data dependencies, but tries

to avoid or reduce stalling.

EECC722 - Shaaban

#75 Lec # 1 Fall 2003 9-8-2003

Dynamic Pipeline Scheduling: The Concept

• Dynamic pipeline scheduling overcomes the limitations of in-order

execution by allowing out-of-order instruction execution.

• Instruction are allowed to start executing out-of-order as soon as

their operands are available.

Example:

In the case of in-order execution

SUBD must wait for DIVD to complete

which stalled ADDD before starting execution

In out-of-order execution SUBD can start as soon

as the values of its operands F8, F14 are available.

DIVD F0, F2, F4

ADDD F10, F0, F8

SUBD F12, F8, F14

• This implies allowing out-of-order instruction commit (completion).

• May lead to imprecise exceptions if an instruction issued earlier

raises an exception.

• This is similar to pipelines with multi-cycle floating point units.

EECC722 - Shaaban

#76 Lec # 1 Fall 2003 9-8-2003

Dynamic Pipeline Scheduling

• Dynamic instruction scheduling is accomplished by:

– Dividing the Instruction Decode ID stage into two stages:

• Issue: Decode instructions, check for structural hazards.

• Read operands: Wait until data hazard conditions, if any,

are resolved, then read operands when available.

(All instructions pass through the issue stage in order but can

be stalled or pass each other in the read operands stage).

– In the instruction fetch stage IF, fetch an additional instruction

every cycle into a latch or several instructions into an instruction

queue.

– Increase the number of functional units to meet the demands of

the additional instructions in their EX stage.

• Two dynamic scheduling approaches exist:

– Dynamic scheduling with a Scoreboard used first in CDC6600

– The Tomasulo approach pioneered by the IBM 360/91

EECC722 - Shaaban

#77 Lec # 1 Fall 2003 9-8-2003

Dynamic Scheduling With A Scoreboard

• The score board is a hardware mechanism that maintains an execution

rate of one instruction per cycle by executing an instruction as soon as

its operands are available and no hazard conditions prevent it.

• It replaces ID, EX, WB with four stages: ID1, ID2, EX, WB

• Every instruction goes through the scoreboard where a record of data

dependencies is constructed (corresponds to instruction issue).

• A system with a scoreboard is assumed to have several functional units

with their status information reported to the scoreboard.

• If the scoreboard determines that an instruction cannot execute

immediately it executes another waiting instruction and keeps

monitoring hardware units status and decide when the instruction can

proceed to execute.

• The scoreboard also decides when an instruction can write its results to

registers (hazard detection and resolution is centralized in the

scoreboard).

EECC722 - Shaaban

#78 Lec # 1 Fall 2003 9-8-2003

The basic structure of a MIPS processor with a scoreboard

EECC722 - Shaaban

#79 Lec # 1 Fall 2003 9-8-2003

Instruction Execution Stages with A Scoreboard

1 Issue (ID1):

If a functional unit for the instruction is available, the

scoreboard issues the instruction to the functional unit and updates its

internal data structure; structural and WAW hazards are resolved here.

(this replaces part of ID stage in the conventional MIPS pipeline).

2 Read operands (ID2):

The scoreboard monitors the availability

of the source operands. A source operand is available when no earlier

active instruction will write it. When all source operands are available

the scoreboard tells the functional unit to read all operands from the

registers (no forwarding supported) and start execution (RAW hazards

resolved here dynamically). This completes ID.

3 Execution (EX):

The functional unit starts execution upon

receiving operands. When the results are ready it notifies the

scoreboard (replaces EX, MEM in MIPS).

4 Write result (WB):

Once the scoreboard senses that a functional

unit completed execution, it checks for WAR hazards and stalls the

completing instruction if needed otherwise the write back is completed.

EECC722 - Shaaban

#80 Lec # 1 Fall 2003 9-8-2003

Three Parts of the Scoreboard

1

2

Instruction status: Which of 4 steps the instruction is in.

Functional unit status: Indicates the state of the functional

unit (FU). Nine fields for each functional unit:

–

–

–

–

–

–

Busy

Op

Fi

Fj, Fk

Qj, Qk

Rj, Rk

Indicates whether the unit is busy or not

Operation to perform in the unit (e.g., + or –)

Destination register

Source-register numbers

Functional units producing source registers Fj, Fk

Flags indicating when Fj, Fk are ready

(set to Yes after operand is available to read)

3

Register result status: Indicates which functional unit will

write to each register, if one exists. Blank when no pending

instructions will write that register.

EECC722 - Shaaban

#81 Lec # 1 Fall 2003 9-8-2003

Dynamic Scheduling:

The Tomasulo Algorithm

• Developed at IBM and first implemented in IBM’s 360/91

mainframe in 1966, about 3 years after the debut of the scoreboard

in the CDC 6600.

• Dynamically schedule the pipeline in hardware to reduce stalls.

• Differences between IBM 360 & CDC 6600 ISA.

– IBM has only 2 register specifiers/instr vs. 3 in CDC 6600.

– IBM has 4 FP registers vs. 8 in CDC 6600.

• Current CPU architectures that can be considered descendants of

the IBM 360/91 which implement and utilize a variation of the

Tomasulo Algorithm include:

RISC CPUs: Alpha 21264, HP 8600, MIPS R12000, PowerPC G4

RISC-core x86 CPUs: AMD Athlon, Pentium III, 4, Xeon ….

EECC722 - Shaaban

#82 Lec # 1 Fall 2003 9-8-2003

Tomasulo Algorithm Vs. Scoreboard

•

•

•

•

•

Control & buffers distributed with Function Units (FU) Vs. centralized in

Scoreboard:

– FU buffers are called “reservation stations” which have pending instructions

and operands and other instruction status info.

– Reservations stations are sometimes referred to as “physical registers” or

“renaming registers” as opposed to architecture registers specified by the

ISA.

ISA Registers in instructions are replaced by either values (if available) or

pointers to reservation stations (RS) that will supply the value later:

– This process is called register renaming.

– Avoids WAR, WAW hazards.

– Allows for hardware-based loop unrolling.

– More reservation stations than ISA registers are possible , leading to

optimizations that compilers can’t achieve and prevents the number of ISA

registers from becoming a bottleneck.

Instruction results go (forwarded) to FUs from RSs, not through registers, over

Common Data Bus (CDB) that broadcasts results to all FUs.

Loads and Stores are treated as FUs with RSs as well.

Integer instructions can go past branches, allowing FP ops beyond basic block in

FP queue.

EECC722 - Shaaban

#83 Lec # 1 Fall 2003 9-8-2003

Dynamic Scheduling: The Tomasulo Approach

The basic structure of a MIPS floating-point unit using Tomasulo’s algorithm

EECC722 - Shaaban

#84 Lec # 1 Fall 2003 9-8-2003

Reservation Station Fields

• Op Operation to perform in the unit (e.g., + or –)

• Vj, Vk Value of Source operands S1 and S2

– Store buffers have a single V field indicating result to

be stored.

• Qj, Qk Reservation stations producing source

registers. (value to be written).

– No ready flags as in Scoreboard; Qj,Qk=0 => ready.

– Store buffers only have Qi for RS producing result.

• A: Address information for loads or stores. Initially

immediate field of instruction then effective address

when calculated.

• Busy: Indicates reservation station and FU are busy.

• Register result status: Qi Indicates which functional

unit will write each register, if one exists.

– Blank (or 0) when no pending instructions exist that

EECC722 - Shaaban

will write to that register.

#85 Lec # 1 Fall 2003 9-8-2003

1

Three Stages of Tomasulo Algorithm

Issue: Get instruction from pending Instruction Queue.

– Instruction issued to a free reservation station (no structural hazard).

– Selected RS is marked busy.

– Control sends available instruction operands values (from ISA registers)

to assigned RS.

– Operands not available yet are renamed to RSs that will produce the

operand (register renaming).

2

Execution (EX): Operate on operands.

– When both operands are ready then start executing on assigned FU.

– If all operands are not ready, watch Common Data Bus (CDB) for needed

result (forwarding done via CDB).

3

Write result (WB): Finish execution.

–

–

Write result on Common Data Bus to all awaiting units

Mark reservation station as available.

• Normal data bus: data + destination (“go to” bus).

• Common Data Bus (CDB): data + source (“come from” bus):

– 64 bits for data + 4 bits for Functional Unit source address.

– Write data to waiting RS if source matches expected RS (that produces result).

– Does the result forwarding via broadcast to waiting RSs.

EECC722 - Shaaban

#86 Lec # 1 Fall 2003 9-8-2003

Dynamic Conditional Branch Prediction

•

•

•

Dynamic branch prediction schemes are different from static

mechanisms because they use the run-time behavior of branches to make

more accurate predictions than possible using static prediction.

Usually information about outcomes of previous occurrences of a given

branch (branching history) is used to predict the outcome of the current

occurrence. Some of the proposed dynamic branch prediction

mechanisms include:

– One-level or Bimodal: Uses a Branch History Table (BHT), a table

of usually two-bit saturating counters which is indexed by a portion

of the branch address (low bits of address).

– Two-Level Adaptive Branch Prediction.

– MCFarling’s Two-Level Prediction with index sharing (gshare).

– Hybrid or Tournament Predictors: Uses a combinations of two or

more (usually two) branch prediction mechanisms.

To reduce the stall cycles resulting from correctly predicted taken

branches to zero cycles, a Branch Target Buffer (BTB) that includes the

addresses of conditional branches that were taken along with their

targets is added to the fetch stage.

EECC722 - Shaaban

#87 Lec # 1 Fall 2003 9-8-2003

Branch Target Buffer (BTB)

•

•

•

•

•

•

Effective branch prediction requires the target of the branch at an early pipeline

stage.

One can use additional adders to calculate the target, as soon as the branch

instruction is decoded. This would mean that one has to wait until the ID stage

before the target of the branch can be fetched, taken branches would be fetched

with a one-cycle penalty (this was done in the enhanced MIPS pipeline Fig A.24).

To avoid this problem one can use a Branch Target Buffer (BTB). A typical BTB

is an associative memory where the addresses of taken branch instructions are

stored together with their target addresses.

Some designs store n prediction bits as well, implementing a combined BTB and

BHT.

Instructions are fetched from the target stored in the BTB in case the branch is

predicted-taken and found in BTB. After the branch has been resolved the BTB

is updated. If a branch is encountered for the first time a new entry is created

once it is resolved.

Branch Target Instruction Cache (BTIC): A variation of BTB which caches

also the code of the branch target instruction in addition to its address. This

eliminates the need to fetch the target instruction from the instruction cache or

from memory.

EECC722 - Shaaban

#88 Lec # 1 Fall 2003 9-8-2003

Basic Branch Target Buffer (BTB)

EECC722 - Shaaban

#89 Lec # 1 Fall 2003 9-8-2003

EECC722 - Shaaban

#90 Lec # 1 Fall 2003 9-8-2003

One-Level Bimodal Branch Predictors

• One-level or bimodal branch prediction uses only one level of branch

history.

• These mechanisms usually employ a table which is indexed by lower

bits of the branch address.

• The table entry consists of n history bits, which form an n-bit

automaton or saturating counters.

• Smith proposed such a scheme, known as the Smith algorithm, that

uses a table of two-bit saturating counters.

• One rarely finds the use of more than 3 history bits in the literature.

• Two variations of this mechanism:

– Decode History Table: Consists of directly mapped entries.

– Branch History Table (BHT): Stores the branch address as a tag. It

is associative and enables one to identify the branch instruction

during IF by comparing the address of an instruction with the stored

branch addresses in the table (similar to BTB).

EECC722 - Shaaban

#91 Lec # 1 Fall 2003 9-8-2003

One-Level Bimodal Branch Predictors

Decode History Table (DHT)

High bit determines

branch prediction

0 = Not Taken

1 = Taken

N Low Bits of

Table has 2N entries.

Example:

For N =12

Table has 2N = 212 entries

= 4096 = 4k entries

0

0

1

1

0

Not Taken

1

0

1 Taken

Number of bits needed = 2 x 4k = 8k bits

EECC722 - Shaaban

#92 Lec # 1 Fall 2003 9-8-2003

One-Level Bimodal Branch Predictors

Branch History Table (BHT)

High bit determines

branch prediction

0 = Not Taken

1 = Taken

EECC722 - Shaaban

#93 Lec # 1 Fall 2003 9-8-2003

Basic Dynamic Two-Bit Branch Prediction:

Two-bit Predictor State

Transition Diagram

EECC722 - Shaaban

#94 Lec # 1 Fall 2003 9-8-2003

Prediction Accuracy

of A 4096-Entry Basic

Dynamic Two-Bit

Branch Predictor

Integer average 11%

FP average 4%

Integer

EECC722 - Shaaban

#95 Lec # 1 Fall 2003 9-8-2003

From The Analysis of Static Branch Prediction :

MIPS Performance Using Canceling Delay Branches

EECC722 - Shaaban

#96 Lec # 1 Fall 2003 9-8-2003

Correlating Branches

Recent branches are possibly correlated: The behavior of

recently executed branches affects prediction of current

branch.

Example:

B1

B2

B3

if (aa==2)

aa=0;

if (bb==2)

bb=0;

if (aa!==bb){

L1:

L2:

DSUBUI

BENZ

DADD

DSUBUI

BNEZ

DADD

DSUBUI

BEQZ

R3, R1, #2

R3, L1

R1, R0, R0

R3, R1, #2

R3, L2

R2, R0, R0

R3, R1, R2

R3, L3

; b1 (aa!=2)

; aa==0

; b2 (bb!=2)

; bb==0

; R3=aa-bb

; b3 (aa==bb)

Branch B3 is correlated with branches B1, B2. If B1, B2 are

both not taken, then B3 will be taken. Using only the behavior

of one branch cannot detect this behavior.

EECC722 - Shaaban

#97 Lec # 1 Fall 2003 9-8-2003

Correlating Two-Level Dynamic GAp Branch Predictors

•

•

•

•

Improve branch prediction by looking not only at the history of the branch in

question but also at that of other branches using two levels of branch history.

Uses two levels of branch history:

– First level (global):

• Record the global pattern or history of the m most recently executed

branches as taken or not taken. Usually an m-bit shift register.

– Second level (per branch address):

• 2m prediction tables, each table entry has n bit saturating counter.

• The branch history pattern from first level is used to select the proper

branch prediction table in the second level.

• The low N bits of the branch address are used to select the correct

prediction entry within a the selected table, thus each of the 2m tables

has 2N entries and each entry is 2 bits counter.

• Total number of bits needed for second level = 2m x n x 2N bits

In general, the notation: (m,n) GAp predictor means:

– Record last m branches to select between 2m history tables.

– Each second level table uses n-bit counters (each table entry has n bits).

Basic two-bit single-level Bimodal BHT is then a (0,2) predictor.

EECC722 - Shaaban

#98 Lec # 1 Fall 2003 9-8-2003

Dynamic

Branch

Prediction:

Example

if (d==0)

d=1;

if (d==1)

L1:

BNEZ

ADDI

SUBI

BNEZ

R1, L1

R1, R0, #1

R3, R1, #1

R3, L2

; branch b1 (d!=0)

; d==0, so d=1

; branch b2 (d!=1)

.. .

L2:

EECC722 - Shaaban

#99 Lec # 1 Fall 2003 9-8-2003

Dynamic

Branch

Prediction:

Example

(continued)

if (d==0)

d=1;

if (d==1)

L1:

BNEZ

ADDI

SUBI

BNEZ

R1, L1

; branch b1 (d!=0)

R1, R0, #1 ; d==0, so d=1

R3, R1, #1

R3, L2

; branch b2 (d!=1)

.. .

L2:

EECC722 - Shaaban

#100 Lec # 1 Fall 2003 9-8-2003

Low 4 bits of address

Organization of A Correlating Twolevel GAp (2,2) Branch Predictor

Global

(1st level)

Second Level

High bit determines

branch prediction

0 = Not Taken

1 = Taken

Selects

correct

entry in table

Adaptive

GAp

per address

(2nd level)

m = # of branches tracked in first level = 2

Thus 2m = 22 = 4 tables in second level

N = # of low bits of branch address used = 4

Thus each table in 2nd level has 2N = 24 = 16

entries

Selects correct

table

First Level

(2 bit shift register)

n = # number of bits of 2nd level table entry = 2

Number of bits for 2nd level = 2m x n x 2N

= 4 x 2 x 16 = 128 bits

EECC722 - Shaaban

#101 Lec # 1 Fall 2003 9-8-2003

Basic

Basic

Correlating

Two-level

GAp

Prediction Accuracy

of Two-Bit Dynamic

Predictors Under

SPEC89

EECC722 - Shaaban

#102 Lec # 1 Fall 2003 9-8-2003

Multiple Instruction Issue: CPI < 1

•

To improve a pipeline’s CPI to be better [less] than one, and to utilize ILP

better, a number of independent instructions have to be issued in the same

pipeline cycle.

•

Multiple instruction issue processors are of two types:

– Superscalar: A number of instructions (2-8) is issued in the same

cycle, scheduled statically by the compiler or dynamically

(Tomasulo).

• PowerPC, Sun UltraSparc, Alpha, HP 8000 ...

– VLIW (Very Long Instruction Word):

A fixed number of instructions (3-6) are formatted as one long

instruction word or packet (statically scheduled by the compiler).

– Joint HP/Intel agreement (Itanium, Q4 2000).

– Intel Architecture-64 (IA-64) 64-bit address:

• Explicitly Parallel Instruction Computer (EPIC): Itanium.

• Limitations of the approaches:

– Available ILP in the program (both).

– Specific hardware implementation difficulties (superscalar).

– VLIW optimal compiler design issues.

EECC722 - Shaaban

#103 Lec # 1 Fall 2003 9-8-2003

Multiple Instruction Issue:

Superscalar Vs.

• Smaller code size.

• Binary compatibility

across generations of

hardware.

VLIW

• Simplified Hardware for

decoding, issuing

instructions.

• No Interlock Hardware

(compiler checks?)

• More registers, but

simplified hardware for

register ports.

EECC722 - Shaaban

#104 Lec # 1 Fall 2003 9-8-2003

Simple Statically Scheduled Superscalar Pipeline

•

•

•

•

Two instructions can be issued per cycle (two-issue superscalar).

One of the instructions is integer (including load/store, branch). The other instruction

is a floating-point operation.

– This restriction reduces the complexity of hazard checking.

Hardware must fetch and decode two instructions per cycle.

Then it determines whether zero (a stall), one or two instructions can be issued per

cycle.

Instruction Type

1

2

3

Integer Instruction

IF

IF

ID

ID

IF

IF

EX

EX

ID

ID

IF

IF

FP Instruction

Integer Instruction

FP Instruction

Integer Instruction

FP Instruction

Integer Instruction

FP Instruction

4

MEM

EX

EX

EX

ID

ID

IF

IF

Two-issue statically scheduled pipeline in operation

FP instructions assumed to be adds

5

6

WB

EX

MEM

EX

EX

EX

ID

ID

7

WB

WB

EX

MEM

EX

EX

EX

WB

WB

EX

MEM

EX

8

WB

WB

EX

EECC722 - Shaaban

#105 Lec # 1 Fall 2003 9-8-2003

Intel/HP VLIW “Explicitly Parallel

Instruction Computing (EPIC)”

• Three instructions in 128 bit “Groups”; instruction template

fields determines if instructions are dependent or independent

– Smaller code size than old VLIW, larger than x86/RISC

– Groups can be linked to show dependencies of more than three

instructions.

• 128 integer registers + 128 floating point registers

– No separate register files per functional unit as in old VLIW.

• Hardware checks dependencies

(interlocks binary compatibility over time)

• Predicated execution: An implementation of conditional

instructions used to reduce the number of conditional branches

used in the generated code larger basic block size

• IA-64 : Name given to instruction set architecture (ISA).

• Itanium : Name of the first implementation (2001).

EECC722 - Shaaban

#106 Lec # 1 Fall 2003 9-8-2003

Intel/HP EPIC VLIW Approach

original source

code

compiler

Expose

Instruction

Parallelism

Instruction Dependency

Analysis

Exploit

Parallelism:

Generate

VLIWs

Optimize

128-bit bundle

127

0

Instruction 2

Instruction 1

Instruction 0

Template

EECC722 - Shaaban

#107 Lec # 1 Fall 2003 9-8-2003