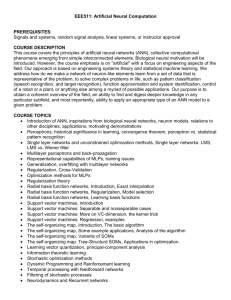

Hierarchical Clustering of Gene Expression Data

Authors: Feng Luo, Kun Tang, Latifur Khan

Graduate: Chien-Ming Hsiao

Outline

- Motivation
- Objective
- Introduction
- Hierarchical Clustering
- Self-Organizing Tree algorithm
- Hierarchical growing self-organizing tree algorithm
- Preliminary result
- Conclusions
- Personal Opinion

Motivation

- Rapid development of biological technologies generates a huge amount of data.
- Analyzing and interpreting these massive data sets is a challenging task.
- We are interested in data analysis tools that can help researchers detect the patterns hidden behind these complex initial data.

Objective

- To extract useful and rational fundamental patterns of gene expression inherent in these huge data sets.

Introduction

- Current approaches for measuring gene expression profiles: SAGE, RT-PCR, cDNA and oligonucleotide microarrays.

[Figure: sample of a microarray]

Introduction

Two classes of algorithms have been successfully used to analyze gene expression data:

- hierarchical clustering
- the self-organizing tree

Hierarchical Clustering

Self-Organizing Tree algorithm

- The Self-Organizing Tree algorithm (SOTA) is based on Kohonen's self-organizing map (SOM) and Fritzke's growing cell structures.
- The output of SOTA is a neural network with a binary tree topology.

Self-Organizing Tree algorithm

Step 1: Initialize the system as a binary tree with three nodes.

[Figure: (A) Initial architecture of SOTA. (B) Two different reference vector updating schemas.]

Self-Organizing Tree algorithm

Step 2: Present all data and compute the distance from each datum to every external cell (tree leaf), using Euclidean or cosine distance.

Step 3: For each datum, select the winning output cell c with the minimum distance $d_{ij}$.

Self-Organizing Tree algorithm

Step 4: Update the reference vector of the winning cell and its neighbors:

$$\Delta w_i = \varphi(t) \times (x - w_i)$$

where $\varphi(t)$ is the learning function, $\varphi(t) = \alpha \times \eta(t)$. Here $\eta(t) = 1/t$ is the learning rate function and $\alpha$ is a learning constant. $\alpha$ will have a different value for the winning cell and its neighbors.

Self-Organizing Tree algorithm

Steps 2, 3 and 4 form a cycle. While the relative error of the entire tree is greater than a threshold, repeat the cycle.

Step 5: When a cycle has finished, increase the network size: two new cells are attached to the cell with the highest resource, and this cell becomes a node. The resource of a cell is the average distance from the input data associated with the cell to the cell itself:

$$\mathrm{Resource}_i = \frac{\sum_{j=1}^{D} d(x_j, w_i)}{D}$$

where D is the number of input data associated with cell i.

Step 6: Repeat from Step 2 until convergence (the resources are below a threshold).

Self-Organizing Tree algorithm

- The time complexity of SOTA is O(n log N).
- The space complexity of SOTA is O(n).
- SOTA may capture the hierarchical relationship incorrectly.

Hierarchical growing self-organizing tree algorithm

The hierarchical growing self-organizing tree algorithm (HGSOT) grows in two directions:

- Vertical growth adds descendants, using the same strategy as SOTA.
- Horizontal growth adds more siblings.
- A level threshold controls growth in the sibling generation.

Hierarchical growing self-organizing tree algorithm

To determine horizontal growth:

[Figure: flowchart of HGSOT with the boxes Initialization, Vertical Growing, Distribution, Horizontal Growing and Distribution, and with decisions on the error of the entire tree and on whether there are more levels to grow]

The pseudo code of HGSOT

1. Initialization: initially the tree has only one root node. Initialize its reference vector with the centroid of the entire data set; all data are associated with the root.
2. Vertical Growing: change each leaf cell to a node and add two children to it. The reference vector of a new cell is initialized as the node's reference vector.
3. Distribution: distribute each input datum between the two newly created cells; find the winning cell (using KLD, see 2.2.1), and then update the reference vector of the winning cell and its neighbor.
4. Error: when the error of the entire tree is larger than a threshold, called the error threshold (TE), repeat Step 3.

The pseudo code of HGSOT

5. Horizontal Growing: when the difference between the minimum and maximum distance of all children cells of a node (x) is less than a threshold, called the level threshold (TL), a child is added to this node; if, on the other hand, the difference is greater than TL, a child is deleted from this node and the horizontal growth terminates.
6. Distribution: distribute the input data associated with x into its descendants along siblings; find the winning cell (using KLD, see 2.2.), then update the reference vector of the winning cell and its neighbor.
7. Error: if the error of the entire tree is greater than TE, repeat Step 6.
8. If there are more levels to grow in the hierarchy, return to Step 2; otherwise, stop.

Hierarchical Cluster Algorithms

How can we distribute the input data of a selected node among its newly created cells?

- One approach is similar to SOTA: the input data of the selected node are distributed not only to its newly created cells but also to its neighbor cells.
- In the other approach, we determine the ancestor node K levels above the selected node, take the sub-tree rooted at that ancestor, and distribute the input data of the selected cell among all cells (leaves) of this sub-tree. This latter approach is known as K-level distribution (KLD).

Hierarchical Cluster Algorithms

KLD: we need to distribute the data associated with node M to the newly created cells.

- For K=1, the data of node M are distributed to cells B, C, D and E.
- For K=0, the data of M are distributed between B and C.

Preliminary result

Experiment setup: experiment data

- Expression data of 112 genes of the rat central nervous system (CNS).
- Four gene families: the Neuro-Glial Markers family (NGMs), the Neurotransmitter Receptors family (NTRs), the Peptide Signaling family (PepS) and Diverse.
- Gene expression was measured by RT-PCR on mRNA from rat cervical spinal cord tissue at nine developmental time points: embryonic days 11 through 21, postnatal days 0 through 14, and adult.
- For each gene, the data are normalized to the maximal expression level among the nine time points.

Preliminary result

Experiment setup: the parameters of HGSOT

- The winner learning rate $\alpha_w$ and the sibling learning rate $\alpha_s$ of HGSOT are 0.15 and 0.015, respectively.
- The error threshold is 0.001.
- The level threshold is 0.8, which means the minimum distance will not be less than 80% of the maximum distance.
- The distribution level K is 4.
- Euclidean distance is used to calculate the similarity.

Preliminary result

[Figures: clustering results]

Conclusions

- HGSOT successfully obtains five clusters similar to Wen et al.'s original HAC result and gives a better hierarchical structure.
- The algorithm can detect more subtle patterns at the lower hierarchical levels, and it shows a more suitable clustering than HAC for some genes.

Personal Opinion

- We would like to do more experiments on different data sets.
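As a rough illustration of the SOTA distribution cycle (Steps 2 to 4) and of the resource measure used in Step 5, the sketch below works on plain Python lists. It is not the authors' implementation. The function names (`euclidean`, `find_winner`, `update`, `resource`, `sota_cycle`) are hypothetical, the learning function is assumed to be the learning constant divided by t, and the tree is reduced to a flat list of leaf cells in which every non-winning cell is treated as a sibling. The update of the mother node is omitted. The default constants 0.15 and 0.015 are the winner and sibling learning rates quoted in the experiment setup.

```python
import math


def euclidean(x, w):
    """Euclidean distance between a datum x and a reference vector w."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, w)))


def find_winner(x, cells):
    """Step 3: index of the leaf cell whose reference vector is closest to x."""
    return min(range(len(cells)), key=lambda i: euclidean(x, cells[i]))


def update(w, x, alpha, t):
    """Step 4: move w toward x by phi(t) * (x - w), assuming phi(t) = alpha / t."""
    phi = alpha / t
    return [wi + phi * (xi - wi) for wi, xi in zip(w, x)]


def resource(data, w):
    """Average distance from the data associated with a cell to its reference vector."""
    return sum(euclidean(x, w) for x in data) / len(data)


def sota_cycle(data, cells, t, alpha_w=0.15, alpha_s=0.015):
    """One pass over the data (Steps 2 to 4).

    Simplifying assumption: every non-winning cell in `cells` is treated as a
    sibling of the winner and updated with the smaller constant alpha_s.
    Returns the index of the winning cell for each datum.
    """
    assignment = []
    for x in data:
        c = find_winner(x, cells)
        for i in range(len(cells)):
            alpha = alpha_w if i == c else alpha_s
            cells[i] = update(cells[i], x, alpha, t)
        assignment.append(c)
    return assignment


if __name__ == "__main__":
    # Toy data: two obvious groups in two dimensions (made up for illustration).
    data = [[0.0, 0.1], [0.1, 0.0], [1.0, 0.9], [0.9, 1.0]]
    cells = [[0.4, 0.4], [0.6, 0.6]]
    for t in range(1, 6):
        assignment = sota_cycle(data, cells, t)
    for i, w in enumerate(cells):
        members = [x for x, c in zip(data, assignment) if c == i]
        if members:
            print(i, w, resource(members, w))
```

In a full SOTA run, the cell with the highest resource would then be turned into a node with two new child cells, and the cycle would be repeated until all resources fall below the chosen threshold.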