(d) to a maximum load (L). - Applied Science University


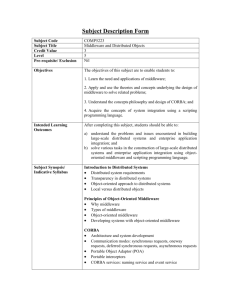

Q1: JAWS: Understanding High Performance Web Systems

Introduction

The emergence of the World Wide Web (Web) as a mainstream technology has forced the issue on many hard problems for network application writers with regard to providing a high quality of service (QoS) to application users. Client-side strategies have included client-side caching and, more recently, caching proxy servers. However, the other side of the problem persists: a popular Web server may be unable to handle the request load placed upon it. Some recent implementations of Web servers have been designed to deal specifically with high load, but they are tied to a particular platform (e.g., SGI WebFORCE) or employ a specific strategy (e.g., a single thread of control). I believe that the key to developing high performance Web systems is a design that is flexible enough to accommodate different strategies for dealing with server load and that is configurable from a high-level specification describing the characteristics of the machine and the expected load on the server.

There is a related role of server flexibility, namely that of making new services, or protocols, available. The Service Configurator pattern has been identified as a solution for making different services available, with inetd cited as an example of this pattern in use. While a per-process approach to services may be the right abstraction some of the time, a more integrated (yet modular!) approach may allow for greater strategic reuse. That is, a per-process model of services requires each server to redesign and reimplement code that is common to all, and at the same time makes it difficult to reuse strategies developed for one service in another. To gain ground in this area, the server should be designed so that new services can be easily added and can easily use strategies provided by the adaptive server framework.
But generalizing the notion of server-side service adaptation, one can envision a framework in which clients negotiate with servers about how services should be handled. Most protocols today have been designed so that data manipulation is handled entirely on one side or the other. An adaptive protocol would enable a server and a client to negotiate which parts of a protocol should be handled on each end for optimal performance.

Motivation

Web servers are synonymous with HTTP servers, and the HTTP 1.0 and 1.1 protocols are relatively straightforward. HTTP requests typically name a file, and the server locates the file and returns it to the requesting client. On the surface, therefore, Web servers appear to have few opportunities for optimization. This may lead to the conclusion that optimization efforts should be directed elsewhere (such as transport protocol optimizations, specialized hardware, and client-side caching).

Empirical analysis reveals that the problem is more complex and the solution space is much richer. For instance, our experimental results show that a heavily accessed Apache Web server (the most popular server on the Web today) is unable to maintain satisfactory performance on a dual-CPU 180 MHz UltraSPARC 2 over a 155 Mbps ATM network, due largely to its choice of process-level concurrency. Other studies have shown that the relative performance of different server designs depends heavily on server load characteristics (such as hit rate and file size).

The explosive growth of the Web, coupled with the larger role servers play on the Web, places increasingly large demands on servers. In particular, the severe loads servers already encounter when handling millions of requests per day will be compounded by the deployment of high-speed networks, such as ATM. Therefore, it is critical to understand how to improve server performance and predictability.
Server performance is already a critical issue for the Internet and is becoming more important as Web protocols are applied to performance-sensitive intranet applications. For instance, electronic imaging systems based on HTTP (e.g., Siemens MED or Kodak Picture Net) require servers to perform computationally intensive image filtering operations (e.g., smoothing, dithering, and gamma correction). Likewise, database applications based on Web protocols (such as AltaVista Search by Digital or Lexis Nexis) support complex queries that may generate a higher number of disk accesses than a typical Web server.

Modeling

Overview of the JAWS Model

Underlying Assumptions

o Infinite network bandwidth. This is consistent with my interests in high-speed networks. For a model of Web servers which limits the network bandwidth, see [Slothouber:96].
o Fixed network latency. We assume the contribution of network latency to be negligible. This will be more true with persistent HTTP connections and true request multiplexing.
o Client requests are "serialized". This simply means that the server will process successive requests from a single client in the order in which the client issues them.

Research questions

o What is the performance when the average server rate is constant?
o What is the performance when the average server rate degrades with request rate?
o What degradation best models actual performance?

Benchmarking

Benchmarking Testbed Overview

Hardware Testbed

Our hardware testbed consisted of one Sun Ultra-1 and four Sun Ultra-2 workstations. The Ultra-1 has 128MB of RAM with a 167MHz UltraSPARC processor. Each Ultra-2 has 256MB of RAM and is equipped with 2 UltraSPARC processors running at 168MHz. Each processor has 1MB of internal cache. All the machines are connected to a regular Ethernet configuration. The four Ultra-2 workstations are connected via an ATM network running through a Bay Networks LattisCell 10114 ATM switch, with a maximum bandwidth of 155Mbps.
One of the Ultra-2 workstations hosted the Web server, while the three remaining Ultra-2 workstations were used to generate requests to benchmark the server. The Ultra-1 workstation served to coordinate the startup of the benchmarking clients and the gathering of data after the end of benchmarking runs.

Software Request Generator

Request load was generated by the WebSTONE webclient, which was modified to be multithreaded. Each "child" of the webclient iteratively issues a request, receives the requested data, issues a new request, and so on. Server load can be increased by increasing the number of webclient "children". The results of the tests are collected and reported by the webclients after all the requests have completed.

Experiments

Each experiment consists of several rounds, one round for each server in our test suite. Each round is conducted as a series of benchmarking sessions. Each session consists of having the benchmarking client issue a number of requests (N) for a designated file of a fixed size (Z), at a particular load level beginning at l. Each successive session increases the load by some fixed step value (d) up to a maximum load (L). The webclient requests a standard file mix distributed by WebSTONE, which is representative of typical Web server request patterns.

Findings

By far, the greatest impediment to performance is the host filesystem of the Web server. However, factoring out I/O, the primary determinant of server performance is the concurrency strategy.

For single-CPU machines, single-threaded solutions are acceptable and perform well. However, they do not scale on multi-processor platforms.

Process-based concurrency implementations perform reasonably well when the network is the bottleneck. However, on high-speed networks like ATM, the cost of spawning a new process per request is relatively high.

Multi-threaded designs appear to be the choice of the top Web server performers.
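The concurrency strategies compared in these findings can be sketched structurally as follows. This is only an illustration in Python, not JAWS code: the `handle` function and the three `serve_*` names are hypothetical, and Python threads do not provide the multi-processor scaling measured in the experiments, so the sketch shows the shape of each strategy rather than its performance.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def handle(request):
    """Hypothetical request handler; a real server would locate and return a file."""
    return "response to " + request


def serve_single_threaded(requests):
    # One thread of control handles every request in turn.
    return [handle(r) for r in requests]


def serve_thread_per_request(requests):
    # Spawn a new thread for each request, then wait for all of them.
    results = [None] * len(requests)

    def worker(index, request):
        results[index] = handle(request)

    threads = [
        threading.Thread(target=worker, args=(i, r))
        for i, r in enumerate(requests)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def serve_thread_pool(requests, pool_size=4):
    # Reuse a fixed set of threads, avoiding the per-request creation cost.
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        return list(pool.map(handle, requests))
```

All three functions return the same responses in request order; they differ only in how many threads are created and when.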
The cost of spawning a thread is much lower than that of spawning a process. Additional information is available in this paper.

Adaptation

Concurrency Strategies

Each concurrency strategy has positive and negative aspects, which are summarized in the table below. Thus, to optimize performance, Web servers should be adaptive, i.e., customizable to use the most beneficial strategy for particular traffic characteristics, workloads, and hardware/OS platforms.

In addition, workload studies indicate that the majority of requests are for small files. Thus, Web servers should adaptively optimize themselves to give higher priority to smaller requests. Combined, these techniques could potentially produce a server that is highly responsive and maximizes throughput. The next generation of the JAWS server plans to implement the prioritized strategy.

| Strategy | Advantages | Disadvantages |
| --- | --- | --- |
| Single-threaded | No context switching overhead. Highly portable. | Does not scale for multi-processor systems. |
| Process-per-request | More portable for machines without threads. | Creation cost high. Resource intensive. |
| Process pool | Avoids creation cost. | Requires mutual exclusion in some operating systems. |
| Thread-per-request | Much faster than fork. | May require mutual exclusion. Not as portable. |
| Thread pool | Avoids creation cost. | Requires mutual exclusion in some operating systems. |

Summary of Concurrency Strategies

Protocol Processing

There are instances where the content being transferred may require extra processing. For instance, in HTTP/1.0 and HTTP/1.1, files may have an encoding type. This generally corresponds to a file having been stored in some compressed format (e.g., gzip). In HTTP, it has been customary for the client to perform the decoding. However, there may be cases where the client lacks the proper decoder. To handle such cases, it would be useful for the server to do the decoding on behalf of the client.
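A minimal sketch of server-side decoding, assuming the file is stored gzip-compressed and the client states what it accepts in an `Accept-Encoding` header. The function name `prepare_body` and its parameters are hypothetical, and q-values in the header are ignored for brevity, so this is not a complete implementation of HTTP content negotiation.

```python
import gzip


def prepare_body(stored_bytes, stored_encoding, accept_encoding_header):
    """Return (body, content_encoding) suited to what the client accepts.

    stored_encoding is the encoding of the file on disk ("gzip" or None).
    accept_encoding_header is the raw Accept-Encoding value from the client.
    """
    # Parse "gzip, deflate;q=0.5" into a set of coding names.
    # Simplification: q-values are dropped rather than interpreted.
    accepted = {
        token.split(";")[0].strip().lower()
        for token in accept_encoding_header.split(",")
        if token.strip()
    }
    if stored_encoding == "gzip" and "gzip" not in accepted:
        # The client lacks the decoder: decode on its behalf.
        return gzip.decompress(stored_bytes), None
    # The client can decode, or the file is not encoded: send it as stored.
    return stored_bytes, stored_encoding
```

A client that sends `Accept-Encoding: deflate` would receive the decompressed bytes with no content encoding, while a client that lists `gzip` would receive the stored bytes unchanged.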
A more advanced server may detect that a particularly large file would transfer more quickly to the client in some compressed form. But this kind of processing would require negotiation between the client and the server as to which content transformations are possible for the server and acceptable to the client. Thus, the server would be required to adapt to the abilities of the client, as well as to the conditions of the network connection.

JAWS Adaptive Web Server

Here we will briefly describe the object-oriented architecture of the JAWS Web server framework. In order to understand the design, it is important to motivate the need for framework architectures.

Solutions to the Reuse Problem

Software reuse is a vital issue in the successful development of large software systems. Software reuse can reduce development effort and maintenance costs. Thus, much effort in software engineering has been devoted to the problem of creating reusable software.

The techniques for developing reusable software have evolved through several generations of language features (e.g., structured programming, functional programming, 4GLs, object-oriented programming), compilation tools (e.g., source file inclusion, compiled object files, class libraries, components), and system design methods (e.g., functional design, complexity analysis, formal methods, object-oriented design, design patterns). While each of these techniques helps to facilitate the development and integration of reusable software, their roles are passive. This means that the software developer must decide how to put together the software system from the repository of reusable software. The figure below illustrates the passive nature of these solutions.

Application development with class libraries and design patterns.

The advantage of this approach is that it maximizes the number of options available to software developers.
This can be important in development environments with open-ended requirements, where design flexibility is of premium value. However, the disadvantage is that every new project must be implemented from the ground up every single time.

To gain architectural reuse, software developers may use an application framework to create a system. An application framework provides reusable software components for applications by integrating sets of abstract classes and defining standard ways in which instances of these classes collaborate. Thus, a framework provides an application skeleton which can be customized by inheriting from and instantiating reusable components in the framework. The result is a pre-fabricated design at the cost of reduced design flexibility. An application framework architecture is shown in the figure below.

Application development with an application framework.

Frameworks can allow developers to gain greater reuse of designs and code. This comes from leveraging the knowledge of an expert application framework developer who has largely pre-determined what libraries and objects to use, what patterns they follow, and how they should interact. However, frameworks are much more difficult to develop than a class library. The design must provide an adequate amount of flexibility and at the same time dictate enough structure to be a nearly complete application. This balance must be just right for the framework to be useful.

The JAWS Web Server Framework

The figure below illustrates the object-oriented software architecture of the JAWS Web server framework. As indicated earlier, our results demonstrate the performance variance that occurs as a Web server experiences changing load conditions. Thus, performance can be improved by dynamically adapting the server behavior to these changing conditions. JAWS is designed to allow Web server concurrency and event dispatching strategies to be customized in accordance with key environmental factors.
These factors include static characteristics, such as support for kernel-level threading and/or asynchronous I/O in the OS and the number of available CPUs, as well as dynamic factors, such as Web traffic patterns and workload characteristics.

JAWS Framework Overview

JAWS is structured as a framework that contains the following components: an Event Dispatcher, Concurrency Strategy, I/O Strategy, Protocol Pipeline, Protocol Handlers, and Cached Virtual Filesystem. Each component is structured as a set of collaborating objects implemented with the ACE C++ communication framework. The components and their collaborations follow several design patterns, which are named along the borders of the components. Each component plays the following role in JAWS:

o Event Dispatcher: This component is responsible for coordinating the Concurrency Strategy with the I/O Strategy. The passive establishment of connections with Web clients follows the Acceptor Pattern. New incoming requests are serviced by some concurrency strategy. As events are processed, they are dispatched to the Protocol Handler, which is parameterized by the I/O strategy. The ability to dynamically bind to a single concurrency strategy and I/O strategy from a number of choices follows the Strategy Pattern.
o Concurrency Strategy: This implements concurrency mechanisms (such as single-threaded, thread-per-request, or thread pool) that can be selected adaptively at run-time using the State Pattern, or pre-determined at initialization-time. Configuring the server as to which concurrency strategies are available follows the Service Configurator Pattern. When concurrency involves multiple threads, the strategy creates protocol handlers that follow the Active Object Pattern.
o I/O Strategy: This implements the I/O mechanisms (such as asynchronous, synchronous, and reactive). Multiple I/O mechanisms can be used simultaneously. Asynchronous I/O is implemented using the Asynchronous Completion Token Pattern.
Reactive I/O is accomplished through the Reactor Pattern. Both Asynchronous and Reactive I/O use the Memento Pattern to capture and externalize the state of a request so that it can be restored at a later time.
o Protocol Handler: This object allows system developers to apply the JAWS framework to a variety of Web system applications. A Protocol Handler object is parameterized by a concurrency strategy and an I/O strategy, but these remain opaque to the protocol handler. In JAWS, this object implements the parsing and handling of HTTP request methods. The abstraction allows other protocols (e.g., HTTP/1.1 and DICOM) to be incorporated easily into JAWS. To add a new protocol, developers simply write a new Protocol Handler implementation, which is then configured into the JAWS framework.
o Protocol Pipeline: This component provides a framework that allows a set of filter operations to be applied easily to the data being processed by the Protocol Handler. This integration is achieved by employing the Adapter Pattern. Pipelines follow the Streams Pattern for input processing. Pipeline components are made available with the Service Configurator Pattern.
o Cached Virtual Filesystem: This component improves Web server performance by reducing the overhead of filesystem accesses. The caching policy is strategized (e.g., LRU, LFU, Hinted, and Structured) following the Strategy Pattern. This allows different caching policies to be profiled for effectiveness and enables optimal strategies to be configured statically or dynamically. The cache is instantiated using the Singleton Pattern.
o Tilde Expander: This mechanism is another cache component that uses a perfect hash table that maps abbreviated user login names (e.g., ~schmidt) to user home directories (e.g., /home/cs/faculty/schmidt).
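The caching idea behind the Tilde Expander can be sketched as below. This is an assumption-laden illustration, not the JAWS implementation: it uses an ordinary Python dictionary rather than a perfect hash table, and `TildeExpander` and `lookup_home` are hypothetical names, with `lookup_home` standing in for the slow lookup in the system user information file.

```python
class TildeExpander:
    """Caches the mapping from "~login" to a home directory."""

    def __init__(self, lookup_home):
        # lookup_home is a hypothetical callable standing in for the slow
        # lookup in the system user information file (e.g., /etc/passwd).
        self._lookup_home = lookup_home
        self._cache = {}

    def expand(self, path):
        """Replace a leading "~login" with that user's home directory."""
        if not path.startswith("~"):
            return path
        login, sep, rest = path[1:].partition("/")
        if login not in self._cache:
            # Cache miss: pay for the slow lookup once per login name.
            self._cache[login] = self._lookup_home(login)
        return self._cache[login] + sep + rest
```

After the first request for a given login name, later expansions for that name are answered from memory without calling `lookup_home` again.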
When personal Web pages are stored in user home directories, and user directories do not reside in one common root, this component substantially reduces the disk I/O overhead required to access a system user information file, such as /etc/passwd.

Q2: ECE1770: Trends in Middleware Systems

Course Lecture Jan 18, 2001

This lecture includes the following:

o Examples of distributed applications
o Problems in developing distributed applications
o Middleware Platforms, definitions and characteristics
o Categories of middleware

Examples of Distributed Applications

Distributed applications are applications that are designed to run on distributed systems. The distributed systems of concern in this course are network-based or connected systems such as LANs and WANs, not clusters or multiprocessor machines. Some of the most popular distributed applications are:

o OLTP (Online Transaction Processing): Online reservation systems in travel agencies are an example of OLTP. In this kind of system there is a central system, and many other machines are connected to it from different geographical locations.
o Banking applications
o Database management applications
o Groupware applications like Lotus Notes

In the above systems there are usually clients and servers, with a network in between. On the server side there may be databases and database management systems, and on the client side there may be any number of clients with different applications. Client applications may be thick or thin. Thin client programs, such as an applet or an HTML browser, have very little business logic in them, whereas thick client programs, such as banking or simulation applications, contain a lot of business logic and computation.

Some problems in developing distributed applications:

o Data sharing and concurrency
o Heterogeneity: different operating systems, different platforms, different architectures, different programming languages, and so on.
For instance, transferring data between machines with different architectures requires conversion, because different machines use different data formats. Imagine a 64-bit architecture on one side and a 32-bit architecture on the other, or a machine with little-endian binary format on one side and big-endian format on the other. Since these machines interpret information differently, a special program is needed to do the required conversions when numbers or other information are exchanged between them.
o Reliability in communication: If there is a failure in a single machine, the machine simply crashes, but in distributed systems the situation is more complicated. For example, if one side sends a request and does not get a response from the other side, how should it react? Is the connection down, or is the server down? Should the calling application wait, or should it send another request?
o Session management and tracking
o Security: This is a more severe problem in distributed systems than in local systems.

There are many other problems that must be addressed for a distributed application to be developed. Anyone who implements a distributed system single-handedly has to solve all such problems. Because of these problems, we need a standard solution with modules, libraries, functionality, and services that address them, and this is what is generally referred to as middleware.

Middleware Platforms, definitions and characteristics

Although there are many definitions of middleware, there is still no clear and exact definition. There are some services that used to be part of operating systems and are now considered part of middleware. On the other hand, there are some services that are part of middleware and will be part of operating systems in the future.
Some people also regard other services, such as the TCP/IP protocols of the network layer, as middleware or as a middleware layer.

The essential role of middleware is to manage the complexity and heterogeneity of distributed infrastructures and thereby provide a simpler programming environment for distributed application developers. It is therefore most useful to define middleware as any software layer that is placed above the distributed system's infrastructure (the network OS and APIs) and below the application layer.

One way of viewing a middleware platform is to look at it as residing in the middle, that is, underneath the application and above the operating system. This is possibly where the term "middleware" came from.

The classical definition of an operating system is "the software that makes the hardware usable". Similarly, middleware can be considered to be the software that makes a distributed system programmable. Just as a bare computer without an operating system can be programmed only with great difficulty, programming a distributed system is in general much more difficult without middleware.

Middleware is sometimes informally called "plumbing" because it connects parts of a distributed application with data pipes and then passes data between them. It is also sometimes called "glue" technology, because it is often used to integrate legacy components.

Middleware provides transparency with respect to implementation language and to the other heterogeneity issues mentioned above. Transparency in this context means that these differences are invisible to the application developer at the implementation level.

Any kind of service that fits one of the aforementioned definitions can be considered a middleware platform.

Figure 1.

Early distributed systems technologies such as OSF DCE (Open Software Foundation Distributed Computing Environment) and Sun's RPC (Remote Procedure Call) can be viewed as middleware.
DCE has very good security support, a time service, and a directory service, but it supports only the C language.

Several distributed object platforms have recently become quite popular. These platforms extend earlier distributed systems technologies. These middleware platforms are CORBA (Common Object Request Broker Architecture), DCOM (Distributed Component Object Model), and Java RMI (Remote Method Invocation).

The differences between these middleware platforms lie in the languages and the platforms that they support.

CORBA supports multiple languages and multiple platforms. One can use different operating systems in a distributed system that uses CORBA. It also supports object orientation.

Java RMI supports just one language, Java, but it can run on different platforms provided that a Java virtual machine exists on them. It supports object orientation too.

DCOM is a Microsoft middleware solution. It runs only on Microsoft's operating systems, such as Windows, and it supports only Microsoft's programming languages. Therefore it does not support multiple platforms. DCOM is also object oriented.

Which is the best? Which one should we use? Which one will survive in the future? There is no simple answer to these questions. Each of the above middleware platforms has strengths and weaknesses.

The characteristics of the new middleware platforms can be summarized as follows:

o Masking heterogeneity in the underlying infrastructure by cloaking system specifics.
o Permitting heterogeneity at the application level by allowing the various components of the distributed application to be written in any suitable language.
o Providing structure for distributed components by adopting object-oriented principles.
o Offering invisible, behind-the-scenes distribution as well as the ability to know what is happening behind the scenes.
o Providing general-purpose distributed services that aid application development and deployment.

Categories of middleware platforms

o Distributed Tuple Spaces

A distributed relational database offers the abstraction of distributed tuples. Its Structured Query Language (SQL) allows programmers to manipulate sets of these tuples in a declarative language with intuitive semantics and rigorous mathematical foundations based on set theory.

Linda is a framework offering a distributed tuple abstraction called Tuple Space (TS). It allows participants to publish information into the TS. On one side there are publishers and on the other side there are subscribers. Publishers can publish into the TS, and subscribers can subscribe to an item of interest and use the published material. The advantage of such a system is that it offers spatial decoupling by allowing depositing and withdrawing processes to be unaware of each other's identities. Publishers (or producers) and subscribers (or consumers) are decoupled, i.e., they do not have to be present in the system at the same time, although they can work concurrently. Data items flow into and out of the system separately.

JavaSpaces is a concept very closely related to Linda's TS. Jini is a network technology built on top of JavaSpaces. Jini network technology provides a simple infrastructure for delivering services in a network and for creating spontaneous interaction between programs that use these services, regardless of their hardware/software implementation.
Any kind of network made up of services (applications, databases, servers, devices, information systems, mobile appliances, storage, printers, etc.) and clients (requesters) of those services can be easily assembled, disassembled, and maintained using Jini technology. Services can be added to or removed from the network, and new clients can find existing services, all without administration.

o RPC (Remote Procedure Call)

RPC offers the abstraction of being able to invoke a procedure whose body is across the network. An RPC is a call sent from one machine or process to another machine or process for some service. An RPC is synchronous, beginning with a request from a local calling program to use a remote procedure and ending when the calling program receives the results from the procedure.

An implementation of the Distributed Computing Environment, DCE RPC, includes a compiler that translates an interface definition into a client stub, which marshals a procedure call and its parameters into a packet, and a server stub, which unmarshals the packet into a local server call. The client stub can marshal parameters from a language and machine representation different from the server stub's, thereby enabling interoperation. For example, if the client machine has a 32-bit architecture, the server machine has a 64-bit architecture, and the server machine is to send an integer to the client machine, it is the stub that is aware of the differences in data formats and does the conversion. This whole process is invisible to the applications (transparency).

An RPC implementation also includes a run-time library which implements the protocol for message exchanges on a variety of network transports, enabling interoperation at that level.

Note: There is a problem with transparency! Imagine a client and a server running a simulation application, with a lot of computation on the server side and the visualization on the client side.
The server therefore computes and sends the results to the client machine. Now imagine that the server program includes three nested loops as follows:

    for i = 1 to 100
        for j = 1 to 100
            for k = 1 to 100
                (remote procedure call)

The RPC will be called a million times. Every time an RPC is called, some operations must be performed before the actual data is sent, such as opening a connection, depending on the RPC protocol. In this case a connection will be opened a million times, and each time only a few bytes of information will be sent. The ideal is to open the connection once and send a lot of information. Since the RPC call is invisible to the programmer, the code might become very inefficient. The point is that although transparency is a very good feature, it allows programmers to implement inefficient systems.

o Distributed Object Middleware

This is a refinement of the RPC category. The RPC category is procedure-oriented and involves languages like C, Pascal, and Modula. Distributed Object Middleware is an evolution of RPC with object-oriented technology. It provides the abstraction of an object that is remote but whose methods can be invoked just like those of an object in the same address space as the caller.

CORBA (Common Object Request Broker Architecture) is a standard for distributed object computing. It is part of OMA (Object Management Architecture), developed by OMG (Object Management Group). CORBA is considered by most experts to be the most advanced kind of middleware commercially available, and the most faithful to classical object-oriented programming principles.

Figure 2.

DCOM (Distributed Component Object Model) is a distributed object technology from Microsoft that evolved from its OLE (Object Linking and Embedding) and COM (Component Object Model).

Figure 3.

Java has a facility called RMI (Remote Method Invocation) that is similar to the distributed object abstraction of CORBA and DCOM.
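The distributed object abstraction shared by these platforms, and the transparency caveat from the RPC note, can be illustrated with a small in-process sketch. Everything here is hypothetical: `Servant`, `Transport`, and `Proxy` are illustrative names, JSON stands in for a real marshalling format, and the "network" is an in-memory object that merely counts round trips. It is not how CORBA, DCOM, or RMI are implemented.

```python
import json


class Servant:
    """The 'remote' object; in a real system it would live in another process."""

    def add(self, a, b):
        return a + b


class Transport:
    """Hypothetical in-memory stand-in for the network; counts round trips."""

    def __init__(self, servant):
        self._servant = servant
        self.round_trips = 0

    def send(self, request_bytes):
        self.round_trips += 1
        # Server-side stub: unmarshal the request and dispatch to the servant.
        calls = json.loads(request_bytes)
        results = [getattr(self._servant, c["method"])(*c["args"]) for c in calls]
        return json.dumps(results).encode()


class Proxy:
    """Client-side stub: remote methods look like local ones."""

    def __init__(self, transport):
        self._transport = transport

    def __getattr__(self, method):
        def remote_call(*args):
            # Marshal one call, send it, and unmarshal the single result.
            request = json.dumps([{"method": method, "args": args}]).encode()
            return json.loads(self._transport.send(request))[0]
        return remote_call

    def batch(self, calls):
        """Send many (method, args) pairs in a single round trip."""
        request = json.dumps([{"method": m, "args": a} for m, a in calls]).encode()
        return json.loads(self._transport.send(request))
```

Calling `proxy.add(i, j)` inside two nested loops of 10 iterations costs 100 round trips, whereas passing the same 100 calls to `proxy.batch` costs one. The results are identical, which is why the inefficiency is easy to overlook when the remote call is transparent.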
RMI is a specification from Sun Microsystems that enables the programmer to create distributed Java-to-Java applications in which the methods of remote Java objects can be invoked from other Java virtual machines, possibly on different hosts. RMI provides heterogeneity across operating systems and Java vendors, but not across languages.

Figure 4.

Note: What is the advantage of object orientation? The advantage of object orientation can be viewed from the user and application developer perspectives. Some of the advantages from the user perspective are as follows:

o Application objects are presented as objects that can be manipulated in a way that is similar to the manipulation of real-world objects.
o Common functionality in different applications is realized by common shared objects, leading to a uniform and consistent user interface.
o Existing applications can be embedded in an object-oriented environment, so object-oriented technology does not make existing applications obsolete.

Some of the advantages from the application developer's view are as follows:

o Through encapsulation of object data, applications are built in a truly modular fashion.
o It is possible to build applications in an incremental way, preserving correctness during the development process.
o Cost and lead-time can be saved by making use of existing components.

Encapsulation, inheritance, and polymorphism are features of object orientation.

o MOM (Message Oriented Middleware)

MOM provides the abstraction of a message queue that can be accessed across a network. In this system, information is passed in the form of a message from one program to one or more other programs.

In MOM there are queues on the client and server sides. These queues can be viewed as mailboxes, storage places, or buffers that are managed by the MOM. Such queues can support persistency.
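The mailbox view of a queue, and what persistency adds to it, can be sketched with a file-backed queue. This is a deliberately simplified illustration, not a MOM product's API: `PersistentQueue` is a hypothetical name, messages are stored one JSON document per line, and there is no locking or crash safety.

```python
import json
import os
import tempfile


class PersistentQueue:
    """A file-backed FIFO mailbox; messages survive after the sender exits."""

    def __init__(self, path):
        self._path = path

    def put(self, message):
        # Append one JSON-encoded message per line.
        with open(self._path, "a", encoding="utf-8") as f:
            f.write(json.dumps(message) + "\n")

    def drain(self):
        """Return all queued messages in arrival order and empty the queue."""
        if not os.path.exists(self._path):
            return []
        with open(self._path, "r", encoding="utf-8") as f:
            messages = [json.loads(line) for line in f if line.strip()]
        os.remove(self._path)
        return messages


with tempfile.TemporaryDirectory() as directory:
    path = os.path.join(directory, "mailbox.jsonl")
    # The sender deposits messages while the receiver is offline.
    PersistentQueue(path).put({"op": "deposit", "amount": 10})
    PersistentQueue(path).put({"op": "withdraw", "amount": 5})
    # A separate receiver object picks them up later, in order.
    received = PersistentQueue(path).drain()
```

Because each `PersistentQueue(path)` is a fresh object, the sender and the receiver share nothing except the file, which mimics two programs that are never running at the same time.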
Persistent queues store messages durably: if a message arrives while the system is not ready to receive it, the queue guarantees that the message will still be available to the system later. The issues with such queues are managing the messages held in the queue (a database issue) and reliability, i.e. ensuring that messages are delivered in order even if they arrive out of order. In systems with heavy request processing and computation, queues are very useful for keeping the system from being overloaded. Another kind of application that can benefit from persistent queues is one that deals with disconnected operation, i.e. the system is not online all the time. It may be stalled or migrated, or it may be a wireless device that cannot receive information in certain situations. With persistent queues, messages or requests are stored in the queue, and whenever the system becomes available again it can retrieve them from the queue. MOM provides temporal and spatial decoupling.

NOTE: Persistence and transience are two terms from programming languages with the following meanings:

Persistence: The life cycle of data units or objects extends beyond the life cycle of the program. The program has finished executing, but the data units or objects related to it still exist. This is made possible by special storage, such as queues, for the data units or objects.

Transience: Data units or objects no longer exist after the program has finished executing; only the result may be available.

o TP Monitors (Transaction Processing Monitors)

The main function of a TP monitor is to coordinate the flow of requests between terminals or other devices and the application programs that can process these requests. A request is a message that asks the system to execute a transaction. The application that executes the transaction usually accesses resource managers, such as database and communications systems.
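The persistent-queue behavior described above under MOM, where a message outlives the program that sent it and waits for a receiver that is currently offline, can be sketched with a file-backed queue. This is a minimal illustration under stated assumptions, not how any particular MOM product works: PersistentQueue and demo are hypothetical names, and SQLite (from the Python standard library) stands in for the queue's durable storage.

```python
import os
import sqlite3
import tempfile


class PersistentQueue:
    """A tiny file-backed FIFO queue; messages survive closing the queue."""

    def __init__(self, path):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
        )
        self.conn.commit()

    def put(self, body):
        self.conn.execute("INSERT INTO messages (body) VALUES (?)", (body,))
        self.conn.commit()

    def get(self):
        """Remove and return the oldest message, or None if the queue is empty."""
        row = self.conn.execute(
            "SELECT id, body FROM messages ORDER BY id LIMIT 1"
        ).fetchone()
        if row is None:
            return None
        self.conn.execute("DELETE FROM messages WHERE id = ?", (row[0],))
        self.conn.commit()
        return row[1]

    def close(self):
        self.conn.close()


def demo():
    path = os.path.join(tempfile.mkdtemp(), "queue.db")

    # The sender enqueues two messages while the receiver is offline.
    sender = PersistentQueue(path)
    sender.put("deposit 50")
    sender.put("withdraw 20")
    sender.close()

    # Later the receiver comes online and drains the queue in order.
    receiver = PersistentQueue(path)
    received = [receiver.get(), receiver.get(), receiver.get()]
    receiver.close()
    return received


if __name__ == "__main__":
    print(demo())
```

The sender and the receiver never run at the same time and never refer to each other, only to the queue. That is the temporal and spatial decoupling mentioned above.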
A typical TP monitor includes functions for transaction management, transactional interprogram communication, queuing, and forms and menu management. In a banking application, imagine a crash or power outage during a withdrawal from or deposit to an account. The system must roll back the whole operation and leave the account balance unchanged. In such systems the entire workload is one unit of work (a transaction): either all of it gets done or none of it does. TP monitors provide the primitives, functionality and services needed to realize transactions in a distributed system. It can be said that the concept of middleware started with early TP monitors in OLTP and has evolved into today's middleware concepts. Message queues and TP monitors can also be combined into a system that has the properties of both and is an evolution of each concept.

o Directory Services

A directory is like a database, but tends to contain more descriptive, attribute-based information. The information in a directory is generally read much more often than it is written. As a consequence, directories don't usually implement the complicated transaction or rollback schemes that regular databases use for high-volume complex updates. Directory updates are typically simple, all-or-nothing changes, if they are allowed at all. Directories are tuned to give quick responses to high-volume lookup or search operations. They may have the ability to replicate information widely in order to increase availability and reliability while reducing response time. When directory information is replicated, temporary inconsistencies between the replicas may be acceptable, as long as they are eventually brought back in sync.

DNS (Domain Name System) is a distributed database that resides on multiple machines on the Internet and is used to convert between names and addresses and to provide email routing information.
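Returning to the banking example given under TP monitors, the all-or-nothing property can be illustrated with a short sketch. It uses a single in-memory SQLite database from the Python standard library, so it shows only a local transaction, not a TP monitor coordinating several resource managers. The accounts table, the names transfer, balances and make_bank, and the fail_midway flag that simulates a crash are all assumptions made for this example.

```python
import sqlite3


def make_bank():
    """Create an in-memory database with two illustrative accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)]
    )
    conn.commit()
    return conn


def transfer(conn, src, dst, amount, fail_midway=False):
    """Move money between two accounts as one unit of work."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
            if fail_midway:
                raise RuntimeError("simulated crash between the two updates")
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
        return True
    except RuntimeError:
        return False


def balances(conn):
    """Return the current balances as a {name: balance} dictionary."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))


if __name__ == "__main__":
    conn = make_bank()
    transfer(conn, "alice", "bob", 30, fail_midway=True)
    print(balances(conn))  # the withdrawal was rolled back
    transfer(conn, "alice", "bob", 30)
    print(balances(conn))  # both updates were applied together
```

When the simulated crash occurs after the withdrawal but before the deposit, the rollback restores the original balances, so the money is neither lost nor duplicated.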
LDAP (Lightweight Directory Access Protocol) is a protocol for accessing online directory services. It runs over TCP/IP and is based on entries. An entry is a collection of attributes that has a name, called a distinguished name (DN). The DN is used to refer to the entry unambiguously. Each of the entry's attributes has a type and one or more values. The types are typically mnemonic strings, like "cn" for common name or "mail" for email address. The values depend on the type of the attribute. For example, a mail attribute might contain the value "babs@umich.edu". A jpegPhoto attribute would contain a photograph in binary JPEG/JFIF format.

In LDAP, directory entries are arranged in a hierarchical tree-like structure that reflects political, geographic and/or organizational boundaries. Entries representing countries appear at the top of the tree. Below them are entries representing states or national organizations. Below them might be entries representing people, organizational units, printers, documents, or just about anything else you can think of. In addition, LDAP allows you to control which attributes are required and allowed in an entry through the use of a special attribute called objectclass. The values of the objectclass attribute determine the schema rules the entry must obey.

A trader service is like the yellow pages. There is a language for accessing the trader that allows you to specify categories. For instance, one party can use a trader to publish a system that has 8 processors with a 64-bit architecture and high-speed interconnects, while someone else is looking for such a machine. That person can submit a query in the trader's language, and the trader will return an object reference to this machine.

o Component Oriented Frameworks

Application programming models fall into two categories.
The classic model entails the creation of an application as a single standalone entity, whereas the component model allows the creation of an application as a set of reusable components. Perhaps the most significant recent development is components. Component-based middleware evolves beyond object-oriented software: you develop applications by gluing together off-the-shelf components that may be supplied in binary form by a range of vendors. This type of development has been strongly influenced by Sun's JavaBeans and Microsoft's COM technologies.

EJB (Enterprise JavaBeans) is a specification created by Sun Microsystems that defines a framework for server-side Java components. EJB makes distributed object technology more accessible and easier to use by offering a higher level of abstraction, which makes software development more efficient.

COM+ is the next generation of COM and DCOM, and it greatly simplifies DCOM programming. COM (Component Object Model) refers to both a specification and an implementation developed by Microsoft Corporation that provide a framework for integrating components. This framework supports interoperability and reusability of distributed objects by allowing developers to build systems by assembling reusable components from different vendors, which communicate via COM. By applying COM to build systems from preexisting components, developers hope to reap the benefits of maintainability and adaptability. COM defines an application programming interface (API) that allows the creation of components for use in integrating custom applications, or that allows diverse components to interact. However, in order to interact, components must adhere to a binary structure specified by Microsoft. As long as components adhere to this binary structure, components written in different languages can interoperate.
CCM (CORBA Component Model)

o Database Access Technology Mediators

ODBC (Open Database Connectivity) was developed to create a single standard for database access in the Windows environment.

JDBC is a Java API for database access. It provides a shared language through which applications can talk to database engines, and it offers a set of interfaces that create a common point at which database applications and database engines can meet.

o Application Servers

Application servers are applications that realize most of the business logic. The concept of application servers argues that business logic should reside on a server rather than in a thick client. The advent of the PC made possible a dramatic paradigm shift away from the monolithic architecture of mainframe-based applications, in which the user interface, business logic and data access functionality are all contained in one application. The client/server architecture was in many ways a revolution compared with the old way of doing things. Despite solving the problems of mainframe-based applications, however, client/server was not without faults. For example, because database access functionality and business logic were often contained in the client component, any change to the business logic, the database access code, or even the database itself often required the deployment of a new client component to all users of the application. Such changes would usually break earlier versions of the client component, resulting in a fragile application. The problems of traditional (two-tier) client/server were addressed by the multi-tier client/server architecture.
Conceptually an application can have any number of tiers, but the most popular multi-tier architecture is three-tier, which partitions the system into three logical tiers:

o User interface layer
o Business rules layer
o Database access layer

Three-tier client/server enhances the two-tier client/server architecture by further insulating the client from changes in the rest of the application, and hence creates a less fragile application.

DBMS (Database Management System)

Web Servers

o References

Course Lecture
Middleware, David E. Bakken, Washington State University
Managing Complexity: Middleware Explained, Andrew T. Campbell, Geoff Coulson, and Michael E. Kounavis
Middleware: A Model for Distributed System Services
The Design of the TAO Real-Time Object Request Broker, Douglas C. Schmidt, David L. Levine, and Sumedh Mungee, Department of Computer Science, Washington University, January 18, 1999
CORBA in 14 Days, Jeremy Rosenberg
Communication Networks: Fundamental Concepts and Key Architectures, Leon-Garcia and Widjaja
Implementing Remote Procedure Calls, Andrew D. Birrell and Bruce Jay Nelson