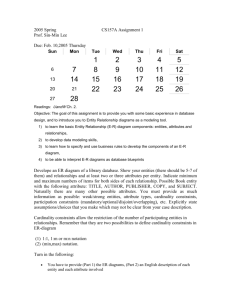

Database Solutions Review Questions
