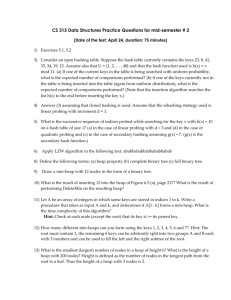


WUCT103 Algorithms & Problem Solving
Session 12: Revision
Wollongong College Australia, Diploma in Information Technology
Sherine Antoun, 2007

Types of Problems
- Well structured: the details, goals and possible actions are all clearly stated
  Example: 3x = 2, solve for x
- Poorly structured: there is uncertainty in details and/or goals and/or possible actions
  Examples: cook dinner; build a successful career

Problem solving method: 5 steps
1. Analyse and define the problem
2. Generate alternate solutions
3. Evaluate the alternate solutions and choose one
4. Implement the chosen solution
5. Evaluate the outcome and learn from the experience

Analyse & define the problem
- Understand & analyse
- Organise & represent the information
- Look at the problem from different perspectives if necessary
- Define (formulate) the problem

Analyse & define the problem: understand & analyse
- Facts, constraints, conditions, assumptions
- Knowledge, experience, skills
- Gather/acquire new knowledge
- Current state & goals
- Permissible actions

Definition of an algorithm
A step-by-step method for solving a problem or doing a task
(Figure: Input List, then ALGORITHM, then Output List.)

Improving your algorithm
- DEFINE ACTIONS: must explain what is done in each step
- REFINE: use consistent terms and language; ensure the algorithm knows where to 'start'
- GENERALISE: can you use it to handle a wider variety of similar problems?
Three constructs
It has been proven that only 3 "constructs" are necessary to make an algorithm work for computer science
- SEQUENCE: an ordered 'list' (sequence) of instructions
- DECISION (selection): may need to test if some condition is true or not true
- REPETITION: may need to repeat some sequence of instructions

Representing an algorithm
- Visually (flowchart)
- PseudoCode
- Plain 'English'

The three constructs shown visually
(Figure: flowcharts for sequence (Action 1, Action 2, ... Action n), decision (a test with a true branch and a false branch, each leading to a sequence of actions) and repetition (a while condition that loops while true and exits when false).)

The three constructs shown in pseudo code
sequence:
    action 1
    action 2
    ...
    action n
decision:
    IF (condition)
    THEN
        action
        action ...
    ELSE
        action
        action ...
    END IF
repetition:
    WHILE (condition)
        action
        action ...
    END WHILE

A better definition of an algorithm
An ordered set of unambiguous steps that produces a result and terminates in a finite time
- Ordered set
- Unambiguous steps
- Produce a result
- Terminate (halt) in a finite time

PROBLEM SOLVING STRATEGIES
Four main basic strategies that are widely employed
Brute Force; Analogy; Trial & error; Heuristics; Divide & Conquer; Greedy

Finite State Machine (wikipedia)
An abstract machine that has only a finite, constant amount of memory. The internal states of the machine carry no further structure.
FSMs are very widely used in modelling of application behaviour, design of hardware digital systems, software engineering, and the study of computation and languages.

Finite State Machines, also known as Finite State Automata (ai-depot.com)
At their simplest, they model the behaviours of a system with a limited number of defined conditions or modes, where mode transitions change with circumstance.
Finite state machines consist of 4 main elements:
1. states, which define behaviour and may produce actions
2. state transitions, which are movements from one state to another
3. rules or conditions, which must be met to allow a state transition
4. input events, which are either externally or internally generated, and which may trigger rules and lead to state transitions

A finite state machine must have an initial state, which provides a starting point, and a current state, which remembers the product of the last state transition.
Received input events act as triggers, which cause an evaluation of the rules that govern the transitions from the current state to other states.
The best way to visualize an FSM is to think of it as a flow chart of states.

Finite State Machine (wikipedia)
Can be represented using a state diagram. There are finitely many states, and each state has transitions to states. There is an input string that determines which transition is followed (some transitions may be from a state to itself).

Finite State Machines
There are several types of finite state machines, including:
- Acceptors (a.k.a. Recognizers): they either accept/recognize their input or do not
- Transducers: they generate output from given input
  - Mealy machines have actions (outputs) associated with transitions
  - Moore machines have actions (outputs) associated with states

Variables
However, in computer science a variable is a "place in memory" where we can store something.
Imagine we have a box called "my box of stones" … we can add stones to the box, we can take stones out of the box, and we can look inside the box and check how many stones are there … we can say that "my box of stones" has a certain value at any given time!
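As a small illustration of the four finite state machine elements listed earlier in this section (states, transitions, rules and input events), here is a minimal sketch of an acceptor. The example language (binary strings with an even number of 1s) and the names `State`, `TRANSITIONS` and `accepts` are assumptions made for illustration; they are not from the slides.

```python
from enum import Enum, auto


class State(Enum):
    EVEN = auto()  # initial state, and also the accepting state
    ODD = auto()


# Transition rules: (current state, input event) -> next state.
TRANSITIONS = {
    (State.EVEN, "0"): State.EVEN,
    (State.EVEN, "1"): State.ODD,
    (State.ODD, "0"): State.ODD,
    (State.ODD, "1"): State.EVEN,
}


def accepts(input_string: str) -> bool:
    """Return True if the input holds an even number of '1' characters."""
    current = State.EVEN  # the machine starts in its initial state
    for symbol in input_string:
        key = (current, symbol)
        if key not in TRANSITIONS:
            return False  # no rule permits a transition on this input event
        current = TRANSITIONS[key]  # follow the state transition
    return current is State.EVEN


print(accepts("1001"))  # two 1s, so the acceptor ends in EVEN
print(accepts("1011"))  # three 1s, so the acceptor ends in ODD
```

The dictionary plays the role of the state diagram: each entry is one arrow, labelled with the input that triggers it.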
Sets
Sets are used to describe groups of variables.
In mathematics, a set is simply an unordered collection of elements (things).
In computer science we don't have any data structures that are directly equivalent to sets, but we do have structures that are similar.

Arrays
But if all five student marks are collected together under one name, how do we refer to the mark of any one particular student in the array, or do calculations with it?
We need to identify each of the 'cells' in the array, usually with numbers.
These identifying numbers are called INDEXES (or INDICES).
So the value (mark) for the 3rd student is students[3].
If that student just scored another 6 marks from a quiz, then that mark is now:
    students[3] = students[3] + 6

students
    index:  [1]  [2]  [3]  [4]  [5]
    value:   51   72   12   95    2

Linked Lists
Each 'box' (node) has some extra information that tells you where to find the next one … so they are "joined" in a linear chain.
(Figure: a head pointer followed by a chain of nodes, each holding data and a link to the next node.)

Linked lists can look a little like one-dimensional arrays, such as the students array above, but there are significant differences.

Linked Lists: different to Arrays
- An Array uses just one single chunk of memory. A Linked List is a number of chunks 'chained' together.
- Arrays allow direct access to the content of the cells, e.g. you can go directly to the cell "students[4]". In a Linked List you have to start at the first node and move through the list one-by-one until you find what you are looking for.
- To change the size of an Array, you have to construct a new array, copy the old data into it ('recast'), then delete the old array. To change the size of a Linked List, you just add some more nodes or remove some nodes.

Linked Lists
Two common types of specialized (or 'restricted') linked lists are:
- LIFO (last in, first out), also called a stack
- FIFO (first in, first out), also called a queue

Singly linked lists
An ordered collection of data in which each element contains the
location of the next element.
(Figure: a Head pointer followed by elements (nodes), each with a next link. For an empty list, Head = null.)

Linked lists: data
The data in a node can be a number, text, an array or a record.
    Record Data
        key
        field1
        field2
        field3
        …
        fieldn
    End Data

Singly linked lists: basic operations
Create a list, insert node, delete node & retrieve node.
Example: a singly linked list of numbers.

Create an empty list
    Head = null

Insert Node: general case
    Step 1: a list:              Head → 39
    Step 2: create new node:     New = 72
    Step 3: set New.next = Head
            set Head = New
    Result:                      Head → 72 → 39

Insert into a null (empty) list
    Step 1: an empty list:       Head = null
    Step 2: create new node:     New = 72
    Step 3: set New.next = Head
            set Head = New
    Result:                      Head → 72

Insert at beginning
    Before:  Head → 39, New = 75
    New.next = Head
    Head = New
    After:   Head → 75 → 39

Insert in middle
    Before:  Head → 75 → 39, Pre = 75, New = 52
    New.next = Pre.next
    Pre.next = New
    After:   Head → 75 → 52 → 39

Delete Node
    List:    Head → 75 → 52 → 39

Delete first node
    Kill = Head
    Head = Kill.next
    recycle(Kill)

General delete case (Pre is the node before the one to delete)
    Kill = Pre.next
    Pre.next = Kill.next
    recycle(Kill)

Arrays
An array is a fixed-size, sequenced collection of elements of the same data type.
- The length or size of an array is the number of elements in the array.
- Each element in the array is identified by an integer called the index, which indicates the position of the element in the array.
- For a zero-based array, the index runs from 0 to the length minus 1.
- The upper bound of an array is the index of the last element.
(Figure: an array named numbers, with element 0 through element 6.)
Q: how many elements are in this array?
A: There are 7. Indexes running from 0 to n give n + 1 elements.

Two Dimensional Arrays
Size of A: 5 x 4
Access an element: A[row_index][col_index], e.g. A[2][1]
Why do you think images are usually stored in 2D arrays?

Records
A collection of related elements, possibly of different types, having a single name.
Each element in a record is called a field.
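The singly linked list operations described earlier in this section (insert at the beginning, insert after a node Pre, delete the first node, delete the node after Pre) can be sketched as follows. This is a minimal sketch rather than the course's own implementation: the class and method names are assumptions, and Python's garbage collector stands in for the explicit recycle(Kill) step.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    data: int
    next: Optional["Node"] = None


class SinglyLinkedList:
    def __init__(self) -> None:
        self.head: Optional[Node] = None  # empty list: Head = null

    def insert_front(self, data: int) -> Node:
        new = Node(data)
        new.next = self.head  # New.next = Head
        self.head = new       # Head = New
        return new

    def insert_after(self, pre: Node, data: int) -> Node:
        new = Node(data)
        new.next = pre.next   # New.next = Pre.next
        pre.next = new        # Pre.next = New
        return new

    def delete_front(self) -> None:
        kill = self.head           # Kill = Head
        if kill is not None:
            self.head = kill.next  # Head = Kill.next

    def delete_after(self, pre: Node) -> None:
        kill = pre.next            # Kill = Pre.next
        if kill is not None:
            pre.next = kill.next   # Pre.next = Kill.next

    def to_list(self) -> List[int]:
        """Walk the chain from the head, one node at a time."""
        values, node = [], self.head
        while node is not None:
            values.append(node.data)
            node = node.next
        return values


numbers = SinglyLinkedList()
numbers.insert_front(39)
node_75 = numbers.insert_front(75)
numbers.insert_after(node_75, 52)
print(numbers.to_list())
```

Note that the same two assignments handle both the empty list and the non-empty list when inserting at the front, which is why the slides show identical steps for both cases.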
A field has a type and is the smallest accessible element.
(Figure: a record named employee with the fields emp_no, emp_name, pay_rate and hours_worked.)
The elements in a record can be of the same or different types, but all elements in the record must be related.

Records: accessing records
Use a period (.)
    Individual field: record_variable_name.fieldname
    Whole record: record_variable_name

student1 is a record of the type student:
    student1.id = 102345
    student1.name = "john"
    student1.gradePoint = 85

student1 and student2 are records of the same type, student.
Example: set student1 to student2
Safe way: copy it field by field
    student1.id = student2.id
    student1.name = student2.name
    student1.gradePoint = student2.gradePoint

Graphs
The nodes of a graph are called VERTICES. The links are called ARCS.
One type of graph is a DIRECTED GRAPH or "DIGRAPH", where each ARC has a specific direction.

Graphs: some terms
If there is no direction in the ARCS, then the graph is an UNDIRECTED GRAPH, and the arcs are called EDGES.
A PATH is a sequence of vertices where each vertex is adjacent to (next to) the next one. A bit like having a Linked List as part of the Graph.
A CYCLE is a path of at least 3 vertices that starts and ends with the same vertex.
(Figure: a path of 3 vertices that forms a CYCLE.)

Graphs: some more terms
Two vertices are CONNECTED if there is a path between them.
A graph is CONNECTED if there is some pathway between any two vertices in the graph (in at least one direction). That is: you can "get to anywhere", somehow.
A graph is DISJOINT if it is not CONNECTED, e.g. if there is some node 'floating' off by itself.
The DEGREE of a vertex is the number of EDGES (or ARCS) that connect to it.

Graphs: even more terms
A DIGRAPH (one with arrows) is STRONGLY CONNECTED if there is a path from each vertex to every other vertex.
A DIGRAPH (one with arrows) is WEAKLY CONNECTED otherwise.
Imagine a city street that is BOTH a "one-way" AND a "dead-end". Then you'd get trapped at the end, wouldn't you!
UNDIRECTED GRAPHS, if they are connected (ie: not ‘disjoint’) are always STRONGLY CONNECTED, because there is no directionality, so you can eventually get to any node from any other node … so there is no real need to talk about “weak” or “strong” with UNDIRECTED GRAPHS, only whether they are connected or not. Graphs – terms continued The OUTDEGREE of a vertex in a digraph is the number of arcs that leave it The INDEGREE of a vertex in a digraph is the number of arcs that enter it This vertex has OUTDEGREE = 1 and INDEGREE = 2 Graphs - weighting A WEIGHTED GRAPH or network is a graph that has numerical weights attached to each edge (or arc) Imagine a roadmap, where the lines joining each town are marked with their distance, or perhaps with the travel-time Imagine a circuit diagram where each connection is shown with its resistance Imagine a map of airline flights between cities, with the ticket price of each flight marked on them A NEURAL NETWORK is a very special type of weighted graph. A programme can ADJUST the weightings on the edges and make certain edges “easier”. In this way, a neural network can “learn” to solve certain types of problems by itself! Every time it gets a successful result, it reinforces that pathway by adjusting the weights to make it more preferred. This is how we believe the human brain learns! Graphs Many problems that are modelled by weighted graphs (networks) require that the shortest path (‘cheapest’ or ‘quickest’) be found between 2 particular vertices The SHORTEST PATH between two vertices is defined as the path that connects those vertices with the lowest possible total weight .. ie: no other path between them has less weight. 
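The slides define the shortest path but do not name a method for finding it. One well-known choice is Dijkstra's algorithm, sketched below under the assumption that all weights are non-negative. The graph representation (a dictionary of neighbour-to-weight dictionaries), the function name `shortest_path` and the sample road map are illustrative assumptions, not taken from the source.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; graph maps vertex -> {neighbour: weight}.

    Returns (total weight, list of vertices), or None if goal is unreachable.
    Assumes every weight is non-negative.
    """
    best = {start: 0}     # lowest total weight found so far for each vertex
    previous = {}         # vertex -> the vertex we arrived from
    queue = [(0, start)]  # priority queue ordered by total weight
    visited = set()
    while queue:
        dist, vertex = heapq.heappop(queue)
        if vertex in visited:
            continue
        visited.add(vertex)
        if vertex == goal:
            break
        for neighbour, weight in graph.get(vertex, {}).items():
            candidate = dist + weight
            if candidate < best.get(neighbour, float("inf")):
                best[neighbour] = candidate
                previous[neighbour] = vertex
                heapq.heappush(queue, (candidate, neighbour))
    if goal not in best:
        return None
    path = [goal]
    while path[-1] != start:  # walk back from the goal to the start
        path.append(previous[path[-1]])
    path.reverse()
    return best[goal], path


# Hypothetical road map: towns joined by roads marked with their distance.
roads = {
    "A": {"B": 4, "C": 1},
    "B": {"A": 4, "C": 2, "D": 5},
    "C": {"A": 1, "B": 2, "D": 8},
    "D": {"B": 5, "C": 8},
}
print(shortest_path(roads, "A", "D"))
```

In this map the direct-looking routes A-B-D and A-C-D both weigh 9, while A-C-B-D weighs 8, so the path with more edges is the shorter one.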
Trees
A TREE is an ACYCLIC GRAPH, also connected & directed.
That simply means a graph with no CYCLES in it, where there are no 'lonely' nodes, and where the links are directed 'downwards'.
Unlike real natural trees, which have their roots at the bottom and their leaves at the top, computer science trees are usually drawn upside down!

Trees
Trees are extremely useful data structures, so it is worth spending quite a bit of time on them.
Trees are very good at setting out classification systems (taxonomies), such as:
- Your family members over a few generations
- The library catalogue system
- Organisation of an army
- Possible moves in games
Tree structures are used extensively in AI (artificial intelligence).

Trees
Trees are probably the dominant form of data structure in computer science, and even the file-system of your computer is set out in a tree structure.
Trees can even be used for more complex ideas, such as representing equations in a structure called an EXPRESSION TREE.
(Figure: an example expression tree. The root is +; its left child is *, whose children are a and a second +, which has children b and c; the root's right child is d.)
The way the expression is written out depends on the method of tree traversal:

Trees: EXPRESSION TREE
    Infix …   a*(b+c)+d
    Prefix …  +*a+bcd
    Postfix … abc+*d+

Trees
Trees are made up of NODES, joined by edges which we call BRANCHES.
We talk about the LEVEL of the nodes, starting from level 0 at the top and working down (Level 0, Level 1, Level 2, Level 3).

Trees: terms
A node that is connected by a single branch to a node at a lower number level (ie: the next row up) is said to be the CHILD of that higher node.
A node that is connected by a single branch to a node at a higher number level (ie: the next row down) is said to be the PARENT of that lower node.
Nodes that have the same parent are said to be SIBLINGS (like "brothers & sisters").

Trees: more terms
A node that is connected by a number of branches to a node at a lower number level (a few levels up) is said to be its DESCENDANT.
A node that is connected by a number of branches to a node at a higher number
level (a few levels down) is said to be that node's ANCESTOR.
    DESCENDANT = child of a child of a …
    ANCESTOR = parent of a parent of a …
This is like "grandfather" or "great grandfather" etc, and "grandchild" or "great grandchild" etc.

Trees: even more terms
A ROOTED tree has a special node, called the ROOT NODE, which is the ANCESTOR of all the other nodes in the tree.
Most of the trees we see in Computer Science are ROOTED TREES.

Trees: terms continued
Nodes in a tree may be referred to as INTERNAL NODES, meaning that they have at least one child.
Nodes with no children are called LEAF NODES.
The HEIGHT of a node is the number of edges in the longest path from the node to a LEAF.
The DEPTH of a node is the number of edges in the path from the ROOT to that node.
(Figure labels: the root node has depth = 0; an internal node with HEIGHT = 2 and DEPTH = 1; a leaf node with HEIGHT = 0 and DEPTH = 2.)

Minimum Spanning Tree
(Figure: a weighted graph G and its minimum spanning tree.)

Binary Trees
BINARY TREES are trees where each node can have AT MOST 2 children.
These are called the LEFT CHILD & the RIGHT CHILD.

Binary Trees: Child-Sibling Trees
Whilst a binary tree can only have up to 2 children per node, a general tree can have any number of children from each node.
We can convert general trees into binary trees by representing them as child-sibling trees.
(Figure: a general tree with nodes A to H. This graph is NOT binary: node A has 3 children! Beside it, the child-sibling form re-arranged into an equivalent tree of binary form.)
Join PARENT to LEFT CHILD, then to NEXT SIBLING ..
Then each node has AT MOST 2 edges going out.

Suppose "0" is not a valid item for insertion in the tree.
Complete the tree by adding this 'invalid' value to the empty nodes.
(Figure: a binary tree holding R, C, Z, A, K, T, D, N, S and W, with 0 written in every empty child position.)

Constructing The Tree
We need a rule to determine where to place new nodes in the tree. This will depend on your application.
The tree on the previous slide is actually built in alphabetical order:
- The node on the left of each subtree appears before its parent in the alphabet
- The node on the right of each subtree appears after its parent in the alphabet
We can use a binary tree to SORT data.
(Figure: the items R C K N Z T S D A W and the binary tree built from them, with R at the root.)

Binary Tree Sort
Algorithm: binarytreesort
Purpose: sort items that can be ordered
Input: items to be sorted, root of tree
Output: binary tree with items in order
Pre: items must be able to be sorted
Post: inorder traversal of the tree produces items in order
Return:
BEGIN binarytreesort
    IF the tree is empty THEN
        set the root to item
        set inserted to true
    ELSE
        set inserted to false
    ENDIF
    WHILE not inserted do
        IF item < root
            IF root has left child THEN
                set root to left child
            ELSE
                set left child to item
                set inserted to true
            ENDIF
        ELSE (item >= root)
            IF root has right child THEN
                set root to right child
            ELSE
                set right child to item
                set inserted to true
            ENDIF
        ENDIF
    ENDWHILE
END binarytreesort

Depth-first traversal of a binary tree
PREORDER traversal (visit order: root 1, left 2, right 3)
    Visit the root of the tree
    Preorder traverse the left subtree
    Preorder traverse the right subtree
INORDER traversal (visit order: left 1, root 2, right 3)
    Inorder traverse the left subtree
    Visit the root of the tree
    Inorder traverse the right subtree
POSTORDER traversal (visit order: left 1, right 2, root 3)
    Postorder traverse the left subtree
    Postorder traverse the right subtree
    Visit the root of the tree

(Figure for the three examples: a binary tree with root A; A's children are B and E; B's children are C and D; E has the single right child F.)
    Preorder traversal of a binary tree:  A B C D E F
    Inorder traversal of a binary tree:   C B D A E F
    Postorder traversal of a binary tree: C D B F E A

IntuitiveSort
Space: need an extra array of the same size as the input one, to store the sorted data
Operations: for N numbers, need N^2 comparisons
Example: input 3,9,1,32,11,50,7; the extra array after the three smallest numbers have been copied across: 1,3,7,x,x,x,x

Selection sort
(Figure: the array 1,3,7,9,32,11,50 divided into a sorted part and an unsorted part, then 1,3,7,9,11,32,50 after the next step. Positions run from 0 to N-1.)
- FindSmallest: find the smallest element in the unsorted part
- Swap smallest: swap the smallest with the first element in the unsorted part

    3,9,1,32,11,50,7   Find the smallest number & swap it with the first element
    1,9,3,32,11,50,7   Find the smallest number among the numbers from the second to the last & swap it with the second element (the first element in the unsorted part)
    1,3,9,32,11,50,7   Find the smallest number among the numbers from the third to the last & swap it with the third element (the first element in the unsorted part)
    1,3,7,32,11,50,9

Selection Sort
Space: no extra array is needed
Number of comparisons: (N-1) + (N-2) + (N-3) + …… + 1 = (N-1)*(N-1+1)/2 = N(N-1)/2
Worst case: if input is 50,32,11,9,7,3,1: 21 comparisons, plus swaps (never more than N-1 = 6, one per pass)
Best case: if input is 1,3,7,9,11,32,50: 21 comparisons, but no swaps

Insertion sort
    3,9,1,32,11,50,7   Take the next number of the unsorted part (1), and "hold it" …
    3,9,_,32,11,50,7   Then find the correct place to put it, in the sorted part
    3,_,9,32,11,50,7   Compare 1 to 9: 9 is bigger than 1, so move 9 "up"
    _,3,9,32,11,50,7   Compare 1 to 3: 3 is bigger than 1, so move 3 "up"
    1,3,9,32,11,50,7   Insert the "held over" 1 into the space you just made
NB: this is not really swapping! ..
We are actually "holding" the new number, and "shuffling" the others "up" until we find the right place to insert it.
    3,9,1,32,11,50,7   Transferred the first element into the sorted part
    3,9,1,32,11,50,7   Transferred the second element into the sorted part and placed it in order
    1,3,9,32,11,50,7   Transferred the third element into the sorted part and placed it properly

Insertion Sort
Space: no extra array is needed
Operations
    Worst case: N(N-1)/2 comparisons & "moves"
    Best case: N comparisons, no moves

Bubble sort
    3  9  1 32 11 50  7
    3  9  1 32 11  7 50
    3  9  1 32  7 11 50
    3  9  1  7 32 11 50
    3  9  1  7 32 11 50
    3  1  9  7 32 11 50
    1  3  9  7 32 11 50
    1  3  9  7 32 11 50

Bubble Sort
Space: no extra array is needed
Operations: comparisons & swaps
    Worst case: N(N-1)/2 comparisons & swaps
    Best case: N comparisons, 0 swaps

Sequential Search
Operations
    Worst case: N comparisons
    Best case: 1 comparison

How Can We Search Faster? Sort the array first!
    Target given: 11. Find its location in 3,9,1,32,11,50,7
    After sorting: 1,3,7,9,11,32,50
We can now stop searching if we find a match, OR if we see a number BIGGER than the one we're searching for.

Binary Search: even better!
    Target given: 11. Find its location in 3,9,1,32,11,50,7
    After sorting: 1,3,7,9,11,32,50

Shell's Sort (ShellSort)
We chop the array into smaller pieces, separate out some elements and sort them.
Example: what if we just looked at every 3rd element?

ShellSort
Divide the list into h segments using an increment, h, and apply Insertion Sort to each segment.
The list will be sorted in an efficient way by applying a sequence of diminishing increments, h_n, h_(n-1), …, h_1, ending with an increment of 1.

ShellSort
Consider an array with 16 elements.
Let's 'chop' it into 2 chunks: the "front 8" & the "back 8".
Let's look at the first elements of EACH chunk [that gives us a "group of two" (sub-array) to consider, one from each chunk].
Group of two elements to consider: [1] = 4 & [9] = 3
Is this group of elements sorted? .. NO!
We need to sort this group! (because 3 is smaller than 4)
    index:  [1] [2] [3] [4] [5] [6] [7] [8] [9] [10] [11] [12] [13] [14] [15] [16]
    value:    4   7  10   0   3   9   3   1   3   18    2   23    8    2    0    6

ShellSort
Now you may feel that this was MORE work than doing a simple Insertion Sort on the whole array from the start!
Intuitively the ShellSort algorithm feels like it shouldn't work any better, yet it does!
In fact, it cannot do any worse than Insertion Sort (or Bubble Sort) and almost always does considerably better!
NB: ShellSort is heavily studied by mathematicians, who have STILL not quite found all its secrets!

So what did we actually do here? With 16 elements:
- We examined every 8th element (cut in half)
- Then every 4th element (cut into quarters)
- Then every 2nd element (cut into eighths)
- Then finally every 1st element (cut into sixteenths)
Each step made us do bigger calculations, on bigger sub-arrays, BUT each step got easier because the sub-arrays were partially sorted from the previous step!

MergeSort (John von Neumann)
A classic example of recursive "divide & conquer":
- Split the list into left and right halves
- Sort each half (recursively)
- Merge the sorted left half and right half into a sorted list

Mergesort Performance
Needs extra memory for the merge.
On average, Mergesort (~ n log n) is even better than Shellsort.

QuickSort: basic concept
A little like MergeSort, but this time we do the hard work deciding how to split the array, THEN it's easy to "merge" it.
In a way, this is "conquer & divide".
The relationship between QuickSort & MergeSort is a little bit like the relationship between InsertionSort & SelectionSort.

QuickSort: basic concept
Take the first element, call it the PIVOT.
Then "slide along right" from the 2nd element, looking for anything larger than PIVOT.
Then "slide along left" from the end, looking for anything smaller than PIVOT.
If you find 'stopping points' then SWAP them, and keep sliding again …
Then when the "lo point" and the "hi point" meet, you move the PIVOT to the middle point.
You get an array with "lower" points on the left & "higher" points on the right.
Then you do the same thing to the left side, and to the right side, and recursively continue doing this till you are just looking at single elements … THEN you simply concatenate (join) all these single elements together and you have the finished sorted array.

Basic concept
    Left                  pivot    Right
    x,x,x,x, ….., x,x       y      x,x,x, …… x,x,x
Is there a special element, y, such that y is greater than or equal to all elements on its left side AND y is smaller than or equal to all elements on its right side?
Use this special element, y, to split the array into Left and Right, both excluding y:
- sort Left
- sort Right
- merge sorted Left and Right (concatenation only). Merge is simple here!

Quicksort: two questions
- Base cases: if the number of elements is 0 or 1
- How to partition the list using a pivot

Partitioning algorithm
- Select any element, y, in the list as a pivot
- Move it to its correct position, say Iy, in the list
- Partition the list at Iy
Example: in 4,2,6,9,15,76,36 with pivot 4, Iy = 1, giving x,4,x,x,x,x,x with Left before the pivot and Right after it.

Selecting an element to be a pivot: there are some PROBLEM cases!
What if the array is already sorted? You STILL have to do all that horrible work!
Sometimes the array is already partially sorted.
So, what if you choose the biggest or smallest element as PIVOT?

Selecting an element to be a pivot: the options (each illustrated on 4,2,6,9,15,76,36)
- Lowest or highest element (never use these!)
- Middle element
- Median of first, middle and last elements

Quicksort
Complexity
- No extra space (memory) needed
- Operations: average ~2N lg N, worst ~N^2
Comments
- Most well studied sorting algorithm
Pivot selection
- Never select the first or last element
- Middle element is reasonable
- Median-of-Three (first, middle and last) partitioning is better

Binary Search
If the array is SORTED, it makes searching a LOT easier!
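The QuickSort description earlier in this section (pick a pivot, slide in from both ends swapping out-of-place elements, drop the pivot into its final position, then recurse on both sides) can be sketched as below. It is a sketch under assumptions: it uses the median-of-three pivot the slides recommend rather than the first element, it sorts in place, and the function names are illustrative rather than taken from the course.

```python
def median_of_three(items, lo, hi):
    """Return the index of the median of the first, middle and last elements."""
    mid = (lo + hi) // 2
    candidates = sorted([(items[lo], lo), (items[mid], mid), (items[hi], hi)])
    return candidates[1][1]


def partition(items, lo, hi):
    """Move a pivot to its correct position and return that position (Iy)."""
    pivot_index = median_of_three(items, lo, hi)
    # Park the pivot at the front so the sliding step matches the slides.
    items[lo], items[pivot_index] = items[pivot_index], items[lo]
    pivot = items[lo]
    left, right = lo + 1, hi
    while True:
        while left <= right and items[left] <= pivot:
            left += 1    # slide right, looking for something larger than pivot
        while left <= right and items[right] >= pivot:
            right -= 1   # slide left, looking for something smaller than pivot
        if left > right:
            break        # the lo point and the hi point have met
        items[left], items[right] = items[right], items[left]
    items[lo], items[right] = items[right], items[lo]  # pivot to its final place
    return right


def quicksort(items, lo=0, hi=None):
    """Sort items in place."""
    if hi is None:
        hi = len(items) - 1
    if lo >= hi:
        return  # base case: 0 or 1 elements
    p = partition(items, lo, hi)
    quicksort(items, lo, p - 1)   # sort Left (excluding the pivot)
    quicksort(items, p + 1, hi)   # sort Right (excluding the pivot)


data = [4, 2, 6, 9, 15, 76, 36]
quicksort(data)
print(data)
```

Because the sort works in place, the final "merge" is free: once both sides are sorted the whole range is already in order, which is the concatenation-only merge the slides describe.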
    2  5  6  9  11  16  24  32  66  79
The array is sorted, small to large (left to right).
Guess the middle position. Is it the number we want?
- YES: we are finished
- NO: THEN ASK:
    - IS THE NUMBER WE GUESSED TOO BIG? Then look ONLY at numbers LEFT of this position, and repeat the process
    - IS THE NUMBER WE GUESSED TOO SMALL? Then look ONLY at numbers RIGHT of this position, and repeat the process

Recursive binary search
Simpler version of itself?
- Search the lower half: "first to mid-1 elements", or
- Search the upper half: "mid+1 to last elements"
Base cases?
- Found the target at the position identified by "mid"
- No target found: "last < first"

Heaps
A heap is a complete binary tree (Rule 1) in which the key value of each node is >= the key values of all nodes in all of its sub-trees (Rule 2).
- The root has the largest key value (max-heap)
- Each sub-tree is a heap as well

Question: differences between BSTs & heaps?
- BSTs are not necessarily complete binary trees; heaps are complete binary trees
- In a BST, the root key value is greater than the key values of the nodes in its left sub-tree and less than the key values in its right sub-tree
- In a heap, the root key value is the largest in the tree, and key values in the right sub-tree are not necessarily greater than key values in the left sub-tree

Basic Heap Operations
Two basic operations are performed on a heap:
- Insert: insert a node into a heap
- Delete: remove the root node from the heap

Heap operations: insert a new node with key value 11
(Figure: a heap holding the keys 24, 9, 12, 10 and 7, with the new node 11 added as the last leaf.)
Need to repair the structure in order to maintain the heap property: ReheapUp

Heap operations: delete the root node
(Figure: a heap holding the keys 24, 9, 12, 10 and 7, with the last leaf, 7, moved to the root position.)
Need to repair the structure in order to maintain the heap property: ReheapDown

Basic Heap Algorithms
To implement the insert and delete operations, we need two basic building-block algorithms:
- ReheapUp
- ReheapDown

ReheapUp
Imagine we have a complete binary tree with N elements. Assume that the first N-1 elements satisfy the order property of heaps, but the last element does not.
The ReheapUp operation repairs the structure so that it is a heap, by "floating" the last element up the tree until that element is in its correct location in the tree.

ReheapDown
Imagine we have a complete binary tree that satisfies the heap order property except in the root position.
This situation occurs when the root is deleted from the tree, leaving two disjoint heaps.
To combine the two disjoint heaps, we move the data in the last tree node to the root position ("SWAP" the last leaf to the root position).
Obviously, this action destroys the tree's heap properties!

ReheapDown
To restore the heap property we need an operation that will "sink" the root down until it is in a position where the heap order property is satisfied. We call this operation ReheapDown.

Heap Data Structure
As a heap is a complete binary tree, it is most often implemented in an array.
Hence, the relationship between a node and its children is fixed and can be calculated.

Heap Data Structure
For a node located at index i, its children are found at:
    Left child: 2i + 1
    Right child: 2i + 2
The parent of a node at index i is found at: (i - 1) / 2, using integer division
(Figure: a complete binary tree whose nodes are labelled with their array indexes, 0 to 5.)

Heap Data Structure
Given the index for a left child, j, its right sibling, if any, is found at j+1.
Given the index for a right child, k, its left sibling, which must exist, is found at k-1.

Build Heap
Build a heap from an array of elements that are in random order.
Divide the array into two parts: the left being the heap & the right being data to be inserted into the heap.
Initially, the heap only contains the first element.
Start from the second element, calling ReheapUp for each array element to be inserted into the heap.
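The ReheapUp, ReheapDown and Build Heap steps described above can be sketched for a max-heap stored in a Python list, using the index formulas from the slides (children at 2i + 1 and 2i + 2, parent at (i - 1) // 2). The function names and the sample data are assumptions made for illustration.

```python
def reheap_up(heap, index):
    """Float the element at index up until its parent is at least as large."""
    while index > 0:
        parent = (index - 1) // 2
        if heap[index] <= heap[parent]:
            break
        heap[index], heap[parent] = heap[parent], heap[index]
        index = parent


def reheap_down(heap, index, size):
    """Sink the element at index down within the first `size` elements."""
    while True:
        left, right = 2 * index + 1, 2 * index + 2
        largest = index
        if left < size and heap[left] > heap[largest]:
            largest = left
        if right < size and heap[right] > heap[largest]:
            largest = right
        if largest == index:
            return
        heap[index], heap[largest] = heap[largest], heap[index]
        index = largest


def build_heap(items):
    """Grow the heap from the left, calling reheap_up from the second element on."""
    for last in range(1, len(items)):
        reheap_up(items, last)


def delete_root(heap):
    """Remove and return the largest key, then repair the heap."""
    root = heap[0]
    last = heap.pop()   # take the last leaf out of the array
    if heap:
        heap[0] = last  # move the last leaf to the root position
        reheap_down(heap, 0, len(heap))
    return root


keys = [7, 10, 24, 9, 12]
build_heap(keys)
print(keys[0])            # the root holds the largest key
print(delete_root(keys))  # removes that key and re-establishes the heap
```

Both repair operations follow a single root-to-leaf path, so each one touches at most about log2(N) elements.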
Insert Heap
Once a heap is built, a new data item (new node) can be inserted into the heap as long as there is room in the array.
To insert a new node into a heap:
- Locate the first empty leaf in the array, immediately after the last node
- Place the new node at the first empty leaf
- ReheapUp the new node

Delete Heap
When deleting a node from a heap, the most common & meaningful logic is to delete the root.
The heap is thus left without a root. To re-establish the heap:
- Move the data in the last heap node to the root (swap the last leaf to the root position)
- ReheapDown the root

Heap Applications
Three common applications of heaps:
- Selection algorithms: select the k'th largest number in an unsorted list of numbers
- Sorting: sort a list of numbers in descending (max-heap) / ascending (min-heap) order
- Priority queues

Heap Applications: Selection
- Create a heap from the list of unsorted numbers
- Delete k-1 elements from the heap (place each deleted element at the end of the heap and reduce the heap size by 1)
- The k'th element is now at the top (the root)
Example: select the 4'th largest number from a list of 10 numbers

Heap Application: Heap sort
- Build a heap from the list of elements to be sorted
- Delete the root of the heap, place the deleted element at the end of the array that stores the heap & reduce the heap size by 1
- Once all items have been deleted, you will have a sorted array

Heap Sort
Heap sort is an improved version of the selection sort, in which the largest element (the root) is selected & exchanged with the last element of the unsorted list (or heap).
Complexity: n log2 n

Recursive problems
Generally we can solve any problem recursively provided:
1. We can specify some specific solutions explicitly (base or stopping cases)
2. The remaining solutions can be defined in terms of these simpler solutions
3. Each recursive call in the definition brings the result closer to the explicitly defined solutions

Solving problems using recursion
1. Express the problem as a simpler version of itself
2. Determine the base case(s)
3. Determine the recursive steps

General form of recursion
Algorithm: recursion
Purpose: this illustrates the general form of a recursive algorithm
Post: the solution has been found recursively
BEGIN recursion
    IF the base case is reached THEN
        solve the problem
    ELSE
        split the problem into a simpler case using recursion
    ENDIF
END recursion

ASIDE … intro to complexity
One 'pass' through a bubble sort requires about 1 'calculation' for each element, … so that is "n" calculations.
A complete bubble sort requires one 'pass' for each element, … so that is about "n x n" = "n^2" calculations.
NB: "n x (n-1)" is about the same as "n^2".
A single traversal down a binary tree is of order "log n" calculations.
Something like a Binary Search, repeated once for each element, requires one traversal per element, … so that is about "n x log n" calculations.

ASIDE … intro to complexity (logs are base 10)
    n       n^2         n^3             log n    n log n   n!
    1       1           1               0        0         1
    2       4           8               0.301    0.602     2
    3       9           27              0.477    1.431     6
    4       16          64              0.602    2.408     24
    5       25          125             0.698    3.495     120
    6       36          216             0.778    4.668     720
    7       49          343             0.845    5.916     5,040
    8       64          512             0.903    7.224     40,320
    9       81          729             0.954    8.588     362,880
    10      100         1,000           1        10        3,628,800
    100     10,000      1,000,000       2        200       9.33 x 10^157
    1000    1,000,000   1,000,000,000   3        3,000     *huge*!

Greedy Algorithms
These algorithms work by taking what seems to be the best decision at each step.
- No backtracking is done: you can't change your mind (once a choice is made we are stuck with it)
- Easy to design
- Easy to implement
- Efficient (when they work)

Greedy Algorithms
Example 1: making change
Given we have $2, $1, 50c, 20c, 10c, 5c and 1c coins, what is the best (fewest coins) way to pay any given amount?
The greedy approach is to pay as much as possible using the largest coin value possible, repeatedly, until the amount is paid.
E.g. to pay $17.97 we pay 8 x $2 coins (= $16; we can't pay 9 of these, or it's too much!),
  1 x $1 coin (= $17)
  1 x 50c coin (= $17.50)
  2 x 20c coins (= $17.90)
  1 x 5c coin (= $17.95)
  2 x 1c coins (= $17.97), 15 coins in total
This is the optimal solution (although this is harder to prove than you might think).
Note that this algorithm will not work with an arbitrary set of coin values. Adding a 12c coin would result in 15c being made from 1 x 12c and 3 x 1c (4 coins) instead of 1 x 10c and 1 x 5c (2 coins).

Greedy Algorithms: General Characteristics
We start with a set of candidates which have not yet been considered for the solution
As we proceed, we construct two further sets:
  Candidates that have been considered and selected
  Candidates that have been considered and rejected
At each step, we check to see if we have reached a solution
At each step, we also check to see if a solution can be reached at all
At each step, we select the best acceptable candidate from the unconsidered set and move it into the selected set
We also move any unacceptable candidates into the rejected set

Backtracking: The Eight Queens problem
We wish to place 8 queens on a chessboard in such a way that no queen threatens another. In other words, no two queens may be in the same row, column or diagonal.
Let us assume we have a function solution(x) which returns True when x is a solution and False when it is not.

Branch & Bound
This time, we try to find some guidelines or hints to help us search the tree more efficiently
If we can say that a certain branch CANNOT give us a good answer, then we can just "chop it off" and look elsewhere!

Branch and bound: The assignment problem
A set of n agents are assigned n tasks. Each agent performs exactly one task.
If agent i is assigned task j then a cost c_ij is associated with this combination.
The problem is to minimise the total cost C.
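The making-change example from the greedy slides can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function name `greedy_change` is hypothetical, and amounts are assumed to be given in whole cents to avoid floating-point rounding.

```python
def greedy_change(amount_cents, coin_values):
    """Pay amount_cents using the largest coin possible at each step.

    Returns a dict {coin_value: how_many}. Hypothetical helper for
    illustration; all values are in cents.
    """
    counts = {}
    remaining = amount_cents
    # Consider coins from largest to smallest; never revisit a choice.
    for coin in sorted(coin_values, reverse=True):
        count, remaining = divmod(remaining, coin)
        if count:
            counts[coin] = count
    if remaining:
        raise ValueError("amount cannot be paid with these coins")
    return counts


coins = [200, 100, 50, 20, 10, 5, 1]
print(greedy_change(1797, coins))        # the $17.97 example
print(greedy_change(15, coins + [12]))   # the 12c counterexample
```

With the standard coins the $17.97 call uses 15 coins, as on the slide. With the extra 12c coin the greedy choice for 15c is one 12c plus three 1c coins (4 coins), which shows why the greedy approach is not optimal for every coin set.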
Backtracking is a kind of DEPTH-FIRST travel through a tree
  It will find AN answer
  If you keep doing it, it will find ALL answers eventually
Branch & Bound is a kind of BREADTH-FIRST travel through a tree
  It will find the BEST (optimal) answer
  But, because it chops whole branches off, it usually won't find ALL possible answers

Heuristic strategy
Backtracking and branch-and-bound are "blind" in the sense that they do not look ahead beyond a local neighbourhood when expanding the state-space tree.
A heuristic strategy explores further: it looks at the difference between the current state and the goal state when pruning the state-space tree.

Heuristic strategy
A promising node in the state-space tree is defined as the one having the minimum value of a cost function
The cost function for a node v is defined as
  Cost(v) = g(v) + h(v)
where g(v) is the cost from the start state (root node) to v, and h(v) is the estimated cost from v to the goal.
As the path from v to the goal has not been found yet, the best that can be done is to use a heuristic value for h(v)

Strings
A string is a linear (typically very long) sequence of characters, e.g.
  A name, address, telephone number
  A word, a text file or a book
  0101010100000111100 (e.g. computer graphics)
  CGTAAACTGCTTTAATCAAACGC (a DNA sequence)
Two common types of strings:
  Text string: characters are from an alphabet (e.g. a subset of the ASCII or Unicode character set)
  Binary string: a simple sequence of 0s and 1s

Strings: some definitions
Length of a string: the number of characters in the string
Empty string: a string with zero characters, i.e. length = 0
A string is often represented as an array, e.g. S[0…n-1] is a string S of length n
Substring: a contiguous part of a string, e.g. S[10…19]

String Matching
Given a text string of length n, and a pattern which is also a string, of length m (m <= n), find occurrences of the pattern within the text
Here, we use "text" to refer to all types of strings.
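As a minimal sketch of the string matching problem just stated, the following Python function tries every possible starting position of the pattern in the text and compares characters left to right. The function name `brute_force_matches` is hypothetical, and returning a list of all starting positions is an assumption made for illustration.

```python
def brute_force_matches(text, pattern):
    """Return every index s where pattern occurs in text.

    Naive approach: try each alignment s = 0 .. n-m and compare the
    pattern against text[s : s+m] one character at a time.
    """
    n, m = len(text), len(pattern)
    positions = []
    for s in range(n - m + 1):          # outer loop: candidate start
        j = 0
        while j < m and text[s + j] == pattern[j]:
            j += 1                      # inner loop: matching characters
        if j == m:                      # all m characters matched
            positions.append(s)
    return positions


print(brute_force_matches("abcabaabcaba", "abaa"))
```

If the pattern is longer than the text, the outer loop never runs and the result is an empty list.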
Example:
text T = "In one sense, text strings are quite different objects from binary strings, since they are made up of characters from a large alphabet. In another sense, though, the two types of strings are equivalent, since each text character can be coded as a binary string"
pattern P = "strings"

String Matching
Let T[0…n-1] be the text (size n) and P[0…m-1] be the pattern (size m).
Finding an occurrence of P in T means finding a position s such that
  T[s…s+m-1] = P[0…m-1], 0 <= s <= n-m
Example: text T = "abcabaabcaba" (n = 12), pattern P = "abaa" (m = 4); P occurs in T at s = 3

Brute-Force String Matching
1. Align the pattern P (size m) against the first m characters of the text T
2. Match the corresponding pairs of characters from left to right
   If all m pairs of characters match, then an occurrence of P has been found in T and the algorithm can stop
   If a mismatching pair is encountered, then the pattern P is shifted one position to the right and Step 2 is repeated

Brute-Force String Matching: Complexity
Two nested loops
  Outer loop: indexes through all possible starting positions of P in T; executed at most (n-m+1) times
  Inner loop: indexes through each character in P; executed at most m times
Worst case: O((n-m+1)m) -> O(nm); O(n²) if m = n/2
Average case: we usually do not need to compare all m characters before shifting the pattern (increasing the outer loop index, s), so ~ O(n+m)
Works even with a potentially unbounded alphabet

Brute-Force String Matching: Example
T = "abacaabaccabacabaabb" (n = 20)
P = "abacab" (m = 6)
The Brute-Force algorithm performs 27 comparisons.
If we make some clever observations along the way, we can reduce this number greatly! …

Horspool's algorithm
Assume the alphabet is of fixed, finite size.
e.g. just 26 possible letters to choose from
Basic concept
  The comparison starts at the end of P and moves backward to the front of P
  Maximise the "shift" of P without risking the possibility of missing an occurrence of P
  By looking at the character in T, T[i], that aligns with the last character of P, the shift can be determined according to what is in P

Horspool's algorithm: Calculate shift values
For a particular character c in T, its shift depends on if and how it appears in the pattern P.
For a fixed, finite size of alphabet, we can precompute the shifts for all possible characters in the alphabet and store the shift values in a lookup table
The table is indexed by all possible characters that can be encountered in a text

Horspool's algorithm: Calculate shift values, t(c)
Shift by the pattern's length, m, if c is not among the first m-1 characters of the pattern
Otherwise: shift by the distance from the rightmost c among the first m-1 characters of the pattern to its last character
  P = "leader": t(a) = 3, t(d) = 2, t(e) = 1, t(l) = 5, t(r) = 6
  P = "recorder": t(c) = 5, t(d) = 2, t(e) = 1, t(o) = 4, t(r) = 3

Horspool's algorithm: Calculate shift values, t(c)

| index | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| Pattern (m=8) | R | E | C | O | R | D | E | R |
| Shift value t(c) | | | 5 | 4 | 3 | 2 | 1 | |

Shift value t(c) = m-1-(index of the rightmost occurrence of the letter within the first m-1 characters of P)

Horspool's algorithm
1. For a given pattern P of length m, and the alphabet used in both the pattern and the text T, construct the shift table, Table
2. Align P against the beginning of T
3. Repeat the following until either a match of P is found or P reaches beyond the last character of T
   1) Start with the last character in P
   2) Compare the corresponding characters in P and T until either all m characters in P are matched or a mismatching pair is encountered
      a. If P is fully matched, stop
      b. If a mismatching pair is encountered, shift the pattern by Table(c) characters to the right along the text, where c is the character in T that is aligned with the last character of the pattern P

How much to jump?
Move the pattern to its next place
Check the pattern against the text, starting from the right
Continue till you find an error …
Look up the "wrong" text letter in the jump table
"Jump" the pattern that far … LESS the number of characters along in the pattern where you found the error
Say the error letter was "S" and the table says S = "jump 10"
BUT, you found the "S error" 4 letters 'into' the Pattern
THEN you jump 10 - 4 = 6 spaces

[Figure: jump table t(c) over the alphabet a … z for the pattern "r i t h m"; letters not in the pattern have t(c) = 5. The pattern is shown aligned against text ending "… b h m", before and after the jump.]
The error letter is a "b" … the Table says "for b, jump = 5"
BUT, the error was found 2 places 'back' in the Pattern, so really only jump 5 - 2 = 3 places

Find a Hamiltonian circuit starting from a vertex (say "a") in the following graph using backtracking
[Figure: graph with vertices a, b, c, d, e, f]
Hamiltonian circuit: a path that visits all the graph's vertices exactly once before returning to the starting vertex

Complexity
P
NP
NP-complete
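The shift-table rule and the three numbered steps of Horspool's algorithm given earlier in this section can be sketched in Python as follows. This is an illustrative sketch only: the names `shift_table` and `horspool_search` are hypothetical, the table is stored as a dictionary holding just the letters that occur in the first m-1 pattern characters, and every other character is assumed to shift by m.

```python
def shift_table(pattern):
    """Shift t(c) for each character in the first m-1 pattern positions.

    Later occurrences overwrite earlier ones, so each entry ends up as
    the distance from the rightmost occurrence to the last character.
    """
    m = len(pattern)
    table = {}
    for i in range(m - 1):
        table[pattern[i]] = m - 1 - i
    return table


def horspool_search(text, pattern):
    """Return the index of the first match of pattern in text, or -1."""
    n, m = len(text), len(pattern)
    if m == 0:
        return 0
    table = shift_table(pattern)
    i = m - 1                      # text index under the pattern's last char
    while i < n:
        k = 0                      # number of characters matched so far
        while k < m and pattern[m - 1 - k] == text[i - k]:
            k += 1                 # compare right to left
        if k == m:
            return i - m + 1       # full match found
        # Shift by the table entry for the text character aligned with
        # the last pattern character (default: whole pattern length).
        i += table.get(text[i], m)
    return -1


print(shift_table("leader"))
print(horspool_search("abacaabaccabacabaabb", "abacab"))
```

For "leader" the table holds l = 5, e = 1, a = 3, d = 2, and "r" falls back to the default shift of 6, matching the values on the slide. Note that this sketch follows step 3b (shift by Table(c) for the character under the pattern's last position) and not the "jump less the characters matched" variant described on the "How much to jump?" slide.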