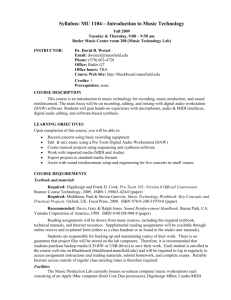

The instructor will discuss… - TI:ME Technology In Music Education

advertisement