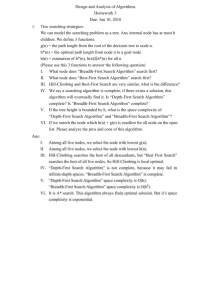

NP-Completeness


Scott Perryman

Jordan Williams

NP-complete problems form a class of decision problems in computer science for which no polynomial-time algorithm is known.

A decision problem is a question with a YES or NO answer, so an algorithm that solves it has exactly two possible outputs (e.g., "Is this path optimal?").

NP does NOT stand for Non-Polynomial; it stands for Nondeterministic Polynomial time.

A decision problem is NP-complete if it is both in NP and NP-hard.

A proposed solution can be verified quickly, in polynomial time, but solving these problems with any known algorithm can take years as their data sets grow.
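To illustrate polynomial-time verification, here is a minimal Python sketch for the zero-sum subset problem (does some non-empty subset of a set of integers sum to zero?). The function name `verify_zero_subset` and the certificate format (a list of distinct indices into the input) are hypothetical choices for this example. Checking a proposed answer takes time linear in the input, even though finding one may require trying exponentially many subsets.

```python
from typing import Sequence


def verify_zero_subset(numbers: Sequence[int], certificate: Sequence[int]) -> bool:
    """Check a proposed YES-certificate for the zero-sum subset problem.

    Hypothetical format: `certificate` lists distinct indices into `numbers`.
    Every check below is linear in the input size, so verification is
    polynomial time.
    """
    if not certificate:
        return False  # the subset must be non-empty
    if len(set(certificate)) != len(certificate):
        return False  # each element may be used at most once
    if any(i < 0 or i >= len(numbers) for i in certificate):
        return False  # every index must point into the input
    return sum(numbers[i] for i in certificate) == 0
```

For example, for the set {-7, -3, -2, 5, 8}, the certificate `[1, 2, 3]` (the elements -3, -2 and 5) is accepted.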

NP-completeness was introduced by Stephen Cook in 1971 at the 3rd Annual ACM Symposium on Theory of Computing.

His paper, "The Complexity of Theorem-Proving Procedures," started the debate over whether NP-complete problems could be solved in polynomial time on a deterministic Turing machine.

The Clay Mathematics Institute now offers a $1 million prize for either proving that NP-complete problems can be solved in polynomial time (P = NP) or proving that they cannot (P ≠ NP).

Approximation: Instead of searching for the optimal solution, find one that is guaranteed to be close to optimal.

Heuristic: An algorithm that works in many cases, but with no proof that it is both always fast and always correct.

Parameterization: If certain parameters of the input are

constant, you can usually find a faster algorithm.

Restriction: By restricting the input to a less complex structure, faster algorithms are usually possible.

Randomization: Use randomness to reduce the average

running time, and allow some small possibility of failure.

Traveling Salesman Problem: Given a list of cities and the distances between each pair of cities, find the shortest route that visits each city exactly once and returns to the original city.

The decision version of the problem (given a tour length L, decide whether the graph has any tour shorter than L) is NP-complete.

The optimal solution is the shortest tour that visits each city exactly once; intuitively, it avoids big "jumps" between distant cities.

Brute force runtime = O(n!)
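A brute-force solver can be sketched in Python as follows, assuming the distances are given as a symmetric n × n matrix `dist` with cities numbered from 0 and at least one city. The function names are hypothetical. Fixing city 0 as the start leaves (n − 1)! orderings to try, which is where the O(n!) growth comes from; the decision version simply compares the best length with L.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def tour_length(dist: Matrix, tour: Sequence[int]) -> float:
    """Length of a closed tour that returns to its first city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))


def brute_force_tsp(dist: Matrix) -> Tuple[List[int], float]:
    """Try every ordering of cities 1..n-1 after a fixed start at city 0."""
    n = len(dist)
    best_tour: List[int] = list(range(n))
    best_len = tour_length(dist, best_tour)
    for rest in permutations(range(1, n)):  # (n - 1)! orderings
        tour = [0, *rest]
        length = tour_length(dist, tour)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len


def tsp_decision(dist: Matrix, limit: float) -> bool:
    """Decision version: is there a tour shorter than `limit`?"""
    return brute_force_tsp(dist)[1] < limit
```

With four cities this checks only 3! = 6 orderings, but with 20 cities it would check more than 10¹⁷.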

Heuristic algorithm (nearest neighbor)

The starting node can be chosen at random or be the same every time

From the current node, move to the unvisited node connected by the edge with the least weight

Repeat from that node until every node has been visited, then return to the start

Not guaranteed to find the best solution, but runs relatively quickly

Runtime of O(n²)

[Figure: starting map and best solution found]

Initialization
cost ← 0
visits(k) ← 0 for all 1 ≤ k ≤ n
e ← 1 /* e is the current city; the tour starts at city 1 */
visits(1) ← 1
C(1) ← 1 /* C(r) is the r-th city on the tour */
For 2 ≤ r ≤ n
Do {
    Choose j with c(e, j) = min{ c(e, k) : visits(k) = 0 and 1 ≤ k ≤ n }
    cost ← cost + c(e, j)
    visits(j) ← 1
    e ← j
    C(r) ← j
}
cost ← cost + c(e, 1) /* return to the starting city */
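The corrected pseudocode translates into a short Python sketch, assuming an n × n distance matrix `dist` and cities numbered from 0 instead of 1; `nearest_neighbor_tour` is a hypothetical name. Each of the n − 1 steps scans all cities for the nearest unvisited one, giving the O(n²) runtime.

```python
from typing import List, Sequence, Tuple


def nearest_neighbor_tour(
    dist: Sequence[Sequence[float]], start: int = 0
) -> Tuple[List[int], float]:
    """Greedy tour: always move to the closest unvisited city."""
    n = len(dist)
    visited = [False] * n
    visited[start] = True
    tour = [start]
    cost = 0.0
    current = start
    for _ in range(n - 1):
        # O(n) scan for the unvisited city with the cheapest edge from `current`
        nxt = min(
            (k for k in range(n) if not visited[k]),
            key=lambda k: dist[current][k],
        )
        cost += dist[current][nxt]
        visited[nxt] = True
        tour.append(nxt)
        current = nxt
    cost += dist[current][start]  # close the tour by returning to the start
    return tour, cost
```

Because the choice at each step is greedy, a single long edge forced at the end can make the tour much worse than optimal; trying several starting nodes is a cheap way to reduce that risk.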

Knapsack Problem: Given a set of items, each with a weight and a value, determine which items to pick to maximize the total value while staying within the weight limit of your knapsack (a backpack).

The optimal solution is the highest total value of items that "fit" in the knapsack.

Brute force runtime = O(2ⁿ)
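A brute-force search can be sketched in Python by enumerating all 2ⁿ subsets of the items. Weights and capacity are assumed to be non-negative integers, and `brute_force_knapsack` is a hypothetical name.

```python
from itertools import combinations
from typing import Sequence


def brute_force_knapsack(
    weights: Sequence[int], values: Sequence[int], capacity: int
) -> int:
    """Best total value over all 2^n subsets that fit within `capacity`."""
    n = len(weights)
    best = 0
    for r in range(n + 1):  # subset sizes 0..n
        for subset in combinations(range(n), r):
            if sum(weights[i] for i in subset) <= capacity:
                best = max(best, sum(values[i] for i in subset))
    return best
```

For example, with weights `[1, 3, 4, 5]`, values `[1, 4, 5, 7]` and capacity 7, the best choice is the items of weight 3 and 4, for a value of 9.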

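Dynamic programming approach: starts with the last item in the list and skips it if it would exceed the remaining capacity

Recurses through every item, adding an item to the knapsack only if there is still room and taking it gives a higher total value

Works for item duplicates

Runtime = O(kn), where k is the capacity and n is the number of items, if the result of each subproblem is stored and reused (this is pseudo-polynomial, because k can be exponential in the number of bits needed to write it)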

INTEGER-KNAPSACK(Weight, Value, Item, Capacity)
{
    if Item = 0
        return 0
    else if (Capacity - Weight[Item] < 0)
        /* the item does not fit: skip it */
        return INTEGER-KNAPSACK(Weight, Value, Item-1, Capacity)
    else
        /* best value without the item */
        a = INTEGER-KNAPSACK(Weight, Value, Item-1, Capacity)
        /* best value with the item */
        b = Value[Item] + INTEGER-KNAPSACK(Weight, Value, Item-1, Capacity - Weight[Item])
        return max(a, b)
}
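One way to get the O(kn) runtime is to memoize the corrected recursion, so each (item, capacity) pair is solved only once. This Python sketch assumes non-negative integer weights and capacity and uses `functools.lru_cache` as the memo table; `integer_knapsack` is a hypothetical name. Because Python limits recursion depth (1000 frames by default), it suits lists of a few hundred items at most.

```python
from functools import lru_cache
from typing import Sequence


def integer_knapsack(
    weights: Sequence[int], values: Sequence[int], capacity: int
) -> int:
    """Memoized INTEGER-KNAPSACK: at most (n + 1)(k + 1) distinct subproblems."""

    @lru_cache(maxsize=None)
    def best(item: int, cap: int) -> int:
        # `item` counts items 1..item as in the pseudocode (stored at index item - 1)
        if item == 0:
            return 0
        w, v = weights[item - 1], values[item - 1]
        if cap - w < 0:
            return best(item - 1, cap)  # item does not fit: skip it
        without_item = best(item - 1, cap)
        with_item = v + best(item - 1, cap - w)
        return max(without_item, with_item)

    return best(len(weights), capacity)
```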

Subset Sum Problem: Given a set of integers, does this set contain a non-empty subset whose sum is zero?

Given this set

Would be true because

A variation asks whether some subset sums to a given target integer s.

Brute force runtime = O(2ⁿ · n)
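A brute-force check can be sketched in Python by trying every non-empty subset; the optional `target` parameter (a hypothetical name, as is `find_subset`) covers the variation with a nonzero sum. There are 2ⁿ − 1 non-empty subsets and summing each takes O(n) time, which gives the O(2ⁿ · n) bound.

```python
from itertools import combinations
from typing import Optional, Sequence, Tuple


def find_subset(
    numbers: Sequence[int], target: int = 0
) -> Optional[Tuple[int, ...]]:
    """Return a non-empty subset summing to `target`, or None if none exists."""
    for r in range(1, len(numbers) + 1):  # non-empty subsets only
        for subset in combinations(numbers, r):
            if sum(subset) == target:
                return subset
    return None
```

For example, `find_subset([-7, -3, -2, 5, 8])` returns `(-3, -2, 5)`.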

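Approximation algorithm: trims its list of partial sums at each step so that it runs in polynomial time

S: the list of partial sums kept while searching for a target sum s (the inputs x1, …, xN are positive integers, and c between 0 and 1 sets the accuracy)

List U contains the numbers that appear in list T or list S (their union)

Returns true if list S contains a number that is at most the target sum s but at least (1 − c)s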

S = a list containing one element, 0
for i = 1 to N
    T = a list of xi + y, for all y in S
    U = T ⋃ S
    sort U //ascending, so U[0] is the smallest
    make S empty
    y = U[0]
    S.push(y)
    for each z in U, in increasing order
        //eliminate numbers close to one another
        //and throw out elements greater than s
        if (y + cs/N < z ≤ s)
            y = z
            S.push(z)
if S contains a number between (1 − c)s and s
    return true
else
    return false
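A Python sketch of the pseudocode above, assuming positive integers `xs`, a positive target `s`, and an accuracy parameter `0 < c < 1`; `approx_subset_sum` is a hypothetical name, and Python sets and `sorted` stand in for the union and sort steps. Every value kept in `S` is a genuine subset sum, so a `True` answer always corresponds to a real subset whose sum lies between (1 − c)s and s. Because kept values are at most s and differ by more than cs/N, `S` never holds more than about N/c + 1 values, so the running time is polynomial in N and 1/c.

```python
from typing import Sequence


def approx_subset_sum(xs: Sequence[int], s: int, c: float) -> bool:
    """Trimmed-list approximation for subset sum (assumes positive xs, 0 < c < 1)."""
    n = len(xs)
    S = [0]  # partial sums kept so far; 0 is the empty subset
    for x in xs:
        T = [x + y for y in S]
        U = sorted(set(T) | set(S))  # union of T and S, smallest first
        y = U[0]
        S = [y]
        for z in U:
            # keep z only if it is more than cs/N above the last kept value
            # and does not exceed the target s
            if y + c * s / n < z <= s:
                y = z
                S.append(z)
    return any((1 - c) * s <= v <= s for v in S)
```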

Large NP-complete problems push the limits of our computing power

What can be done about NP-complete problems depends on whether P = NP or P ≠ NP

If any one NP-complete problem can be solved in polynomial time, then every problem in NP can be, and P = NP.

Some areas of cryptography, such as public-key cryptography, rely on certain problems being hard and would be easily broken

Proofs for the other six Millennium Prize Problems could likely be found quickly by computer.

Transportation would become more efficient thanks to easy route finding.

Problems in operations research and protein structure prediction in biology would become easy to solve

Any yes/no question whose answers can be checked efficiently (any problem in NP) could be answered by a machine rather than a person, as long as the polynomial-time algorithms have small exponents and constant factors, not, for example, n¹⁰⁰⁰

P ≠ NP is currently believed to be true, based on years of research and the lack of an efficient algorithm for any NP-complete problem

If so, hard problems could not be solved efficiently, so computer scientists would focus on developing only partial solutions.

Even a proof of P ≠ NP would still leave open the average-case complexity of hard problems in NP.
