Emerging Technologies

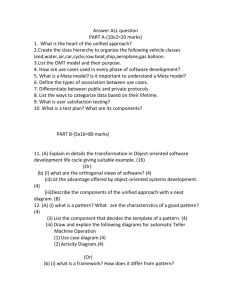

Emerging Technologies Project Prioritization
Washington DC, March 18th 2013

Agenda
• 10:00–10:30: presentation of 3 projects
  – Semantic web
  – Metadata
  – Data visualization
• 10:30–11:00: identification of additional projects
• 11:00–12:00: prioritization and lead identification

Project Prioritization

Project | Priority | Feasibility | Technology | Lead (FDA) | Lead (Industry)
Semantic web | | | | |
Data visualization | | | | |
Metadata definition | | | | |
Metadata E2E process | | | | |
Metadata: study-level standard to BRIDG/JANUS | | | | |
Metadata: contextual data | | | | |
Metadata: versioning, upcoding, … | | | | |
CSV & multi-tenant cloud solutions | | | | |
Big data: definition & architecture | | | | |

Project Description Template
Project X. Title
• Objective/Scope: …
• Approach:
  – Step 1
  – Step 2
• Deliverable:
  – Document?
  – Pilot?
• Timelines: …

Project A. Semantic web
• Objective/Scope:
  – Investigate how formal semantic standards can support the clinical trial data life cycle, from protocol to submission
  – Clarify which RDF-related skill set the industry needs to develop
• Approach:
  – Investigate opportunities and challenges (barriers)
  – Identify use cases (inventory of what can be done):
    • Area 1: data standards
    • Area 2: represent clinical trial data in RDF (more experimental; work done by W3C)
    • Area 3: annotate TLFs with information on where the data came from (linked to traceability)
    • Area 4: model the protocol (understand what is feasible given what has already been done)
  – Link with other “knowledge providers” (NCI, BRIDG, SNOMED, ...)
• Deliverable:
  – Readiness assessment: is semantic web technology useful or not, and what is its relationship to metadata?
  – Catalogue and prioritization of use cases
  – Identification of, execution of, and experimentation on a high-priority use case
• Timelines: see the project proposal from Fred (a deliverable every 3 months)

Project B. Data Visualization
• Objective/Scope: identify new technologies for navigating large amounts of information to find the “tree in the forest” (e.g.
  safety signal) …
• Approach:
  – Identify the audience (reviewers, DMC, …) and related pain points where visualization could help facilitate decision making
    • Scientific reviewers (NIH, FDA CDER, FDA CBER, sponsor medical team, ...)
  – Use cases/requirements: why/how to use data visualization (in addition to tables)
    • How does clinical data monitoring/visualization translate into risk-based monitoring?
    • Should we include a gallery of visualization possibilities?
    • Note: make sure to reuse work already done around visualization (including work done at FDA/DIA)
  – Identify open source technology that could support visualization faster/better/cheaper
  – Interface/integration between data visualization tools and data standards: what would the requirements for data visualization be?
  – Develop test cases (e.g. NIH) with one tool
• Deliverable:
  – Stakeholder and use case document
  – Catalogue of open source tools that would fit the requirements
  – Requirements for data standards
  – Pilot with one tool (expanding on NIH?)
• Timelines: …

Project 1. Metadata Definitions
• Objective/Scope: common industry-wide definitions of metadata and related concepts
• Approach:
  – Definition plus an example or short description of usage
  – Build on/update existing definitions from FDA guidances and the CDISC glossary; check cross-industry definitions (e.g. Gartner)
  – To include:
    • Metadata (structural and operational), data elements, attributes
    • Master data and master reference data
    • Controlled terminology, code systems, value sets, permissible values
    • Data pooling, data integration, data aggregation
    • Interoperability, semantic interoperability
• Deliverable: not only a glossary but a practical guide with examples, to ensure common understanding and usage
• Timelines: 6 months

Project 2.
User guide for E2E use and implementation of metadata
• Objective/Scope:
  – Practical guide on how to implement metadata management (potentially with existing tools) within an organisation for a single trial
  – Gather a set of key requirements for a metadata management tool that would fulfil the needs of an E2E process within an organisation, as well as support data consistency for cross-trial analysis
• Approach:
  – Define study-level versus enterprise-wide metadata (what is needed to ensure automation)
  – Main user needs (collect user stories) at different phases: protocol definition, system set-up, data visualization and monitoring, integration of collected data, analysis and reporting, ...
  – Main process changes at different phases; definition of the key steps of the new process needed (basis of WI/SOP)
  – Link with the data traceability project
  – Identify additional requirements related to:
    • Versioning
    • Expansion of the metadata structure
    • Support for upcoding of standards and terminology
  – Pilot with available tools
• Deliverable:
  – Draft user stories for MDR tool selection
  – Draft set of work instructions to support MDR deployment
  – Pilot?
• Timelines: 12 to 18 months

Project 3. Study-level standards, define.xml, study metadata in JANUS/BRIDG: do they fit?
• Objective/Scope: ensure that the metadata defined by the sponsor and sent to FDA through define.xml fits the needs of JANUS (and of a BRIDG-based scientific data repository) for cross-trial analysis
• Approach:
  – Describe how study-level metadata and the trial summary in SDTM are stored in BRIDG (DefinedActivity): is this enough for cross-trial analysis?
    • Need to confirm whether this includes visits
  – How can define.xml be transformed into DefinedActivity? Is define.xml complete?
• Deliverable:
  – Recommendation for updates to the content/format of define.xml
  – Specification of a transformation function from define.xml to BRIDG DefinedActivity
• Timelines: 6 to 12 months

Project 4.
Contextual metadata for cross-trial analysis
• Objective/Scope: define the “contextual” metadata, in addition to structural metadata such as SDTM, that is needed to support data analysis and should be collected together with the data during clinical trial execution
• Approach:
  – Collect and document concrete examples (“user stories”) from staff performing cross-trial analysis, covering the different types of contextual information that would be useful when analysing clinical trial data (primary or secondary analysis)
    • Note: is this specific to a therapeutic area (TA), or general across TAs? To be clarified after a few user stories
  – Extract patterns from these user stories and define a generic structure that allows this information to be stored and exchanged between the different stakeholders; the structure should be compliant with ISO 21090 and BRIDG
  – Characterize how we would store and exchange this metadata (as part of define.xml?)
  – Pilot the proposed exchange standard
  – Check with the ADaM team and the Integrated Analysis group to ensure we build on work already done
• Deliverable:
  – Description of the additional metadata (non-structural, i.e. beyond SDTM-like structures) to be collected and stored for cross-trial analysis
  – Recommendation for an update to define.xml, or for a new standard to exchange this metadata
• Timelines: 12 months

Project C. CSV (computer system validation) and multi-tenant cloud solutions
• Objective/Scope: lower the barriers to adoption and validation of multi-tenant cloud solutions, and build understanding and awareness of gaps and technical solutions
• Approach:
  1. Catalogue existing efforts (challenges and lessons learned, e.g. OMOP)
  2. Establish definitions (multi-tenant shared infrastructure), agree the scope, and identify the regulatory frameworks (GxP, ...)
     that are relevant
  3. Write up and share valuable use cases: examples of what you would like to do today in a validated cloud environment, and how it would be valuable
  4. Write up and share the list of real and perceived gaps
  5. Write up best practices, available solutions and technical requirements that address the gaps while meeting specific regulatory requirements; recommend best practices for multi-tenant solutions
  6. Define a use case to pilot/test/leverage FedRAMP (the US government process for approving cloud services)
     – Inward/outward-facing Scientific Enclave concept?
  7. Share examples of use cases or successful implementations and explain the value
• Deliverable:
  – Documentation (PhUSE wiki?)
  – FDA pilot?
• Timelines: …

Project X. Big Data definition & architecture
• Objective/Scope: define what big data is and how we could/should share this type of information
• Approach:
  – Scoping:
    • Definition and level setting
    • Scope: type of information
    • Challenges and benefits
    • Work already done: where this discussion has already been taking place
  – Collect use cases
  – Research: propose a model or architecture for sharing this type of information
• Deliverable:
  – Definition of big data
  – Use cases
  – Architecture for big data sharing
• Timelines: …

BACKUP from Metadata Management “table”

Background on Metadata discussion
• 6 recurrent themes:
  1. Consistent use across the life cycle (Protocol, SAP → Submission)
  2. Across studies within an organisation
  3. Across studies and across partners
  4. Move away from MS Excel
  5. Version management (versions of standards and terminology; what was used when)
  6. Extensible metadata structures
• Scope:
  – Structural metadata (SDTM, terminology)
  – Operational metadata (contextual, implicit info)
  – Master data

Problem and how metadata helps?
• Attendee No. 1
  – Communicate data needs across groups within a company
  – Pass data across groups
  – E.g. relationship of Body Position with the observation value
• No. 2
  – Protocol, data collection, tabulation standards
  – If one changes, how is the rest kept consistent?
  – E.g. impact analysis for a change
• No. 3
  – Share across silos; the semantics need to be shared
  – Unambiguous communication
• No. 4
  – Analyse across different studies
  – Semantics need to be consistent
  – Structured and unstructured metadata
• No. 5
  – Standards management: consistency, and checking that everything aligns when standards are used in studies
  – Version management
• No. 6
  – Use metadata to support the life cycle
  – More automation
  – E.g. schedule of events generated from the protocol
• No. 7
  – Terminology management
  – Managing the CDISC terminology
• No. 8
  – End-to-end process across different collection systems
  – Away from Excel
  – E.g. protocol amendments and the unstructured logic behind them
• No. 9
  – Define a concept once and reuse it many times
  – E.g. endpoints and population copied by hand from the protocol into the SAP
  – Version management
• No. 10
  – Tools need to adapt to changing needs and greater capability
  – Version management
• No. 11
  – Clinical observation metadata OK, but rapid version change
  – While still serving current business needs
  – What did we use when; which versions were used?
• No. 12
  – Is XML past its sell-by date: can it meet the need?
  – Traceability, provenance
  – Building on the shifting sands of constant version change
  – Automation; remove silos
• No. 13
  – Within the organisation OK, but outsourcing causes a lack of understanding and communication
  – E.g. SDTM domain specification sent to a CRO

Themes
• Consistent use across the life cycle
  – Protocol, SAP → Submission
• Across studies within an organisation
• Across studies and across partners
• Move away from MS Excel
• Version management (versions of standards and terminology; what was used when)
• Extensible metadata structures

Metadata
• Definition of metadata
  – Structural
    • Description of the data, e.g. SDTM, data point attributes and data source, terminologies
  – Non-structural
    • Protocol amendment, data traceability
  – Master data
  – SAP?
• Standard v TA v Study v Organisation v Industry
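The Metadata slide defines structural metadata as the description of the data (data point attributes, terminologies), and Project 3 asks how define.xml could be transformed into a BRIDG DefinedActivity. As a minimal sketch of the first step of such a transformation, the Python below reads each variable's structural metadata out of a define.xml document using only the standard library. It assumes the CDISC ODM 1.3 elements that Define-XML builds on (ItemGroupDef, ItemRef, ItemDef, CodeListRef, CodeList); the VariableMetadata record, the function name and the sample OIDs are hypothetical, and the mapping onto the actual BRIDG DefinedActivity class is not shown.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List, Optional

# Define-XML extends CDISC ODM 1.3; its core elements live in this namespace.
ODM = "{http://www.cdisc.org/ns/odm/v1.3}"


@dataclass
class VariableMetadata:
    """Hypothetical flat record holding one variable's structural metadata."""
    dataset: str
    name: str
    data_type: str
    length: Optional[int]
    permissible_values: List[str] = field(default_factory=list)


def extract_structural_metadata(define_xml: str) -> List[VariableMetadata]:
    """Resolve each dataset's ItemRefs to ItemDefs and their codelists."""
    root = ET.fromstring(define_xml)
    # Index ItemDefs by OID so that ItemRef/@ItemOID can be looked up.
    item_defs = {item.get("OID"): item for item in root.iter(f"{ODM}ItemDef")}
    # Map each CodeList OID to its coded values (enumerated or decoded items).
    code_lists = {
        cl.get("OID"): [
            child.get("CodedValue")
            for child in cl
            if child.tag in (f"{ODM}EnumeratedItem", f"{ODM}CodeListItem")
        ]
        for cl in root.iter(f"{ODM}CodeList")
    }
    records = []
    for group in root.iter(f"{ODM}ItemGroupDef"):
        for ref in group.findall(f"{ODM}ItemRef"):
            item = item_defs[ref.get("ItemOID")]
            cl_ref = item.find(f"{ODM}CodeListRef")
            length = item.get("Length")
            records.append(
                VariableMetadata(
                    dataset=group.get("Name"),
                    name=item.get("Name"),
                    data_type=item.get("DataType"),
                    length=int(length) if length is not None else None,
                    permissible_values=(
                        code_lists.get(cl_ref.get("CodeListOID"), [])
                        if cl_ref is not None
                        else []
                    ),
                )
            )
    return records


# Illustrative fragment only: OIDs and names are made up, and a real define.xml
# carries many more required attributes and elements.
SAMPLE = """<ODM xmlns="http://www.cdisc.org/ns/odm/v1.3">
  <Study OID="S.EXAMPLE">
    <MetaDataVersion OID="MDV.EXAMPLE" Name="Example">
      <ItemGroupDef OID="IG.VS" Name="VS" Repeating="Yes">
        <ItemRef ItemOID="IT.VS.VSTESTCD" Mandatory="Yes"/>
        <ItemRef ItemOID="IT.VS.VSORRES" Mandatory="No"/>
      </ItemGroupDef>
      <ItemDef OID="IT.VS.VSTESTCD" Name="VSTESTCD" DataType="text" Length="8">
        <CodeListRef CodeListOID="CL.VSTESTCD"/>
      </ItemDef>
      <ItemDef OID="IT.VS.VSORRES" Name="VSORRES" DataType="text" Length="200"/>
      <CodeList OID="CL.VSTESTCD" Name="Vital Signs Test Code" DataType="text">
        <EnumeratedItem CodedValue="SYSBP"/>
        <EnumeratedItem CodedValue="DIABP"/>
      </CodeList>
    </MetaDataVersion>
  </Study>
</ODM>"""

if __name__ == "__main__":
    for rec in extract_structural_metadata(SAMPLE):
        print(rec.dataset, rec.name, rec.data_type, rec.length, rec.permissible_values)
```

Resolving ItemRefs through an OID index follows the way define.xml itself links datasets, variables and codelists. A real transformation would also have to carry over origins, derivation methods and value-level metadata, which this sketch ignores.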
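The themes and several attendee problems keep returning to version management (“what did we use when”) and to impact analysis for a change. The Python below is a minimal sketch of a data structure that could answer both questions, assuming only that each study records the date on which it adopted each version of a standard or terminology. The VersionRegistry class and its methods are hypothetical illustrations, not the API of any existing MDR tool; the study identifiers and adoption dates are made up.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Optional, Tuple


class VersionRegistry:
    """Hypothetical registry: which version of each standard a study used, from when."""

    def __init__(self) -> None:
        # study id -> list of (adoption date, standard name, version)
        self._history: Dict[str, List[Tuple[date, str, str]]] = defaultdict(list)

    def adopt(self, study: str, standard: str, version: str, on: date) -> None:
        """Record that `study` started using `version` of `standard` on date `on`."""
        self._history[study].append((on, standard, version))

    def version_in_use(self, study: str, standard: str, on: date) -> Optional[str]:
        """'What did we use when': latest version of `standard` adopted on or before `on`."""
        candidates = [
            (adopted_on, version)
            for adopted_on, std, version in self._history.get(study, [])
            if std == standard and adopted_on <= on
        ]
        if not candidates:
            return None
        return max(candidates, key=lambda c: c[0])[1]

    def studies_using(self, standard: str, version: str, on: date) -> List[str]:
        """Impact analysis: studies that have `version` of `standard` in force on `on`."""
        return sorted(
            study
            for study in self._history
            if self.version_in_use(study, standard, on) == version
        )


if __name__ == "__main__":
    registry = VersionRegistry()
    # Study identifiers and adoption dates are made up for illustration.
    registry.adopt("STUDY-001", "SDTMIG", "3.1.2", date(2012, 1, 10))
    registry.adopt("STUDY-001", "SDTMIG", "3.1.3", date(2013, 6, 1))
    registry.adopt("STUDY-002", "SDTMIG", "3.1.3", date(2013, 2, 1))

    print(registry.version_in_use("STUDY-001", "SDTMIG", date(2013, 3, 18)))  # 3.1.2
    print(registry.studies_using("SDTMIG", "3.1.3", date(2013, 3, 18)))      # ['STUDY-002']
```

Keeping the full adoption history, rather than overwriting a study's current version, is what makes “what was used when” answerable after the fact; the same history drives the impact analysis when a new version of a standard or terminology is released.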