Models for Evaluating Grade-to-Grade Growth

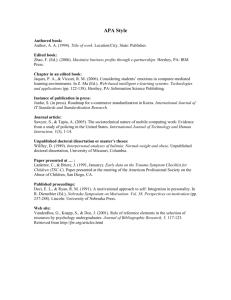

LMSA Presentation
Robert L. Smith and Wendy M. Yen, Educational Testing Service
Unpublished Work © 2005 by Educational Testing Service

Introduction
• NCLB requires states to measure proficiency in specific content areas with outcome measures tied to content and performance standards.
• Most users first think of vertical scales to measure growth.
• Title I of this legislation does not require use of vertical scales, and most states do not have them in place.
• Most states are currently using cross-sectional results to evaluate their adequate yearly progress.
• Tests to assess skills taught in stand-alone courses (e.g., Algebra, Geometry, Biology, Physics) are not amenable to vertical scaling because they assess different kinds of knowledge and skills.
• Despite these issues, educators have a need to measure student growth from year to year.
• This presentation explores the growth questions raised by parents and educators and describes three methods of assessing this growth.

Different Constituencies
• To understand what educators wanted in terms of growth measures, we gathered information from educators via phone interviews, large group meetings, and a small working group within a particular state.
• With these educators we discussed the pros and cons of three types of growth measures and listened to the issues the educators were trying to address.
• It became clear that within a school district there are different constituencies that want information on student growth.
• These constituencies included parents, teachers, and administrators.
• In many cases they wanted similar information, but there were some important differences.

Parents’ Interests
• Is my child making a year’s worth of progress in a year?
• Is my child growing appropriately toward meeting state standards?
• How far away is my child from becoming Proficient?
• Is my child growing as much in English Language Arts as in Math?
• Did my child grow as much this year as last year?
• Is Child A growing as much as Child B (who is in a different grade)?

Teachers’ Interests
• Did my students make a year’s worth of progress in a year?
• Did my students grow appropriately toward meeting state standards?
• How close are my students to becoming Proficient?
• Are there students with unusually low growth who deserve special attention?

Administrators’ Interests
• Did the students in our district/school make a year’s worth of progress this year in all content areas?
• Are our students growing appropriately toward meeting state standards?
• How close are our students to becoming Proficient?
• Does this school or program show as much growth as another school or program?
• Does this district show as much growth as the state?
• In answering these questions, administrators care about the following:
– Can I measure the growth of students even if they do not change proficiency classifications from one year to the next?
– Can I do this taking into account the full information of the test scores (i.e., look at all changes in student scores, not just changes in proficiency categories)?
– Can I do this in a way that is technically sound?
– Can I pool together results from different grades to draw summary conclusions?
– Can I gauge expected growth in high school where students are moving across courses (e.g., Biology to Chemistry) that cannot be vertically scaled?
– Can I communicate important results clearly to teachers, other administrators, the school board, and the media?

Nature of Growth Information
• All of the questions raised here presume a method of evaluating whether a given amount of growth is reasonable and appropriate.
• This evaluation has two necessary aspects:
– One is normative.
– The other is absolute.
• Normative growth information provides appropriate background for evaluating whether growth is typical or unusually large or small.
• Absolute growth is essential in a standards-referenced testing environment where student performance is compared to absolute standards (e.g., Proficient).

Growth Model Options
Given
• the complexity of K-12 assessment
• the limited ability to track students
• the non-progressive nature of some programs
• the different constituencies interested in growth information
it may be difficult to recommend any single approach to measuring growth.

We describe three methods for measuring growth in a K-12 context and discuss their strengths and weaknesses:
• Vertical Scales
• Norms
• Expectancy Tables

Vertical Scales
• In a vertical scale, scale scores are produced that run continuously from the lowest grade to the highest grade, with substantial overlap of the scale scores produced at adjacent grades.
• The goal is to have scale scores obtained from different test levels that have the same meaning (a 500 means the same thing if obtained from the grade 4 test or the grade 5 test).
• Vertical scales are most commonly built by linking tests in adjacent grades using item response theory (IRT).
• The most commonly used IRT models assume the construct being measured is essentially unidimensional.
• If a vertical scale is built to span tests administered in grades 2-11, this would imply a progression of learning throughout this range of grades.
• These assumptions can be untenable if curriculum is designed to have large distinct sub-areas of content that are not taught or learned hierarchically.

Vertical Scales Advantages
1. When the underlying assumptions are met, vertically scaled tests produce scale scores that are comparable across grades. Growth can be assessed by looking at the change in a student’s scale scores from one grade to the next.
2. If students are tracked over more than adjacent grades, vertical scaling is conceptually the most straightforward option.
3. The scale scores can be used for many types of statistical analyses.

Vertical Scales Disadvantages
1. Vertical scaling makes the implicit assumption that the same construct is being measured at the top and bottom of the scale. For example, the mathematics taught in grade 11 is assumed to be a progression of mathematics taught in grade 2. This may be difficult to justify when the vertical scaling includes many grades. If there is substantial grade-specific content, there can be disordinal results (e.g., grade 6 scores on average are lower than grade 5 scores).
2. Caution is needed when comparing growth in different parts of the scale. As Braun (1988) noted, growth is most accurately evaluated by comparing students who start at the same place. When students start at different places on a scale, differences in scale units can greatly complicate interpretations.
3. By themselves, vertical scales carry no normative information or standards-referenced information.
4. A vertical scale can highlight inconsistencies between standards set at different grades. For example, it might show that the Basic level of proficiency requires a scale score of 510 at grade 4 and a 508 at grade 5; this disordinality of standards would not be desirable.
5. Scale scores have no intrinsic meaning and can be difficult to explain. It is possible to conduct “scale anchoring,” which provides information about what a 500 means (i.e., what students at that score typically know and are able to do). Over time, users can develop an understanding of the scores.
6. The development of vertical scales requires a special data collection and analysis.
7. Courses with distinct content cannot be vertically scaled.
8. Vertical scaling does not always work.
9. In reality, because student learning and test content change so much over grades, 1 unit of growth at grade 3 typically does not mean the same thing as 1 unit of growth at, say, grade 7. Thus, even if a vertical scale is produced, some type of normative or expected growth information must be considered in determining if a given amount of growth is “appropriate.”

Norms
• Norms provide information about a student in relation to a reference group.
• Information is usually in the form of percentiles, normal curve equivalents (NCEs), or z-scores.
• Each student can be assigned a normative score that indicates their relative position in the grade cohort.
• On average, it is expected that a student, when at a higher grade the next year, will receive about the same normative score.
• A negative change indicates less growth than typically seen.
• A positive change indicates more growth than typically seen.

Norms Advantages
1. Norms are fairly well understood and fairly easy to explain to parents.
2. Norms allow comparisons of relative standing and growth in relation to the reference group.
3. Norms make minimal assumptions about the curriculum, tests, or test scales.
4. Norms allow comparisons of performance across content areas, e.g., Johnny performed relatively better in math than he did in English/language arts.
5. Given that states currently conduct census testing for NCLB, the development of state norms requires no special data collection.

Norms Disadvantages
1. Norms are calculated relative to a particular population at a particular time. If “rolling” norms are used to accommodate changes in populations over time, changes in the norms must be considered separately from changes in individual students. For example, if the norm group increases in overall performance from 2003 to 2004, then a student who improves in an absolute sense can appear to not be improving in a relative sense (i.e., not improving as much as students did on average).
2. There is no continuous growth scale on which to display performance or conduct statistical analyses.
3. Expectations are based on cross-sectional data, not longitudinal data that reflect actual student growth.
4. Changes in population demographics can require the development of new norms to provide an appropriate reference group.
5. There is no direct connection between normative expectations and whether a student is progressing sufficiently toward becoming Proficient (or some other absolute standard).
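
The normative scores mentioned above (percentiles, NCEs, z-scores) can be illustrated with a minimal sketch. It is not part of the original presentation: the function names are hypothetical, and it assumes the conventional NCE definition (a normalized score with mean 50 and standard deviation 21.06). It shows why a change in percentile rank is not an equal-interval measure of change in relative standing.

```python
from statistics import NormalDist

NCE_SD = 21.06  # conventional NCE scaling: mean 50, SD 21.06


def percentile_rank(score, cohort_scores):
    """Percent of the cohort below `score`, counting ties as half (hypothetical helper)."""
    below = sum(s < score for s in cohort_scores)
    ties = sum(s == score for s in cohort_scores)
    return 100.0 * (below + 0.5 * ties) / len(cohort_scores)


def nce_from_percentile(pr):
    """Convert a percentile rank strictly between 0 and 100 to an NCE."""
    z = NormalDist().inv_cdf(pr / 100.0)  # normal deviate for this percentile
    return 50.0 + NCE_SD * z


def normative_change(pr_year1, pr_year2):
    """Change in relative standing between two years, expressed in NCE units."""
    return nce_from_percentile(pr_year2) - nce_from_percentile(pr_year1)


# The same 10-point percentile gain is a much larger shift near the tail
# of the distribution than near the middle.
middle_gain = normative_change(50, 60)
tail_gain = normative_change(89, 99)
print(round(middle_gain, 1), round(tail_gain, 1))
```

A change near zero corresponds to the typical case described above, in which a student holds about the same relative position from one grade to the next.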

Norms
• On the surface, norms are relatively easy to understand and therefore appealing for a parent/teacher audience.
• However, norms in and of themselves are not growth measures, and analyses of them are required to draw appropriate conclusions about growth.
• For example, analysts must recognize that:
– on average, a student’s score is not expected to be as extreme the following year as the previous one (regression toward the mean), and
– technically sound growth measures should be expressed in NCEs rather than percentiles.

Tables of Expected Growth
Using longitudinal data, regression analyses can be conducted in which higher-grade scores are regressed on the scores from the next lower grade. The results of this analysis could be summarized in tables that show, e.g., how grade 3 students who obtained a given score on the grade 3 test usually scored on the grade 4 test. These tables would be used to determine whether a student is making typical progress.

These results can be developed and expressed using within-grade scales that are not vertically connected. Differences between actual and expected performance can also be standardized to allow for comparisons across grades and content areas.

Tables of Expected Growth Advantages
1. Expectations are fairly straightforward to explain and understand, but more complicated than norms.
2. Expectations permit comparisons of growth relative to growth typically seen in other students in the state, district, or school, depending on the level of analysis.
3. Growth expectations require minimal assumptions about the data.
4. Expectations can be developed between nonhierarchical courses (e.g., Biology and Chemistry).
5.
Growth expectations are based on longitudinal data reflecting how other students have progressed from Year 1 to Year 2. Thus, expectations can be more accurate than the cross-sectional normative data.

Tables of Expected Growth Disadvantages
1. Expectations are calculated relative to a particular population at a particular time. The expectations might have to be recalculated every year and would thus be labor intensive.
2. There is no continuous growth scale on which to display performance or to conduct statistical analyses.
3. There is no direct connection between normative expectations and whether a student is progressing sufficiently toward becoming Proficient (or some other absolute standard). However, absolute standards can be related to expectations.
4. This method would require matched data in adjacent grades and presumes the existence of unique student identifiers so that students could be tracked.

Tables of Expected Growth
In addition, for a parent/teacher audience, a graphical Student Growth Report could be provided to help students, parents, and teachers understand:
• How much the student grew during the past year, and
• How much growth would be needed in the upcoming year to reach a Proficient cut score.

Discussions with Users
We discussed the three growth models with educators in large groups and small working groups. The major conclusions from the discussion were:
1. Different users have different needs and different levels of understanding of measurement. In particular, the needs of parents and teachers differ from those of school administrators, researchers, and program evaluators.
It is not necessary or appropriate to select only one growth measure or provide the same measure to all audiences.
2. All audiences wanted to be able to measure growth within proficiency levels and to know how close students in a Basic category were to the Proficient cut score.
3. There was interest in making comparisons of performance at high school in content areas where a vertical scale was not possible (e.g., between Biology and Chemistry).
4. There was concern that the use of norms would confuse the state’s message about the importance of standards. Also, many people do not understand norms, confusing them with percent correct scores, or they believe that norms will mask student progress or be used to make excuses for poor progress. However, these educators believed that school administrators could appropriately use normative data.
5. There was interest in and extended discussion of growth expectations.
– It was acknowledged that such expectations realistically accounted for the fact that Proficient was more difficult to reach at some grades than at others.
– There was consideration of the possibility of producing different expectation tables for different populations, such as English learners, but it was essential that the reality of the extra challenges faced by those students not be used as an excuse for accepting non-Proficient performance from them.
– The educators suggested providing simple classifications as part of the expectations: Growth Below Expectation (1), At Expectation (2), Above Expectation (3). These classifications could be used in parent/teacher conferences as well as in school/district analyses.
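
The growth-expectation approach and the 1/2/3 classification suggested by the educators can be sketched as follows. This is only an illustration under stated assumptions, not the presenters' procedure: it assumes a simple linear regression of the higher-grade score on the lower-grade score, standardizes the difference between actual and expected scores by the residual standard error, and uses a hypothetical band of one residual standard deviation to define "At Expectation." The function names and the six matched score pairs are made up for the example.

```python
from statistics import mean


def fit_expectation(year1_scores, year2_scores):
    """Least-squares line predicting the year-2 score from the year-1 score."""
    mx, my = mean(year1_scores), mean(year2_scores)
    sxx = sum((x - mx) ** 2 for x in year1_scores)
    sxy = sum((x - mx) * (y - my) for x, y in zip(year1_scores, year2_scores))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x)
                 for x, y in zip(year1_scores, year2_scores)]
    # Residual standard error with n - 2 degrees of freedom.
    rse = (sum(r * r for r in residuals) / (len(residuals) - 2)) ** 0.5
    return slope, intercept, rse


def standardized_growth(score1, score2, model):
    """(actual - expected) in residual-SD units, comparable across grades."""
    slope, intercept, rse = model
    return (score2 - (intercept + slope * score1)) / rse


def classify_growth(score1, score2, model, band=1.0):
    """Return 1 (Below), 2 (At), or 3 (Above Expectation); `band` is an assumption."""
    z = standardized_growth(score1, score2, model)
    if z < -band:
        return 1
    if z > band:
        return 3
    return 2


# Made-up matched scores for the same students in two adjacent grades.
grade3 = [300, 320, 340, 360, 380, 400]
grade4 = [310, 335, 345, 375, 385, 410]
model = fit_expectation(grade3, grade4)
print(classify_growth(340, 352, model))
```

Because the fit uses only matched pairs of within-grade scores, the same sketch applies to tests that are not vertically scaled, such as Biology followed by Chemistry.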

The majority of participants strongly opposed the release of norms for state standards-referenced tests, believing this would be a “step backward,” away from standards-referenced testing. Others thought that norms could be a useful adjunct to standards-referenced scores. Expected growth appeared to offer the information that was needed to evaluate “how much growth was reasonable.” Evaluators felt it was essential that norms or demonstration of “typical” growth not be used as an excuse for a student not growing sufficiently toward becoming Proficient.

Conclusions
After discussions with potential users, it appeared that the growth expectations method could address, in a technically sound manner, all of the parent/teacher/administrator questions listed previously. The development of such growth expectations avoids the assumptions, expense, and uncertainty of success in the development of vertical scales. The growth expectations can be communicated effectively to the different audiences.

A growth expectations table could be used by administrators to determine growth for students in a district. A single-digit growth classification, indicating whether a student’s growth was Below Average, Average, or Above Average, could provide an indicator of how well students were performing relative to expectations and be provided to administrators, teachers, and parents. A score report could be constructed that combines normative and absolute considerations in evaluating growth without reliance on a vertical scale.
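
One way to picture a score report that combines normative and absolute considerations is the sketch below. It is a hypothetical illustration, not a design from the presentation: it assumes a linear expectation (slope, intercept, residual standard error) has already been estimated from matched longitudinal data, and the names `GrowthReport` and `growth_report`, along with all numeric values, are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class GrowthReport:
    expected_next: float  # typical next-year score for students starting here
    gap_to_cut: float     # points beyond typical growth needed to reach the cut
    gap_in_sd: float      # the same gap in residual-SD units


def growth_report(current, slope, intercept, rse, proficient_cut):
    """Relate typical (normative) growth to an absolute Proficient cut score."""
    expected_next = intercept + slope * current
    gap = proficient_cut - expected_next
    return GrowthReport(expected_next, gap, gap / rse)


# Invented values: a student at 340 this year, next year's cut score at 370.
report = growth_report(current=340, slope=1.0, intercept=20.0,
                       rse=5.0, proficient_cut=370)
print(report)
```

A gap at or below zero would mean typical growth is enough to reach the cut score; a large positive gap signals that the student needs more than typical growth to become Proficient.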