
advertisement

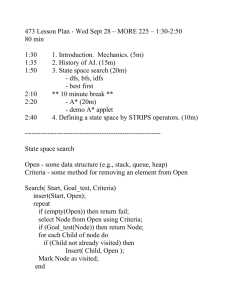

Notes: Graphs vs trees up front; use grid too; discuss for BFS, DFS, IDS, UCS. Cut back on A* optimality detail; a bit more on importance of heuristics, performance data. Pattern DBs?

General Tree Search

    function TREE-SEARCH(problem) returns a solution, or failure
        initialize the frontier using the initial state of problem
        loop do
            if the frontier is empty then return failure
            choose a leaf node and remove it from the frontier
            if the node contains a goal state then return the corresponding solution
            expand the chosen node, adding the resulting nodes to the frontier

Important ideas:
- Frontier
- Expansion: for each action, create the child node
- Exploration strategy

Main question: which frontier nodes to explore?

A note on implementation

Nodes have state, parent, action, path-cost.

A child of node by action a has:
- state = result(node.state, a)
- parent = node
- action = a
- path-cost = node.path-cost + step-cost(node.state, a, state)

[Figure: 8-puzzle node showing STATE, PARENT, ACTION = Right, PATH-COST = 6]

Extract solution by tracing back parent pointers, collecting actions.

Depth-First Search

Strategy: expand a deepest node first.
Implementation: Frontier is a LIFO stack.

[Figure: example state graph from S to G and its search tree]

Search Algorithm Properties

- Complete: Guaranteed to find a solution if one exists?
- Optimal: Guaranteed to find the least cost path?
- Time complexity?
- Space complexity?

Cartoon of search tree:
- b is the branching factor
- m is the maximum depth
- solutions at various depths

[Figure: search tree with m tiers holding 1 node, b nodes, $b^2$ nodes, …, $b^m$ nodes]

Number of nodes in entire tree? $1 + b + b^2 + \dots + b^m = O(b^m)$

Depth-First Search (DFS) Properties

What nodes does DFS expand?
- Some left prefix of the tree.
- Could process the whole tree!
- If m is finite, takes time $O(b^m)$

How much space does the frontier take?
- Only has siblings on path to root, so $O(bm)$ (b times m, not $b^m$)

Is it complete?
- m could be infinite, so only if we prevent cycles (more later)

Is it optimal?
- No, it finds the “leftmost” solution, regardless of depth or cost

Breadth-First Search

Strategy: expand a shallowest node first.
Implementation: Frontier is a FIFO queue.

[Figure: example state graph from S to G and its search tree, with search tiers marked]

Breadth-First Search (BFS) Properties

What nodes does BFS expand?
- Processes all nodes above shallowest solution
- Let depth of shallowest solution be s
- Search takes time $O(b^s)$

How much space does the frontier take?
- Has roughly the last tier, so $O(b^s)$

Is it complete?
- s must be finite if a solution exists, so yes!

Is it optimal?
- Only if costs are all 1 (more on costs later)

[Figure: search tree with s tiers above the shallowest solution: 1 node, b nodes, $b^2$ nodes, …, $b^s$ nodes, out of $b^m$ nodes at the deepest tier]

Quiz: DFS vs BFS

- When will BFS outperform DFS?
- When will DFS outperform BFS?

[Demo: dfs/bfs maze water (L2D6)]
Video of Demo Maze Water DFS/BFS (part 1)
Video of Demo Maze Water DFS/BFS (part 2)

Iterative Deepening

Idea: get DFS’s space advantage with BFS’s time / shallow-solution advantages
- Run a DFS with depth limit 1. If no solution…
- Run a DFS with depth limit 2. If no solution…
- Run a DFS with depth limit 3. …

Isn’t that wastefully redundant?
- Generally most work happens in the lowest level searched, so not so bad!

Finding a least-cost path

[Figure: weighted state graph from START to GOAL]

BFS finds the shortest path in terms of number of actions. It does not find the least-cost path. We will now cover a similar algorithm which does find the least-cost path.

Uniform Cost Search

Strategy: expand a cheapest node first.
Frontier is a priority queue (priority: cumulative cost).

[Figure: weighted state graph and its search tree, annotated with cumulative path costs and cost contours]

Uniform Cost Search (UCS) Properties

What nodes does UCS expand?
- Processes all nodes with cost less than cheapest solution!
- If that solution costs $C^*$ and arcs cost at least $\varepsilon$, then the “effective depth” is roughly $C^*/\varepsilon$
- Takes time $O(b^{C^*/\varepsilon})$ (exponential in effective depth)

How much space does the frontier take?
- Has roughly the last tier, so $O(b^{C^*/\varepsilon})$

Is it complete?
- Assuming best solution has a finite cost and minimum arc cost is positive, yes!

Is it optimal?
- Yes! (Proof next lecture via A*)

[Figure: search tree with cost contours c1, c2, c3 spanning $C^*/\varepsilon$ “tiers”]

Uniform Cost Issues

Remember: UCS explores increasing cost contours.

The good: UCS is complete and optimal!

The bad:
- Explores options in every “direction”
- No information about goal location

We’ll fix that soon!

[Figure: cost contours c1, c2, c3 spreading out from Start, with Goal to one side]

Search Gone Wrong?

Tree Search vs Graph Search

Tree Search: Extra Work!

Failure to detect repeated states can cause exponentially more work.
- A state graph with $O(m)$ states can produce a search tree with $O(2^m)$ nodes.
- A state graph with $O(2m^2)$ states can produce a search tree with $O(4^m)$ nodes. For m = 20: 800 states, 1,099,511,627,776 tree nodes.

General Tree Search

    function TREE-SEARCH(problem) returns a solution, or failure
        initialize the frontier using the initial state of problem
        loop do
            if the frontier is empty then return failure
            choose a leaf node and remove it from the frontier
            if the node contains a goal state then return the corresponding solution
            expand the chosen node, adding the resulting nodes to the frontier

General Graph Search

    function GRAPH-SEARCH(problem) returns a solution, or failure
        initialize the frontier using the initial state of problem
        initialize the explored set to be empty
        loop do
            if the frontier is empty then return failure
            choose a leaf node and remove it from the frontier
            if the node contains a goal state then return the corresponding solution
            add the node to the explored set
            expand the chosen node, adding the resulting nodes to the frontier
                but only if the node is not already in the frontier or explored set

Tree Search vs Graph Search

Graph search
- Avoids infinite loops: m is finite in a finite state space
- Eliminates exponentially many redundant paths
- Requires memory proportional to its runtime!

CS 188: Artificial Intelligence
Informed Search
Instructor: Stuart Russell
University of California, Berkeley

Search Heuristics

A heuristic is:
- A function that estimates how close a state is to a goal
- Designed for a particular search problem
- Examples: Manhattan distance, Euclidean distance for pathing

[Figure: pathing example with distances 10, 5, and 11.2]

Example: distance to Bucharest

[Figure: road map of Romania with road distances between cities, and h(x)]

Example: Pancake Problem

Step cost: number of pancakes flipped.

[Figure: state space graph with step costs]

Heuristic: the number of the largest pancake that is still out of place.

[Figure: state space graph annotated with h(x)]

Effect of heuristics

Guide search towards the goal instead of all over the place.

[Figure: uninformed vs informed search between Start and Goal]

Greedy Search

Expand the node that seems closest… (order frontier by h)

What can possibly go wrong?
[Figure: Romania map fragment with h values Sibiu 253, Fagaras 176, Rimnicu Vilcea 193, Pitesti 100, Bucharest 0]

- Sibiu-Fagaras-Bucharest = 99 + 211 = 310
- Sibiu-Rimnicu Vilcea-Pitesti-Bucharest = 80 + 97 + 101 = 278

From Sibiu, greedy prefers Fagaras (h = 176) over Rimnicu Vilcea (h = 193), so it returns the route of cost 310 even though the route of cost 278 is cheaper.

Greedy Search

Strategy: expand a node that seems closest to a goal state, according to h
- Problem 1: it chooses a node even if it’s at the end of a very long and winding road
- Problem 2: it takes h literally even if it’s completely wrong

Video of Demo Contours Greedy (Empty)
Video of Demo Contours Greedy (Pacman Small Maze)

A* Search

[Figure: UCS, Greedy, A*]

Combining UCS and Greedy

- Uniform-cost orders by path cost, or backward cost g(n)
- Greedy orders by goal proximity, or forward cost h(n)
- A* Search orders by the sum: f(n) = g(n) + h(n)

[Figure: example graph and its search tree annotated with g and h values. Example: Teg Grenager]

Is A* Optimal?

[Figure: graph with nodes S, A, G; arc costs 1, 3, 5; heuristic values h=6, h=7, h=0]

What went wrong?
- Actual bad goal cost < estimated good goal cost
- We need estimates to be less than actual costs!

Admissible Heuristics

Idea: Admissibility
- Inadmissible (pessimistic) heuristics break optimality by trapping good plans on the frontier
- Admissible (optimistic) heuristics slow down bad plans but never outweigh true costs

A heuristic h is admissible (optimistic) if $0 \le h(n) \le h^*(n)$, where $h^*(n)$ is the true cost to a nearest goal.

Examples: [Figures with heuristic values 4 and 15]

Coming up with admissible heuristics is most of what’s involved in using A* in practice.

Optimality of A* Tree Search

Assume:
- A is an optimal goal node
- B is a suboptimal goal node
- h is admissible

Claim: A will be chosen for expansion before B.

Optimality of A* Tree Search: Blocking

Proof:
- Imagine B is on the frontier
- Some ancestor n of A is on the frontier, too (maybe A!)
- Claim: n will be expanded before B

1.
f(n) is less than or equal to f(A): $f(n) = g(n) + h(n)$ (definition of f-cost), $f(n) \le g(A)$ (admissibility of h), and $g(A) = f(A)$ (h = 0 at a goal)
2. f(A) is less than f(B): $g(A) < g(B)$ (B is suboptimal), so $f(A) < f(B)$ (h = 0 at a goal)
3. n expands before B: $f(n) \le f(A) < f(B)$

All ancestors of A expand before B, so A expands before B, and A* search is optimal.

Properties of A*

[Figure: search-tree cartoons for Uniform-Cost and A*, each with branching factor b]

UCS vs A* Contours

- Uniform-cost expands equally in all “directions”
- A* expands mainly toward the goal, but does hedge its bets to ensure optimality

[Figure: contours between Start and Goal for each algorithm]

Video of Demo Contours (Empty) -- UCS
Video of Demo Contours (Empty) -- Greedy
Video of Demo Contours (Empty) -- A*
Video of Demo Contours (Pacman Small Maze) -- A*

Comparison

[Figure: Greedy, Uniform Cost, A*]

A* Applications

- Video games
- Pathing / routing problems
- Resource planning problems
- Robot motion planning
- Language analysis
- Machine translation
- Speech recognition
- …

[Demo: UCS / A* pacman tiny maze (L3D6,L3D7)]
[Demo: guess algorithm Empty Shallow/Deep (L3D8)]
Video of Demo Empty Water Shallow/Deep -- Guess Algorithm

Creating Heuristics

Creating Admissible Heuristics

- Most of the work in solving hard search problems optimally is in coming up with admissible heuristics
- Often, admissible heuristics are solutions to relaxed problems, where new actions are available

[Figures: example heuristic values 366 and 15]

Example: 8 Puzzle

[Figure: Start State, Actions, Goal State]

- What are the states?
- How many states?
- What are the actions?
- How many actions from the start state?
- What should the step costs be?

8 Puzzle I

Heuristic: Number of tiles misplaced

Why is it admissible?
h(start) = 8

This is a relaxed-problem heuristic.

[Figure: Start State and Goal State]

Average nodes expanded when the optimal path has…

|       | …4 steps | …8 steps | …12 steps         |
|-------|----------|----------|-------------------|
| UCS   | 112      | 6,300    | $3.6 \times 10^6$ |
| TILES | 13       | 39       | 227               |

Statistics from Andrew Moore

8 Puzzle II

What if we had an easier 8-puzzle where any tile could slide any direction at any time, ignoring other tiles?

Heuristic: Total Manhattan distance

Why is it admissible?

h(start) = 3 + 1 + 2 + … = 18

[Figure: Start State and Goal State]

Average nodes expanded when the optimal path has…

|           | …4 steps | …8 steps | …12 steps |
|-----------|----------|----------|-----------|
| TILES     | 13       | 39       | 227       |
| MANHATTAN | 12       | 25       | 73        |

Combining heuristics

Dominance: $h_a \ge h_c$ if $\forall n: h_a(n) \ge h_c(n)$
- Roughly speaking, larger is better as long as both are admissible
- The zero heuristic is pretty bad (what does A* do with h=0?)
- The exact heuristic is pretty good, but usually too expensive!

What if we have two heuristics, neither dominates the other?
- Form a new heuristic by taking the max of both: $h(n) = \max(h_a(n), h_b(n))$
- Max of admissible heuristics is admissible and dominates both!

Example: number of knight’s moves to get from A to B
- h1 = (Manhattan distance)/3 (rounded up to correct parity)
- h2 = (Euclidean distance)/√5 (rounded up to correct parity)
- h3 = (max x or y shift)/2 (rounded up to correct parity)

Optimality of A* Graph Search

This part is a bit fiddly, sorry about that.

A* Graph Search Gone Wrong?
[Figure: state space graph with nodes S (h=2), A (h=4), B (h=1), C (h=1), G (h=0) and arc costs 1, 1, 1, 2, 3, next to its search tree with (g+h) labels: S (0+2); A (1+4), B (1+1); C (2+1), C (3+1); G (5+0), G (6+0)]

- Simple check against explored set blocks C
- Fancy check allows new C if cheaper than old but requires recalculating C’s descendants

Consistency of Heuristics

Main idea: estimated heuristic costs ≤ actual costs
- Admissibility: heuristic cost ≤ actual cost to goal, e.g. h(A) ≤ actual cost from A to G
- Consistency: heuristic “arc” cost ≤ actual cost for each arc, e.g. h(A) - h(C) ≤ cost(A to C)

[Figure: nodes A, C, G with arc costs 1 and 3; annotations h=4, h=2, h=1]

Consequences of consistency:
- The f value along a path never decreases: h(A) ≤ cost(A to C) + h(C)
- A* graph search is optimal

Optimality of A* Graph Search

Sketch: consider what A* does with a consistent heuristic:
- Fact 1: In tree search, A* expands nodes in increasing total f value (f-contours)
- Fact 2: For every state s, nodes that reach s optimally are expanded before nodes that reach s suboptimally
- Result: A* graph search is optimal

[Figure: f-contours f1, f2, f3]

Optimality

Tree search:
- A* is optimal if heuristic is admissible
- UCS is a special case (h = 0)

Graph search:
- A* optimal if heuristic is consistent
- UCS optimal (h = 0 is consistent)

Consistency implies admissibility.

In general, most natural admissible heuristics tend to be consistent, especially if from relaxed problems.

A*: Summary

- A* uses both backward costs and (estimates of) forward costs
- A* is optimal with admissible / consistent heuristics
- Heuristic design is key: often use relaxed problems
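
The difference between admissible and consistent heuristics can be checked on the small graph from "A* Graph Search Gone Wrong?". The Python sketch below is not from the slides. The edge layout in `GRAPH` is an assumption chosen to match the (g+h) labels in that figure, and the names `a_star` and `is_consistent` are hypothetical. For brevity it applies the simple explored-set check when a node is removed from the frontier rather than when it is added.

```python
import heapq
from itertools import count

# Directed edges with step costs. ASSUMPTION: layout inferred from the
# (g+h) labels of the search tree in the figure, not given explicitly.
GRAPH = {
    "S": {"A": 1, "B": 1},
    "A": {"C": 1},
    "B": {"C": 2},
    "C": {"G": 3},
    "G": {},
}
# Heuristic values from the figure: admissible, but not consistent.
H = {"S": 2, "A": 4, "B": 1, "C": 1, "G": 0}


def a_star(start, goal, h, graph_search):
    """Return (cost, path) for the first goal node removed from the frontier."""
    tie = count()  # unique tie-breaker so the heap never compares paths
    frontier = [(h(start), next(tie), 0, [start])]  # entries: (f, tie, g, path)
    explored = set()
    while frontier:
        _, _, g, path = heapq.heappop(frontier)
        state = path[-1]
        if state == goal:
            return g, path
        if graph_search:
            if state in explored:
                continue  # simple check: never expand a state twice
            explored.add(state)
        for child, step in GRAPH[state].items():
            g2 = g + step
            heapq.heappush(frontier, (g2 + h(child), next(tie), g2, path + [child]))
    return None  # frontier exhausted: failure


def is_consistent(h):
    """Check h(s) - h(child) <= cost(s, child) on every arc."""
    return all(h(s) - h(c) <= cost
               for s, edges in GRAPH.items() for c, cost in edges.items())


tree_result = a_star("S", "G", H.get, graph_search=False)
graph_result = a_star("S", "G", H.get, graph_search=True)
ucs_result = a_star("S", "G", lambda s: 0, graph_search=True)  # h = 0 gives UCS

print(tree_result)         # (5, ['S', 'A', 'C', 'G'])
print(graph_result)        # (6, ['S', 'B', 'C', 'G'])
print(ucs_result)          # (5, ['S', 'A', 'C', 'G'])
print(is_consistent(H.get))  # False
```

Under these assumptions, tree search finds the cost-5 path, while graph search expands C first via B (f = 3 + 1), blocks the cheaper C reached via A, and returns the cost-6 path. With h = 0, which is consistent, the same graph search is optimal again.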