Questa Overview DAC 2005

Assertion-Based Verification

Industry Myths and Realities

July 2008

Harry D. Foster

Chief Verification Scientist

Fundamental Challenge of Verification

Have I written enough checkers?

Verification Environment

01110101010100000000011101011011011110111

Design

Have I generated enough stimulus?

HDF – CAV 2008

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

State of the Industry

Mindless Statistics

Verification Trends

[Figure: The Verification Gap. Design size in millions of gates (0 to 80) plotted against year (1988 to 2005), with curves for ability to fabricate, ability to design, and ability to verify. The design gap and the verification gap are marked between the curves.]

The Verification Gap

- Many companies still using 1990s verification technologies
- Traditional verification techniques can't keep up

* Based on data from the Collett International 2004 FV Survey

Directed Test

State-of-the-Art Verification Circa 1990

Imagine verifying a car using a directed-test approach

- Requirement: Fuse will not blow under any normal operation

Scenario 1: accelerate to 37 mph, pop in the new John Mayer CD, and turn on the windshield wipers.

A Few Weeks Later. . . . .

Scenario 714: accelerate to 48 mph, roll down the window, and turn on the left-turn signal.

Concurrency Challenge

A purely directed-test methodology does not scale

- Truly heroic effort, but not practical
- Imagine writing a directed test for this scenario!

Concurrency Challenge

[Figure: PCI Express block diagram. Labels: Tx TLP from fabric, Seq_num, LCRC, retry buffer, Tx DLLP, Rx from RX, arbiter, to PHY.]

Design Engineers Are Becoming Verification Engineers

[Figure: two pie charts of the split between design and verification. One chart: Design 54%, Verification 46%. The other: Design 51%, Verification 35%, Other 14%.]

Source: 2008 Farwest Research IC/ASIC Functional Verification Study, Used with Permission

Moore with Less

Year    Processor                Transistors
1979    8088                     29,000
1982    286                      134,000
1985    386                      275,000
1989    486                      1,290,000
1993    Pentium                  3.1M+
1995    Pentium Pro              5.5M+
1997    Pentium II               7.5M+
1999    Pentium III              9.5M+
2000    Pentium 4                42M
2002    Itanium 2                220M
2004    Itanium 2 (9MB cache)    592M
2005    Dual Core Itanium        1.72B

Verification for Every Man, Woman, and Child in India

More and More Verification Cycles

Faster Computers

[Figure: MIPS on a logarithmic scale (0.01 to 10,000) plotted against year (1975 to 2005).]

Lots of Computers

AMD Grid Growth 2001-2006 (relative to 2001 = 1.0)

[Figure: AMD Grid growth by year, 2001 to 2006, on a relative scale from 0 to 25.]

Year    # of Servers
1996    50
2006    5000+ (over 10,000 CPUs)

Source: The AMD Grid: Enabling Grid Computing for the Corporation, August 2006

Results

Number of Respins

[Figure: bar chart (0% to 45%) of designs by number of spins, from 1 (first silicon success) through 7 spins or more, for 2002, 2004, and 2007.]

Source: 2008 Far West Research and Mentor Graphics

Results

Techniques Used by 1st Silicon Success Teams

% of Designs Achieving 1st Silicon Success by Verification Technique

[Figure: horizontal bar chart of 1st silicon success rate (0% to 45%) by verification technique: TLM/SystemC, assertion, constrained-random, FPGA prototyping, commercial emulation system, full-timing, directed, code coverage, equivalency checking, custom built system, standard C/C++, HW/SW co-verification, transistor-level simulation, special test chips, model checking, simulation acceleration.]

Collett International Research Inc., 2004/2005 IC/ASIC Functional Verification Study, Used with permission

Results

Types of Flaws

[Figure: bar chart (0% to 100%) of flaw types for 2004 and 2007: logic or functional; clocking; tuning analog circuit; crosstalk-induced delays, glitches; power consumption; mixed-signal interface; yield or reliability; timing, path too slow; firmware; timing, path too fast, race condition; IR drops; other.]

Source: 2008 Far West Research and Mentor Graphics

Results

Causes of Functional Flaws

[Figure: bar chart (0% to 70%) of causes: design error; incorrect or incomplete specification; changes in specification; flaw in internal reused block, cell, megacell or IP; flaw in external IP block or testbench; other.]

Source: 2008 Far West Research and Mentor Graphics

Results

2/3 Projects Miss Schedule

Designs completed on time according to project's original schedule

[Figure: bar chart (0.0 to 30.0) of projects by schedule variance, in bins > +10%, +10%, 0, -10%, -20%, -30%, -40%, -50%, and > -50%.]

Source: 2008 Far West Research and Mentor Graphics

Stop, time to recap. . . .

Recap

1. Industry fails to mature its processes

2. Concurrency is difficult to verify

3. Throw lots of bodies at the problem

4. Throw lots of computers at it too

5. All this . . . and poor results

New Problems

Multiple Power Domain Interaction

[Figure: three power domains (Power Domain 1, 2, and 3) with power controllers PCON1, PCON2, and PCON3, and SAVE and RESTORE operations.]

New Problems

Asynchronous Clock-Domain Crossing

[Figure: clock-domain-crossing circuit (logic blocks A and B, flip-flop with D, Q, and CLK) beside a bar chart (0% to 35%) with bins 1, 2, 3-4, 5-10, 11-20, and >20, labeled 2007 Average.]

Source: 2008 Far West Research and Mentor Graphics

Verification Challenges Keep Coming

Low power

Clock domain crossing

Hardware/software

Network-on-chip

Multi-level verification

Multi-core verification

System verification

IP reuse (black box)

Mixed-signal (RF/analog/digital)

Hey. . . I thought this was supposed to be about assertions!

Debugging is the Bottleneck

Effort Allocation of Dedicated Verification

Engineers by Type of Activity

40%

60%

Verification Debug

Testbench Development

Source: 2004 IC/ASIC Functional Verification Study, Collett International Research, Used with Permission

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

Assertion-Based Verification

“How can one check a large routine in the sense of making sure that it’s right? In order that the man who checks may not have too difficult a task, the programmer should make a number of definite assertions which can be checked individually, and from which the correctness of the whole program easily follows.”

Alan Turing, 1949

Assertion-Based Verification

Property
- a statement of design intent
- used to specify behavior

Assertion
- a verification directive

High-level
- architectural, micro-architectural

Low-level
- implementation, embedded in RTL

// Assert that the FIFO controller
// cannot overflow nor underflow

[Figure: assertion A evaluated on a simulation trace within the design state space. Other diagram labels: Level A, Level B, Level C, test, env.]

Assertion Stakeholders

Verification Engineer             Design Engineer
Requirement Assertions            Implementation Assertions
Compliance Traceability           Improve Quality
Reduce Debugging Time             Reduce Debugging Time
Assertion Languages (PSL, SVA)    Assertion Libraries (OVL)
Protocol Checkers                 Protocol Checkers

Temporal Logic Pioneers

Foundation for Today’s Property Languages

LTL, CTL, CTL*, Regular Expressions . . . .

Emerson, Clarke, Pnueli, Vardi, Wolper

Assertion Language and Library Standards

[Figure: timeline from 1993 to 2006 of assertion languages and assertion libraries. Languages shown: Sugar and RCTL (IBM), CBV (Motorola), temporal e (Verisity), ForSpec (Intel), Vera/TXP and OVA (Sun -> Synopsys), PSL v1.01 and v1.1, SVA v3.0, v3.1, and v3.1a, ending in PSL IEEE Std 1850-2005 and SVA IEEE Std 1800-2005. Libraries shown: SMAC, OVL, and CheckerWare, with HP, Verplex, 0-In/Mentor, and Accellera named as sources, and OVL releases through v2.0.]

IEEE 1850 PSL Example

assert never ( { rose(req) ; { !gnt[0] [+] } && { gnt[1] [->2] } } ) @(posedge clk);

[Figure: arbiter with inputs req[0] and req[1] and outputs gnt[0] and gnt[1], with a waveform of clk, req[0], gnt[0], and gnt[1].]

IEEE 1800 SystemVerilog Example

[Figure: waveform of clk, start, done, and error.]

assert property ( @(posedge clk) disable iff (!reset_n)
    start |=> (!start throughout done [->1]) );

IEEE 1800 SystemVerilog Example

SVA local variables

- Can eliminate the need for extra modeling code
- Technical paper [DAC 2007, Long, Seawright]
- Methodology paper [DVCon 2007, Long, Seawright, Foster]

property check_id;
    logic [3:0] lv_id;
    @(posedge clk) disable iff (~rst_n)
    ($rose(snd), lv_id=snd_id) |-> ##[0:3] (bck && (bck_id==lv_id));
endproperty
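What the check_id property asks for can be sketched as a small trace checker. This is a minimal Python model, not simulator code: the `Cycle` record, the function name, and the list-of-cycles trace format are assumptions made for illustration, and reset (the `disable iff` clause) is not modeled. The variable `lv_id` plays the role of the SVA local variable: it is captured when `snd` rises and compared against `bck_id` for up to three more cycles.

```python
from dataclasses import dataclass


@dataclass
class Cycle:
    """One sampled clock cycle of the (hypothetical) send/acknowledge interface."""
    snd: bool
    snd_id: int
    bck: bool
    bck_id: int


def check_id(trace, window=3):
    """Return the cycles at which a send started but no matching
    acknowledge arrived within `window` cycles (same cycle included)."""
    failures = []
    prev_snd = False
    for t, cyc in enumerate(trace):
        if cyc.snd and not prev_snd:           # models $rose(snd)
            lv_id = cyc.snd_id                 # per-attempt local variable
            end = min(t + window, len(trace) - 1)
            matched = any(
                trace[k].bck and trace[k].bck_id == lv_id
                for k in range(t, end + 1)
            )
            # Report only when the whole window was observed in the trace.
            if not matched and t + window <= len(trace) - 1:
                failures.append(t)
        prev_snd = cyc.snd
    return failures


good = [Cycle(True, 5, False, 0), Cycle(False, 0, False, 0),
        Cycle(False, 0, True, 5), Cycle(False, 0, False, 0)]
bad = [Cycle(True, 5, False, 0), Cycle(False, 0, True, 4),
       Cycle(False, 0, False, 0), Cycle(False, 0, False, 0)]
```

Without the local variable, the same check would need extra modeling code outside the property to remember the ID of each outstanding send.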

Accellera OVL Example

Semantics
- Between start_event and end_event inclusive, test_expr must not change

assert_win_unchange #(0,8) addrStbl ( clk, rst_n, we, addr, done);

Parameters: severity = 0, width = 8
Ports: start_event = we, test_expr = addr, end_event = done

[Figure: waveform of clk, we, addr[7:0] (changing from 8'b0 to 8'b1), done, and error.]
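The window semantics can be illustrated with a simplified Python model. This is a sketch under stated assumptions, not the OVL implementation: the function name and the per-cycle list inputs are hypothetical, reset is ignored, and an end_event in the same cycle as start_event is not treated specially.

```python
def win_unchange(start_event, test_expr, end_event):
    """Return the cycles at which test_expr changed while a window was open.

    A window opens in the cycle where start_event is true and closes in the
    cycle where end_event is true; the closing cycle is still checked.
    """
    failures = []
    in_window = False
    prev_value = None
    for t, (s, v, e) in enumerate(zip(start_event, test_expr, end_event)):
        if in_window:
            if v != prev_value:       # value moved inside the window
                failures.append(t)
            if e:                     # end_event closes the window
                in_window = False
        elif s:
            in_window = True          # start_event opens the window
        prev_value = v
    return failures


# addr changes from 0 to 1 at cycle 2 while the window (we ... done) is open.
we = [1, 0, 0, 0, 0]
addr = [0, 0, 1, 1, 1]
done = [0, 0, 0, 1, 0]
```

With these inputs the model flags cycle 2, the cycle in which the address moves before done arrives.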

Standards Use

[Figure: bar chart (0% to 100%) of assertion standards in use, current versus next 12 months, for OVL, SystemVerilog Assertions, PSL Assertions, OVA Assertions, and other assertions.]

Source: 2008 Far West Research and Mentor Graphics

Why Libraries?

Adopting an assertion language standard does not prevent ad hoc flows
- If not managed, the results can be disruptive
  - Multiple ways users report errors
  - Multiple ways users enable / disable assertions
  - Multiple ways users reset assertions

Libraries within an organization
- Encapsulate and enforce methodology
- Provide pre-verified parameterized sets of assertions
- Eliminate need for everyone to be an expert

Use Profile for 22,033 OVLs

Count    Share    OVL checker
13119    59%      assert_always
3905     17%      assert_never
1270     5%       assert_never_unknown
1217     5%       assert_implication
562      2%       assert_zero_one_hot
462      2%       assert_one_hot
405      1%       assert_next
288      1%       assert_win_unchange
154      0%       assert_cycle_sequence
143      0%       assert_range
102      0%       assert_quiescent_state
71       0%       assert_transition
69       0%       assert_no_transition
46       0%       assert_time
38       0%       assert_unchange
31       0%       assert_always_on_edge
29       0%       assert_width
23       0%       assert_proposition
23       0%       assert_frame
19       0%       assert_change
18       0%       assert_never_unknown_async
14       0%       assert_window
12       0%       assert_handshake
4        0%       assert_no_underflow
4        0%       assert_no_overflow
3        0%       assert_fifo_index
2        0%       assert_win_change
0        0%       assert_one_cold
0        0%       assert_odd_parity
0        0%       assert_increment
0        0%       assert_even_parity
0        0%       assert_delta
0        0%       assert_decrement

- Data source: two different companies across multiple designs
- Many users chose simple OVL + auxiliary logic; e.g., DFF for delay
- Top ten OVLs account for 98% of instances
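The 98% figure can be checked from the instance counts on this slide. The short Python calculation below uses only the ten largest counts and the stated total of 22,033; the variable names are illustrative.

```python
# Instance counts for the ten most-used OVL checkers, as listed on the slide.
top_ten_counts = {
    "assert_always": 13119,
    "assert_never": 3905,
    "assert_never_unknown": 1270,
    "assert_implication": 1217,
    "assert_zero_one_hot": 562,
    "assert_one_hot": 462,
    "assert_next": 405,
    "assert_win_unchange": 288,
    "assert_cycle_sequence": 154,
    "assert_range": 143,
}

total_instances = 22033                       # from the slide title
top_ten_total = sum(top_ten_counts.values())  # 21525
top_ten_share = top_ten_total / total_instances

print(f"{top_ten_total} of {total_instances} = {top_ten_share:.1%}")
```

The ten checkers cover 21,525 of the 22,033 instances, about 97.7%, which the slide rounds to 98%.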

Top Eight OVLs

Count    Share    OVL checker
13119    59%      assert_always
3905     17%      assert_never
1270     5%       assert_never_unknown
1217     5%       assert_implication
562      2%       assert_zero_one_hot
462      2%       assert_one_hot
405      1%       assert_next
288      1%       assert_win_unchange

OVL and IP

IP provider ARM uses OVL internally across a wide range of verification methodologies

ARM distributes the OVL in one of two ways:
- As part of the design embedded in RTL
  - Users can enable the OVL assertions to test their particular configuration, or
  - Use them as an integration check to ensure the design is correctly wired
- As a standalone piece of verification IP
  - For example, AMBA protocol checkers

[Foster, Larsen, Turpin - DVCon 2006, DAC 2008]

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

Assertions Improve Observability

Reduces time-to-bug

[Figure: testbench driving a design, with a legend for two kinds of bugs.]
- Bugs missed due to poor observability
- Bugs detected closer to their source due to improved observability

Reduce debugging up to 50% [CAV 2000, IBM FoCs paper]

Published Data on Assertions Use

Kantrowitz and Noack [DAC 1996]

Assertion Monitors              34%
Cache Coherency Checkers        9%
Register File Trace Compare     8%
Memory State Compare            7%
End-of-Run State Compare        6%
PC Trace Compare                4%
Self-Checking Test              11%
Simulation Output Inspection    7%
Simulation Hang                 6%
Other                           8%

Taylor et al. [DAC 1998]

Assertion Monitors              25%
Register Miscompare             22%
Simulation "No Progress"        15%
PC Miscompare                   14%
Memory State Miscompare         8%
Manual Inspection               6%
Self-Checking Test              5%
Cache Coherency Check           3%
SAVES Check                     2%

- 17% of bugs found by assertions on Cyrix M3(p1) project [Krolnik '98]
- 50% of bugs found by assertions on Cyrix M3(p2) project [Krolnik '98]
- 85% of bugs found using over 4000 assertions on an HP server chipset project [Foster and Coelho HDLCon 2001]
- Thousands of assertions in Intel Pentium project [Bentley 2001]
- 10,000 OVL assertions in Cisco project [Sean Smith 2002]

© DAC Tutorial 2003

Sun

Assertion-Based Verification of a 32 thread SPARC™ CMT Processor

[Turumella, Sharma, DAC 2008]

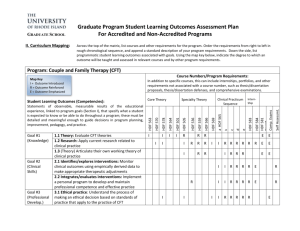

Category

Unique

Instantiated

Low-Level

3912

132773

Interface

5004

44756

High-Level

1930

18618

Bugs Found by Type of Assertion

Bugs Found Using Assertions

Low-level

Formal

Simulation

45

HDF – CAV 2008

Interface

High-level

Sun

Assertion-Based Verification of a 32 thread SPARC™ CMT Processor
[Turumella, Sharma, DAC 2008]

[Figure: Average Debug Time in hours (0 to 16) by process: Formal, Sim + Assert, Sim + None. A second chart, Effort Allocation of Dedicated Verification Engineers by Type of Activity, splits effort between verification debug and testbench development. Values shown on the charts: >50%, 85%, 40%, 60%.]

ABV Improves Controllability

Time-to-Functional-Closure

Reference Model

Testbench

?

ABV can provide actionable metrics to improve coverage

ABV throughout Verification

[Figure: assertions and coverage applied throughout verification. Labels: formal verification (state search, formal properties), simulation (testbench, RTL, passing tests), FPGA or emulation, and O/S trials.]

[Foster, Larsen, Turpin - DVCon 2006]


Beyond Syntax and Semantics

Industry Experiences with OVL/PSL/SVA

http://www.eda-stds.org/ieee-1850/dac08/ABVworkshop.pdf

ARM

Intel

SUN

IBM

Broadcom

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

Industry Challenges

The Industry. . .
- Resists adoption of assertions
- Lacks roadmap for maturing processes and skills
- Perceives ABV as difficult
  - Declarative forms of specification
  - Temporal logic difficult
- Applies formal in an ad hoc manner

Verification Techniques in Use

Industry Resists Adoption

[Figure: horizontal bar chart (0% to 100%) of verification techniques in use, from most to least used: functional simulation at RTL level; timing simulation at gate level; functional simulation at gate level; FPGA prototyping; functional coverage; assertions; functional simulation above RTL level; C/C++ simulation; analog/mixed-signal simulation; hardware/software co-verification; SystemC simulation; transistor-level simulation; transaction-level simulation; embedded checkers to trap illegal conditions in the design; emulation (commercial systems); accelerated simulation; emulation (custom built systems); RF simulation; prototyping with special test chips. The highest bar is 67% and the lowest is 7%.]

Source: 2008 Far West Research and Mentor Graphics

Objections

- Assertions slow down simulations
  - 25% to 100%
- Assertions might be buggy
- Assertion maintenance underestimated

Where am I going to find time to write assertions? I don't even have time to write comments!

Building Skills

Levels of Maturity (Process Capability Maturity Model)

1  Ad hoc
2  Managed
3  Defined
4  Quantitatively Managed
5  Optimizing

[Figure: maturity staircase with axis labels Value, Infrastructure, Skills, Resources, and Metrics.]

Timing Diagrams

assert_next
  #(severity_level, num_cks, check_overlapping, check_missing_start, property_type, msg, coverage_level)
  u1 (clk, reset_n, start_event, test_expr)

test_expr must hold num_cks after start_event holds

ASSERT forall t. conditions imply requirements

Example: assert_next #(0,1,1,0)
- num_cks=1
- check_overlapping=1
- check_missing_start=0

[Figure: timing diagram of clk, start_event, and test_expr, with start_event at cycle t and test_expr at cycle t+1, N cycles later.]

[DVCon 2006]
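The "forall t, conditions imply requirements" reading of assert_next can be sketched as a trace check. This Python model is an illustration only: the function name and list inputs are hypothetical, reset is ignored, and the check_overlapping and check_missing_start options are not modeled (every start is checked independently, and a test_expr without a preceding start is not flagged).

```python
def assert_next(start_event, test_expr, num_cks=1):
    """Return the cycles at which test_expr failed to hold exactly
    num_cks cycles after start_event held."""
    failures = []
    for t, started in enumerate(start_event):
        due = t + num_cks
        # Obligations that fall beyond the end of the trace are not judged.
        if started and due < len(test_expr) and not test_expr[due]:
            failures.append(due)
    return failures


start = [1, 0, 0, 1, 0]
test = [0, 1, 0, 0, 0]
```

With num_cks=1 the start at cycle 0 is satisfied at cycle 1, while the start at cycle 3 is not satisfied at cycle 4, so the model reports cycle 4.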

Patterns

A pattern describes a proven solution to

a recurring design problem

Patterns

Why Use Patterns?

- They have been proven
  Patterns reflect the experience, knowledge and insight of developers who have used these patterns in their own work
- They are reusable
  Patterns provide a ready-made solution that can be adapted to different problems
- They are expressive
  Patterns provide a common vocabulary of solutions that can express large solutions succinctly

Event-Bounded Window Pattern

Context

- The event-bounded window pattern applies to verification of protocol transactions or control logic involving data-stability requirements, where the window of time for the transaction or data stability is not explicitly stated but is bounded by events within the design.

[Figure: two blocks connected by start and done signals.]

Event-Bounded Window Pattern

Solution

- Events bound an arbitrary window of time during which another active event must never occur
- Events can be written as Boolean expressions in Verilog
- For example, a rising start or an active done in the following assertion

assert property (@(posedge clk) disable iff (!reset_n)
    $rose(start) |=> (!start throughout done [-> 1]) );

Event-Bounded Window Pattern

[Figure: waveform of clk, start, done, and error.]

assert property (@(posedge clk) disable iff (!reset_n)
    $rose(start) |=> (!start throughout done [-> 1]) );
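The event-bounded window assertion can be modeled on a sampled trace as follows. This is a minimal Python sketch under stated assumptions: the function name and list inputs are hypothetical, reset is not modeled, and a single pending flag stands in for the simulator's per-attempt evaluation (a new rising start inside an open window both fails the open window and opens a new one).

```python
def event_bounded_window(start, done):
    """Return the cycles at which start was high inside an open window.

    A window opens on the cycle after a rising start and stays open up to
    and including the first cycle in which done is high.
    """
    failures = []
    pending = False          # True while a window is open
    prev_start = False
    for t, (s, d) in enumerate(zip(start, done)):
        rose = s and not prev_start
        if pending:
            if s:            # !start violated before (or at) done
                failures.append(t)
                pending = False
            elif d:          # done[->1] reached; window closes cleanly
                pending = False
        if rose:
            pending = True   # the check begins on the next cycle (|=>)
        prev_start = s
    return failures


ok_start, ok_done = [1, 0, 0, 0, 0], [0, 0, 1, 0, 0]
bad_start, bad_done = [1, 0, 1, 0, 0], [0, 0, 0, 1, 0]
```

In the second trace start rises again at cycle 2 before done arrives at cycle 3, which is the error the waveform on the slide depicts.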

Building Skills

[Figure: maturity scale from level 1 to level 5.]

Can an organization with ad hoc methodologies successfully build a reusable object-oriented constrained-random coverage-driven testbench . . . repeatedly?

Can an organization lacking sufficient skills formally prove a cache controller?

Formal Testplanning

Steps: Identify, Describe, Capture, Formalize Properties, Define Coverage, Select Strategy

Identify

[Figure: data channel block diagram. Labels: Link Layer TX, Encoder, PHY, Decoder, RX, Compressed Audio.]

- Sequential in nature
- Potentially involves data transformation
Not a good candidate for formal!

- Concurrency
- Multiple streams
Good candidates for formal!

// ---------------------------------------------
// SVA : Bus legal states
// ---------------------------------------------
property p_valid_inactive_transition;
    @(posedge monitor_mp.clk) disable iff (bus_reset)
    ( bus_inactive) |=> (( bus_inactive) || (bus_start));
endproperty

a_valid_inactive_transition:
assert property (p_valid_inactive_transition) else begin
    status = new();
    status.set_err_trans_inactive();
    if (status_af != null) status_af.write(status);
end

[DVCon 2006 Foster, Singhal, Loh, Rabii]

Formal Testplanning

Identify

Order your list of properties:
- Did a respin previously occur for a similar property?
- Are you concerned about achieving high coverage for a particular property?
- Is the property control-intensive?
- Is there sufficient access to the design team for a particular property?

Levels of Maturity and Formal

Design Block           Level of Maturity    Difficulty
Arbiter                3                    Easy
Timing Controller      3                    Easy
AHB Bus Bridge         3                    Easy
SRAM Controller        3                    Easy
AXI Bus Bridge         3                    OK
SDRAM Controller       3                    OK (more difficult with data integrity)
DDR Controller         3                    OK (more difficult with data integrity)
DDR2 Controller        3                    Medium
USB Controller         4                    Difficult
Cache Controller       4                    More Difficult
PCI-Express            4                    Hard
JPEG/MPEG              -                    NO-GOOD-FOR-FORMAL
DSP                    -                    NO-GOOD-FOR-FORMAL
Encryption             -                    NO-GOOD-FOR-FORMAL
Floating-Point Unit    -                    NO-GOOD-FOR-FORMAL

Processor Example and Levels of Maturity

[Figure: processor block diagram with Bus Interface Unit (BIU), Instruction Cache Unit (ICU), Data Cache Unit (DCU), Integer Unit (IU), Floating Point Unit (FPU), PwrDwn, Clock Scan Unit (PCSU), Stack Manager Unit (SMU), and Memory Management Unit (MMU). Blocks are annotated Level 3, Level 3 - 4, Level 4, or "Not good for model checking".]

Processor Example and Levels of Maturity

Level 3 — Bug hunting hot spots with assertions and formal

[Figure: the same processor block diagram (BIU, ICU, DCU, IU, FPU, PCSU, SMU, MMU), with markers showing embedded RTL assertions for hot spots.]

Bus-Based Design Example

[Figure: bus-based design. CPU 1, CPU 2, a memory controller, and a graphics controller on Bus A with an arbiter; a bridge (control, datapath, FIFO, bus interfaces) connects Bus A to Bus B, which serves a UART and a timer.]

Nonpipelined Bus Interface

[Figure: master and slave 0 interfaces (I/F) connected by the signals clk, rst_n, sel[0], en, addr, write, rdata, and wdata.]

Non-Burst Write Cycle

[Figure: timing diagram over cycles 0 to 4 showing addr (Addr 1), write, sel[0], en, and wdata (Data 1); the bus state goes INACTIVE, START, ACTIVE, INACTIVE.]

Non-Burst Read Cycle

[Figure: timing diagram over cycles 0 to 4 showing addr (Addr 1), write, sel[0], en, and rdata (Data 1); the bus state goes INACTIVE, START, ACTIVE, INACTIVE.]

Conceptual Bus States

State       sel[0]    en
INACTIVE    0         0
START       1         0
ACTIVE      1         1

Transitions:
- INACTIVE to INACTIVE: no transfer
- INACTIVE to START: setup
- START to ACTIVE: transfer
- ACTIVE to INACTIVE: no transfer
- ACTIVE to START: setup

Interface Requirements

Property Name                  Description

Bus legal transitions
p_state_reset_inactive         Initial state after reset is INACTIVE
p_valid_inactive_transition    ACTIVE state does not follow INACTIVE
p_valid_start_transition       Only ACTIVE state follows START
p_valid_active_transition      ACTIVE state does not follow ACTIVE
p_no_error_state               Bus state must be valid

Bus stable signals
p_sel_stable                   Slave select signals remain stable from START to ACTIVE
p_addr_stable                  Address remains stable from START to ACTIVE
p_write_stable                 Control remains stable from START to ACTIVE
p_wdata_stable                 Data remains stable from START to ACTIVE
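The legal-transition requirements can be exercised on a sampled trace with a short Python model. This is a sketch, not the monitor from the talk: the function names, the `(sel, en)` tuple trace format, and the reporting of violations by property name are assumptions for illustration; p_state_reset_inactive and the stability properties are not modeled.

```python
def bus_state(sel, en):
    """Map the sel and en control values to a conceptual bus state."""
    if not sel and not en:
        return "INACTIVE"
    if sel and not en:
        return "START"
    if sel and en:
        return "ACTIVE"
    return "ERROR"            # en without sel is not a valid bus state


# Legal successor states, with the property that guards each source state.
LEGAL_NEXT = {
    "INACTIVE": ({"INACTIVE", "START"}, "p_valid_inactive_transition"),
    "START": ({"ACTIVE"}, "p_valid_start_transition"),
    "ACTIVE": ({"INACTIVE", "START"}, "p_valid_active_transition"),
}


def check_bus(trace):
    """Return (cycle, property_name) for every violated requirement."""
    states = [bus_state(sel, en) for sel, en in trace]
    violations = []
    for t, cur in enumerate(states):
        if cur == "ERROR":
            violations.append((t, "p_no_error_state"))
            continue
        if t > 0 and states[t - 1] in LEGAL_NEXT:
            allowed, prop = LEGAL_NEXT[states[t - 1]]
            if cur not in allowed:
                violations.append((t, prop))
    return violations


legal = [(0, 0), (1, 0), (1, 1), (0, 0)]      # INACTIVE, START, ACTIVE, INACTIVE
skips_start = [(0, 0), (1, 1)]                # INACTIVE straight to ACTIVE
```

Decoding the control signals into named states first keeps each requirement a one-line lookup, which is the point of the modeling-code approach.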

Use Modeling Code to Simplify Assertion Coding

`ifdef ASSERTION_ON
  // Map bus control values to conceptual states
  if (!rst_n) begin            // rst_n is active low
    bus_reset    = 1;
    bus_inactive = 1;
    bus_start    = 0;
    bus_active   = 0;
    bus_error    = 0;
  end
  else begin
    bus_reset    = 0;
    bus_inactive = ~sel & ~en;
    bus_start    =  sel & ~en;
    bus_active   =  sel &  en;
    bus_error    = ~sel &  en;
  end
  ....
`endif

OVL Assertions

Property Name                  Description

Bus legal transitions
p_valid_inactive_transition    ACTIVE state does not follow INACTIVE

// ---------------------------------------------
// REQUIREMENT: Bus legal states
// ---------------------------------------------
assert_next p_valid_inactive_transition
  (clk, rst_n, bus_inactive, (bus_inactive || bus_start));

Industry Challenges

[Figure: the verification gap chart (design size in millions of gates, 1988 to 2005, with ability to fabricate, ability to design, and ability to verify) overlaid with the Process Capability Maturity Model levels: 1 Ad hoc, 2 Managed, 3 Defined, 4 Quantitatively Managed, 5 Optimizing.]

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

Which Verification Challenge Would You Most Like to See Solved?

Other                                             1%
Defining appropriate coverage metrics             9%
Time to discover the next bug                     9%
Time to isolate and resolve a bug                 10%
Managing the verification process                 15%
Knowing my verification coverage                  18%
Creating sufficient tests to verify the design    38%

Source: 2008 Far West Research and Mentor Graphics

Coverage

Coverage seems to have evolved in an ad hoc fashion

Few meaningful links between simulation and formal

Coverage Pain

Coverage closure is the big unknown in the process
- Huge effort debugging un-hit coverage targets
- Huge effort biasing random constraints or writing directed tests to hit coverage holes

[Figure: planned versus covered coverage items (600 to 1400) over four months. Phases: Month 0, setup; Month 1, test writing and coverage remodeling; Month 2, large holes; Month 3, small holes. Coverage percentages shown: 63%, 87%, 81%, 70%, 55%. Annotation: doesn't converge.]

[Singhal PCI-Express Example 2006]

Emerging Flows

[Figure: emerging design flow. System Specification (UML or ?), Algorithm Model (C/C++), Architecture Mapping (HW/SW partition) with processor, memory, and bus IP, Architecture Model (SystemC untimed TLM), TLM HW refinement, Cycle Accurate (CA) model (SystemC), RTL Model (SystemVerilog, Verilog, VHDL), and ASIC implementation. Side activities: functional verification (reference), performance analysis, performance estimation, FW development, CA verification, behavioral synthesis, HDL for FPGA, CA simulation model, HDL to CA C translation, RTL prototype verification, and RTL verification.]

- 10X to 1000X faster!
- Accommodates late-stage changes in requirements!
- Lack of sequential equivalence checking between levels is an obstacle!

The Need for TLM

[Figure: testbench architecture. Test controller, stimulus generator, driver, DUT (transaction-level design model or RTL), monitors, responder, slave, scoreboard with reference model and response compare, and coverage. Transactions flow between components, with abstraction conversion at the transaction-level boundary.]

Verification Component Reuse

[Figure: the same testbench architecture with an RTL DUT. Test controller, stimulus generator, driver, monitors, responder, slave, scoreboard with reference model and response compare, and coverage, with abstraction conversion at the transaction-level boundary.]

TLM

Reasoning about untimed transactions
- Execution Semantics and Formalisms for Multi-Abstraction TLM Assertions [Ecker et al., MEMOCODE 06]
- MEMOCODE, DATE, HLDVT, ICCD, IESS, . . .

[Figure: overlapping transactions A and B, each with a start and an end.]

Roadmap

State of the Industry

ABV Overview

Industry Successes

Industry Challenges

Future Opportunities

Summary

Summary

State of the Industry

Assertion Language Standards

ABV Value Propositions
- Observability
- Controllability

Success case studies

Industry challenges

Formal Testplanning

Opportunities

There is no silver bullet in verification!

Understand process strengths and weaknesses

Choose the right combination for the job

It’s a very humbling experience to make a multi-million-dollar mistake, but it is also very memorable.

Dr. Frederick Brooks
No Silver Bullet: Essence and Accidents of Software Engineering, Information Processing, 1986.

What’s the Solution to the Verification Gap?

Deming’s fundamental principle:

We should cease inspection for the purpose of identifying defects since inspection is costly, unreliable, and ineffective. Instead, we should design quality in from the start.

W. Edwards Deming

State of the Industry

Mindless Statistics

[Figure: maturity scale from level 1 to level 5.]

Assertion-Based Verification

The process of creating assertions forces the engineer to think. . . and in this incredible world of automation, there is no substitute for thinking.

Which of the following best describes your company?

[Figure: bar chart (0% to 35%) of respondents by company type: ASIC vendor, fabless IC vendor, IC manufacturer, systems company, design services company, IP vendor company. Bar values shown: 29%, 27%, 16%, 14%, 10%, 4%.]

N=401

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Gates of Logic & Datapath

[Figure: bar chart (0% to 35%) of designs by gate count: 100K or less, >100K - 500K, >500K - 1M, >1M - 5M, >5M - 10M, >10M - 15M, >15M - 20M, >20M.]

N=400

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Gates of Logic & Datapath Trend

[Figure: bar chart (0% to 35%) of designs by gate count for 2002 and 2007: 100K or less, >100K - 500K, >500K - 1M, >1M - 5M, >5M - 10M, >10M - 15M, >15M - 20M, >20M.]

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Synthesized Gates (excluding Test Circuitry)

[Figure: bar chart (0% to 35%) of designs by synthesized gate count: 100K or less, >100K - 500K, >500K - 1M, >1M - 5M, >5M - 10M, >10M - 15M, >15M - 20M, >20M.]

N=400

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Synthesized Gates Trend (excluding Test Circuitry)

[Figure: bar chart (0% to 35%) of designs by synthesized gate count for 2004 and 2007: 100K or less, >100K - 500K, >500K - 1M, >1M - 5M, >5M - 10M, >10M - 15M, >15M - 20M.]

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

BYTES of Embedded Memory

[Figure: bar chart (0% to 35%) of designs by embedded memory size: none, 20K or less, >20K - 100K, >100K - 500K, >500K - 1M, >1M - 5M, >5M - 15M, >15M.]

N=397

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Embedded Microprocessor Cores (Hard or Soft)

[Figure: bar chart (0% to 45%) of designs by number of embedded microprocessor cores: none, 1, 2, 3, 4, 5 or more.]

N=399

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Embedded Microprocessor Cores Trend (Hard or Soft)

[Figure: bar chart (0% to 50%) of designs by number of embedded microprocessor cores for 2004 and 2007: none, 1, 2, 3, 4, 5 or more.]

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Sources of on-chip microprocessors

[Figure: bar chart (0% to 45%) of on-chip microprocessor sources: proprietary, ARC, ARM, Freescale, IBM (PowerPC), MIPS, Tensilica, Texas Instruments, other vendor.]

N=271

Source: Far West Research and Mentor Graphics

Please describe your IC/ASIC design

Sources of on-chip microprocessors

Trend

2004

2007

45%

40%

40%

35%

30%

27%

25%

20%

16%

16%

15%

15%

9%

10%

7%

6%

4%

5%

12%

11%

6%

4%

6%

1%

0%

PROPRIETARY

ARC

ARM

Source: Far West Research and Mentor Graphics


Freescale

IBM (PowerPC)

MIPS

Tensilica

Texas

Instruments

OTHER

VENDOR

Please describe your IC/ASIC design

Embedded DSP Cores (Hard or Soft)

70%

65%

60%

50%

40%

30%

20%

20%

9%

10%

2%

2%

3%

3

4

5 or MORE

0%

NONE

1

2

N=400


Please describe your IC/ASIC design

Sources of On-Chip

DSPs

80%

70%

70%

60%

50%

47%

40%

30%

22%

20%

13%

6%

5%

10%

4%

1%

0%

13%

4%

0%

PROPRIETARY

ARC

ARM

Source: Far West Research and Mentor Graphics


CAST

CEVA

Freescale

Improv Systems

Texas Instruments

3DSP

Verisilicon

OTHER

VENDOR

N=400

Please describe your IC/ASIC design

Sources of On-Chip DSPs Trend

2004

2007

80%

70%

70%

60%

50%

47%

40%

30%

22%

20%

12%

10%

6%

5%

1% 1%

13%

7%

4%

0%

13%

4%

0%

PROPRIETARY ARC

ARM

Source: Far West Research and Mentor Graphics


CAST

CEVA

Freescale

Improv Systems

Texas Instruments

3DSP

Verisilicon OTHER

VENDOR

Please describe your IC/ASIC design

Mean Design Composition (in %)

60

41

40

33

20

13

13

PURCHASED IP

ANALOG, RF

AND/OR MIXED

SIGNAL

0

NEW LOGIC

REUSED LOGIC

(Developed in-house)

Source: Far West Research and Mentor Graphics


N=392

Please describe your IC/ASIC design

Fastest on-chip clock used for Logic (MHz)

35%

30%

25%

21%

22%

20%

14%

15%

12%

11%

9%

10%

6%

5%

5%

0%

<50

50 - 133

>133 - 250

Source: Far West Research and Mentor Graphics


>250 - 400

>400 - 600

>600 - 1,000

>1,000 - 1,500

N=400

>1,500

Please describe your IC/ASIC design

Fastest on-chip clock used for Logic Trend (MHz)

2002

2004

2007

35%

29%

30%

28%

25%

25%

22%

21%

18%

20%

15%

15%

16%

14%

14%

12%

11%

12%

11%

10%

7%

5%

9%

8%

5%

7% 6%

5%

3%

0%

<50

50 - 133

>133 - 250

Source: Far West Research and Mentor Graphics


>250 - 400

>400 - 600

>600 - 1,000

>1,000 - 1,500

>1,500

Please describe your IC/ASIC design

Independent Clock Domains

35%

30%

30%

25%

25%

20%

15%

15%

11%

10%

9%

4%

5%

3%

4%

0%

1

2

Source: Far West Research and Mentor Graphics


3--4

5--10

11--20

21--30

31--50

N=399

>50

Please describe your IC/ASIC design

Independent Clock Domains Trend

2002

2004

2007

40%

36%

35%

31%

30%

30%

30%

25%

25%

23%

20%

15%

10%

16%

14%15%

14%

12%

11%

9%

8%

7%

6%

4%

5%

4%

3%

4%

0%

1

2

3--4

Source: Far West Research and Mentor Graphics


5--10

11--20

21--30

31--50

>50

Please describe your IC/ASIC design

Are gated clocks used?

100%

77%

80%

60%

40%

23%

20%

0%

Yes

Source: Far West Research and Mentor Graphics


No

N=397

Please describe your IC/ASIC design

Are gated clocks used? (Trend)

2002

2004

2007

100%

77%

80%

60%

58%

54%

42%

46%

40%

23%

20%

0%

Yes

Source: Far West Research and Mentor Graphics


No

Please describe your IC/ASIC design

Asynchronous Clock Boundaries

30%

24%

25%

24%

23%

20%

15%

13%

10%

6%

5%

5%

3%

2%

16--20

21--30

0%

0

1--2

Source: Far West Research and Mentor Graphics


3--4

5--10

11--15

>30

N=398

Please describe your IC/ASIC design

Asynchronous Clock Boundaries Trend

2002

30%

25%

26%

23% 24% 24%

24%

22%

22%

20%

2004

2007

27%

23%

18%

16%

13%

15%

11%

10%

10%

6%

5%

5%

3%

2%

16--20

21--30

0%

0

1--2

Source: Far West Research and Mentor Graphics


3--4

5--10

11--15

>30

Please describe your IC/ASIC design

Are cycle-sharing latch techniques

used?

100%

80%

71%

60%

40%

29%

20%

0%

Yes

Source: Far West Research and Mentor Graphics


No

N=397

Please describe your IC/ASIC design

Are cycle-sharing latch techniques used? (Trend)

2002

2004

2007

100%

79%

80%

81%

70.8%

60%

40%

29.0%

21%

19%

20%

0%

Yes

Source: Far West Research and Mentor Graphics


No

Please describe your IC/ASIC design

Number of I/Os (exclude power/ground)

30%

24%

25%

21%

20%

19%

15%

15%

11%

10%

7%

5%

3%

0%

50 or LESS

>50 - 100

Source: Far West Research and Mentor Graphics


>100 - 200

>200 - 400

>400 - 600

>600 - 800

>800

N=399

Please describe your IC/ASIC design

Number of I/Os (exclude power/ground) Trend

2002

30%

2007

28%

25%

25%

25%

20%

2004

18%

19%

20%

24%

21%

18%

15%

15%

12%

12%

12%

11% 11%

10%

13%

7%

5%

7%

3%

0%

50 or LESS

>50 - 100

Source: Far West Research and Mentor Graphics


>100 - 200

>200 - 400

>400 - 600

>600 - 800

>800

Please describe your IC/ASIC design

On-chip buses

50%

46%

45%

40%

35%

30%

22%

25%

20%

17%

15%

10%

10%

5%

1%

3%

1%

0%

NONE

PROPRIETARY ARM AMBA

Source: Far West Research and Mentor Graphics


Hitachi

IBM

CoreConnect

Sonics

Other

N=398

Please describe your IC/ASIC design

Process geometry (drawn feature size)

60%

50%

40%

30%

22%

26%

22%

20%

10%

6%

11%

11%

0.18µ

0.25µ or

LARGER

3%

0%

0.045µ (45nm) or SMALLER

0.065µ (65nm)

Source: Far West Research and Mentor Graphics


0.09µ (90nm)

0.13µ

0.15µ

N=398

Please describe your IC/ASIC design

Process geometry (drawn feature size) Trend

2002

2004

2007

60%

48%

50%

40%

35%

31%

30%

26%

22%

26%

21%

22%

20%

12%

10%

6%

2%

7%

2%

11%

12%

11%

6%

3%

0%

0.045µ (45nm) or SMALLER

0.065µ (65nm)

0.09µ (90nm)

Source: Far West Research and Mentor Graphics


0.13µ

0.15µ

0.18µ

0.25µ or

LARGER

Please describe your IC/ASIC design

Style that best describes the overall implementation of the

design

70%

59%

60%

50%

40%

30%

20%

10%

14%

6%

5%

Gate array

Embedded

array

9%

8%

0%

Source: Far West Research and Mentor Graphics


Standard cell

Structured

ASIC

Structured

custom

Full custom

N=398

Please describe your IC/ASIC design

Style that best describes the overall implementation of the design

Trend

2002

70%

59%

60%

2004

2007

60%

59%

50%

40%

30%

23%

20%

9%

10%

18%

14%

7% 6%

2%

2%

8%9%

5%

6% 6% 8%

0%

Gate array

Embedded

array

Source: Far West Research and Mentor Graphics


Standard

cell

Structured

ASIC

Structured

custom

Full custom

Please describe your IC/ASIC design

Expected lifetime volume (total unit

shipment)

30%

25%

21%

21%

20%

18%

15%

14%

15%

12%

10%

5%

0%

10K UNITS or LESS

>10K - 100K

Source: Far West Research and Mentor Graphics


>100K - 1M

>1M - 5M

>5M - 25M

>25M UNITS

N=396

Please describe your IC/ASIC design

Design completed according to project’s original schedule

30%

28%

28%

25%

20%

16%

15%

11%

10%

6%

4%

5%

4%

3%

1%

0%

More than 10%

EARLY

10% EARLY

ON-SCHEDULE

Source: Far West Research and Mentor Graphics


10% BEHIND

SCHEDULE

20%

30%

40%

50%

>50% BEHIND

SCHEDULE

N=399

What is the primary application market for

the IC or ASIC design you are describing?

25%

20%

15%

14%

10%

7%

5%

2%

0%

PC / Workstation / Server / Mainframe

Peripherals

Office Equipment

N=92

Source: Far West Research and Mentor Graphics


What is the primary application market for

the IC or ASIC design you are

describing? (Trend) 2002 2004 2007

25%

20%

20%

15%

14%

15%

14%

14%

10%

7%

5%

1%

1%

2%

0%

PC / Workstation / Server /

Mainframe

Source: Far West Research and Mentor Graphics


Peripherals

Office Equipment

What is the primary application market for the

IC or ASIC design you are describing?

2002

20%

2004

2007

18%

15%

12% 12%

10%

9%

8%

6%

5%

7%

6%

3%

5%

4%

2%

6%

4%

3%

5%

3%

1%

6%

4%

2%

0%

Wireless I

Wireless II


Networking I

Networking II

Networking III

Networking IV

Other Communications

What is the primary application market for

the IC or ASIC design you are describing?

2002

20%

2007

19%

14%

15%

15%

10%

2004

10%

10%

8%

6%

5%

3%

2%

2%

0%

Consumer Audio, Video,

Games


Automotive

Aerospace/Military

Other Industry

Indicate the peak number of engineers for

IC/ASIC design

Design Engineers

40%

30%

30%

24%

20%

20%

15%

9%

10%

0%

0%

0

1--2

Source: Far West Research and Mentor Graphics


3--4

5--9

10--15

>15

N=401

Indicate the peak number of engineers for

IC/ASIC design

Verification Engineers

40%

31%

30%

26%

20%

17%

10%

12%

10%

3%

0%

0

1--2

Source: Far West Research and Mentor Graphics


3--4

5--9

10--15

>15

N=401

Of the Verification Engineers

Formal analysis

40%

38%

30%

24%

19%

20%

10%

10%

6%

2%

0%

0

1

Source: Far West Research and Mentor Graphics


2

3

4

5 or More

N=400

Of the Verification Engineers

FPGA prototyping

40%

37%

31%

30%

18%

20%

10%

7%

3%

5%

0%

0

1

Source: Far West Research and Mentor Graphics


2

3

4

5 or MORE

N=399

Of the Verification Engineers

Emulation engineers

45%

41%

29%

30%

13%

15%

9%

6%

3%

0%

0

1

Source: Far West Research and Mentor Graphics


2

3

4

5 or More

N=396

Estimate time design engineers spend on

following activities

60

54

46

40

20

0

DESIGN

Source: Far West Research and Mentor Graphics


VERIFICATION

N=396

Estimate time verification engineers

spend on following activities

60

52

40

34

20

14

0

VERIFICATION/DEBUG

Source: Far West Research and Mentor Graphics


TESTBENCH

DEVELOPMENT

OTHER ACTIVITIES

N=396

Percentage of total project time from first

draft to final transfer to final

manufacturing spent on verification

25%

20.3%

20%

18.5%

16.8%

16.5%

15%

12.5%

10%

7.3%

5.3%

5%

3.0%

0%

<20%

20 - 30

>30 - 40

Source: Far West Research and Mentor Graphics


>40 - 50

>50 - 60

>60 - 70

>70 - 80

>80%

N=400

How many individual tests were created to

verify the design?

25%

21%

21%

19%

20%

15%

12%

10%

10%

8%

7%

5%

3%

0%

1 - 100

>100-200

>200-500

Source: Far West Research and Mentor Graphics


>500 - 700

>700 - 1000

>1000 - 1500

>1500 - 2000

> 2000

N=399

What percentage were directed tests?

80

64

65

Directed

Random/Constrained

60

40

20

0

Source: Far West Research and Mentor Graphics


N=392

How long did typical regression test take

to simulate?

20%

19%

18%

15%

13%

13%

11%

10%

10%

7%

6%

5%

3%

0%

1-4 HOURS

>4-8 HOURS >8-16 HOURS

Source: Far West Research and Mentor Graphics


>16 - 24 HOURS

>1 DAY - 1.5 DAYS

>1.5 - 2 DAYS

>2 - 3 DAYS

>3 - 4 DAYS

>4 DAYS

N=398

How many simulation licenses were used

to verify the design?

30%

28%

25%

25%

23%

20%

15%

10%

10%

4%

5%

4%

5%

2%

0%

1-5

>5-10

Source: Far West Research and Mentor Graphics


>10-30

>30 - 50

>50 - 75

>75- 100

>100- 200

>200- 400

N=396

What is the mean composition of the

testbench (%) for this design?

60

50

41

40

20

8

0

NEW

Source: Far West Research and Mentor Graphics


REUSED FROM OTHER

DESIGNS

ACQUIRED EXTERNALLY

Which languages and/or libraries were

used in the IC or ASIC design you are

describing?

Design

Current

Next 12 Months

100%

81% 80%

80%

60%

40%

37%

32%

26%

16%

11%

20%

22%21%

15%

3% 1%

0%

VHDL

Verilog

Source: Far West Research and Mentor Graphics


System C

SystemVerilog

C/C++

OTHER Design

N=398

Which languages and/or libraries were

used in the IC or ASIC design you are

describing?

Testbench

Current

Next 12 Months

100%

80%

68%

64%

60%

41%

40%

30%29%

27%25%

20% 24%

17%

20%

11%

10%

9%

3% 4%

7%

5% 3%

0%

VHDL

Verilog

Synopsys

Vera

Source: Far West Research and Mentor Graphics


System C SystemVerilog

PSL

Specman e

C/C++

OTHER

Testbench

N=399

Which languages and/or libraries were

used in the IC or ASIC design you are

describing?

Assertions

Current

Next 12 Months

100%

80%

70%

61%

60%

40%

26% 25%

20%

14% 12%

5%

4%

7%

6%

0%

SystemVerilog

Assertions

OVL

Source: Far West Research and Mentor Graphics


PSL Assertions

PVA Assertions

OTHER Assertions

N=308

Which languages and/or libraries do you

anticipate using on a project that will

begin within the next 12 months?

Design

100%

80%

80%

60%

40%

32%

26%

16%

20%

21%

1%

0%

VHDL

Verilog

Source: Far West Research and Mentor Graphics


System C

System

Verilog

C/C++

Other

N=398

Which languages and/or libraries do you

anticipate using on a project that will

begin within the next 12 months?

Testbench

100%

80%

64%

60%

41%

40%

29%

25%

20%

20%

9%

4%

7%

3%

0%

VHDL

Verilog

Synopsys

Vera

Source: Far West Research and Mentor Graphics


System C

System

Verilog

PSL

Specman e

C/C++

N=397

Other

Which languages and/or libraries do you

anticipate using on a project that will

begin within the next 12 months?

Assertions

100%

80%

70%

60%

40%

25%

20%

12%

4%

6%

0%

SystemVerilog

Assertions

OVL

Source: Far West Research and Mentor Graphics


PSL Assertions

PVA Assertions

Other

N=337

Did you actively manage power in the IC

or ASIC design you are describing?

80%

59%

60%

41%

40%

20%

0%

Yes

Source: Far West Research and Mentor Graphics


No

N=401

Which of the following techniques did you

use to manage your power in this design?

DYNAMIC VOLTAGE FREQUENCY SCALING: 17%

ASYNCHRONOUS LOGIC: 16%

STATIC VOLTAGE FREQUENCY SCALING: 15%

GLITCH SUPPRESSION USING LATCHES: 10%

INRUSH TRANSIENT ANALYSIS: 9%

ADAPTIVE VOLTAGE SCALING: 9%

Coarse Grain MTCMOS (power switches outside of cells): 7%

Fine Grain MTCMOS (sleep transistors in cells): 5%

OTHER: 1%

Source: Far West Research and Mentor Graphics

N=237

Which of the following static verification

techniques and/or tools were used on the

design you are describing?

STATIC TIMING ANALYSIS: 83%

FORMAL VERIFICATION - EQUIVALENCE CHECKING AT GATE LEVEL: 62%

CODE REVIEWS: 57%

CODE COVERAGE ANALYSIS: 48%

CODING GUIDELINES THAT ARE ENFORCED: 44%

LINT CHECKERS OR OTHER AUTOMATIC CODE ANALYSIS: 43%

FORMAL VERIFICATION - EQUIVALENCE CHECKING ABOVE GATE LEVEL: 40%

DESIGN FOR VERIFICATION TECHNIQUES: 35%

FORMAL VERIFICATION - PROPERTY OR MODEL CHECKING: 19%

OTHER: 1%

Source: 2008 Far West Research and Mentor Graphics

Which of the following dynamic verification techniques

and/or tools were used in this design?

FUNCTIONAL SIMULATION AT RTL LEVEL: 67%

TIMING SIMULATION AT GATE LEVEL: 51%

FUNCTIONAL SIMULATION AT GATE LEVEL: 48%

FPGA PROTOTYPING: 41%

FUNCTIONAL COVERAGE: 40%

ASSERTIONS: 37%

FUNCTIONAL SIMULATION ABOVE RTL LEVEL: 36%

C/C++ SIMULATION: 33%

ANALOG/MIXED-SIGNAL SIMULATION: 27%

HARDWARE/SOFTWARE CO-VERIFICATION: 26%

SYSTEMC SIMULATION: 25%

TRANSISTOR-LEVEL SIMULATION: 24%

TRANSACTION-LEVEL SIMULATION: 23%

EMBEDDED CHECKERS TO TRAP ILLEGAL CONDITIONS IN THE DESIGN: 21%

EMULATION (COMMERCIAL SYSTEMS): 10%

ACCELERATED SIMULATION: 10%

EMULATION (CUSTOM BUILT SYSTEMS): 10%

RF SIMULATION: 9%

PROTOTYPING WITH SPECIAL TEST CHIPS: 7%

Source: 2008 Far West Research and Mentor Graphics

How did you select and create the test

stimulus to apply to this design?

MANUALLY CREATED TESTS BASED ON THE REQUIREMENTS IN THE SPEC: 78%

MANUALLY CREATED CORNER CASE TESTS SELECTED TO STRESS THE LIMITS OF THE DESIGN: 64%

CONSTRAINED RANDOM STIMULUS GENERATION: 41%

STIMULUS GENERATED FROM HIGHER LEVEL ABSTRACTION MODELS: 31%

WHITE BOX TESTS, SPECIFICALLY DESIGNED TO EXERCISE SPECIFIC INTERNAL FUNCTIONS OF THE DESIGN: 26%

KNOWN INCORRECT STIMULUS TO CHECK FOR ERROR HANDLING: 26%

IN-CIRCUIT TEST: 19%

CAPTURED REAL-WORLD STIMULUS: 18%

INDUSTRY STANDARD TEST SUITES: 13%

OTHER: 1%

Source: Far West Research and Mentor Graphics

N=398

How did you determine that the design

produced the correct response?

AUTOMATIC CHECKING AGAINST RESULTS FROM A HIGHER LEVEL OF ABSTRACTION: 36%

BUILT IN SELF TEST IMPLEMENTED AS HARDWARE BLOCKS IN DESIGN: 34%

BUILT IN SELF TEST IMPLEMENTED AS SOFTWARE RUNNING ON EMBEDDED PROCESSOR: 19%

AUTOMATIC CHECKING AGAINST A FORMAL DESCRIPTION OF THE DESIGN: 16%

BUILT IN WHITE BOX CHECKERS: 15%

AUTOMATIC CHECKING AGAINST AN INDUSTRY STANDARD SET OF REFERENCE RESULTS: 15%

STANDARDS CONFORMANCE BY EMBEDDED CHECKER TECHNOLOGY: 6%

OTHER: 1%

Source: Far West Research and Mentor Graphics

N=399

Which of the following best represents the

criterion you used for sign-off of the

design you are describing?

WHEN ALL TESTS DOCUMENTED IN THE VERIFICATION PLAN ARE COMPLETE AND PASS: 47%

WHEN THE PROJECT PLAN SAYS SIGN-OFF, ASSUMING VERIFICATION LOOKS OK: 17%

WHEN CODE COVERAGE SAYS WE HAVE ACHIEVED A TARGET: 10%

WHEN THE EMULATED OR PROTOTYPED DESIGN IS WORKING IN-SITU: 10%

WHEN THE RATE OF BUGS FOUND PER WEEK DROPS BELOW A SPECIFIED GOAL: 9%

WHEN WE CAN NO-LONGER THINK OF ANY MORE TESTS TO WRITE: 5%

WHEN THE PROJECT PLAN SAYS SIGN-OFF, REGARDLESS OF STATUS: 2%

OTHER: 2%

Source: Far West Research and Mentor Graphics

N=398

Which of the following reuse techniques

were applied in this design?

REUSE OF BLOCKS FROM PREVIOUS PROJECTS WITH MODIFICATION: 74%

REUSE OF TESTBENCH COMPONENTS, STANDARDS CHECKERS, OR OTHER VERIFICATION IP: 61%

REUSE OF FUNCTIONAL BLOCKS FROM A PREVIOUS PROJECT WITHOUT MODIFICATION: 46%

STANDARD DESIGN AND VERIFICATION FILE MANAGEMENT, DIRECTORY STRUCTURE AND REVISION CONTROL: 41%

REUSE OF FUNCTIONAL BLOCKS FROM A THIRD PARTY (INCLUDING INTERNAL GROUP) WITHOUT MODIFICATION: 34%

REUSE OF TEST VECTORS: 31%

VERIFICATION IP FROM EXTERNAL COMPANIES: 16%

OTHER: 1%

Source: Far West Research and Mentor Graphics

N=393

Please indicate the sources of the

verification models for IC components

used to verify the design you are

describing

None

Synopsys

Cadence

Denali

Mentor

HDL

Expert I/O

OTHER

Avery Design

Arasan Chip

Structured

Silicon Cores

Cold Spring

Yogitech

Vesta

Globaltech

Tata

eInfochips

Zaiq

Sidsa

nSys

IPextreme

Comit

Temento

Persistent

Intrinsix

Tenesix

Legend

16%

15%

5%

4%

3%

3%

3%

2%

2%

2%

1%

1%

1%

1%

1%

1%

1%

1%

1%

1%

1%

1%

1%

0%

0%

0%

30%

30%

10%

Source: Far West Research and Mentor Graphics


20%

36%

30%

40%

N=399

Please indicate the number of spins of

silicon required to release the design to

volume manufacturing

45%

40%

35%

30%

25%

20%

15%

10%

5%

0%

42%

28%

21%

6%

1 (FIRST

SILICON

SUCCESS)

2

Source: Far West Research and Mentor Graphics


3

4

1%

1%

2%

5

6

7 SPINS or

MORE

N=401

Please indicate the number of spins of

silicon required to release the design to

volume manufacturing

2002

45%

40%

35%

30%

2004

2007

39% 42%

38%

39%

33%

28%

25%

20% 21%

20%

17%

15%

8%

6% 6%

10%

5%

1%

1%

2%

0%

1 (FIRST

SILICON

SUCCESS)

2

Source: Far West Research and Mentor Graphics


3

4

5

6

7 SPINS or

MORE

Which of the following types of flaws

caused the respins of this design?

100%

80%

77%

60%

40%

24%

23%

21%

20%

0%

LOGIC OR

CLOCKING

FUNCTIONAL

TUNING

ANALOG

CIRCUIT

20%

19%

17%

15%

11%

11%

CROSSTALK-INDUCED DELAYS, GLITCHES

POWER CONSUMPTION

MIXED-SIGNAL INTERFACE

YIELD OR RELIABILITY

TIMING – PATH TOO SLOW

TIMING – PATH TOO FAST, RACE CONDITION

FIRMWARE

Source: Far West Research and Mentor Graphics


7%

7%

IR DROPS

OTHER

N=290

Which of the following types of flaws

caused the respins of this design?

2002

2004

2007

100%

80%

75% 77%

71%

60%

40%

33%

23%

33%

32%

24%

27%26%

23%

21%

20%

0%

LOGIC OR

CLOCKING

FUNCTIONAL

Source: Far West Research and Mentor Graphics

TUNING

ANALOG

CIRCUIT

27%

25%

20%

22%

19%

20% 17%

18%

18% 19%

17%

15%

27%

13%

11%

7%

16%

14%

12%

11%

7%

CROSSTALK-INDUCED DELAYS, GLITCHES

POWER CONSUMPTION

MIXED-SIGNAL INTERFACE

YIELD OR RELIABILITY

TIMING – PATH TOO SLOW

FIRMWARE

TIMING – PATH TOO FAST, RACE CONDITION

IR DROPS


5%7%

5%

OTHER

Please indicate the causes of the logic or

functional flaws in this design

70%

60%

60%

50%

41%

40%

35%

30%

18%

20%

15%

10%

3%

0%

INCORRECT or

INCOMPLETE

SPECIFICATION

CHANGES IN

SPECIFICATION

Source: Far West Research and Mentor Graphics


DESIGN ERROR

FLAW IN

INTERNAL

REUSED BLOCK,

CELL,

MEGACELL or IP

FLAW IN

EXTERNAL IP

BLOCK or

TESTBENCH

OTHER

N=223

Please indicate the causes of the logic or

functional flaws in this design

2002

2004

2007

100%

80%

60%

82% 83%

60%

33% 41%

32%

40%

47%

45%

35%

18%

16%

20%

14%

15%

10% 10%

4% 2% 3%

0%

DESIGN ERROR

CHANGES IN

SPECIFICATION

Source: Far West Research and Mentor Graphics


FLAW IN

INCORRECT or

INTERNAL

INCOMPLETE

SPECIFICATION REUSED BLOCK,

CELL,

MEGACELL or IP

FLAW IN

EXTERNAL IP

BLOCK or

TESTBENCH

OTHER

Which verification challenge did you

encounter in this design and would most

like to see solved?

CREATING SUFFICIENT TESTS TO VERIFY THE DESIGN: 38%

KNOWING MY VERIFICATION COVERAGE: 18%

MANAGING THE VERIFICATION PROCESS: 15%

TIME TO ISOLATE AND RESOLVE A BUG: 10%

TIME TO DISCOVER THE NEXT BUG: 9%

DEFINING APPROPRIATE COVERAGE METRICS: 9%

OTHER: 2%

Source: Far West Research and Mentor Graphics

N=223

How satisfied are you with the

effectiveness of the verification process?

35%

28%

30%

29%

25%

20%

15%

14%

15%

9%

10%

5%

3%

2%

0%

1 Very

Dissatisfied

2

Source: 2008 Far West Research and Mentor Graphics


3

4

5

6

7 Very

Satisfied

How likely are you to recommend the

verification methodology to a friend or

colleague?

30%

26%

25%

25%

20%

16%

16%

15%

10%

8%

6%

5%

3%

0%

1 Very

Unlikely

2

Source: Far West Research and Mentor Graphics


3

4

5

6

Very

Likely

N=397

Please indicate which specific EDA tools

your team used on the design

Simulation

50%

44%

41%

40%

35%

30%

20%

15%

10%

5%

3%

3%

0%

NONE USED IN

THIS DESIGN

Cadence Incisive

Source: Far West Research and Mentor Graphics


Mentor Graphics – ModelSim/Questa

Synopsys – VCS

Internal/Proprietary

Semiconductor

Vendor

Other simulation

N=390