Detecting problems in Internet services by mining text logs

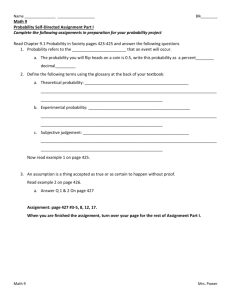

Detecting Large-Scale System Problems by Mining Console Logs
Wei Xu (徐葳)*, Ling Huang†, Armando Fox*, David Patterson*, Michael Jordan*
*UC Berkeley, †Intel Labs Berkeley
Jan 18, 2009, MSRA

Why console logs?
• Detecting problems in large scale Internet services often requires detailed instrumentation
• Instrumentation can be costly to insert & maintain
  • Often combine open-source building blocks that are not all instrumented
  • High code churn
• Can we use console logs in lieu of instrumentation?
  + Easy for developer, so nearly all software has them
  – Imperfect: not originally intended for instrumentation

The ugly console log
HODIE NATUS EST RADICI FRATER
today unto the Root a brother is born.
* "that crazy Multics error message in Latin." http://www.multicians.org/hodie-natus-est.html

Result preview
[Figure: pipeline Parse → Detect → Visualize; input: 200 nodes, >24 million lines of logs; intermediate: abnormal log segments; output: a single page visualization]
• Fully automatic process without any manual input

Outline
• Key ideas and general methodology
• Methodology illustrated with Hadoop case study
  • Four step approach
  • Evaluation
• An extension: supporting online detection
  • Challenges
  • Two stage detection
  • Evaluation
• Related work and future directions

Our approach and contribution
[Figure: pipeline Parsing → Feature Creation → Machine Learning → Visualization]
• A general methodology for processing console logs automatically (SOSP ’09)
• A novel method for online detection (ICDM ’09)
• Validation on real systems
  • Working on production data from real companies

Key insights for analyzing logs
• The log contains the necessary information to create features
  • Identifiers
  • State variables
  • Correlations among messages (sequences)
• Console logs are inherently structured
  • Determined by log printing statement
[Figure: example messages "receiving blk_1", "received blk_1", "receiving blk_2", with labels NORMAL and ERROR]

Outline (current section: Methodology illustrated with Hadoop case study)

Step 1: Parsing
• Free text → semi-structured text
• Basic ideas
  Log message:    Receiving block blk_1
  Source code:    Log.info("Receiving block " + blockId);
  Template:       Receiving block (.*) [blockId]
  Parsed result:  Type: Receiving block; Variables: blockId(String)=blk_1
• Non-trivial in object oriented languages
  • String representation of objects
  • Sub-classing
• Our solution
  • Type inference on source code
  • Pre-compute all possible message templates

Parsing: Scale parsing with mapreduce
• 24 million lines of console logs = 203 nodes * 48 hours
[Figure: time used (min) vs. number of nodes]

Parsing: Language specific parsers
• Source code based parsers
  • Java (OO language)
  • C / C++ (macros and complex format strings)
  • Python (scripting/dynamic languages)
• Binary based parsers
  • Java byte code
  • Linux + Windows binaries using printf format strings

Step 2: Feature creation: Message count vector
• Identifiers are widely used in logs
  • Variables that identify objects manipulated by the program
  • File names, object keys, user ids
• Grouping by identifiers
  • Similar to execution traces
• Identifiers can be discovered automatically
[Figure: interleaved messages (receiving blk_1, receiving blk_2, receiving blk_1, received blk_2, received blk_1, received blk_1, receiving blk_2) grouped by block identifier]

Feature creation – Message count vector example
• Numerical representation of these “traces”
• Similar to bag of words model in information retrieval
[Figure: the messages Receiving blk_1, Receiving blk_2, Received blk_2, Receiving blk_1, Received blk_1, Received blk_1, Receiving blk_2 converted into one message count vector per block (blk_1, blk_2)]

Step 3: Machine learning – PCA anomaly detection
• Most of the vectors are normal
• Detecting abnormal vectors
  • Principal Component Analysis (PCA) based detection
• PCA captures normal patterns in these vectors
  • Based on correlations among dimensions of the vectors
[Figure: a message count vector beside example messages labeled NORMAL and ERROR]
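
Sketch (not from the original slides): Steps 1 to 3 applied to the toy blk_1 / blk_2 messages from the message count vector example. The two regular-expression templates are hand-written stand-ins for the templates the talk derives from source code, the `sample` vectors are made up, and `residual_scores` is a simplified stand-in for PCA detection: it keeps only the first principal axis (found by power iteration) and ranks vectors by squared residual instead of applying a threshold. All function and variable names are assumptions chosen for illustration.

```python
import math
import re
from collections import defaultdict

# Hypothetical message templates (hand-written here, not derived from source code).
TEMPLATES = {
    "receiving": re.compile(r"^Receiving (blk_\d+)$"),
    "received": re.compile(r"^Received (blk_\d+)$"),
}
MESSAGE_TYPES = list(TEMPLATES)  # fixed column order for the count vectors


def parse(line):
    """Step 1: return (message_type, identifier), or None if no template matches."""
    for msg_type, pattern in TEMPLATES.items():
        match = pattern.match(line.strip())
        if match:
            return msg_type, match.group(1)
    return None


def count_vectors(lines):
    """Step 2: group messages by identifier and count each message type."""
    counts = defaultdict(lambda: [0] * len(MESSAGE_TYPES))
    for line in lines:
        parsed = parse(line)
        if parsed is not None:
            msg_type, ident = parsed
            counts[ident][MESSAGE_TYPES.index(msg_type)] += 1
    return dict(counts)


def residual_scores(vectors, iterations=200):
    """Step 3 (simplified): squared distance from the first principal axis."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    # Scatter matrix: the covariance matrix up to a constant factor.
    scatter = [[sum(c[i] * c[j] for c in centered) for j in range(d)]
               for i in range(d)]
    # Power iteration converges to the dominant eigenvector of the scatter matrix.
    axis = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        nxt = [sum(scatter[i][j] * axis[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0:
            break
        axis = [x / norm for x in nxt]
    scores = []
    for c in centered:
        proj = sum(c[j] * axis[j] for j in range(d))
        scores.append(sum((c[j] - proj * axis[j]) ** 2 for j in range(d)))
    return scores


log = ["Receiving blk_1", "Receiving blk_2", "Received blk_2", "Receiving blk_1",
       "Received blk_1", "Received blk_1", "Receiving blk_2"]
features = count_vectors(log)
print(features)  # {'blk_1': [2, 2], 'blk_2': [2, 1]}

# Made-up count vectors: normal blocks have matching counts; the last block
# has two "receiving" messages and no "received" message.
sample = [[1, 1], [2, 2], [3, 3], [1, 1], [2, 2], [3, 3], [2, 2], [2, 0]]
scores = residual_scores(sample)
most_anomalous = max(range(len(sample)), key=scores.__getitem__)
print(sample[most_anomalous])  # [2, 0]
```

The vector with the largest residual is the one that breaks the dominant correlation between the two counts, which is the intuition behind ranking [2, 0] as the most abnormal block in this toy data.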

Evaluation setup
• Experiment on Amazon’s EC2 cloud
  • 203 nodes x 48 hours
  • Running standard map-reduce jobs
  • ~24 million lines of console logs
• ~575,000 HDFS blocks
  • 575,000 vectors
  • ~680 distinct ones
• Manually labeled each distinct case
  • Normal/abnormal
  • Tried to learn why it is abnormal
  • For evaluation only

PCA detection results

| # | Anomaly description | Actual | Detected |
|---|---|---|---|
| 1 | Forgot to update namenode for deleted block | 4297 | 4297 |
| 2 | Write block exception then client give up | 3225 | 3225 |
| 3 | Failed at beginning, no block written | 2950 | 2950 |
| 4 | Over-replicate-immediately-deleted | 2809 | 2788 |
| 5 | Received block that does not belong to any file | 1240 | 1228 |
| 6 | Redundant addStoredBlock request received | 953 | 953 |
| 7 | Trying to delete a block, but the block no longer exists on data node | 724 | 650 |
| 8 | Empty packet for block | 476 | 476 |
| 9 | Exception in receiveBlock for block | 89 | 89 |
| 10 | PendingReplicationMonitor timed out | 45 | 45 |
| 11 | Other anomalies | 108 | 107 |
| | Total anomalies | 16916 | 16808 |
| | Normal blocks | 558223 | |

| # | False positive description | False positives |
|---|---|---|
| 1 | Normal background migration | 1397 |
| 2 | Multiple replica (for task / jobdesc files) | 349 |
| | Total | 1746 |

How can we make the results easy for operators to understand?

Step 4: Visualizing results with decision tree
[Figure: decision tree. Each internal node tests the count of one message type (branches such as >=1 vs. 0, or >=3 vs. <=2) and each leaf is OK (0) or ERROR (1). Message types tested, in extraction order:]
• writeBlock # received exception
• # Starting thread to transfer block # to #
• #: Got exception while serving # to #:#
• Unexpected error trying to delete block #\. BlockInfo Not found in volumeMap
• addStoredBlock request received for # on # size # But it does not belong to any file
• # starting thread to transfer block # to #
• #Verification succeeded for #
• Receiving block # src: # dest: #

The methodology is general
• Surveyed a number of software apps
  • Linux kernel, OpenSSH
  • Apache, MySQL, Jetty
  • Cassandra, Nutch
  • Our methods apply to virtually all of them
• Same methodology worked on multiple features / ML algorithms
  • Another feature: “state count vector”
  • Graph visualization
• WIP: Analyzing production logs at Google

Outline (current section: An extension: supporting online detection)

What makes it hard to do online detection?
[Figure: pipeline Parsing → Feature Creation → PCA Detection → Visualization]
• Message count vector feature is based on sequences, NOT individual messages

Online detection: when to make detection?
• Cannot wait for the entire trace
  • Can last arbitrarily long time
• How long do we have to wait?
  • Long enough to keep correlations
  • Wrong cut = false positive
• Difficulties
  • No obvious boundaries
  • Inaccurate message ordering
  • Variations in session duration
[Figure: timeline of blk_1 messages: receiving, receiving, received, received, reading, reading, deleting, deleted]

Frequent patterns help determine session boundaries
• Key insight: most messages/traces are normal
  • Strong patterns
  • “Make common paths fast”
  • Tolerate noise

Two stage detection overview
[Figure: free text logs (200 nodes) → Parsing → Frequent pattern based filtering; dominant cases exit as OK; non-pattern events go to PCA Detection, which separates normal cases (OK) from real anomalies (ERROR)]

Stage 1 - Frequent patterns (1): Frequent event sets
[Figure: loop repeated until all patterns are found: coarse cut by time → find frequent item set → refine time estimation; illustrated on a timeline of blk_1 messages (receiving, receiving, received, reading, received, error, reading, deleting, deleted); remaining events go to PCA Detection]

Stage 1 - Frequent patterns (2): Modeling session duration time
• Cannot assume Gaussian
  • 99.95th percentile estimation is off by half
  • 45% more false alarms
• Mixture distribution
  • Power-law tail + histogram head
[Figure: two plots, Count vs. Duration and Pr(X>=x) vs. Duration]

Frequent pattern matching filters most of the normal events
[Figure: the two stage pipeline annotated with event percentages: 100% of events from the free text logs (200 nodes) enter Parsing*; 86% are dominant cases filtered out as OK; 14% are non-pattern events sent to PCA Detection, of which 13.97% are normal cases (OK) and 0.03% are real anomalies (ERROR)]

Frequent patterns in HDFS

| Frequent pattern | 99.95th percentile duration | % of messages |
|---|---|---|
| Allocate, begin write | 13 sec | 20.3% |
| Done write, update metadata | 8 sec | 44.6% |
| Delete | - | 12.5% |
| Serving block | - | 3.8% |
| Read exception | - | 3.2% |
| Verify block | - | 1.1% |
| Total | | 85.6% |

(Total events ~20 million)
• Covers most messages
• Short durations

Detection latency
• Detection latency is dominated by the wait time
[Figure: detection latency for four event classes: frequent pattern (matched), single event pattern, frequent pattern (timed out), non pattern events]

Detection accuracy

| | True Positives | False Positives | False Negatives | Precision | Recall |
|---|---|---|---|---|---|
| Online | 16,916 | 2,748 | 0 | 86.0% | 100.0% |
| Offline | 16,808 | 1,746 | 108 | 90.6% | 99.3% |

(Total trace = 575,319)
• Ambiguity on “abnormal”
  • Manual labels: “eventually normal”
  • > 600 FPs in online detection caused by very long latency
  • E.g. a write session takes >500 sec to complete (99.99th percentile is 20 sec)

Outline (current section: Related work and future directions)

Related work: system monitoring and problem diagnosis
[Figure: related work arranged along two axes, heavier instrumentation and finer granularity data. Labels and systems shown, in extraction order:]
• (Passive) data collection: Syslog, SNMP, Chukwa [Boulon08]
• Configurable tracing frameworks; source/binary: Dtrace, Xtrace [Fonseca07], VM-based tracing [King05], aspect-oriented programming, assertions
• Numerical traces; path-based problem detection: SystemHistory [Cohen05], Fingerprints [Bodik10], [Lakhina04], Pinpoint [Chen04], Statistical debugging [Zheng06], AjaxScope [Kiciman07]
• Replay-based: Deterministic replay [Altekar09]
• Event as time series: [Hellerstein02], [Lim08], [Yamanishi05]
• Alternative logging: PeerPressure [Wang04], Heat-ray [Dunagan09], [Kandula09]
• Runtime predicates: D3S [Liu08]

Related work: log parsing
• Rule based and scripts
  • Microsoft log parser, splunk, …
• Frequent pattern based
  • [Vaarandi04] R. Vaarandi. A breadth-first algorithm for mining frequent patterns from event logs. In Proceedings of INTELLCOMM, 2004.
• Hierarchical
  • [Fu09] Q. Fu, et al. Execution Anomaly Detection in Distributed Systems through Unstructured Log Analysis. In Proc. of ICDM 2009
  • [Makanju09] A. A. Makanju, et al. Clustering event logs using iterative partitioning. In Proc. of KDD ’09
  • [Fisher08] K. Fisher, et al. From dirt to shovels: fully automatic tool generation from ad hoc data. In Proc. of POPL ’08
• Key benefit of using source code
  • Handles rare messages, which are likely to be more useful in problem detection

Related work: modeling logs
• As free texts
  • [Stearley04] J. Stearley. Towards informatic analysis of syslogs. In Proc. of CLUSTER, 2004.
• Visualize global trends
  • [CretuCioCarlie08] G. Cretu-Ciocarlie, et al. Hunting for problems with Artemis. In Proc. of WASL 2008
  • [Tricaud08] S. Tricaud. Picviz: Finding a Needle in a Haystack. In Proc. of WASL 2008
• A single event stream (time series)
  • [Ma01] S. Ma, et al. Mining partially periodic event patterns with unknown periods. In Proceedings of the 17th International Conference on Data Engineering, 2001.
  • [Hellerstein02] J. Hellerstein, et al. Discovering actionable patterns in event data, 2002.
  • [Yamanishi05] K. Yamanishi, et al. Dynamic syslog mining for network failure monitoring. In Proceedings of ACM KDD, 2005.
• Multiple streams / state transitions
  • [Tan08] J. Tan, et al. SALSA: Analyzing Logs as State Machines. In Proc. of WASL 2008
  • [Fu09] Q. Fu, et al. Execution Anomaly Detection in Distributed Systems through Unstructured Log Analysis. In Proc. of ICDM 2009

Related work: techniques that inspired this project
• Path based analysis
  • [Chen04] M. Y. Chen, et al. Path-based failure and evolution management. In Proceedings of NSDI, 2004.
  • [Fonseca07] R. Fonseca, et al. Xtrace: A pervasive network tracing framework. In NSDI, 2007.
• Anomaly detection and kernel methods
  • [Gartner03] T. Gartner. A survey of kernels for structured data. SIGKDD Explorations, 2003.
  • [Twining03] C. J. Twining, et al. The use of kernel principal component analysis to model data distributions. Pattern Recognition, 36(1):217–227, January 2003.
• Vector space information retrieval
  • [Papineni01] K. Papineni. Why inverse document frequency? In NAACL, 2001.
  • [Salton87] G. Salton, et al. Term weighting approaches in automatic text retrieval. Cornell Technical report, 1987.
• Using source code as alternative info
  • [Tan07] L. Tan, et al. /*icomment: bugs or bad comments?*/. In Proceedings of ACM SOSP, 2007.

Future directions: console log mining
• Distributed log stream processing
  • Handle large scale cluster + partial failures
• Integration with existing monitoring systems
  • Allows existing detection algorithm to apply directly
• Logs from the entire software stack
• Handling logs across software versions
• Allowing feedback from operators
• Providing suggestions on improving logging practice

Beyond console logs: textual information in SW dev-operation
[Figure: sources of textual information: Documentation, Comments, Bug tracking, Version control logs, Unit testing, Operation docs, Scripts, Configurations, Problem tickets, Code, Paper, Makefiles, Versioning diffs, Continuous integration, Profiling, Monitoring data, Platform counters, Console logs, Core dumps]

Summary
[Figure: pipeline Parsing → Feature Creation → Machine Learning → Visualization]
• A general methodology for processing console logs automatically (SOSP ’09)
• A novel method for online detection (ICDM ’09)
• Validation on real systems
http://www.cs.berkeley.edu/~xuw/
Wei Xu <xuw@cs.berkeley.edu>

Backup slides

Rare messages are important
Receiving block blk_100 src: … dest:...
Receiving block blk_200 src: … dest:...
Receiving block blk_100 src: …dest:…
Received block blk_100 of size 49486737 from …
Received block blk_100 of size 49486737 from …
Pending Replication Monitor timeout block blk_200

Compare to natural language (semantics) based approach
• We use console log solely as a trace of program execution
• Semantic meanings sometimes misleading
  • Does “Error” really mean it?
• Missing link between the message and the actual program execution
• Hard to completely eliminate manual search

Compare to text based parsing
• Existing work on inferring structure of log messages without source code
  • Patterns in strings
  • Word weighting
  • Learning “grammar” of logs
• Pros: Don’t need source code analysis
• Cons: Unable to handle rare messages
  • 950 possible, only <100 appeared in our log
  • Per-operation features require highly accurate parsing

Better logging practices
• We do not require developers to change their logging habits
  • Use logging
• Traces vs. error reporting
• Distinguish your messages from others’
  • printf("%d", i);
• Use a logging framework
  • Levels, timestamps etc.
• Use correct levels (think in a system context)
  • Unnecessary WARNINGs and ERRORs

Ordering of events
• Message count vectors do not capture any ordering/timing information
• Cannot guarantee the global ordering on distributed systems
  – Needs significant change to the logging infrastructure

Too many alarms: what can operators do with them?
• Operators see clusters of alarms instead of individual ones
  • Easy to cluster similar alarms
  • Most of the alarms are the same
• Better understanding of problems
  • Deterministic bugs in the systems
    • Provides more informative bug reports
    • Either fix the bug or the log
  • Due to transient failures
    • Clustered around single nodes/subnets/time
• Better understanding of normal patterns

Case studies
• Surveyed a number of software apps
  • Linux kernel, OpenSSH
  • Apache, MySQL, Jetty
  • Hadoop, Cassandra, Nutch
  • Our methods apply to virtually all of them

Log parsing process
[Figure]

Step 1: Log parsing: Challenges in object oriented languages
Logging statement:
  LOG.info("starting:" + transact);
Example messages:
  starting: xact 325 is PREPARING
  starting: xact 325 is STARTING at Node1:1000
Templates matching "starting: xact 325 is PREPARING":
  starting: (.*) [transact][Transaction] [Participant.java:345]
  xact (.*) is (.*) [tid, state] [int, String]
Templates matching "starting: xact 325 is STARTING at Node1:1000":
  starting: (.*) [transact][Transaction] [Participant.java:345]
  xact (.*) is (.*) at (.*) [tid, state, node] [int, String, Node]

Intuition behind PCA
[Figure]

Another decision tree
[Figure]

Existing work on textual log analysis
• Frequent item set mining
  – R. Vaarandi. SEC - a lightweight event correlation tool. IEEE Workshop on IP Operations and Management, 2002.
• Temporal properties analysis
  – Y. Liang, A. Sivasubramaniam, and J. Moreira. Filtering failure logs for a bluegene/L prototype. In Proceedings of IEEE DSN, 2005.
  – C. Lim, et al. A log mining approach to failure analysis of enterprise telephony systems. In Proceedings of IEEE DSN, 2008.
• Statistical modeling of logs
  – R. K. Sahoo, et al. Critical event prediction for proactive management in large-scale computer clusters. In Proceedings of ACM KDD, 2003.

Real world logging tools
• Generation
  • Log4j, Jakarta commons logging, logback, SLF4J, ...
  • Standard web servers log format (W3C, Apache)
• Collection - syslog and beyond
  • syslog-ng (better availability)
  • nsyslog, socklog… (better security)
• Analysis
  – Query languages for logs
    • Microsoft log parser
    • SQL and continuous query / Complex events processing / GUI – Talend Open Studio
  – Manually specified rules
    • A. Oliner and J. Stearley. What supercomputers say: A study of five system logs. In Proceedings of IEEE DSN, 2007.
    • logsurfer, Swatch, OSSEC – an open source host intrusion detection system
  – Searching the logs
    • Splunk
  – Log visualization
    • Syslog watcher …

[1] R. S. Barga, J. Goldstein, M. Ali, and M. Hong. Consistent streaming through time: A vision for event stream processing. In Proceedings of CIDR, volume 2007, pages 363–374, 2007.
[2] D. Borthakur. The hadoop distributed file system: Architecture and design. Hadoop Project Website, 2007.
[3] R. Dunia and S. J. Qin. Multi-dimensional fault diagnosis using a subspace approach. In Proceedings of American Control Conference, 1997.
[4] R. Feldman and J. Sanger. The Text Mining Handbook: Advanced Approaches in Analyzing Unstructured Data. Cambridge Univ. Press, 12 2006.
[5] R. Fonseca, G. Porter, R. H. Katz, S. Shenker, and I. Stoica. Xtrace: A pervasive network tracing framework. In NSDI, 2007.
[6] T. Gärtner. A survey of kernels for structured data. SIGKDD Explor. Newsl., 5(1):49–58, 2003.
[7] T. Gärtner, J. W. Lloyd, and P. A. Flach. Kernels and distances for structured data. Mach. Learn., 57(3):205–232, 2004.
[8] M. Gilfix and A. L. Couch. Peep (the network auralizer): Monitoring your network with sound. In LISA ’00: Proceedings of the 14th USENIX conference on System administration, pages 109–118, Berkeley, CA, USA, 2000. USENIX Association.
[9] L. Girardin and D. Brodbeck. A visual approach for monitoring logs. In LISA ’98: Proceedings of the 12th USENIX conference on System administration, pages 299–308, Berkeley, CA, USA, 1998. USENIX Association.
[10] C. Gulcu. Short introduction to log4j, March 2002. http://logging.apache.org/log4j.
[11] J. Hellerstein, S. Ma, and C. Perng. Discovering actionable patterns in event data, 2002.
[12] J. E. Jackson and G. S. Mudholkar. Control procedures for residuals associated with principal component analysis. Technometrics, 21(3):341–349, 1979.
[13] A. Lakhina, M. Crovella, and C. Diot. Diagnosing network-wide traffic anomalies. In SIGCOMM ’04: Proceedings of the 2004 conference on Applications, technologies, architectures, and protocols for computer communications, pages 219–230, New York, NY, USA, 2004. ACM.
[14] A. Lakhina, K. Papagiannaki, M. Crovella, C. Diot, E. D. Kolaczyk, and N. Taft. Structural analysis of network traffic flows. In SIGMETRICS ’04/Performance ’04: Proceedings of the joint international conference on Measurement and modeling of computer systems, pages 61–72, New York, NY, USA, 2004. ACM.
[15] Y. Liang, A. Sivasubramaniam, and J. Moreira. Filtering failure logs for a bluegene/L prototype. In DSN ’05: Proceedings of the 2005 International Conference on Dependable Systems and Networks, pages 476–485, Washington, DC, USA, 2005. IEEE Computer Society.
[16] C. Lim, N. Singh, and S. Yajnik. A log mining approach to failure analysis of enterprise telephony systems. In Proceedings of International conference on dependable systems and networks, June 2008.
[17] S. Ma and J. L. Hellerstein. Mining partially periodic event patterns with unknown periods. In Proceedings of the 17th International Conference on Data Engineering, pages 205–214, Washington, DC, USA, 2001. IEEE Computer Society.
[18] I. Mierswa, M. Wurst, R. Klinkenberg, M. Scholz, and T. Euler. Yale: Rapid prototyping for complex data mining tasks. In L. Ungar, M. Craven, D. Gunopulos, and T. Eliassi-Rad, editors, KDD ’06: Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 935–940, New York, NY, USA, August 2006. ACM.
[19] A. Oliner and J. Stearley. What supercomputers say: A study of five system logs. In DSN ’07: Proceedings of the 37th Annual IEEE/IFIP International Conference on Dependable Systems and Networks, pages 575–584, Washington, DC, USA, 2007. IEEE Computer Society.
[20] J. E. Prewett. Analyzing cluster log files using logsurfer. In Proceedings of the 4th Annual Conference on Linux Clusters, 2003.
[21] R. K. Sahoo, A. J. Oliner, I. Rish, M. Gupta, J. E. Moreira, S. Ma, R. Vilalta, and A. Sivasubramaniam. Critical event prediction for proactive management in large-scale computer clusters. In KDD ’03: Proceedings of the ninth ACM SIGKDD international conference on Knowledge discovery and data mining, pages 426–435, New York, NY, USA, 2003. ACM.
[22] J. Stearley. Towards informatic analysis of syslogs. In CLUSTER ’04: Proceedings of the 2004 IEEE International Conference on Cluster Computing, pages 309–318, Washington, DC, USA, 2004. IEEE Computer Society.
[23] J. Stearley and A. J. Oliner. Bad words: Finding faults in spirit’s syslogs. In CCGRID ’08: Proceedings of the 2008 Eighth IEEE International Symposium on Cluster Computing and the Grid (CCGRID), pages 765–770, Washington, DC, USA, 2008. IEEE Computer Society.
[24] T. Takada and H. Koike. Tudumi: information visualization system for monitoring and auditing computer logs. pages 570–576, 2002.
[25] C. J. Twining and C. J. Taylor. The use of kernel principal component analysis to model data distributions. Pattern Recognition, 36(1):217–227, January 2003.
[26] R. Vaarandi. Sec - a lightweight event correlation tool. IP Operations and Management, 2002 IEEE Workshop on, pages 111–115, 2002.
[27] R. Vaarandi. A data clustering algorithm for mining patterns from event logs. IP Operations and Management, 2003. (IPOM 2003). 3rd IEEE Workshop on, pages 119–126, Oct. 2003.
[28] R. Vaarandi. A breadth-first algorithm for mining frequent patterns from event logs. In F. A. Aagesen, C. Anutariya, and V. Wuwongse, editors, INTELLCOMM, volume 3283 of Lecture Notes in Computer Science, pages 293–308. Springer, 2004.
[29] I. H. Witten and E. Frank. Data mining: practical machine learning tools and techniques with Java implementations. Morgan Kaufmann Publishers Inc., 2000.
[30] K. Yamanishi and Y. Maruyama. Dynamic syslog mining for network failure monitoring. In KDD ’05: Proceedings of the eleventh ACM SIGKDD international conference on Knowledge discovery in data mining, pages 499–508, New York, NY, USA, 2005. ACM.
[31] P. Bodik, G. Friedman, L. Biewald, H. Levine, G. Candea, K. Patel, G. Tolle, J. Hui, A. Fox, M. I. Jordan, and D. Patterson. Combining visualization and statistical analysis to improve operator confidence and efficiency for failure detection and localization. volume 0, pages 89–100, Los Alamitos, CA, USA, 2005. IEEE Computer Society.
[32] M. Y. Chen, A. Accardi, E. Kiciman, J. Lloyd, D. Patterson, A. Fox, and E. Brewer. Path-based failure and evolution management. In NSDI ’04: Proceedings of the 1st conference on Symposium on Networked Systems Design and Implementation, pages 23–23, Berkeley, CA, USA, 2004. USENIX Association.
[33] I. Cohen, S. Zhang, M. Goldszmidt, J. Symons, T. Kelly, and A. Fox. Capturing, indexing, clustering, and retrieving system history. SIGOPS Oper. Syst. Rev., 39(5):105–118, 2005.
[34] E. Kiciman and B. Livshits. Ajaxscope: a platform for remotely monitoring the client-side behavior of web 2.0 applications. In SOSP ’07: Proceedings of twenty-first ACM SIGOPS symposium on Operating systems principles, pages 17–30, New York, NY, USA, 2007. ACM.
[35] L. Tan, D. Yuan, G. Krishna, and Y. Zhou. /*icomment: bugs or bad comments?*/. In SOSP ’07: Proceedings of twenty-first ACM SIGOPS symposium on Operating systems principles, pages 145–158, New York, NY, USA, 2007. ACM.
[36] K. Papineni. Why inverse document frequency? In NAACL ’01: Second meeting of the North American Chapter of the Association for Computational Linguistics on Language technologies 2001, pages 1–8, Morristown, NJ, USA, 2001. Association for Computational Linguistics.
[37] G. Salton and C. Buckley. Term weighting approaches in automatic text retrieval. Technical report, Ithaca, NY, USA, 1987.
[Dunagan09] J. Dunagan, et al. Heat-ray: Combating Identity Snowball Attacks using Machine Learning, Combinatorial Optimization and Attack Graphs. In Proc. of SOSP 2009
[Kandula09] Srikanth Kandula (Microsoft Research), Ratul Mahajan (Microsoft Research), Patrick Verkaik (UC San Diego), Sharad Agarwal (Microsoft Research), Jitu Padhye (Microsoft Research), Paramvir Bahl (Microsoft Research). Detailed Diagnosis in Enterprise Networks. In Proc. of SigComm 2009
[Kiciman07] Emre Kiciman and Benjamin Livshits. AjaxScope: A Platform for Remotely Monitoring the Client-side Behavior of Web 2.0 Applications. In Proc. of SOSP 2007
[King05] Samuel T. King, George W. Dunlap, Peter M. Chen. "Debugging operating systems with time-traveling virtual machines", Proceedings of the 2005 Annual USENIX Technical Conference, April 2005 (presentation by Sam King). Best Paper Award.